Online Databases

The online databases are:

onldb.starp.bnl.gov:3501 - contains 'RunLog', 'Shift Sign-up' and 'Online' databases

onldb.starp.bnl.gov:3502 - contains 'Conditions_<subsysname>' databases (online daemons)

onldb.starp.bnl.gov:3503 - contains 'RunLog_daq' database (RTS system)

db01.star.bnl.gov:3316/trigger is a special buffer database used for FileCatalog migration

Replication slaves :

onldb2.starp.bnl.gov:3501 (slave of onldb.starp.bnl.gov:3501)

onldb2.starp.bnl.gov:3502 (slave of onldb.starp.bnl.gov:3502)

onldb2.starp.bnl.gov:3503 (slave of onldb.starp.bnl.gov:3503)
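Whether these slaves are actually replicating could be checked along the following lines. This is only a sketch: it assumes the servers answer the classic `SHOW SLAVE STATUS\G` statement (newer MySQL releases rename it and its fields) and that credentials are already configured for the `mysql` client. The function name `replication_ok` is made up for illustration.

```shell
#!/bin/sh
# Sketch: read the text of "SHOW SLAVE STATUS\G" on stdin and succeed only
# if both replication threads report "Yes".
# replication_ok is a hypothetical helper, not part of any STAR tooling.
replication_ok() {
    awk -F': *' '
        /Slave_IO_Running:/  { io  = $2 }
        /Slave_SQL_Running:/ { sql = $2 }
        END {
            gsub(/[ \t\r]+$/, "", io)
            gsub(/[ \t\r]+$/, "", sql)
            exit !(io == "Yes" && sql == "Yes")
        }
    '
}

# Possible usage (assumes client credentials are set up, e.g. in ~/.my.cnf):
#   for port in 3501 3502 3503; do
#       mysql -h onldb2.starp.bnl.gov -P "$port" -e 'SHOW SLAVE STATUS\G' \
#           | replication_ok || echo "replication problem on port $port"
#   done
```

Keeping the parsing separate from the `mysql` call means the check can be tried on saved output before pointing it at a live server.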

How-To Online DB Run Preparation

This page contains basic steps only; please see the subpages for details on Run preparations.

I. The following paragraphs should be examined *before* the data taking starts:

--- onldb.starp.bnl.gov ---

1. DB: make sure that databases at ports 3501, 3502 and 3503 are running happily. It is useful to check that onldb2.starp, onl10.starp and onl11.starp have replication on and running.

2. COLLECTORS AND RUNLOG DAEMON: onldb.starp contains "old" versions of metadata collectors and RunLogDB daemon. Collector daemons need to be recompiled and started before the "migration" step. One should verify with "caGet <subsystem>.list" command that all EPICS variables are being transmitted and received without problems. Make sure no channels produce "cannot be connected" or "timeout" or "cannot contact IOC" warnings. If they do, please contact Slow Controls expert *before* enabling such service. Also, please keep in mind that RunLogDB daemon will process runs only if all collectors are started and collect meaningful data.

3. FASTOFFLINE: To allow FastOffline processing, please enable the cron record which runs the migrateDaqFileTags.new.pl script. Inspect that script and make sure that the $minRun variable points to some recently taken run; otherwise the script will consume extra resources on the online db.

4. MONITORING: As soon as collector daemons are started, database monitoring scripts should be enabled. Please see crontabs under 'stardb' and 'staronl' accounts for details. It is recommended to verify that nfs-exported directory on dean is write-accessible.

Typical crontab for 'stardb' account would be like:

*/3 * * * * /home/stardb/check_senders.sh > /dev/null

*/3 * * * * /home/stardb/check_cdev_beam.sh > /dev/null

*/5 * * * * /home/stardb/check_rich_scaler_log.sh > /dev/null

*/5 * * * * /home/stardb/check_daemon_logs.sh > /dev/null

*/15 * * * * /home/stardb/check_missing_sc_data.sh > /dev/null

*/2 * * * * /home/stardb/check_stale_caget.sh > /dev/null

(don't forget to set email address to your own!)

Typical crontab for 'staronl' account would look like:

*/10 * * * * /home/staronl/check_update_db.sh > /dev/null

*/10 * * * * /home/staronl/check_qa_migration.sh > /dev/null

--- onl11.starp.bnl.gov ---

1. MQ: make sure that qpid service is running. This service processes MQ requests for "new" collectors and various signals (like "physics on").

2. DB: make sure that mysql database server at port 3606 is running. This database stores data for mq-based collectors ("new").

3. SERVICE DAEMONS: make sure that mq2memcached (generic service), mq2memcached-rt (signals processing) and mq2db (storage) services are running.

4. COLLECTORS: grab configuration files from cvs, and start cdev2mq and ds2mq collectors. Same common sense rule applies: please check that CDEV and EPICS do serve data on those channels first. Also, collectors may be started at onl10.starp.bnl.gov if onl11.starp is busy with something (unexpected IO stress tests, user analysis jobs, L0 monitoring scripts, etc).

--- onl13.starp.bnl.gov ---

1. MIGRATION: check crontab for 'stardb' user. Make sure that "old" and "new" collector daemons are really running before moving further. Verify that migration macros experience no problems by trying some simple migration script. If it breaks, saying that a library is not found or something similar, find the latest stable (old) version of the STAR library and set it in the .cshrc config file. If tests succeed, enable cron jobs for all macros, and verify that logs contain meaningful output (no errors, warnings etc).

--- dean.star.bnl.gov ---

1. PLOTS: Check dbPlots configuration, re-create it as a copy with incremented Run number if necessary. Subsystem experts tend to check those plots often, so it is better to have dbPlots and mq collectors up and running a little earlier than the rest of the services.

2. MONITORING:

- Replication monitor aka Mon (replication should be on for all online servers);

- "old" collection daemon monitor (should be all green, some yellow possible);

- mq-based collectors, by checking "MQ Collectors" tab at Online Control Center (should be all green);

- check IOC monitor, to make sure that no EPICS channels are stuck.

- check physics on/off monitor after the fill, to make sure that CDEV transmits data correctly. If there is no data, then cdev2mq-rt service is not running. If data does not look realistic (shifted/offset timestamps) - please contact CAD, or at least let Jamie Dunlop know about it.

- check dbPlots to see that all collectors are really serving data and there are no delays.

3. RUNLOG - now RunLog browser should display recent runs.

--- db03.star.bnl.gov ---

1. TRIGGER COUNTS: check the crontab for root; it should have the following records:

40 5 * * * /root/online_db/cron/fillDaqFileTag.sh

0,10,15,20,25,30,35,40,45,50,55 * * * * /root/online_db/sum_insTrgCnt >> /root/online_db/trgCnt.log

First script copies daqFileTag table from online db to local 'trigger' database. Second script calculates trigger counts for FileCatalog (Lidia). Please make sure that both migration and trigger counting work before you enable it in the crontab. There is no monitoring to enable for this service.

--- dbbak.starp.bnl.gov ---

1. ONLINE BACKUPS: make sure that mysql-zrm is taking backups from onl10.starp.bnl.gov for all three ports. It should take raw backups daily and weekly, and logical backups once per month or so. It is generally recommended to periodically store weekly / monthly backups to HPSS, for long-term archival using /star/data07/dbbackup directory as temporary buffer space.

II. The following paragraphs should be examined *after* the data taking stops:

1. DB MERGE: Online databases from onldb.starp (all three ports) and onl11.starp (port 3606) should be merged into one. Make sure you keep mysql privilege tables from onldb.starp:3501. Do not overwrite it with 3502 or 3503 data. Add privileges allowing read-only access to mq_collector_<bla> tables from onl11.starp:3606 db.

2. DB ARCHIVE PART ONE: copy merged database to dbbak.starp.bnl.gov, and start it with incremented port number. Compress it with mysqlpack, if needed. Don't forget to add 'read-only' option to mysql config. It is generally recommended to put an extra copy to NAS archive, for fast restore if primary drive crashes.

3. DB ARCHIVE PART TWO: archive merged database, and split resulting .tgz file into chunks of ~4-5 GB each. Ship those chunks to HPSS for long-term archival using /star/data07/dbbackup as temporary(!) buffer storage space.

4. STOP MIGRATION macros at onl13.starp.bnl.gov - there is no need to run that during summer shutdown period.

5. STOP trigger count calculations at db03.star.bnl.gov for the reason above.
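The "archive and split into chunks" step from DB ARCHIVE PART TWO could look roughly like the sketch below. It assumes a `split` that accepts size suffixes such as `4G`; the function names and the output prefix are hypothetical, and the actual transfer to HPSS is not shown.

```shell
#!/bin/sh
# Sketch: tar+gzip a database directory and cut the stream into fixed-size
# chunks (<prefix>.aa, <prefix>.ab, ...), so that no single huge .tgz file is
# ever written. Function names are made up for illustration.
archive_in_chunks() {
    src_dir=$1
    out_prefix=$2
    chunk_size=${3:-4G}
    tar -czf - -C "$(dirname "$src_dir")" "$(basename "$src_dir")" \
        | split -b "$chunk_size" - "$out_prefix."
}

# Reassemble the chunks (shell globbing returns them in suffix order)
# and unpack them into an existing destination directory.
restore_from_chunks() {
    out_prefix=$1
    dest=$2
    cat "$out_prefix".* | tar -xzf - -C "$dest"
}

# Possible usage (directory names below are placeholders):
#   archive_in_chunks /path/to/merged_db /star/data07/dbbackup/merged_db.tgz 4G
```

It is worth running the restore once on a scratch area before the buffer copy in /star/data07/dbbackup is deleted.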

HOW-TO: access CDEV data (copy of CAD docs)

As of Feb 18th 2011, previously existing content of this page is removed.

If you need to know how to access RHIC or STAR data available through CDEV interface, please read official CDEV documentation here : http://www.cadops.bnl.gov/Controls/doc/usingCdev/remoteAccessCdevData.html

Documentation for CDEV access codes used in Online Data Collector system will be available soon in appropriate section of STAR database documentation.

-D.A.

HOW-TO: compile RunLogDb daemon

- Login to root@onldb.starp.bnl.gov

- Copy latest "/online/production/database/Run_[N]/" directory contents to "/online/production/database/Run_[N+1]/"

- Grep every file and replace "Run_[N]" occurrences with "Run_[N+1]" to make sure daemons pick up correct log directories

- $> cd /online/production/database/Run_9/dbSenders/online/Conditions/run

- $> export DAEMON=RunLogDb

- $> make

- /online/production/database/Run_9/dbSenders/bin/RunLogDbDaemon binary should be compiled at this stage
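The "grep and replace" step above can be sketched as follows. It assumes GNU grep and GNU sed (`sed -i` edits files in place), and `bump_run_refs` is a hypothetical helper name. Binary files are skipped so that compiled daemons are not corrupted, and "Run_9" is not matched inside "Run_90".

```shell
#!/bin/sh
# Sketch: replace every "Run_<old>" with "Run_<new>" in the text files below
# a directory. Assumes GNU grep/sed; bump_run_refs is a made-up name.
bump_run_refs() {
    dir=$1
    old=$2
    new=$3
    # -I skips binary files; the trailing group keeps Run_9 from matching Run_90.
    grep -rlIE "Run_${old}([^0-9]|\$)" "$dir" | while IFS= read -r file; do
        sed -i -E "s/Run_${old}([^0-9]|\$)/Run_${new}\\1/g" "$file"
    done
}

# Possible usage, run on the freshly copied directory only:
#   bump_run_refs /online/production/database/Run_10 9 10
```

Running `grep -rn "Run_9" <dir>` afterwards is a cheap way to confirm that nothing was missed.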

TBC

HOW-TO: create a new Run RunLog browser instance and retire previous RunLog

1. New RunLog browser:

- Copy the contents of the "/var/www/html/RunLogRunX" to "/var/www/html/RunLogRunY", where X is the previous Run ID, and Y is the current Run ID

- Change symlink "/var/www/html/RunLog" pointing to "/var/www/html/RunLogRunX" to "/var/www/html/RunLogRunY"

- TBC

2. Retire Previous RunLog browser:

- Grep source files and replace all "onldb.starp : 3501/3503" with "dbbak.starp:340X", where X is the id of the backed-up online database.

- TrgFiles.php and ScaFiles.php contain reference "/RunLog/", which should be changed to "/RunLogRunX/", where X is the run ID

- TBC

3. Update /admin/navigator.php immediately after /RunLog/ rotation! New run range is required.
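The copy-and-relink part of step 1 might be scripted roughly like this. It is a sketch under the assumption that the browser directories are named RunLogRun<ID> and that "RunLog" is a symlink next to them; `rotate_runlog` is a hypothetical name, and the remaining manual edits (database hosts, TrgFiles.php, ScaFiles.php, navigator.php) are not covered.

```shell
#!/bin/sh
# Sketch: clone the previous RunLog browser directory and repoint the
# "RunLog" symlink at the new copy. rotate_runlog is a made-up helper.
rotate_runlog() {
    webroot=$1
    prev=$2
    cur=$3
    # Refuse to overwrite an existing instance for the current Run.
    [ -e "$webroot/RunLogRun$cur" ] && return 1
    cp -a "$webroot/RunLogRun$prev" "$webroot/RunLogRun$cur" || return 1
    # -n replaces the symlink itself instead of descending into its target.
    ln -sfn "$webroot/RunLogRun$cur" "$webroot/RunLog"
}

# Possible usage (Run IDs are examples only):
#   rotate_runlog /var/www/html 23 24
```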

HOW-TO: enable Online to Offline migration

Migration macros reside on stardb@onllinux6.starp.bnl.gov (the code was later moved to stardb@onl13.starp.bnl.gov).

$> cd dbcron/macros-new/

(you should see no StRoot/StDbLib here, please don't check it out from CVS either - we will use precompiled libs)

First, one should check that Load Balancer config env. variable is NOT set :

$> printenv|grep DB

DB_SERVER_LOCAL_CONFIG=

(if it says =/afs/... .xml, then it should be reset to "" in .cshrc and .login scripts)

Second, let's check that we use stable libraries (newest) :

$> printenv | grep STAR

...

STAR_LEVEL=SL08e

STAR_VERSION=SL08e

...

(SL08e is valid for 2009, NOTE: no DEV here, we don't want to be affected by changed or broken DEV libraries)
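The two environment checks above can be folded into one small pre-flight function, sketched below. It is written for sh/bash, while the stardb account uses tcsh init scripts, so it would have to be run as a separate script. `check_migration_env` is a hypothetical name, and treating any STAR_LEVEL containing "DEV" as unsafe is an assumption.

```shell
#!/bin/sh
# Sketch: fail early if the Load Balancer config is set or a DEV library
# is selected. check_migration_env is a made-up helper name.
check_migration_env() {
    if [ -n "${DB_SERVER_LOCAL_CONFIG:-}" ]; then
        echo "DB_SERVER_LOCAL_CONFIG is set to '$DB_SERVER_LOCAL_CONFIG'; it should be empty" >&2
        return 1
    fi
    case "${STAR_LEVEL:-}" in
        "")
            echo "STAR_LEVEL is not set" >&2
            return 1 ;;
        *DEV*|*dev*)
            echo "STAR_LEVEL=$STAR_LEVEL looks like a DEV library" >&2
            return 1 ;;
    esac
    echo "environment OK: STAR_LEVEL=$STAR_LEVEL"
}

# Possible usage before a test run of a macro:
#   check_migration_env && root4star -b -q Fill_Magnet.C
```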

OK, initial settings look good, let's try to load Fill_Magnet.C macro (easiest to see if it's working or not) :

$> root4star -b -q Fill_Magnet.C

You should see some harsh words from Load Balancer, that's exactly what we need - LB should be disabled for our macros to work. Also, there should not be any segmentation violations. Initial macro run will take some time to process all runs known to date (see RunLog browser for run numbers).

Let's check if we see the entries in database:

$> mysql -h robinson.star.bnl.gov -e "use RunLog_onl; select count(*) from starMagOnl where entryTime > '2009-01-01 00:00:00' " ;

(entryTime should be set to current date)

+----------+

| count(*) |

+----------+

| 1589 |

+----------+

Now, check the run numbers and magnet current with :

$> mysql -h robinson.star.bnl.gov -e "use RunLog_onl; select * from starMagOnl where entryTime > '2009-01-01 00:00:00' order by entryTime desc limit 5" ;

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

| dataID | entryTime | nodeID | elementID | beginTime | flavor | numRows | schemaID | deactive | runNumber | time | current |

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

| 66868 | 2009-02-16 10:08:13 | 10 | 0 | 2009-02-15 20:08:00 | ofl | 1 | 1 | 0 | 10046008 | 1234743486 | -4511.1000980000 |

| 66867 | 2009-02-16 10:08:13 | 10 | 0 | 2009-02-15 20:06:26 | ofl | 1 | 1 | 0 | 10046007 | 1234743486 | -4511.1000980000 |

| 66866 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 20:02:42 | ofl | 1 | 1 | 0 | 10046006 | 1234743486 | -4511.1000980000 |

| 66865 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 20:01:39 | ofl | 1 | 1 | 0 | 10046005 | 1234743486 | -4511.1000980000 |

| 66864 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 19:58:20 | ofl | 1 | 1 | 0 | 10046004 | 1234743486 | -4511.1000980000 |

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

If you see that, you are OK to start cron jobs (see "crontab -l") !

HOW-TO: online databases, scripts and daemons

Online db enclave includes :

primary databases: onldb.starp.bnl.gov, ports : 3501|3502|3503

repl.slaves/hot backup: onldb2.starp.bnl.gov, ports : 3501|3502|3503

read-only online slaves: mq01.starp.bnl.gov, mq02.starp.bnl.gov, ports : 3501|3502|3503

trigger database: db01.star.bnl.gov, port 3316, database: trigger

Monitoring:

http://online.star.bnl.gov/Mon/

(scroll down to see online databases. db01 is monitored, it is in offline slave group)

Tasks:

1. Slow Control data collector daemons

$> ssh stardb@onldb.starp.bnl.gov;

$> cd /online/production/database/Run_11/dbSenders;

./bin/ - contains scripts for start/stop daemons

./online/Conditions/ - contains source code for daemons (e.g. ./online/Conditions/run is RunLogDb)

See crontab for monitoring scripts (protected by lockfiles)

Monitoring page :

http://online.star.bnl.gov/admin/daemons/

2. Online to Online migration

- RunLogDb daemon performs online->online migration. See previous paragraph for details (it is one of the collector daemons, because it shares the source code and build system). Monitoring: same page as for data collectors daemons;

- migrateDaqFileTags.pl perl script moves data from onldb.starp:3503/RunLog_daq/daqFileTag to onldb.starp:3501/RunLog/daqFileTag table (cron-based). This script is essential for OfflineQA, so RunLog/daqFileTag table needs to be checked to ensure proper OfflineQA status.

3. RunLog fix script

$> ssh root@db01.star.bnl.gov;

$> cd online_db;

sum_insTrgCnt is the binary to perform various activities per recorded run, and it is run as cron script (see crontab -l).

4. Trigger data migration

$> ssh root@db01.star.bnl.gov

/root/online_db/cron/fillDaqFileTag.sh <- cron script to perform copy from online trigger database to db01

BACKUP FOR TRIGGER CODE:

1. alpha.star.bnl.gov:/root/backups/db01.star.bnl.gov/root

2. bogart.star.bnl.gov:/root/backups/db01.star.bnl.gov/root

5. Online to Offline migration

$> ssh stardb@onl13.starp.bnl.gov;

$> cd dbcron/macros-new; ls;

Fill*.C macros are the online->offline migration macros. There is no need for a local or modified copy of the DB API; all macros use regular STAR libraries (see tcsh init scripts for details).

Macros are cron jobs. See cron for details (crontab -l). Macros are lockfile-protected to avoid overlap/pileup of cron jobs.
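For readers unfamiliar with lockfile protection, the idea can be sketched as below. This is not the wrapper actually used on onl13; it is a minimal illustration that relies on `mkdir` being atomic, with a made-up function name and lock path. A crashed job would leave a stale lock behind, which a production wrapper has to handle.

```shell
#!/bin/sh
# Sketch: run a command only if no previous instance holds the lock.
# run_locked and the lock location are hypothetical.
run_locked() {
    lockdir=$1
    shift
    # mkdir either creates the directory or fails, atomically.
    if ! mkdir "$lockdir" 2>/dev/null; then
        echo "previous run still holds $lockdir, skipping" >&2
        return 75
    fi
    "$@"
    rc=$?
    rmdir "$lockdir"
    return $rc
}

# Possible crontab usage (lock path is a placeholder):
#   run_locked /tmp/Fill_Magnet.lock root4star -b -q Fill_Magnet.C
```

Skipping a cycle when the lock is held is what prevents the cron pile-up: a slow macro delays its own next run instead of stacking processes.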

Monitoring :

http://online.star.bnl.gov/admin/status/

MQ-based Online API

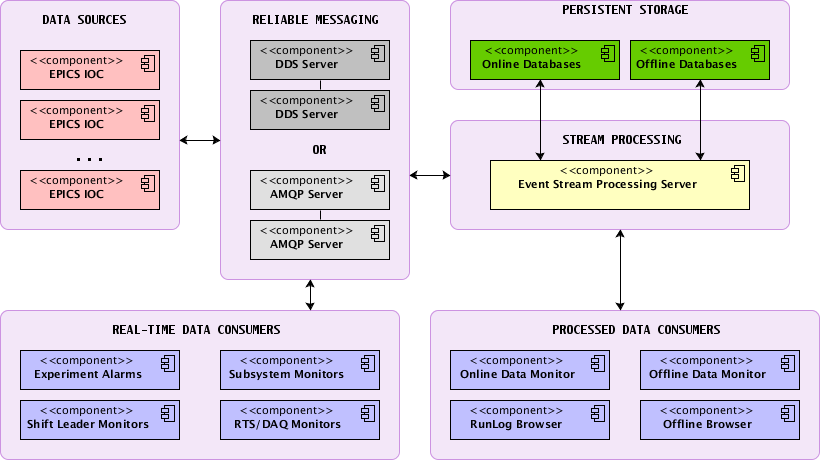

Intro

New Online API proposal: Message-Queue-based data exchange for STAR Online domain;

Purpose

Primary idea is to replace current DB-centric STAR Online system with industrial-strength Message Queueing service. Online databases will, then, take a proper data storage role, leaving information exchange to MQ server. STAR, as an experiment in-progress, is still growing every year, so standard information exchange protocol is required for all involved parties to enable efficient cross-communications.

It is proposed to leave EPICS system as it is now for Slow Controls part of Online domain, and allow easy data export from EPICS to MQ via specialized epics2mq services. Further, data will be stored to MySQL (or some other storage engine) via mq2db service(s). Clients could retrieve archived detector conditions either via direct MySQL access as it is now, or through properly formatted request to db2mq service.

[introduction-talk] [implementation-talk]

Primary components

- qpid: AMQP 0.10 server [src rpm] ("qpid-cpp-mrg" package, not the older "qpidc" one);

- python-qpid : python bindings to AMQP [src rpm] (0.7 version or newer);

- Google Protobuf: efficient cross-language, cross-platform serialization/deserialization library [src rpm];

- EPICS: Experimental Physics and Industrial Control System [rpm];

- log4cxx: C++ implementation of highly successful log4j library standard [src rpm];

Implementation

- epics2mq : service, which queries EPICS via EasyCA API, and submits Protobuf-encoded results to AMQP server;

- mq2db : service, which listens to AMQP storage queue for incoming Protobuf-encoded data, decodes it and stores it to MySQL (or some other backend db);

- db2mq : service, which listens to AMQP requests queue, queries backend database for data, encodes it in Protobuf format and sends it to the requestor;

- db2mq-client : example of client program for db2mq service (requests some info from db);

- mq-publisher-example : minimalistic example on how to publish Protobuf-encoded messages to AMQP server

- mq-subscriber-example : minimalistic example on how to subscribe to AMQP server queue, receive and decode Protobuf messages

Use-Cases

- STAR Conditions database population: epics2mq -> MQ -> mq2db; db2mq -> MQ -> db2mq-client;

- Data exchange between Online users: mq-publisher -> MQ -> mq-subscriber;

How-To: simple usage example

- login to [your_login_name]@onl11.starp.bnl.gov ;

- checkout mq-publisher-example and mq-subscriber-example from STAR CVS (see links above);

- compile both examples by typing "make" ;

- start both services, in either order: publisher and subscriber are independent, so the start order does not matter;

- watch log messages, read code, modify to your needs!

How-To: store EPICS channels to Online DB

- login to onl11.starp.bnl.gov;

- checkout epics2mq service from STAR CVS and compile it with "make";

- modify epics2mq-converter.ini - set proper storage path string, add desired epics channel names;

- run epics2mq-service as service: "nohup ./epics2mq-service epics2mq-converter.ini >& out.log &"

- watch new database, table and records arriving to Online DB - mq2db will automatically accept messages and create appropriate database structures!

How-To: read archived EPICS channel values from Online DB

- login to onl11.starp.bnl.gov;

- checkout db2mq-client from STAR CVS, compile with "make";

- modify db2mq-client code to your needs, or copy relevant parts into your project;

- enjoy!

MQ Monitoring

QPID server monitoring hints

To see what service is connected to our MQ server, one should use qpid-stat. Example:

$> qpid-stat -c -S cproc -I localhost:5672

Connections

client-addr           cproc            cpid   auth        connected       idle           msgIn  msgOut

========================================================================================================

127.0.0.1:54484       db2mq-service    9729   anonymous   2d 1h 44m 1s    2d 1h 39m 52s  29     0

127.0.0.1:56594       epics2mq-servic  31245  anonymous   5d 22h 39m 51s  4m 30s         5.15k  0

127.0.0.1:58283       epics2mq-servic  30965  anonymous   5d 22h 45m 50s  30s            5.16k  0

127.0.0.1:58281       epics2mq-servic  30813  anonymous   5d 22h 49m 18s  4m 0s          5.16k  0

127.0.0.1:55579       epics2mq-servic  28919  anonymous   5d 23h 56m 25s  1m 10s         5.20k  0

130.199.60.101:34822  epics2mq-servic  19668  anonymous   2d 1h 34m 36s   10s            17.9k  0

127.0.0.1:43400       mq2db-service    28586  anonymous   6d 0h 2m 38s    10s            25.7k  0

127.0.0.1:38496       qpid-stat        28995  guest@QPID  0s              0s             108    0
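A listing like this can also be checked automatically. The sketch below reads the multi-line text that qpid-stat prints to the terminal and reports which expected client processes are not connected. It assumes client addresses are printed as IPv4 address:port and that names in the cproc column are truncated as in the example; both function names are made up.

```shell
#!/bin/sh
# Sketch: parse "qpid-stat -c" output from stdin.
# connected_clients prints the distinct cproc names;
# missing_clients prints every expected name that is absent.
# Both helpers are hypothetical.
connected_clients() {
    awk '$1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+:[0-9]+$/ { print $2 }' | sort -u
}

missing_clients() {
    seen=$(connected_clients)
    for name in "$@"; do
        printf '%s\n' "$seen" | grep -qxF -- "$name" || echo "$name"
    done
}

# Possible usage (note the truncated "epics2mq-servic", as printed by qpid-stat):
#   qpid-stat -c -S cproc -I localhost:5672 \
#       | missing_clients mq2db-service db2mq-service epics2mq-servic
```

Empty output means that every expected service currently holds a connection to the broker.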

MQ Routing

QPID server routing (slave mq servers) configuration

MQ routing allows selected messages to be forwarded to remote MQ servers.

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 amq.topic gov.bnl.star.#

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 amq.direct gov.bnl.star.#

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 qpid.management console.event.#

ORBITED automatic startup fix for RHEL5

HOW TO FIX "ORBITED DOES NOT LISTEN TO PORT XYZ" issue

/etc/init.d/orbited needs to be corrected, because the --daemon option does not work on RHEL5 (orbited does not listen on the desired port). Here is what is needed:

Edit /etc/init.d/orbited and :

1. add

ORBITED="nohup /usr/bin/orbited > /dev/null 2>&1 &"

to the very beginning of the script, just below "lockfile=<bla>" line

2. modify "start" subroutine to use $ORBITED variable instead of --daemon switch. It should look like this :

daemon --check $prog $ORBITED

Enjoy your *working* "/sbin/service orbited start" command! Functionality can be verified by trying lsof -i :[your desired port] (e.g. ":9000"); it should display "orbited".

Online Recipes

- Restore RunLog Browser

- Restore Migration Code

- Restore Online Daemons

MySQL trigger for oversubscription protection

How-to enable total oversubscription check for Shift Signup (mysql trigger) :

delimiter |

CREATE TRIGGER stop_oversubscription_handler BEFORE INSERT ON Shifts

FOR EACH ROW BEGIN

SET @insert_failed := "";

SET @shifts_required := (SELECT shifts_required FROM ShiftAdmin WHERE institution_id = NEW.institution_id);

SET @shifts_exist := (SELECT COUNT(*) FROM Shifts WHERE institution_id = NEW.institution_id);

IF ( (@shifts_exist+1) >= (@shifts_required * 1.15)) THEN

SET @insert_failed := "oversubscription protection error";

SET NEW.beginTime := null;

SET NEW.endTime := null;

SET NEW.week := null;

SET NEW.shiftNumber := null;

SET NEW.shiftTypeID := null;

SET NEW.duplicate := null;

END IF;

END;

|

delimiter ;

Restore Migration Macros

The Migration Macros are monitored here; if they have stopped, the page will display values in red.

- Call Database Expert 356-2257

- Log onto onllinux6.starp.bnl.gov as stardb: ask Michael, Wayne, Gene, or Jerome for the password

- check for runaway processes: the macros run as cron jobs, so if a process starts before its previous execution finishes, the processes never end and build up

- If this is the case kill each of the processes

- cd to dbcron

Restore RunLog Browser

If the Run Log Browser is not Updating...

- make sure all options are selected and deselect "filter bad" (often people complain about missing runs that are simply not selected)

- Call DB EXPERT: 349-2257

- Check onldb.starp.bnl.gov

- log onto the node as stardb (password known by Michael, Wayne, Jerome, Gene)

- df -k (make sure a disk did not fill)

- If it did: cd into the appropriate data directory (e.g. /mysqldata00/Run_7/port_3501/mysql/data) and copy /dev/null into the large log file onldb.starp.bnl.gov.log: `cp /dev/null onldb.starp.bnl.gov.log`

- make sure the back-end runlog DAEMON is running

- execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh status ("Running" should be returned)

- restart the daemon: execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh start ("Running" should be returned)

- if "Not Running" is returned, there is a problem with the code or with DAQ

- contact DAQ expert to check their DB sender system

- refer to the steps below on debugging, modifying and recompiling the code

- execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh status

- Make sure Database is running

- mysql -S /tmp/mysql.3501.sock

- To restart db

- cd to /online/production/database/config

- execute `mysql5.production start 3501`

- try to connect

- debug/modify Daemon Code (be careful and log everything you do)

- check log file at /online/production/database/Run_7/dbSenders/run this may point to an obvious problem

- Source code is located in /online/production/database/Run_7/dbSenders/online/Conditions/run

- GDB is not available unless the code is recompiled NOT as a daemon

- COPY Makefile_debug to Makefile (remember to copy Makefile_good back to Makefile when finished)

- setenv DAEMON RunLogSender

- make

- executable is at /online/production/database/Run_7/dbSenders/bin

- gdb RunLog

Online Server Port Map

Below is a port/node mapping for the online databases, both current and archival.

Archival

| Run/Year | NODE | Port |

| Run 1 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3400 |

| Run 2 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3401 |

| Run 3 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3402 |

| Run 4 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3403 |

| Run 5 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3404 |

| Run 6 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3405 |

| Run 7 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3406 |

| Run 8 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3407 |

| Run 9 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3408 |

| Run 10 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3409 |

| Run 11 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3410 |

| Run 12 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3411 |

| Run 13 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3412 |

| Run 14 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3413 |

| Run 15 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3414 |

| Run 16 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3415 |

| Run 17 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3416 |

| Run 18 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3417 |

| Run 19 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3418 |

| Run 20 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3419 |

| Run 21 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3420 |

| Run 22 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3421 |

| Run 23 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3422 |

| Run 24 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3423 |

CURRENT (RUN 24/25)

| DATABASE | NODE | Port |

| [MASTER] Run Log, Conditions_rts, Shift Signup, Shift Log | onldb.starp.bnl.gov | 3501 |

| [MASTER] Conditions | onldb.starp.bnl.gov | 3502 |

| [MASTER] Daq Tag Tables | onldb.starp.bnl.gov | 3503 |

| [SLAVE] Run Log, Conditions_rts, Shift Signup, Shift Log | onldb2.starp.bnl.gov, onldb3.starp.bnl.gov, onldb4.starp.bnl.gov, mq01.starp.bnl.gov, mq02.starp.bnl.gov | 3501 |

| [SLAVE] Conditions | onldb2.starp.bnl.gov, onldb3.starp.bnl.gov, onldb4.starp.bnl.gov, mq01.starp.bnl.gov, mq02.starp.bnl.gov | 3502 |

| [SLAVE] Daq Tag Tables | onldb2.starp.bnl.gov, onldb3.starp.bnl.gov, onldb4.starp.bnl.gov, mq01.starp.bnl.gov, mq02.starp.bnl.gov | 3503 |

| [MASTER] MQ Conditions DB | mq01.starp.bnl.gov | 3606 |

| [SLAVE] MQ Conditions DB | mq02.starp.bnl.gov, onldb2.starp.bnl.gov, onldb3.starp.bnl.gov, onldb4.starp.bnl.gov | 3606 |

| RTS Database (MongoDB cluster) | mongodev01.starp.bnl.gov, mongodev02.starp.bnl.gov, mongodev03.starp.bnl.gov | 27017 |

End of the run procedures:

1. Freeze databases (especially ShiftSignup on 3501) by creating a new db instance on the next sequential port from the archival series and copying all dbs from all three ports to this port.

2. Move the previous run to dbbak.starp

3. Send email announcing the creation of this port, so web pages can be changed.

4. Tar/zip the directories and ship them to HPSS.

Prior to the next Run:

1. Clear out the dbs on the "current" ports. NOTE: do not clear out overhead tables (e.g., Nodes, NodeRelations, blahIDs etc.). There is a script on onldb /online/production/databases/createTables which does this. Make sure you read the README.

UPDATE: more advanced script to flush db ports is attached to this page (chdb.sh). Existing " createPort350X.sql " files do not care about overhead tables!

UPDATE.v2: RunLog.runTypes & RunLog.detectorTypes should not be cleared either // D.A.

UPDATE.V3: RunLog.destinationTypes should not be cleared // D.A.

Confirm all firewall issues are resolved from both the IP TABLES on the local host and from an institutional networking perspective. This should only really need to be addressed with a new node, but it is good to confirm prior to advertising availability.

Update the above tables.

2. Verify that the Online/Offline Detector ID list matches the RunLog list (table in RunLog db) :

http://www.star.bnl.gov/cgi-bin/protected/cvsweb.cgi/StRoot/RTS/include/rtsSystems.h

Online to Offline migration monitoring

ONLINE TO OFFLINE MIGRATION

online.star.bnl.gov/admin/status.php

If all entries are red, chances are we are not running, or there is a gap between runs (e.g., a beam dump). In this case please check the last run and/or time against the RunLog or Shift Log to confirm that the last run was migrated (this was most likely the case last night).

If one entry is red, please be sure that the latest value is _recent_, as some dbs are filled by hand once a year.

So we have a problem if:

- an entry is red, other values are green, and the last value of the red entry was recent;

- all values are red and you know we have been taking data for more than 0.75 hours. This is a rough estimate of time; keep in mind that migration of each db happens at different time intervals, so entries won't turn red all at once, nor will they turn green all at once. In fact, RICH scalers only get moved once an hour, so it will not be uncommon to see this entry red for a while after we just start taking data.

code is on stardb@onl13.starp.bnl.gov (formerly was on onllinux6.starp):

~/stardb/dbcron/macros

Below is the crontab output. To start the processes, uncomment the cron entries in stardb's crontab.

Times of transfers are as follows:

1,41 * * * * TpcGas

0,15,30,45 * * * * Clock

5,20,35,50 * * * * RDO

10,40 * * * * FTPCGAS

15 * * * * FTPCGASOUT

0,30 * * * * Trigger

25 * * * * TriggerPS

10,40 * * * * BeamInfo

15 * * * * Magnet

3,23,43 * * * * MagFactor

45 * * * * RichScalers

8,24,44 * * * * L0Trigger

6,18,32,48 * * * * FTPCVOLTAGE

10,35,50 * * * * FTPCTemps

check the log file in ../log to make sure the crons are moving data.
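The log check can be partly automated by listing log files that have not been touched recently. The sketch below assumes a `find` that supports `-mmin` (GNU and BSD find do) and treats every file in the log directory as a migration log. The 90-minute default is an assumption chosen to sit above the hourly RichScalers transfer, and `stale_logs` is a made-up name.

```shell
#!/bin/sh
# Sketch: print log files whose last modification is older than N minutes.
# stale_logs is a hypothetical helper; the threshold is an assumption.
stale_logs() {
    logdir=$1
    max_age_min=${2:-90}
    find "$logdir" -type f -mmin +"$max_age_min"
}

# Possible usage from the macros directory:
#   stale_logs ../log 90
```

An empty result while data taking is in progress suggests that every cron job has written to its log recently. It does not prove that data were actually moved, so the log contents still need a look.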

Backups of migration scripts and crontab are located here :

1. alpha.star.bnl.gov:/root/backups/onl13.starp.bnl.gov/dbuser

2. bogart.star.bnl.gov:/root/backups/onl13.starp.bnl.gov/dbuser

Standard caGet vs improved caGet comparison

Here is my summary of the caget performance studies done yesterday and today:

1. Right now, "normal" (sequential mode) caget from the CaTools package takes 0.25 sec to fetch 400 channels and, according to callgrind, it could be made even faster if I optimize various printf calls (40% speedup possible, see callgrind tree dump): http://www.star.bnl.gov/~dmitry/tmp/caget_sequential.png [Valgrind memcheck reports 910kb RAM used, no memory leaks]

2. At the same time, "bulk" (parallel mode) caget from the EzcaScan package takes 13 seconds to fetch the same 400 channels. Here is a callgrind tree again: http://www.star.bnl.gov/~dmitry/tmp/caget_parallel.png [Valgrind memcheck reports 970kb RAM used, no memory leaks]

For "parallel" caget, most of the time is spent in Ezca_getTypeCount and Ezca_pvlist_search. I tried all command-line options available for this caget, with the same result. This makes me believe that caget from the EzcaScan package is even less optimized in terms of performance. It could be better optimized in terms of network usage, though (otherwise its authors would not even mention "improvement over regular caget" in their docs). Another thing is that the current sequential caget is *possibly* using the same "bulk" mode internally (the "ca_array_get" function is seen for both cagets).

If this matters: for this test I used EPICS base 3.14.8 and the latest version of the EzcaScan package, recompiled with no/max optimizations in gcc.