Data Management

The data management section will have information on data transfer and development/consolidation of tools used in STAR for Grid data transfer.

SRM/DRM Testing June/July 2007

Charge

From email:

We had a discussion with Arie Shoshani and group pertaining

to the use of SRM (client and site caching) in our analysis

scenario. We agreed we would proceed with the following plan,

giving ourselves the best shot at achieving the milestone we

have with the OSG.

- first of all, we will try to restore the SRM service both at

LBNL and BNL. This will require

* Disk space for the SRM cache at LBNL - 500 GB is plenty

* Disk space for the SRM cache at BNL - same size is fine

- we hope for a test of transfer to be passed to the OSG troubleshooting

team who will stress test the data transfer as we have defined i.e.

* size test and long term stability - we would like to define a test

where each job would transfer 500 MB of data from LBNL to BNL

We would like 100 jobs submitted at a time

For the test to be run for at least a few days

* we would like to be sure the test includes burst of

100 requests transfer /mn to SRM

+ the success matrix

. how many times the service had to be restarted

. % success on data transfer

+ we need to document the setup i.e. number of streams

(MUST be greater than 1)

- whenever this test is declared successful, we would use

the deployment in our simulation production in real

production mode - the milestone would then be half

achieved

- To make our milestone fully completed, we would reach

+1 site. The question was which one?

* Our plan is to move to SRM v2.2 for this test - this

is the path which is more economical in terms of manpower,

OSG deliverables and allow for minimal reshuffling of

manpower and current assignment hence increasing our

chances for success.

* FermiGrid would not have SRM 2.2 however

=> We would then use UIC for this, possibly leveraging OSG

manpower to help with setting up a fully working

environment.

Our contact people would be

- Doug Olson for LBNL working with Alex Sim, Andrew Rose,

Eric Hjort (whenever necessary) and Alex Sim

* The work with the OSG troubleshooting team will be

coordinated from LBNL side

* We hope Andrew/Eric will work along with Alex to

set the test described above

- Wayne Betts for access to the infrastructure at BNL

(assistance from everyone to clean the space if needed)

- Olga Barannikova will be our contact for UIC - we will

come back to this later according to the strawman plan

above

As a reminder, I have discussed with Ruth that at

this stage, and after many years of work which are bringing

exciting and encouraging sign of success (the recent production

stability being one) I have however no intent to move, re-scope

or re-schedule our milestone. Success of this milestone is path

forward to make Grid computing part of our plan for the future.

As our visit was understood and help is mobilized, we clearly

see that success is reachable.

I count on all of you for full assistance with

this process.

Thank you,

--

,,,,,

( o o )

--m---U---m--

Jerome
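The numbers in the email above imply a simple sizing check, sketched here in Python. The constants are taken from the email; the helper names and the 1 GB = 1000 MB convention are assumptions made for illustration and are not part of the agreed plan.

```python
# Figures quoted in the email above.
JOBS_PER_BATCH = 100   # jobs submitted at a time
MB_PER_JOB = 500       # data transferred by each job, LBNL to BNL
CACHE_GB = 500         # SRM cache size requested at each site


def batch_volume_gb(jobs=JOBS_PER_BATCH, mb_per_job=MB_PER_JOB):
    """Data moved by one batch of jobs, in GB (assuming 1 GB = 1000 MB)."""
    return jobs * mb_per_job / 1000


def batches_that_fit(cache_gb=CACHE_GB):
    """How many full batches fit in the cache before anything is cleaned up."""
    return int(cache_gb // batch_volume_gb())


def success_rate(succeeded, attempted):
    """Percentage of successful transfers, one of the metrics named in the email."""
    if attempted == 0:
        raise ValueError("no transfers attempted")
    return 100.0 * succeeded / attempted


if __name__ == "__main__":
    print(batch_volume_gb(), "GB per batch")
    print(batches_that_fit(), "batches fit in the cache")
```

Under these assumptions a single batch moves 50 GB, so a 500 GB cache holds about ten batches' worth of files if nothing is released in between.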

Test Plan (Alex S., 14 June)

Hi all,

The following plan will be performed for STAR SRM test by SDM group with

BeStMan SRM v2.2.

Andrew Rose will duplicate, in the meantime, the successful analysis case

that Eric Hjort had previously.

1. small local setup

1.1. small number of analysis jobs will be submitted directly to PDSF job

queue.

1.2. A job will transfer files from datagrid.lbl.gov via gsiftp into the

PDSF project working cache.

1.3. a fake analysis will be performed to produce a result file.

1.4. the job will issue srm-client to call BeStMan to transfer the result

file out to datagrid.lbl.gov via gsiftp.

2. small remote setup

2.1. small number of analysis jobs will be submitted directly to PDSF job

queue.

2.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

2.3. a fake analysis will be performed to produce a result file.

2.4. the job will issue srm-client to call BeStMan to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

3. large local setup

3.1. about 100-200 analysis jobs will be submitted directly to PDSF job

queue.

3.2. A job will transfer files from datagrid.lbl.gov via gsiftp into the

PDSF project working cache.

3.3. a fake analysis will be performed to produce a result file.

3.4. the job will issue srm-client to call BeStMan to transfer the result

file out to datagrid.lbl.gov via gsiftp.

4. large remote setup

4.1. about 100-200 analysis jobs will be submitted directly to PDSF job

queue.

4.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

4.3. a fake analysis will be performed to produce a result file.

4.4. the job will issue srm-client to call BeStMan to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

5. small remote sums setup

5.1. small number of analysis jobs will be submitted to SUMS.

5.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

5.3. a fake analysis will be performed to produce a result file.

5.4. the job will issue srm-client to call BeStMan to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

6. large remote sums setup

6.1. about 100-200 analysis jobs will be submitted to SUMS.

6.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

6.3. a fake analysis will be performed to produce a result file.

6.4. the job will issue srm-client to call BeStMan to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

7. have Andrew and Lidia use the setup #6 to test with real analysis jobs

8. have a setup #5 on UIC and test

9. have a setup #6 on UIC and test

10. have Andrew and Lidia use the setup #9 to test with real analysis jobs

Any questions? I'll let you know when things are in progress.

-- Alex

asim at lbl dot gov

Site Bandwidth Testing

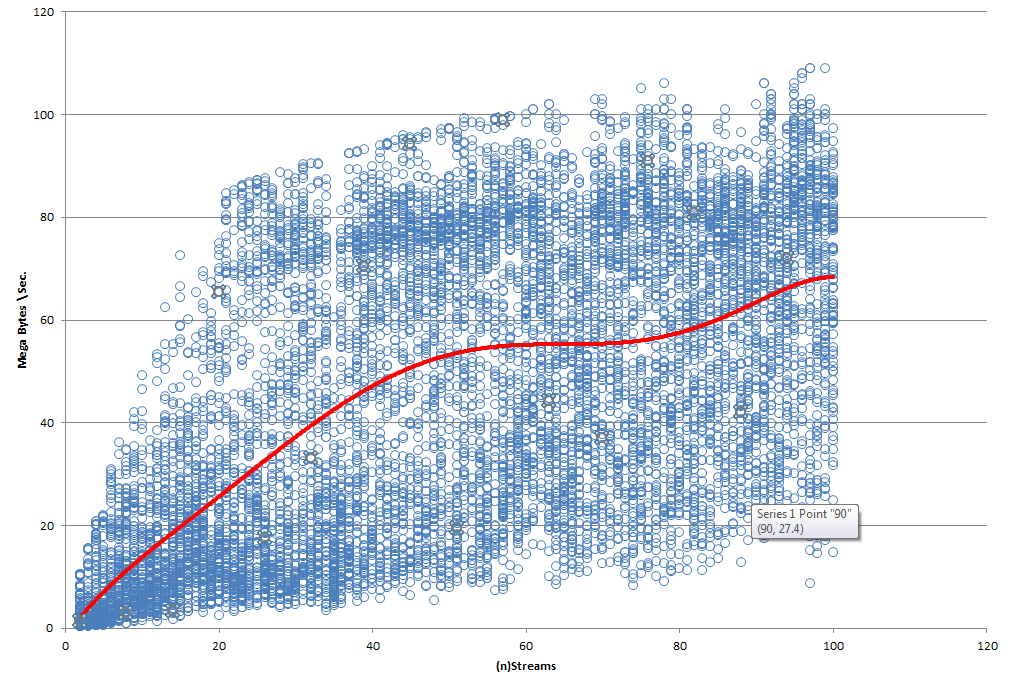

The above is a bandwidth test done using the tool iperf (version iperf_2.0.2-4_i386) between the site KISTI (ui03.sdfarm.kr) and BNL (stargrid03) around the beginning of the year 2014. The connection was noted to collapse (drop to zero) a few times during testing before a full plot could be prepared.

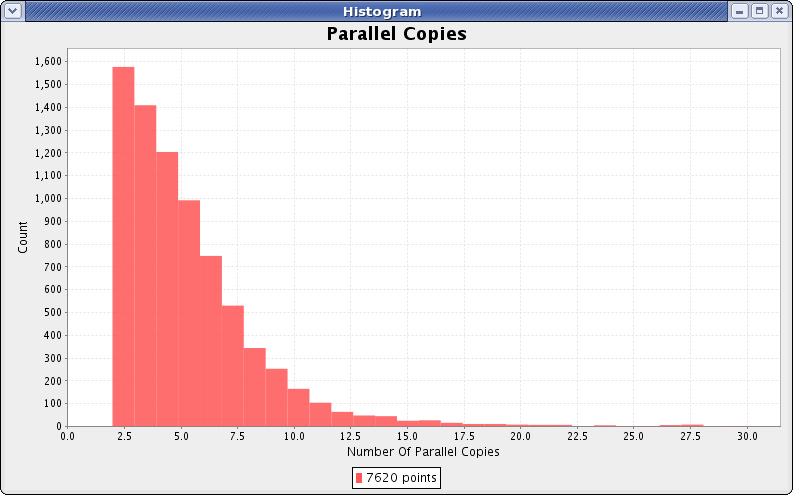

The above histogram shows the number of simultaneous copies in one-minute bins, extracted from a segment of a few weeks of the actual production at KISTI. Solitary copies are suppressed because they overwhelm the plot. Copies represent less than 1% of the jobs' total run time.
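How the histogram was extracted is not described here. The following Python sketch shows one plausible way to build such a plot, assuming each copy is recorded as a (start, end) pair in seconds; the function names and the example intervals are hypothetical.

```python
from collections import Counter

# Hypothetical copy intervals (start_second, end_second); not real production data.
EXAMPLE_INTERVALS = [(0, 30), (10, 50), (70, 80), (200, 210), (205, 215), (207, 220)]


def copies_per_minute(intervals):
    """For each one-minute bin, count the copies that were active in that bin."""
    active = Counter()
    for start, end in intervals:
        for minute in range(int(start // 60), int(end // 60) + 1):
            active[minute] += 1
    return active


def concurrency_histogram(intervals, suppress_solitary=True):
    """Map 'number of simultaneous copies' to 'number of one-minute bins'."""
    histogram = Counter(copies_per_minute(intervals).values())
    if suppress_solitary:
        # Drop bins holding a single copy, as done for the plot above.
        histogram.pop(1, None)
    return dict(histogram)


if __name__ == "__main__":
    print(concurrency_histogram(EXAMPLE_INTERVALS))
```

With the example data, one bin holds two copies and one bin holds three; the bin with a single copy is suppressed.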

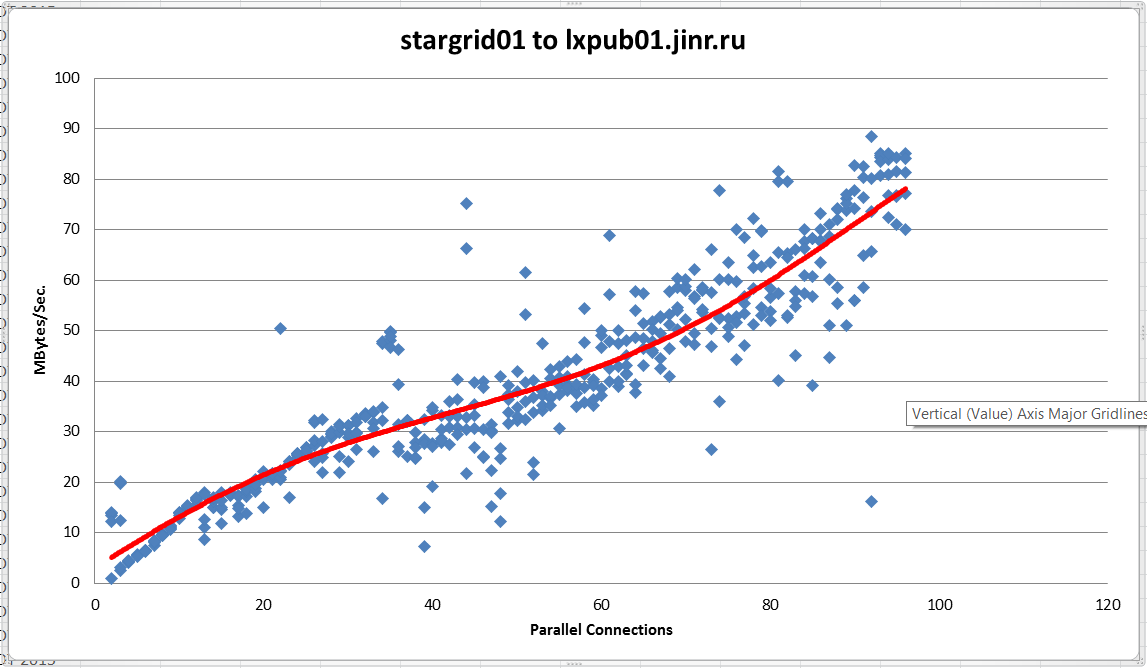

The above is a bandwidth test done using the tool iperf (version iperf_2.0.2-4_i386) between the site Dubna (lxpub01.jinr.ru) and BNL (stargrid01) on 8/14/2015. After exactly 97 parallel connections the connection was noted to collapse, with many parallel processes timing out. This behavior was consistent across three attempts but was not present at any lower number of parallel connections. It is suspected that a soft limit is placed on the number of parallel processes somewhere. The raw data is attached at the bottom.

The 2006 STAR analysis scenario

This page will describe in detail the STAR analysis scenario as it was in ~2006. This scenario involves SUMS grid job submission at RCF through Condor-G to PDSF, using SRMs at both ends to transfer input and output files in a managed fashion.

Transfer BNL/PDSF, summer 2009

This page will document the data transfers from/to PDSF to/from BNL in the summer/autumn of 2009.

October 17, 2009

I repeated earlier tests I had run with Dan Gunter (see "Previous results" below). It takes only 3 streams to saturate the 1GigE network interface of stargrid04.

[stargrid04] ~/> globus-url-copy -vb file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

2389704704 bytes 23.59 MB/sec avg 37.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 8388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

1569718272 bytes 35.39 MB/sec avg 39.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

1607467008 bytes 35.44 MB/sec avg 38.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 2 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

3414425600 bytes 72.36 MB/sec avg 63.95 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 4 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

8569487360 bytes 108.97 MB/sec avg 111.80 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 3 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

5576065024 bytes 106.36 MB/sec avg 109.70 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

625999872 bytes 9.95 MB/sec avg 19.01 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

1523580928 bytes 30.27 MB/sec avg 38.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -p 2 -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

8712617984 bytes 71.63 MB/sec avg 75.87 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -p 3 -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

7064518656 bytes 102.08 MB/sec avg 111.88 MB/sec inst
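To compare the `-vb` figures above with the 1 GigE line rate, they need converting from MB/sec to Mbit/s. The Python sketch below parses a `-vb` progress line and does the conversion. Whether globus-url-copy counts a MB as 2^20 or 10^6 bytes is an assumption here, so it is left as a parameter; the function names are illustrative.

```python
import re

# Matches progress lines such as:
# "8569487360 bytes 108.97 MB/sec avg 111.80 MB/sec inst"
VB_LINE = re.compile(
    r"(\d+)\s+bytes\s+([\d.]+)\s+MB/sec avg\s+([\d.]+)\s+MB/sec inst"
)


def parse_vb(line):
    """Return (bytes_transferred, avg_mb_per_sec, inst_mb_per_sec)."""
    match = VB_LINE.search(line)
    if match is None:
        raise ValueError(f"not a -vb progress line: {line!r}")
    return int(match.group(1)), float(match.group(2)), float(match.group(3))


def mb_per_sec_to_mbit(mb_per_sec, bytes_per_mb=1024 * 1024):
    """Convert MB/sec to Mbit/s; bytes_per_mb is an assumption (2**20 by default)."""
    return mb_per_sec * bytes_per_mb * 8 / 1e6


if __name__ == "__main__":
    _, avg, _ = parse_vb("8569487360 bytes 108.97 MB/sec avg 111.80 MB/sec inst")
    print(f"binary MB:  {mb_per_sec_to_mbit(avg):.0f} Mbit/s")
    print(f"decimal MB: {mb_per_sec_to_mbit(avg, 10**6):.0f} Mbit/s")
```

For the 4-stream run this gives roughly 870 to 915 Mbit/s depending on the convention, which is consistent with the statement that 3 to 4 streams saturate the 1 GigE interface.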

October 15, 2009 - evening

After replacing the network card with a 10GigE card so that we could plug directly into the core switch, a quick test gives:

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005

TCP window size: 8.00 MByte

------------------------------------------------------------

[ 3] local 130.199.6.109 port 50291 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-120.0 sec 4.39 GBytes 314 Mbits/sec

[ 3] MSS size 1368 bytes (MTU 1408 bytes, unknown interface)

More work tomorrow.
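When reading iperf summaries like the one above, it helps to know how the Transfer and Bandwidth columns relate. The Python sketch below assumes binary prefixes for bytes (1 GByte = 2^30 bytes) and decimal prefixes for bits (1 Mbit = 10^6 bits), which is consistent with the figures in this log; the function name is illustrative.

```python
# Assumed unit conventions, consistent with the numbers reported on this page.
BYTES_PER_UNIT = {"MBytes": 2**20, "GBytes": 2**30}


def iperf_mbits_per_sec(transfer, unit, seconds):
    """Recompute the Bandwidth column from the Transfer column and the interval."""
    return transfer * BYTES_PER_UNIT[unit] * 8 / seconds / 1e6


if __name__ == "__main__":
    # 4.39 GBytes in 120 s, reported above as 314 Mbits/sec.
    print(f"{iperf_mbits_per_sec(4.39, 'GBytes', 120):.1f} Mbit/s")
```

Small differences from the reported value are expected because the Transfer column is itself rounded.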

October 15, 2009

Comparison between the signal from an optical tap at the NERSC border with the tcpdump on the node showed most of the loss happening between the border and pdsfsrm.nersc.gov.

More work was done to optimize single-stream throughput.

- pdsfsrm was moved from a switch that serves the rack where it resides to a switch that is one level up and closer to the border

- a configuration of the forcedeth driver was changed (options forcedeth optimization_mode=1 poll_interval=100 set in /etc/modprobe.conf).

Changes resulted in an improved throughput but it is still far from what it should be (see details below). We are going to insert a 10 GigE card into the node and move it even closer to the border.

Here are the results with those buffer memory settings as of the morning of 10/15/2009. There is a header from the first measurement and then results from a few tests run minutes apart.

-------------------------------------------------------------------------

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

-------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005

TCP window size: 8.00 MByte

-------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 44070 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-120.0 sec 1.81 GBytes 129 Mbits/sec

[ 3] 0.0-120.0 sec 3.30 GBytes 236 Mbits/sec

[ 3] 0.0-120.0 sec 1.86 GBytes 133 Mbits/sec

[ 3] 0.0-120.0 sec 2.04 GBytes 146 Mbits/sec

[ 3] 0.0-120.0 sec 3.61 GBytes 258 Mbits/sec

[ 3] 0.0-120.0 sec 1.88 GBytes 135 Mbits/sec

[ 3] 0.0-120.0 sec 3.35 GBytes 240 Mbits/sec

Then I restored the "dtn" buffer memory settings - again morning 10/15/2009 and I got similar if not worse results:

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

-------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005

TCP window size: 8.00 MByte

-------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 44361 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-120.0 sec 2.34 GBytes 168 Mbits/sec

[ 3] 0.0-120.0 sec 1.42 GBytes 101 Mbits/sec

[ 3] 0.0-120.0 sec 2.08 GBytes 149 Mbits/sec

[ 3] 0.0-120.0 sec 2.13 GBytes 152 Mbits/sec

[ 3] 0.0-120.0 sec 1.76 GBytes 126 Mbits/sec

[ 3] 0.0-120.0 sec 1.42 GBytes 102 Mbits/sec

[ 3] 0.0-120.0 sec 2.07 GBytes 148 Mbits/sec

[ 3] 0.0-120.0 sec 2.07 GBytes 148 Mbits/sec

And here, for comparison and to show how things vary with more or less the same load on pdsfgrid2, are the results for the "dtn" settings, just like above, from the afternoon of 10/14/2009.

--------------------------------------------------------------------------------------

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

--------------------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005

TCP window size: 8.00 MByte

--------------------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 34366 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-120.0 sec 1.31 GBytes 93.5 Mbits/sec

[ 3] 0.0-120.0 sec 1.58 GBytes 113 Mbits/sec

[ 3] 0.0-120.0 sec 1.75 GBytes 126 Mbits/sec

[ 3] 0.0-120.0 sec 1.88 GBytes 134 Mbits/sec

[ 3] 0.0-120.0 sec 2.56 GBytes 183 Mbits/sec

[ 3] 0.0-120.0 sec 2.53 GBytes 181 Mbits/sec

[ 3] 0.0-120.0 sec 3.25 GBytes 232 Mbits/sec

Since the "80Mb/s or worse" rate persisted for a long time and was measured on various occasions, the new numbers are due to either the forcedeth parameter or the switch change. Most probably it was the switch. It is also true that the "dtn" settings were able to cope slightly better with the location on the Dell switch, but they seem not to do much when pdsfgrid2 is plugged directly into the "old pdsfcore" switch.
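The three sets of 120-second iperf runs above can be summarized side by side. The Python sketch below copies the Mbits/sec values from the logs; the labels and the helper name are illustrative only.

```python
from statistics import mean

# Mbits/sec values copied from the three iperf logs above.
RUNS = {
    "10/15 morning, first set": [129, 236, 133, 146, 258, 135, 240],
    "10/15 morning, dtn settings restored": [168, 101, 149, 152, 126, 102, 148, 148],
    "10/14 afternoon, dtn settings": [93.5, 113, 126, 134, 183, 181, 232],
}


def summarize(samples):
    """Return the minimum, mean and maximum of a list of throughput samples."""
    return {"min": min(samples), "mean": mean(samples), "max": max(samples)}


if __name__ == "__main__":
    for label, samples in RUNS.items():
        s = summarize(samples)
        print(f"{label}: min {s['min']}, mean {s['mean']:.1f}, max {s['max']} Mbit/s")
```

The means come out at roughly 182, 137 and 152 Mbit/s, but the spread within each set (for example 129 to 258 Mbit/s in the first) is larger than the differences between the means, so the comparison between settings should be read with caution.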

October 2, 2009

Notes on third party srm-copy to PDSF:

1) on PDSF interactive node, you need to set up your environment:

source /usr/local/pkg/OSG-1.2/setup.csh

2) srm-copy (recursive) has the following form:

srm-copy gsiftp://stargrid04.rcf.bnl.gov//star/institutions/lbl_prod/andrewar/transfer/reco/production_dAu/ReversedFullField/P08ie/2008/023b/ srm://pdsfsrm.nersc.gov:62443/srm/v2/server\?SFN=/eliza9/starprod/reco/production_dAu/ReversedFullField/P08ie/2008/023/ -recursive -td /eliza9/starprod/reco/production_dAu/ReversedFullField/P08ie/2008/023/

October 1, 2009

We conducted srm-copy tests between RCF and PDSF this week. Initially, the rates we saw for a third party srm-copy between RCF (stargrid04) and PDSF (pdsfsrm) are detailed in plots from Dan:

September 24, 2009

We updated the transfer procedure to make use of the OSG automated monitoring tools. Previously, the transfers ran between stargrid04 and one of the NERSC data transfer nodes. To take advantage of Dan's automated log harvesting, we're switching the target to pdsfsrm.nersc.gov.

Transfers between stargrid04 and pdsfsrm are fairly stable at ~20MBytes/sec (as reported by the "-vb" option in the globus-url-copy). The command used is of the form:

globus-url-copy -r -p 15 gsiftp://stargrid04.rcf.bnl.gov/[dir]/ gsiftp://pdsfsrm.nersc.gov/[target dir]/

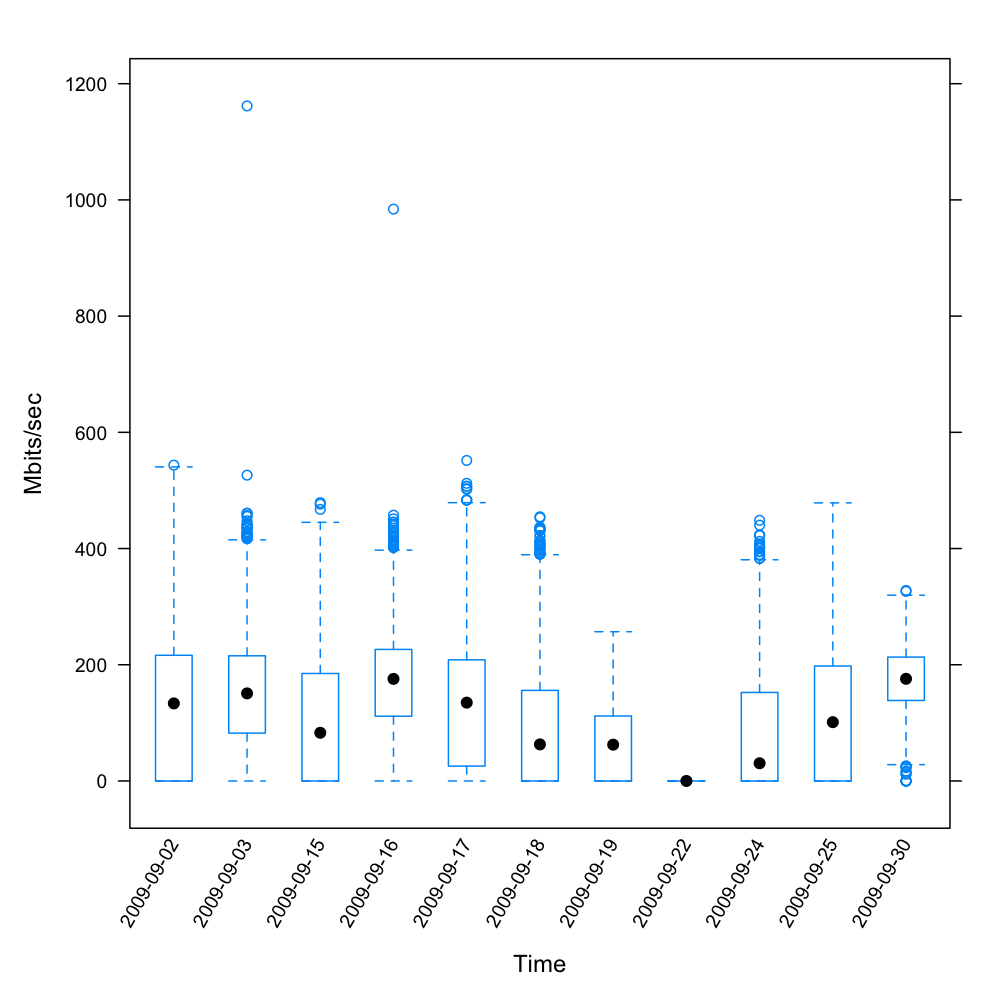

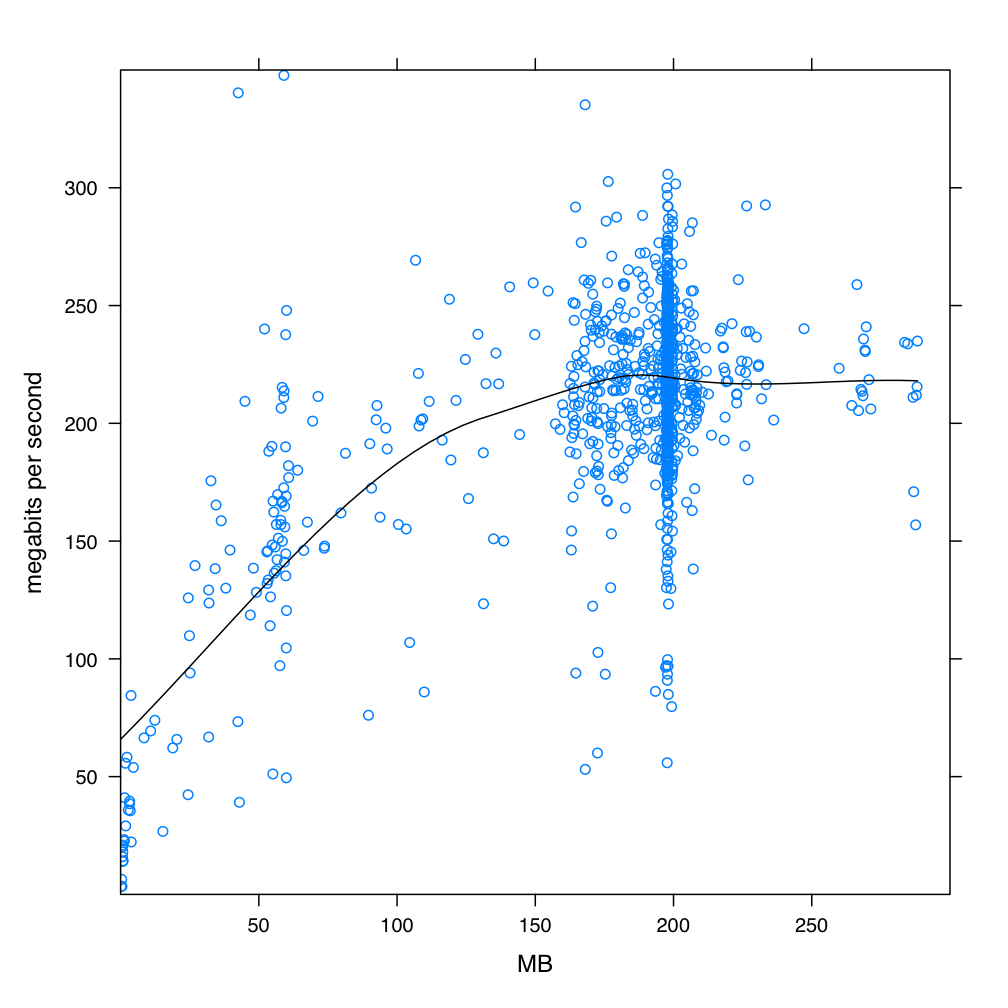

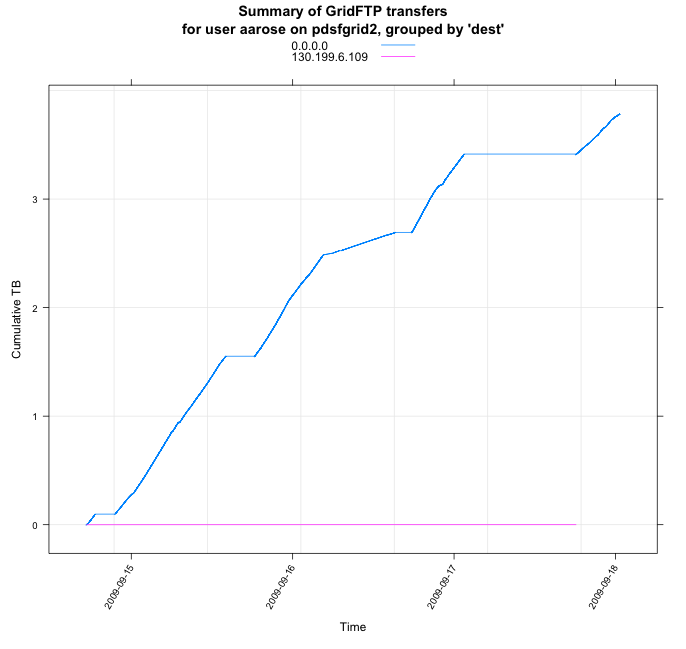

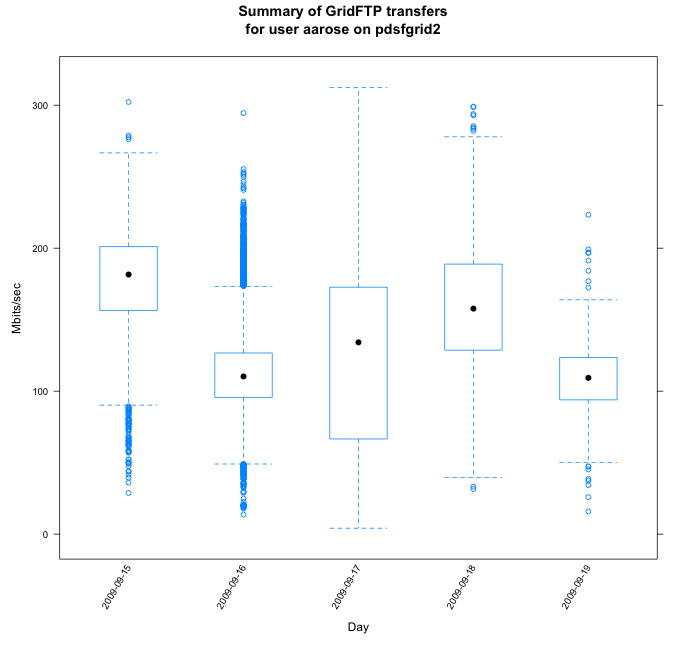

Plots from the first set using the pdsfsrm node:

The most recent rates seen are given in Dan's plots from Sept. 23rd:

So, the data transfer is progressing at ~100-200 Mb/s. We will next compare to rates using the new BeStMan installation at PDSF.

Previous results

Tests have been repeated as a new node (stargrid10) became available. We ran from the SRM end host at PDSF, pdsfgrid2.nersc.gov, to the new stargrid10.rhic.bnl.gov endpoint at BNL. Because of firewalls we could only run from PDSF to BNL, not the other way. A 60-second test got about 75Mb/s. This number is consistent with earlier iperf tests between stargrid02 and pdsfgrid2.

globus-url-copy with 8 streams would go up to 400Mb/s and with 16 streams to 550Mb/s. Also with stargrid10, the transfer rates would be the same to and from BNL.

Details below.

pdsfgrid2 59% iperf -s -f m -m -p 60005 -w 8388608 -t 60 -i 2

------------------------------------------------------------

Server listening on TCP port 60005

TCP window size: 16.0 MByte (WARNING: requested 8.00 MByte)

------------------------------------------------------------

[ 4] local 128.55.36.74 port 60005 connected with 130.199.6.208 port 36698

[ 4] 0.0- 2.0 sec 13.8 MBytes 57.9 Mbits/sec

[ 4] 2.0- 4.0 sec 19.1 MBytes 80.2 Mbits/sec

[ 4] 4.0- 6.0 sec 4.22 MBytes 17.7 Mbits/sec

[ 4] 6.0- 8.0 sec 0.17 MBytes 0.71 Mbits/sec

[ 4] 8.0-10.0 sec 2.52 MBytes 10.6 Mbits/sec

[ 4] 10.0-12.0 sec 16.7 MBytes 70.1 Mbits/sec

[ 4] 12.0-14.0 sec 17.4 MBytes 73.1 Mbits/sec

[ 4] 14.0-16.0 sec 16.1 MBytes 67.7 Mbits/sec

[ 4] 16.0-18.0 sec 15.8 MBytes 66.4 Mbits/sec

[ 4] 18.0-20.0 sec 17.5 MBytes 73.6 Mbits/sec

[ 4] 20.0-22.0 sec 17.6 MBytes 73.7 Mbits/sec

[ 4] 22.0-24.0 sec 18.1 MBytes 75.8 Mbits/sec

[ 4] 24.0-26.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 26.0-28.0 sec 19.3 MBytes 80.9 Mbits/sec

[ 4] 28.0-30.0 sec 13.8 MBytes 58.1 Mbits/sec

[ 4] 30.0-32.0 sec 14.5 MBytes 60.7 Mbits/sec

[ 4] 32.0-34.0 sec 14.7 MBytes 61.8 Mbits/sec

[ 4] 34.0-36.0 sec 14.6 MBytes 61.2 Mbits/sec

[ 4] 36.0-38.0 sec 17.2 MBytes 72.2 Mbits/sec

[ 4] 38.0-40.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 40.0-42.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 42.0-44.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 44.0-46.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 46.0-48.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 48.0-50.0 sec 19.1 MBytes 79.9 Mbits/sec

[ 4] 50.0-52.0 sec 19.3 MBytes 80.9 Mbits/sec

[ 4] 52.0-54.0 sec 19.4 MBytes 81.3 Mbits/sec

[ 4] 54.0-56.0 sec 19.4 MBytes 81.5 Mbits/sec

[ 4] 56.0-58.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 58.0-60.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 0.0-60.4 sec 489 MBytes 68.0 Mbits/sec

[ 4] MSS size 1368 bytes (MTU 1408 bytes, unknown interface)

The client was on stargrid10.

on stargrid10

from stargrid10 to pdsfgrid2:

[stargrid10] ~/> globus-url-copy -vb file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

513802240 bytes 7.57 MB/sec avg 9.09 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 4 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

1863843840 bytes 25.39 MB/sec avg 36.25 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 6 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

3354394624 bytes 37.64 MB/sec avg 44.90 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 8 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

5016649728 bytes 47.84 MB/sec avg 57.35 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 12 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

5588647936 bytes 62.70 MB/sec avg 57.95 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 16 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

Source: file:///dev/

Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

15292432384 bytes 74.79 MB/sec avg 65.65 MB/sec inst

Cancelling copy...

and on stargrid10 the other way, from pdsfgrid2 to stargrid10 (similar although slightly better)

[stargrid10] ~/> globus-url-copy -vb gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

Source: gsiftp://pdsfgrid2.nersc.gov/dev/

Dest: file:///dev/

zero -> null

1693450240 bytes 11.54 MB/sec avg 18.99 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 4 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

Source: gsiftp://pdsfgrid2.nersc.gov/dev/

Dest: file:///dev/

zero -> null

12835618816 bytes 45.00 MB/sec avg 73.50 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 8 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

Source: gsiftp://pdsfgrid2.nersc.gov/dev/

Dest: file:///dev/

zero -> null

14368112640 bytes 69.20 MB/sec avg 100.50 MB/sec inst

And now on pdsfgrid2, from pdsfgrid2 to stargrid10 (similar to the result for 4 streams in the same direction above)

pdsfgrid2 70% globus-url-copy -vb -p 4 file:///dev/zero gsiftp://stargrid10.rcf.bnl.gov/dev/null

Source: file:///dev/

Dest: gsiftp://stargrid10.rcf.bnl.gov/dev/

zero -> null

20869021696 bytes 50.39 MB/sec avg 73.05 MB/sec inst

Cancelling copy...

And to stargrid02 it is really, really bad, but since the node is going away we won't be investigating the mystery.

pdsfgrid2 71% globus-url-copy -vb -p 4 file:///dev/zero gsiftp://stargrid02.rcf.bnl.gov/dev/null

Source: file:///dev/

Dest: gsiftp://stargrid02.rcf.bnl.gov/dev/

zero -> null

275513344 bytes 2.39 MB/sec avg 2.40 MB/sec inst

Cancelling copy...

12 Mar 2009

Baseline from bwctl from SRM end host at PDSF -- pdsfgrid2.nersc.gov -- to a perfsonar endpoint at BNL -- lhcmon.bnl.gov. Because of firewalls, could only run from PDSF to BNL, not the other way around. Last I checked, this direction was getting about 5Mb/s from SRM. A 60-second test to the perfsonar host got about 275Mb/s.

Summary: Current baseline from perfSONAR is more than 50X what we're seeing.

RECEIVER START

bwctl: exec_line: /usr/local/bin/iperf -B 192.12.15.23 -s -f m -m -p 5008 -w 8388608 -t 60 -i 2

bwctl: start_tool: 3445880257.865809

------------------------------------------------------------

Server listening on TCP port 5008

Binding to local address 192.12.15.23

TCP window size: 16.0 MByte (WARNING: requested 8.00 MByte)

------------------------------------------------------------

[ 14] local 192.12.15.23 port 5008 connected with 128.55.36.74 port 5008

[ 14] 0.0- 2.0 sec 7.84 MBytes 32.9 Mbits/sec

[ 14] 2.0- 4.0 sec 38.2 MBytes 160 Mbits/sec

[ 14] 4.0- 6.0 sec 110 MBytes 461 Mbits/sec

[ 14] 6.0- 8.0 sec 18.3 MBytes 76.9 Mbits/sec

[ 14] 8.0-10.0 sec 59.1 MBytes 248 Mbits/sec

[ 14] 10.0-12.0 sec 102 MBytes 428 Mbits/sec

[ 14] 12.0-14.0 sec 139 MBytes 582 Mbits/sec

[ 14] 14.0-16.0 sec 142 MBytes 597 Mbits/sec

[ 14] 16.0-18.0 sec 49.7 MBytes 208 Mbits/sec

[ 14] 18.0-20.0 sec 117 MBytes 490 Mbits/sec

[ 14] 20.0-22.0 sec 46.7 MBytes 196 Mbits/sec

[ 14] 22.0-24.0 sec 47.0 MBytes 197 Mbits/sec

[ 14] 24.0-26.0 sec 81.5 MBytes 342 Mbits/sec

[ 14] 26.0-28.0 sec 75.9 MBytes 318 Mbits/sec

[ 14] 28.0-30.0 sec 45.5 MBytes 191 Mbits/sec

[ 14] 30.0-32.0 sec 56.2 MBytes 236 Mbits/sec

[ 14] 32.0-34.0 sec 55.5 MBytes 233 Mbits/sec

[ 14] 34.0-36.0 sec 58.0 MBytes 243 Mbits/sec

[ 14] 36.0-38.0 sec 61.0 MBytes 256 Mbits/sec

[ 14] 38.0-40.0 sec 61.6 MBytes 258 Mbits/sec

[ 14] 40.0-42.0 sec 72.0 MBytes 302 Mbits/sec

[ 14] 42.0-44.0 sec 62.6 MBytes 262 Mbits/sec

[ 14] 44.0-46.0 sec 64.3 MBytes 270 Mbits/sec

[ 14] 46.0-48.0 sec 66.1 MBytes 277 Mbits/sec

[ 14] 48.0-50.0 sec 33.6 MBytes 141 Mbits/sec

[ 14] 50.0-52.0 sec 63.0 MBytes 264 Mbits/sec

[ 14] 52.0-54.0 sec 55.7 MBytes 234 Mbits/sec

[ 14] 54.0-56.0 sec 56.9 MBytes 239 Mbits/sec

[ 14] 56.0-58.0 sec 59.5 MBytes 250 Mbits/sec

[ 14] 58.0-60.0 sec 50.7 MBytes 213 Mbits/sec

[ 14] 0.0-60.3 sec 1965 MBytes 273 Mbits/sec

[ 14] MSS size 1448 bytes (MTU 1500 bytes, ethernet)

bwctl: stop_exec: 3445880322.405938

RECEIVER END

11 Feb 2009

By: Dan Gunter and Iwona Sakrejda

Measured between the STAR SRM hosts at NERSC/PDSF and Brookhaven:

- pdsfgrid2.nersc.gov (henceforth, "PDSF")

- stargrid02.rcf.bnl.gov (henceforth, "BNL")

Current data flow is from PDSF to BNL, but plans are to have data flow both ways.

All numbers are in megabits per second (Mb/s). Layer 4 (transport) protocol was TCP. Tests were at least 60 sec. long, 120 sec. for the higher numbers (to give it time to ramp up). All numbers are approximate, of course.

Both sides had recent Linux kernels with auto-tuning. The max buffer sizes were at Brian Tierney's recommended sizes.

From BNL to PDSF

Tool: iperf

- 1 stream: 50-60 Mb/s (but some dips around 5Mb/s)

- 8 or 16 streams: 250-300Mb/s aggregate

Tool: globus-url-copy (see PDSF to BNL for details). This was to confirm that globus-url-copy and iperf were roughly equivalent.

- 1 stream: ~70 Mb/s

- 8 streams: 250-300 Mb/s aggregate. Note: got same number with PDSF iptables turned off.

From PDSF to BNL

Tool: globus-url-copy (gridftp) -- iperf could not connect, which we proved was due to BNL restrictions by temporarily disabling IPtables at PDSF. To avoid any possible I/O effects, ran globus-url-copy from /dev/zero to /dev/null.

- 1 stream: 5 Mb/s

- 8 streams: 40 Mb/s

- 64 streams: 250-300 Mb/s aggregate. Note: got same number with PDSF iptables turned off.
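Dividing the aggregate PDSF to BNL rates above by the number of streams shows how each stream behaves. This is a minimal Python sketch using only the figures quoted in the list; the dictionary layout and function name are illustrative.

```python
# PDSF to BNL, globus-url-copy: streams -> (low, high) aggregate rate in Mb/s.
PDSF_TO_BNL = {1: (5, 5), 8: (40, 40), 64: (250, 300)}


def per_stream(aggregate_mbps, streams):
    """Average rate carried by each stream, in Mb/s."""
    return aggregate_mbps / streams


if __name__ == "__main__":
    for streams, (low, high) in PDSF_TO_BNL.items():
        print(f"{streams:2d} streams: {per_stream(low, streams):.1f}"
              f" to {per_stream(high, streams):.1f} Mb/s per stream")
```

Each stream carries about 4 to 5 Mb/s regardless of the stream count, so the aggregate scales almost linearly. That pattern suggests a per-stream limit in this direction rather than a saturated path, although the measurements above do not identify the cause.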

18 Aug 2008 - BNL (stargrid02) - LBLnet (dlolson)

Below are results from iperf tests from BNL to LBL. 650 Mbps with very little loss is quite good. For the uninformed (like me): we ran an iperf server on dlolson.lbl.gov listening on port 40050, then ran a client on stargrid02.rcf.bnl.gov sending UDP packets with a maximum rate of 1000 Mbps.

[olson@dlolson star]$ iperf -s -p 40050 -t 60 -i 1 -u

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

[ 3] 40.0-41.0 sec 78.3 MBytes 657 Mbits/sec 0.012 ms 0/55826 (0%)

[ 3] 41.0-42.0 sec 78.4 MBytes 658 Mbits/sec 0.020 ms 0/55946 (0%)

[ 3] 42.0-43.0 sec 78.4 MBytes 658 Mbits/sec 0.020 ms 0/55911 (0%)

[ 3] 43.0-44.0 sec 76.8 MBytes 644 Mbits/sec 0.023 ms 0/54779 (0%)

[ 3] 44.0-45.0 sec 78.4 MBytes 657 Mbits/sec 0.016 ms 7/55912 (0.013%)

[ 3] 45.0-46.0 sec 78.4 MBytes 658 Mbits/sec 0.016 ms 0/55924 (0%)

[ 3] 46.0-47.0 sec 78.3 MBytes 656 Mbits/sec 0.024 ms 0/55820 (0%)

[ 3] 47.0-48.0 sec 78.3 MBytes 657 Mbits/sec 0.016 ms 0/55870 (0%)

[stargrid02] ~/> iperf -c dlolson.lbl.gov -t 60 -i 1 -p 40050 -u -b 1000M

[ ID] Interval Transfer Bandwidth

[ 3] 40.0-41.0 sec 78.3 MBytes 657 Mbits/sec

[ 3] 41.0-42.0 sec 78.4 MBytes 658 Mbits/sec

[ 3] 42.0-43.0 sec 78.4 MBytes 657 Mbits/sec

[ 3] 43.0-44.0 sec 76.8 MBytes 644 Mbits/sec

[ 3] 44.0-45.0 sec 78.4 MBytes 657 Mbits/sec

[ 3] 45.0-46.0 sec 78.4 MBytes 658 Mbits/sec

[ 3] 46.0-47.0 sec 78.2 MBytes 656 Mbits/sec

[ 3] 47.0-48.0 sec 78.3 MBytes 657 Mbits/sec

Additional notes:

- iperf server at BNL would not answer, though we used port 29000 with GLOBUS_TCP_PORT_RANGE=20000,30000

- iperf server at PDSF (pc2608) would not answer either.

25 August 2008 BNL - PDSF iperf results, after moving pdsf grid nodes to 1 GigE net

(pdsfgrid5) iperf % build/bin/iperf -s -p 40050 -t 20 -i 1 -u

------------------------------------------------------------

Server listening on UDP port 40050

Receiving 1470 byte datagrams

UDP buffer size: 64.0 KByte (default)

------------------------------------------------------------

[ 3] local 128.55.36.73 port 40050 connected with 130.199.6.168 port 56027

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

[ 3] 0.0- 1.0 sec 78.5 MBytes 659 Mbits/sec 0.017 ms 14/56030 (0.025%)

[ 3] 0.0- 1.0 sec 44 datagrams received out-of-order

[ 3] 1.0- 2.0 sec 74.1 MBytes 621 Mbits/sec 0.024 ms 8/52834 (0.015%)

[ 3] 1.0- 2.0 sec 8 datagrams received out-of-order

[ 3] 2.0- 3.0 sec 40.4 MBytes 339 Mbits/sec 0.023 ms 63/28800 (0.22%)

[ 3] 2.0- 3.0 sec 63 datagrams received out-of-order

[ 3] 3.0- 4.0 sec 73.0 MBytes 613 Mbits/sec 0.016 ms 121/52095 (0.23%)

[ 3] 3.0- 4.0 sec 121 datagrams received out-of-order

[ 3] 4.0- 5.0 sec 76.6 MBytes 643 Mbits/sec 0.020 ms 18/54661 (0.033%)

[ 3] 4.0- 5.0 sec 18 datagrams received out-of-order

[ 3] 5.0- 6.0 sec 76.8 MBytes 644 Mbits/sec 0.015 ms 51/54757 (0.093%)

[ 3] 5.0- 6.0 sec 51 datagrams received out-of-order

[ 3] 6.0- 7.0 sec 77.1 MBytes 647 Mbits/sec 0.016 ms 40/55012 (0.073%)

[ 3] 6.0- 7.0 sec 40 datagrams received out-of-order

[ 3] 7.0- 8.0 sec 74.9 MBytes 628 Mbits/sec 0.040 ms 64/53414 (0.12%)

[ 3] 7.0- 8.0 sec 64 datagrams received out-of-order

[ 3] 8.0- 9.0 sec 76.0 MBytes 637 Mbits/sec 0.021 ms 36/54189 (0.066%)

[ 3] 8.0- 9.0 sec 36 datagrams received out-of-order

[ 3] 9.0-10.0 sec 75.6 MBytes 634 Mbits/sec 0.018 ms 21/53931 (0.039%)

[ 3] 9.0-10.0 sec 21 datagrams received out-of-order

[ 3] 10.0-11.0 sec 54.7 MBytes 459 Mbits/sec 0.038 ms 20/38994 (0.051%)

[ 3] 10.0-11.0 sec 20 datagrams received out-of-order

[ 3] 11.0-12.0 sec 75.6 MBytes 634 Mbits/sec 0.019 ms 37/53939 (0.069%)

[ 3] 11.0-12.0 sec 37 datagrams received out-of-order

[ 3] 12.0-13.0 sec 74.1 MBytes 622 Mbits/sec 0.056 ms 4/52888 (0.0076%)

[ 3] 12.0-13.0 sec 24 datagrams received out-of-order

[ 3] 13.0-14.0 sec 75.4 MBytes 633 Mbits/sec 0.026 ms 115/53803 (0.21%)

[ 3] 13.0-14.0 sec 115 datagrams received out-of-order

[ 3] 14.0-15.0 sec 77.1 MBytes 647 Mbits/sec 0.038 ms 50/54997 (0.091%)

[ 3] 14.0-15.0 sec 50 datagrams received out-of-order

[ 3] 15.0-16.0 sec 75.2 MBytes 631 Mbits/sec 0.016 ms 26/53654 (0.048%)

[ 3] 15.0-16.0 sec 26 datagrams received out-of-order

[ 3] 16.0-17.0 sec 78.2 MBytes 656 Mbits/sec 0.039 ms 39/55793 (0.07%)

[ 3] 16.0-17.0 sec 39 datagrams received out-of-order

[ 3] 17.0-18.0 sec 76.6 MBytes 643 Mbits/sec 0.017 ms 35/54635 (0.064%)

[ 3] 17.0-18.0 sec 35 datagrams received out-of-order

[ 3] 18.0-19.0 sec 76.5 MBytes 641 Mbits/sec 0.039 ms 23/54544 (0.042%)

[ 3] 18.0-19.0 sec 23 datagrams received out-of-order

[ 3] 19.0-20.0 sec 78.0 MBytes 654 Mbits/sec 0.017 ms 1/55624 (0.0018%)

[ 3] 19.0-20.0 sec 29 datagrams received out-of-order

[ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec 0.018 ms 19/1044598 (0.0018%)

[ 3] 0.0-20.0 sec 864 datagrams received out-of-order

[stargrid02] ~/> iperf -c pdsfgrid5.nersc.gov -t 20 -i 1 -p 40050 -u -b 1000M

------------------------------------------------------------

Client connecting to pdsfgrid5.nersc.gov, UDP port 40050

Sending 1470 byte datagrams

UDP buffer size: 128 KByte (default)

------------------------------------------------------------

[ 3] local 130.199.6.168 port 56027 connected with 128.55.36.73 port 40050

[ ID] Interval Transfer Bandwidth

[ 3] 0.0- 1.0 sec 78.5 MBytes 659 Mbits/sec

[ 3] 1.0- 2.0 sec 74.1 MBytes 621 Mbits/sec

[ 3] 2.0- 3.0 sec 40.4 MBytes 339 Mbits/sec

[ 3] 3.0- 4.0 sec 73.0 MBytes 613 Mbits/sec

[ 3] 4.0- 5.0 sec 76.6 MBytes 643 Mbits/sec

[ 3] 5.0- 6.0 sec 76.8 MBytes 644 Mbits/sec

[ 3] 6.0- 7.0 sec 77.1 MBytes 647 Mbits/sec

[ 3] 7.0- 8.0 sec 74.8 MBytes 628 Mbits/sec

[ 3] 8.0- 9.0 sec 76.0 MBytes 637 Mbits/sec

[ 3] 9.0-10.0 sec 75.6 MBytes 634 Mbits/sec

[ 3] 10.0-11.0 sec 54.6 MBytes 458 Mbits/sec

[ 3] 11.0-12.0 sec 75.7 MBytes 635 Mbits/sec

[ 3] 12.0-13.0 sec 74.1 MBytes 622 Mbits/sec

[ 3] 13.0-14.0 sec 75.4 MBytes 633 Mbits/sec

[ 3] 14.0-15.0 sec 77.1 MBytes 647 Mbits/sec

[ 3] 15.0-16.0 sec 75.2 MBytes 631 Mbits/sec

[ 3] 16.0-17.0 sec 78.2 MBytes 656 Mbits/sec

[ 3] 17.0-18.0 sec 76.6 MBytes 643 Mbits/sec

[ 3] 18.0-19.0 sec 76.4 MBytes 641 Mbits/sec

[ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec

[ 3] Sent 1044598 datagrams

[ 3] Server Report:

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

[ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec 0.017 ms 19/1044598 (0.0018%)

[ 3] 0.0-20.0 sec 864 datagrams received out-of-order
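The summary line of the UDP test above can be cross-checked from the datagram counts alone. The Python sketch below uses the 1470-byte datagram size and the counts reported by iperf; the function name and the unit conventions (1 GByte = 2^30 bytes, 1 Mbit = 10^6 bits) are assumptions that happen to reproduce the reported figures.

```python
DATAGRAM_BYTES = 1470  # "Sending 1470 byte datagrams" in the client header


def udp_summary(lost, total, seconds, datagram_bytes=DATAGRAM_BYTES):
    """Derive loss, volume and rate from the Lost/Total datagram counts."""
    payload_bytes = total * datagram_bytes
    return {
        "loss_percent": 100.0 * lost / total,
        "gbytes": payload_bytes / 2**30,
        "mbits_per_sec": payload_bytes * 8 / seconds / 1e6,
    }


if __name__ == "__main__":
    # Server report above: 19/1044598 datagrams lost over 20 seconds.
    summary = udp_summary(lost=19, total=1044598, seconds=20)
    print(f"loss {summary['loss_percent']:.4f}%, "
          f"{summary['gbytes']:.2f} GBytes, "
          f"{summary['mbits_per_sec']:.0f} Mbits/sec")
```

The result matches the reported 0.0018% loss, 1.43 GBytes and 614 Mbits/sec.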

Transfers to/from Birmingham

Introduction

Transfers where either the source or the target is on the Birmingham cluster. I am keeping a log of these as they come up. I don't do them too often, so it will take a while to accumulate enough data points to discern any patterns.

| Date | Type | Size | Command | Duration | p | Aggregate rate | Rate per p | Source | Destination |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 2006.9.5 | DAQ | 40 GB | g-u-c | up to 12 hr | 3-5 | 1 MB/s | ~0.2 MB/s | pdsfgrid1,2,4 | rhilxs |
| 2006.10.6 | MuDst | 50 GB | g-u-c | 3-5 hr | 15 | ~3.5 MB/s | 0.25 MB/s | rhilxs | pdsfgrid2,4,5 |
| 2006.10.20 | event.root geant.root | 500 GB | g-u-c -nodcau | 38 hr | 9 | 3.7 MB/s | 0.41 MB/s | rhilxs | garchive |

Notes

g-u-c is just shorthand for globus-url-copy

'p' is the total number of simultaneous connections and is the sum of the parameter for g-u-c -p option for all the commands running together

e.g. 4 g-u-c commands with no -p option gives total p=4 but 3 g-u-c commands with -p 5 gives total p=15
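The bookkeeping behind the table can be written down in a few lines. This Python sketch follows the definition of 'p' given in the notes above; the function names are illustrative, and the size-to-rate conversion assumes sizes in gigabytes with 1 GB = 1000 MB.

```python
def total_p(p_options):
    """Sum of connections over all concurrent g-u-c commands.

    p_options holds one entry per command: the -p value, or None when the
    command was run without -p (counted as a single connection).
    """
    return sum(1 if p is None else p for p in p_options)


def rate_per_connection(aggregate_mb_s, p):
    """Aggregate rate divided by the total number of connections."""
    return aggregate_mb_s / p


def aggregate_rate_mb_s(size_gb, hours):
    """Average rate in MB/s for a transfer of size_gb lasting the given hours."""
    return size_gb * 1000 / (hours * 3600)


if __name__ == "__main__":
    print(total_p([None] * 4))                   # 4 commands, no -p option
    print(total_p([5, 5, 5]))                    # 3 commands with -p 5
    print(f"{rate_per_connection(3.7, 9):.2f}")  # 2006.10.20 row
    print(f"{aggregate_rate_mb_s(500, 38):.2f}") # 500 GB in 38 hr
```

For the 2006.10.20 row, 500 GB in 38 hours gives about 3.7 MB/s, or about 0.41 MB/s per connection with p=9, in agreement with the table.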

Links

May be useful for the beginner?

PDSF Grid info