Grid and Cloud

These pages are dedicated to the GRID effort in STAR as part of our participation in the Open Science Grid.

Our previous pages are being migrated tot his area. Please find the previous content here.

Articles and publications

- October 10th 2012 - The Next Step of HPC in the Cloud

- July 28th 2011 - Magellan Tackles the Mysterious Proton Spin

- June 1st 2011 - The case of the missing proton spin

- March 24th 2010 - Video of the week - RHIC’s hot quark soup

- May 29th 2009 - Nimbus cloud project saves brainiacs' bacon

- May 2nd 2009 - Number Crunching Made Easy

- April 8th 2009 - Feature - Clouds make way for STAR to shine (also as OSG highlight)

- April 2nd 2009 - Nimbus and cloud computing meet STAR production demands (see also HPCwire)

- August 13th 2008 - Feature - STAR of the show

- April 30th 2008 - The new Nimbus: first steps in the clouds

- September 2007 - CHEP 2007 OSG SUMS Workspace Demo (also attached)

- August 7th 2006 - SunGrid and the STAR Experiment (flyer attached)

- June 2006 - From Data To Discovery

- July 27th 2005 - Sao Paulo Joins STAR GRID

- October 25th, 2005 - Probing the Perfect Liquid with the STAR Grid

- June 14th 2006 - SUMS schedules MIT

- June 28th 2006 - São Paulo Seizes Grid Initiative

- March 19th 2004 - Physics results from the STAR experiment at RHIC benefit from production Grid data services

- September 25th 2002 - STAR/HRM achieves robust, effective, Terabyte-scale multi-file replication

Data Management

The data management section will have information on data transfer and development/consolidation of tools used in STAR for Grid data transfer.

SRM/DRM Testing June/July 2007

SRM/DRM Testing June/July 2007

Charge

From email:

We had a discussion with Arie Shoshani and group pertaining

to the use of SRM (client and site caching) in our analysis

scenario. We agreed we would proceed with the following plan,

giving ourselves the best shot at achieving the milestone we

have with the OSG.

- first of all, we will try to restore the SRM service both at

LBNL and BNL . This will require

* Disk space for the SRM cache at LBNL - 500 GB is plenty

* Disk space for the SRM cache at BNL - same size is fine

- we hope for a test of transfer to be passed to the OSG troubleshooting

team who will stress test the data transfer as we have defined i.e.

* size test and long term stability - we would like to define a test

where each job would transfer 500 MB of data from LBNL to BNL

We would like 100 jobs submitted at a time

For the test to be run for at least a few days

* we would like to be sure the test includes burst of

100 requests transfer /mn to SRM

+ the success matrix

. how many time the service had to be restarted

. % success on data transfer

+ we need to document the setup i.e.number of streams

(MUST be greater than 1)

- whenever this test is declared successful, we would use

the deployment in our simulation production in real

production mode - the milestone would then behalf

achieved

- To make our milestone fully completed, we would reach

+1 site. The question was which one?

* Our plan is to move to SRM v2.2 for this test - this

is the path which is more economical in terms of manpower,

OSG deliverables and allow for minimal reshuffling of

manpower and current assignment hence increasing our

chances for success.

* FermiGrid would not have SRM 2.2 however

=> We would then UIC for this, possibly leveraging OSG

manpower to help with setting up a fully working

environment.

Our contact people would be

- Doug Olson for LBNL working with Alex Sim, Andrew Rose,

Eric Hjort (whenever necessary) and Alex Sim

* The work with the OSG troubleshooting team will be

coordinated from LBNL side

* We hope Andrew/Eric will work along with Alex to

set the test described above

- Wayne Betts for access to the infrastructure at BNL

(assistance from everyone to clean the space if needed)

- Olga Barannikova will be our contact for UIC - we will

come back to this later according to the strawman plan

above

As a reminder, I have discussed with Ruth that at

this stage, and after many years of work which are bringing

exciting and encouraging sign of success (the recent production

stability being one) I have however no intent to move, re-scope

or re-schedule our milestone. Success of this milestone is path

forward to make Grid computing part of our plan for the future.

As our visit was understood and help is mobilize, we clearly

see that success is reachable.

I count on all of you for full assistance with

this process.

Thank you,

--

,,,,,

( o o )

--m---U---m--

Jerome

Test Plan (Alex S., 14 June)

Hi all,

The following plan will be performed for STAR SRM test by SDM group with

BeStMan SRM v2.2.

Andrew Rose will duplicate, in the mean time, the successful analysis case

that Eric Hjort had previously.

1. small local setup

1.1. small number of analysis jobs will be submitted directly to PDSF job

queue.

1.2. A job will transfer files from datagrid.lbl.gov via gsiftp into the

PDSF project working cache.

1.3. a fake analysis will be performed to produce a result file.

1.4 the job will issue srm-client to call BeStman to transfer the result

file out to datagrid.lbl.gov via gsiftp.

2. small remote setup

2.1. small number of analysis jobs will be submitted directly to PDSF job

queue.

2.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

2.3. a fake analysis will be performed to produce a result file.

2.4 the job will issue srm-client to call BeStman to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

3. large local setup

3.1. about 100-200 analysis jobs will be submitted directly to PDSF job

queue.

3.2. A job will transfer files from datagrid.lbl.gov via gsiftp into the

PDSF project working cache.

3.3. a fake analysis will be performed to produce a result file.

3.4 the job will issue srm-client to call BeStman to transfer the result

file out to datagrid.lbl.gov via gsiftp.

4. large remote setup

4.1. about 100-200 analysis jobs will be submitted directly to PDSF job

queue.

4.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

4.3. a fake analysis will be performed to produce a result file.

4.4 the job will issue srm-client to call BeStman to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

5. small remote sums setup

5.1. small number of analysis jobs will be submitted to SUMS.

5.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

5.3. a fake analysis will be performed to produce a result file.

5.4 the job will issue srm-client to call BeStman to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

6. large remote setup

6.1. about 100-200 analysis jobs will be submitted to SUMS.

6.2. A job will transfer files from stargrid?.rcf.bnl.gov via gsiftp into

the PDSF project working cache.

6.3. a fake analysis will be performed to produce a result file.

6.4 the job will issue srm-client to call BeStman to transfer the result

file out to stargrid?.rcf.bnl.gov via gsiftp.

7. have Andrew and Lidia use the setup #6 to test with real analysis jobs

8. have a setup #5 on UIC and test

9. have a setup #6 on UIC and test

10. have Andrew and Lidia use the setup #9 to test with real analysis jobs

Any questions? I'll let you know when things are in progress.

-- Alex

asim at lbl dot gov

Site Bandwidth Testing

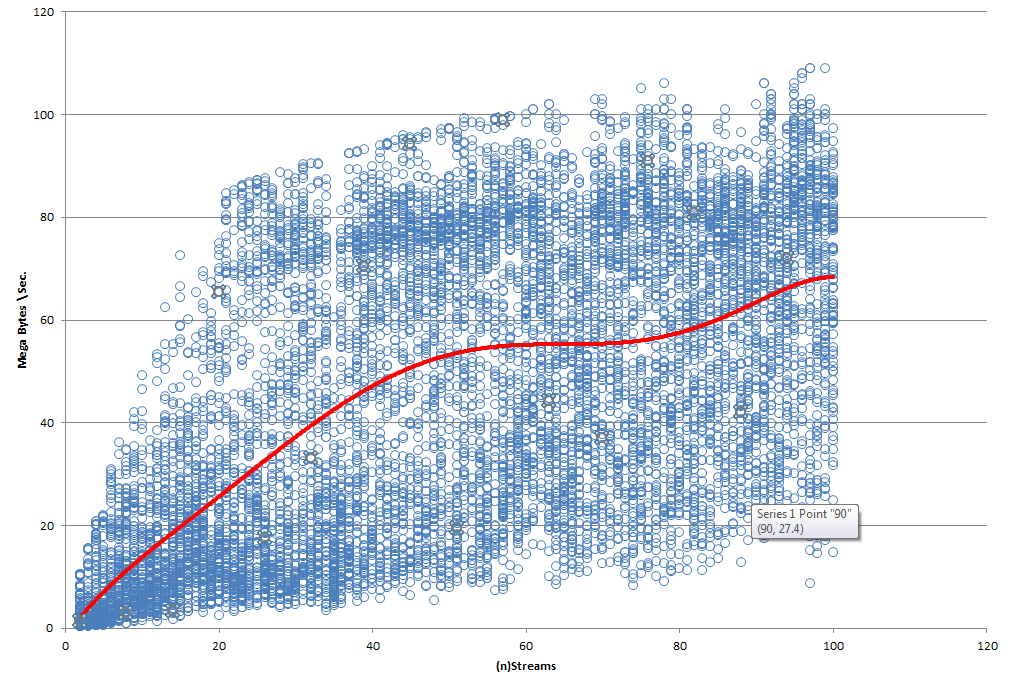

The above is a bandwidth test done using the tool iperf (version iperf_2.0.2-4_i386) between the site KISTI (ui03.sdfarm.kr) and BNL (stargrid03) around the beginning of the year 2014. The connection was noted to collapse (drop to zero) a few times during testing before a full plot could be prepared.

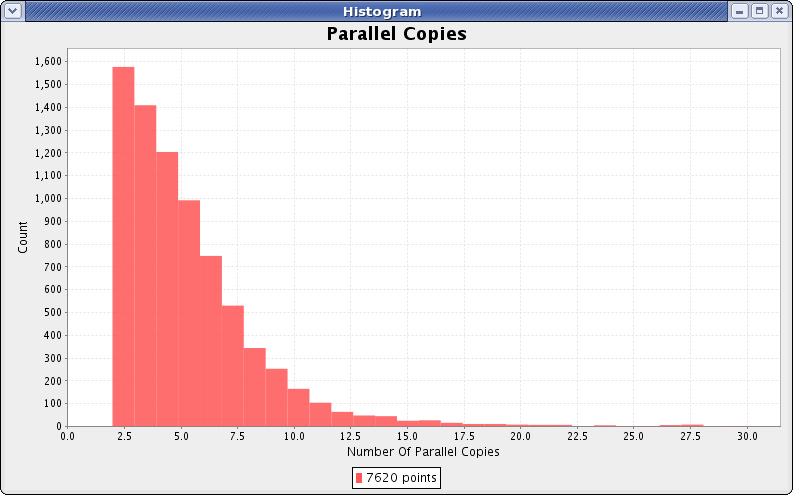

The above histogram shows the number of simultaneous copies in one minute bins, extracted from a few week segment of the actual production at KISTI. Solitary copies are suppressed because they overwhelm the plot. Copies represent less than 1% of the jobs total run time.

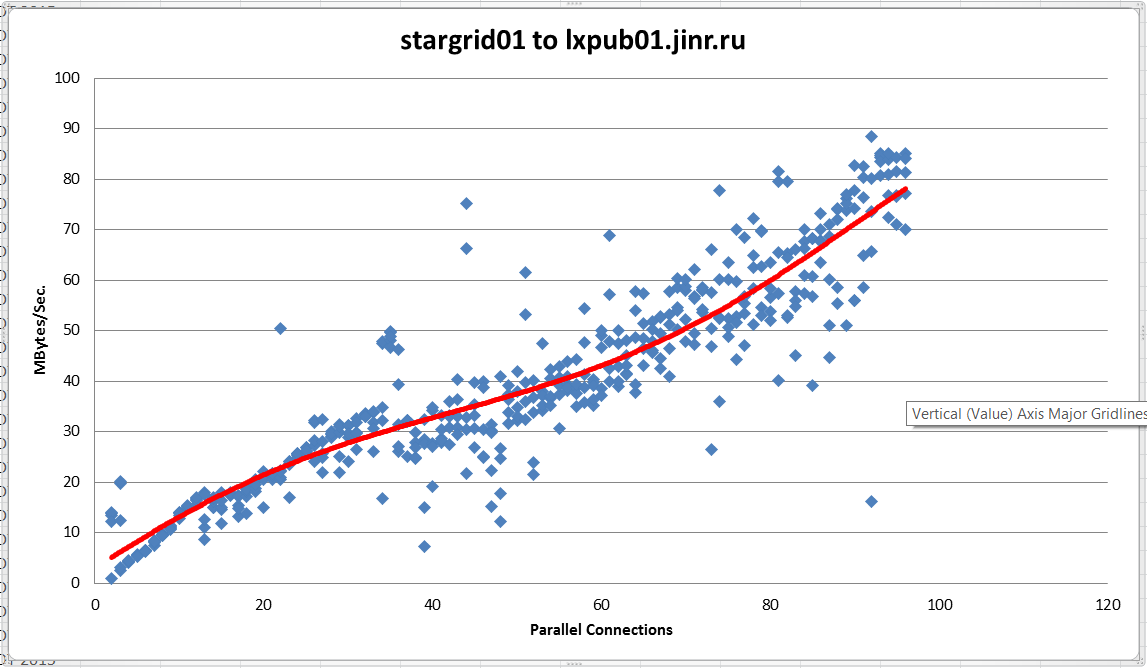

The above is a bandwidth test done using the tool iperf (version iperf_2.0.2-4_i386) between the site Dubna (lxpub01.jinr.ru) and BNL (stargrid01) on 8/14/2015. After exactly 97 parallel connections the connection was noted to collapse with many parallel processes timing out, this behavior was consistent across three attempts but was not present at any lower number of parallel connections. It is suspected that a soft limit is placed on the number of parallel processes somewhere.The raw data is attached at the bottom.

The 2006 STAR analysis scenario

This page will describe in detail the STAR analysis scenario as it was in ~2006. This scenario involves SUMS grid job submission at RCF through condor-g to PDSF using SRM's at both ends to transfer input and output files in a managed fashion.

Transfer BNL/PDSF, summer 2009

This page will document the data transfers from/to PDSF to/from BNL in the summer/autumn of 2009.

October 17, 2009

I repeated earlier tests I had run with Dan Gunter (see below "Previous results"). It takes onlt 3 streams to saturate the 1GigE network interface of stargrid04.

[stargrid04] ~/> globus-url-copy -vb file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

2389704704 bytes 23.59 MB/sec avg 37.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 8388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

1569718272 bytes 35.39 MB/sec avg 39.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

1607467008 bytes 35.44 MB/sec avg 38.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 2 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

3414425600 bytes 72.36 MB/sec avg 63.95 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 4 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

8569487360 bytes 108.97 MB/sec avg 111.80 MB/sec inst

[stargrid04] ~/> globus-url-copy -p 3 -vb -tcp-bs 4388608 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null

5576065024 bytes 106.36 MB/sec avg 109.70 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

625999872 bytes 9.95 MB/sec avg 19.01 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

1523580928 bytes 30.27 MB/sec avg 38.00 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -p 2 -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

8712617984 bytes 71.63 MB/sec avg 75.87 MB/sec inst

[stargrid04] ~/> globus-url-copy -vb -p 3 -tcp-bs 4388608 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null

7064518656 bytes 102.08 MB/sec avg 111.88 MB/sec inst

October 15, 2009 - evening

After replacing network card to 10GigE so that we could plug directly into the core switch quicktest gives:

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005 ------------------------------------------------------------ Client connecting to pdsfsrm.nersc.gov, TCP port 60005 TCP window size: 8.00 MByte ------------------------------------------------------------ [ 3] local 130.199.6.109 port 50291 connected with 128.55.36.74 port 60005 [ ID] Interval Transfer Bandwidth [ 3] 0.0-120.0 sec 4.39 GBytes 314 Mbits/sec [ 3] MSS size 1368 bytes (MTU 1408 bytes, unknown interface)

More work tomorrow.

October 15, 2009

Comparison between the signal from an optical tap at the NERSC border with the tcpdump on the node showed most of the loss happening between the border and pdsfsrm.nersc.gov.

More work was done to optimize single-stream throughput.

- pdsfsrm was moved from a switch that serves the rack where it resides to a switch that is one level up and closer to the border

- a configuration of the forcedeth driver was changed (options forcedeth optimization_mode=1 poll_interval=100 set in /etc/modprobe.conf).

Changes resulted in an improved throughput but it is stillfar from what should be (see details below). We are going to insert a 10 GigE card into the node and move it even closer to the border.

Here are the results with those buffer memory settings as of the morning 10/15/2009. There is a header from the first -------------------------------------------------------------------------

measurement and then results from a few tests run minutes apart.

-------------------------------------------------------------------------

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

-------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005 TCP window size: 8.00 MByte

-------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 44070 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth [ 3] 0.0-120.0 sec 1.81 GBytes 129 Mbits/sec

[ 3] 0.0-120.0 sec 3.30 GBytes 236 Mbits/sec

[ 3] 0.0-120.0 sec 1.86 GBytes 133 Mbits/sec

[ 3] 0.0-120.0 sec 2.04 GBytes 146 Mbits/sec

[ 3] 0.0-120.0 sec 3.61 GBytes 258 Mbits/sec

[ 3] 0.0-120.0 sec 1.88 GBytes 135 Mbits/sec

[ 3] 0.0-120.0 sec 3.35 GBytes 240 Mbits/sec

Then I restored the "dtn" buffer memory settings - again morning 10/15/2009 and I got similar if not worse results:

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

-------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005 TCP window size: 8.00 MByte

-------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 44361 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth [ 3] 0.0-120.0 sec 2.34 GBytes 168 Mbits/sec

[ 3] 0.0-120.0 sec 1.42 GBytes 101 Mbits/sec

[ 3] 0.0-120.0 sec 2.08 GBytes 149 Mbits/sec

[ 3] 0.0-120.0 sec 2.13 GBytes 152 Mbits/sec

[ 3] 0.0-120.0 sec 1.76 GBytes 126 Mbits/sec

[ 3] 0.0-120.0 sec 1.42 GBytes 102 Mbits/sec

[ 3] 0.0-120.0 sec 2.07 GBytes 148 Mbits/sec

[ 3] 0.0-120.0 sec 2.07 GBytes 148 Mbits/sec

And here if for comparison and to show how things vary with more or less same load on pdsfgrid2 results for the "dtn" settings

just like above from 10/14/2009 afternoon.--------------------------------------------------------------------------------------

[stargrid04] ~/> iperf -c pdsfsrm.nersc.gov -m -w 8388608 -t 120 -p 60005

--------------------------------------------------------------------------------------

Client connecting to pdsfsrm.nersc.gov, TCP port 60005 TCP window size: 8.00 MByte

--------------------------------------------------------------------------------------

[ 3] local 130.199.6.109 port 34366 connected with 128.55.36.74 port 60005

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-120.0 sec 1.31 GBytes 93.5 Mbits/sec

[ 3] 0.0-120.0 sec 1.58 GBytes 113 Mbits/sec

[ 3] 0.0-120.0 sec 1.75 GBytes 126 Mbits/sec

[ 3] 0.0-120.0 sec 1.88 GBytes 134 Mbits/sec

[ 3] 0.0-120.0 sec 2.56 GBytes 183 Mbits/sec

[ 3] 0.0-120.0 sec 2.53 GBytes 181 Mbits/sec

[ 3] 0.0-120.0 sec 3.25 GBytes 232 Mbits/sec

Since the "80Mb/s or worse" persisted for a long time and was measured on various occasions the new numbers are due to the forceth param or the switch change. Most probably it was the switch. It is also true that the "dtn" settings were able to cope slightly better with the location on the Dell switch but seem to be not doing much when pdsfgrid2 is plugged directly into the "old pdsfcore" switch.

October 2, 2009

Notes on third party srm-copy to PDSF:

1) on PDSF interactive node, you need to set up your environment:

source /usr/local/pkg/OSG-1.2/setup.csh

2) srm-copy (recursive) has the following form:

srm-copy gsiftp://stargrid04.rcf.bnl.gov//star/institutions/lbl_prod/andrewar/transfer/reco/production_dAu/ReversedFullField/P08ie/2008/023b/ srm://pdsfsrm.nersc.gov:62443/srm/v2/server\?SFN=/eliza9/starprod/reco/production_dAu/ReversedFullField/P08ie/2008/023/ -recursive -td /eliza9/starprod/reco/production_dAu/ReversedFullField/P08ie/2008/023/

October 1, 2009

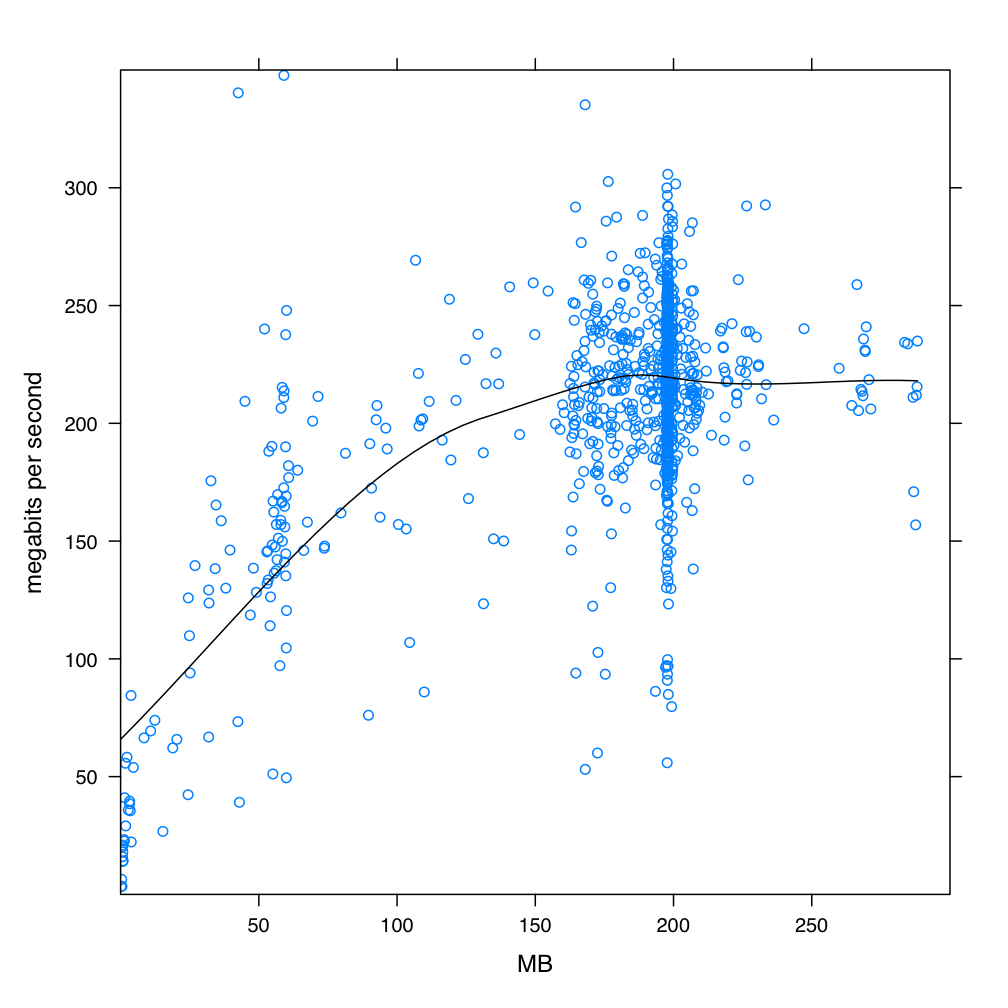

We conducted srm-copy tests between RCF and PDSF this week. Initially, the rates we saw for a third party srm-copy between RCF (stargrid04) and PDSF (pdsfsrm) are detailed in plots from Dan:

September 24, 2009

We updated the transfer proceedure to make use of the OSG automated monitoring tools. Perviously, the transfers ran between stargrid04 and one of the NERSC data transfer nodes. To take advantage of Dan's automated log harvesting, we're switiching the target to pdsfsrm.nersc.gov.

Transfers between stargrid04 and pdsfsrm are fairly stable at ~20MBytes/sec (as reported by the "-vb" option in the globus-url-copy). The command used is of the form:

globus-url-copy -r -p 15 gsiftp://stargrid04.rcf.bnl.gov/[dir]/ gisftp://pdsfsrm.nersc.gov/[target dir]/

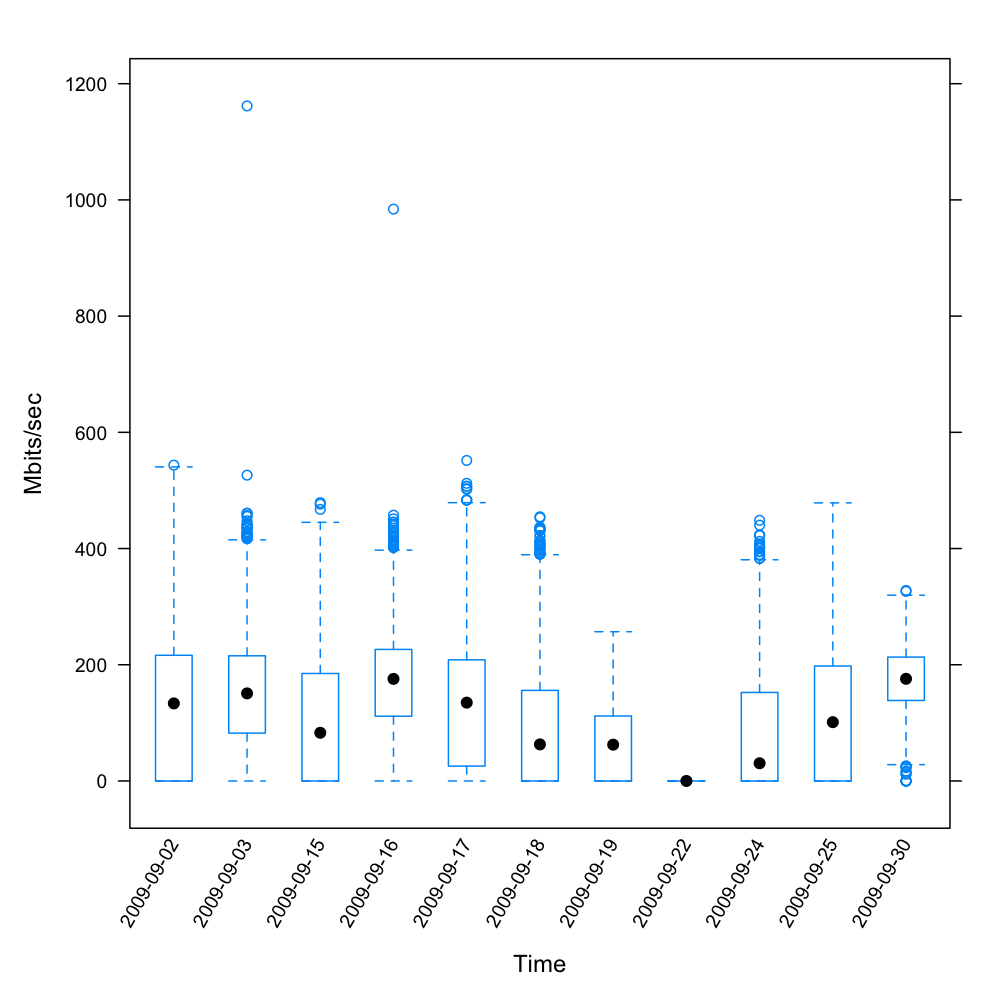

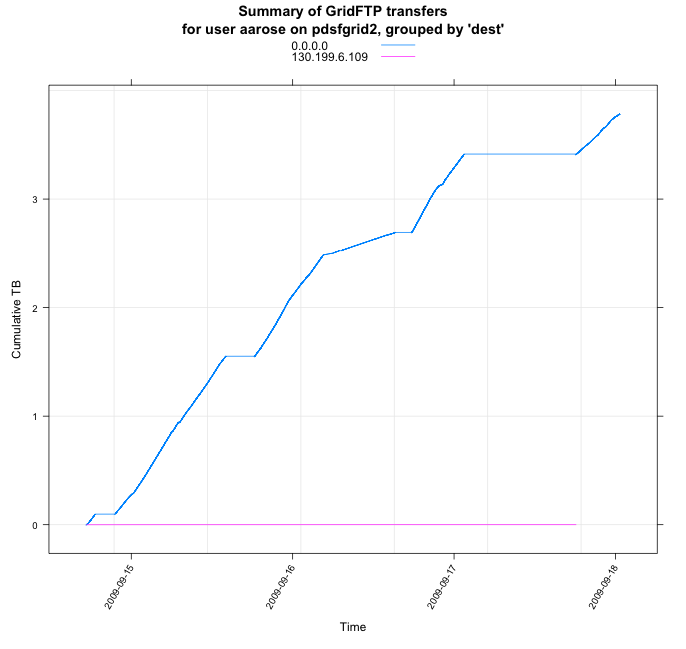

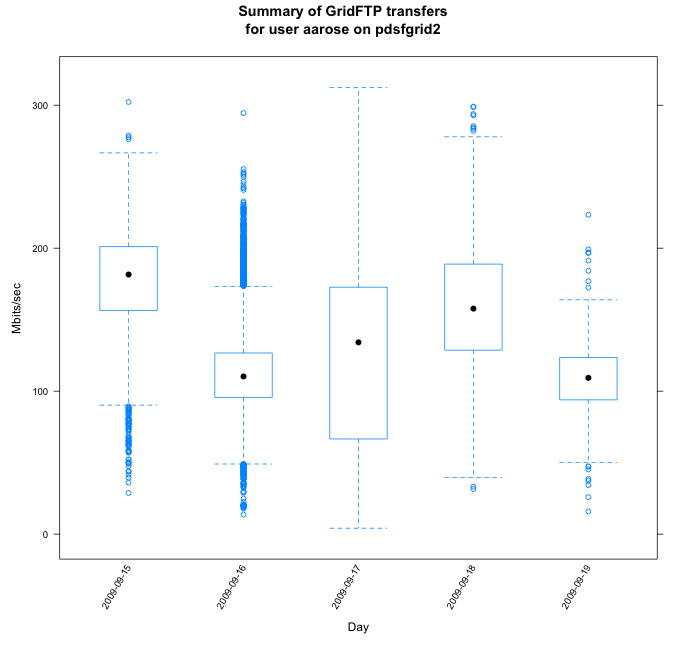

Plots from the first set using the pdsfsrm node:

The most recent rates seen are given in Dan's plots from Sept. 23rd:

So, the data transfer is progressing at ~100-200 Mb/s. We will next compare to rates using the new BeStMan installation at PDSF.

Previous results

Tests have been repeated as a new node (stargrid10) became available. We ran from the SRM end host at PDSF pdsfgrid2.nersc.gov to the new stargrid10.rhic.bnl.gov endpoint at BNL . Because of firewalls we could only run from PDSF to BNL, not the other way. A 60-second test got about 75Mb/s. This number is consistent with earlier iperf tests between stargrid02 and pdsfgrid2.

globus-url-copy with 8 streams would go up 400Mb/s and 16 streams 550MB/s. Also with stargrid10, the transfer rates would be the same to and from BNL.

Details below.

pdsfgrid2 59% iperf -s -f m -m -p 60005 -w 8388608 -t 60 -i 2

------------------------------------------------------------

Server listening on TCP port 60005

TCP window size: 16.0 MByte (WARNING: requested 8.00 MByte)

------------------------------------------------------------

[ 4] local 128.55.36.74 port 60005 connected with 130.199.6.208 port 36698

[ 4] 0.0- 2.0 sec 13.8 MBytes 57.9 Mbits/sec

[ 4] 2.0- 4.0 sec 19.1 MBytes 80.2 Mbits/sec

[ 4] 4.0- 6.0 sec 4.22 MBytes 17.7 Mbits/sec

[ 4] 6.0- 8.0 sec 0.17 MBytes 0.71 Mbits/sec

[ 4] 8.0-10.0 sec 2.52 MBytes 10.6 Mbits/sec

[ 4] 10.0-12.0 sec 16.7 MBytes 70.1 Mbits/sec

[ 4] 12.0-14.0 sec 17.4 MBytes 73.1 Mbits/sec

[ 4] 14.0-16.0 sec 16.1 MBytes 67.7 Mbits/sec

[ 4] 16.0-18.0 sec 15.8 MBytes 66.4 Mbits/sec

[ 4] 18.0-20.0 sec 17.5 MBytes 73.6 Mbits/sec

[ 4] 20.0-22.0 sec 17.6 MBytes 73.7 Mbits/sec

[ 4] 22.0-24.0 sec 18.1 MBytes 75.8 Mbits/sec

[ 4] 24.0-26.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 26.0-28.0 sec 19.3 MBytes 80.9 Mbits/sec

[ 4] 28.0-30.0 sec 13.8 MBytes 58.1 Mbits/sec

[ 4] 30.0-32.0 sec 14.5 MBytes 60.7 Mbits/sec

[ 4] 32.0-34.0 sec 14.7 MBytes 61.8 Mbits/sec

[ 4] 34.0-36.0 sec 14.6 MBytes 61.2 Mbits/sec

[ 4] 36.0-38.0 sec 17.2 MBytes 72.2 Mbits/sec

[ 4] 38.0-40.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 40.0-42.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 42.0-44.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 44.0-46.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 46.0-48.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 48.0-50.0 sec 19.1 MBytes 79.9 Mbits/sec

[ 4] 50.0-52.0 sec 19.3 MBytes 80.9 Mbits/sec

[ 4] 52.0-54.0 sec 19.4 MBytes 81.3 Mbits/sec

[ 4] 54.0-56.0 sec 19.4 MBytes 81.5 Mbits/sec

[ 4] 56.0-58.0 sec 19.5 MBytes 81.6 Mbits/sec

[ 4] 58.0-60.0 sec 19.5 MBytes 81.7 Mbits/sec

[ 4] 0.0-60.4 sec 489 MBytes 68.0 Mbits/sec

[ 4] MSS size 1368 bytes (MTU 1408 bytes, unknown interface)

The client was on stargrid10.

on stargrid10

from stargrid10 to pdsfgrid2:

[stargrid10] ~/> globus-url-copy -vb file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

513802240 bytes 7.57 MB/sec avg 9.09 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 4 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

1863843840 bytes 25.39 MB/sec avg 36.25 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 6 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

3354394624 bytes 37.64 MB/sec avg 44.90 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 8 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

5016649728 bytes 47.84 MB/sec avg 57.35 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 12 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

5588647936 bytes 62.70 MB/sec avg 57.95 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 16 file:///dev/zero gsiftp://pdsfgrid2.nersc.gov/dev/null Source: file:///dev/ Dest: gsiftp://pdsfgrid2.nersc.gov/dev/

zero -> null

15292432384 bytes 74.79 MB/sec avg 65.65 MB/sec inst

Cancelling copy...

and on stargrid10 the other way, from pdsfgrid2 to stargrid10 (similar although slightly better)

[stargrid10] ~/> globus-url-copy -vb gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null Source: gsiftp://pdsfgrid2.nersc.gov/dev/ Dest: file:///dev/

zero -> null

1693450240 bytes 11.54 MB/sec avg 18.99 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 4 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null Source: gsiftp://pdsfgrid2.nersc.gov/dev/ Dest: file:///dev/

zero -> null

12835618816 bytes 45.00 MB/sec avg 73.50 MB/sec inst

Cancelling copy...

[stargrid10] ~/> globus-url-copy -vb -p 8 gsiftp://pdsfgrid2.nersc.gov/dev/zero file:///dev/null Source: gsiftp://pdsfgrid2.nersc.gov/dev/ Dest: file:///dev/

zero -> null

14368112640 bytes 69.20 MB/sec avg 100.50 MB/sec inst

And now on pdsfgrid2 from pfsfgrid2 to stargrid10 (similar to the result for 4 stream in same direction above)

pdsfgrid2 70% globus-url-copy -vb -p 4 file:///dev/zero gsiftp://stargrid10.rcf.bnl.gov/dev/null Source: file:///dev/ Dest: gsiftp://stargrid10.rcf.bnl.gov/dev/

zero -> null

20869021696 bytes 50.39 MB/sec avg 73.05 MB/sec inst

Cancelling copy...

and to stargrid02, really, really bad. but since the node is going away we won't be investigating the mistery.

pdsfgrid2 71% globus-url-copy -vb -p 4 file:///dev/zero gsiftp://stargrid02.rcf.bnl.gov/dev/null Source: file:///dev/ Dest: gsiftp://stargrid02.rcf.bnl.gov/dev/

zero -> null

275513344 bytes 2.39 MB/sec avg 2.40 MB/sec inst

Cancelling copy...

12 Mar 2009

Baseline from bwctl from SRM end host at PDSF -- pdsfgrid2.nersc.gov -- to a perfsonar endpoint at BNL -- lhcmon.bnl.gov. Because of firewalls, could only run from PDSF to BNL, not the other way around. Last I checked, this direction was getting about 5Mb/s from SRM. A 60-second test to the perfsonar host got about 275Mb/s.

Summary: Current baseline from perfSONAR is more than 50X what we're seeing.

RECEIVER START

bwctl: exec_line: /usr/local/bin/iperf -B 192.12.15.23 -s -f m -m -p 5008 -w 8388608 -t 60 -i 2

bwctl: start_tool: 3445880257.865809

------------------------------------------------------------

Server listening on TCP port 5008

Binding to local address 192.12.15.23

TCP window size: 16.0 MByte (WARNING: requested 8.00 MByte)

------------------------------------------------------------

[ 14] local 192.12.15.23 port 5008 connected with 128.55.36.74 port 5008

[ 14] 0.0- 2.0 sec 7.84 MBytes 32.9 Mbits/sec

[ 14] 2.0- 4.0 sec 38.2 MBytes 160 Mbits/sec

[ 14] 4.0- 6.0 sec 110 MBytes 461 Mbits/sec

[ 14] 6.0- 8.0 sec 18.3 MBytes 76.9 Mbits/sec

[ 14] 8.0-10.0 sec 59.1 MBytes 248 Mbits/sec

[ 14] 10.0-12.0 sec 102 MBytes 428 Mbits/sec

[ 14] 12.0-14.0 sec 139 MBytes 582 Mbits/sec

[ 14] 14.0-16.0 sec 142 MBytes 597 Mbits/sec

[ 14] 16.0-18.0 sec 49.7 MBytes 208 Mbits/sec

[ 14] 18.0-20.0 sec 117 MBytes 490 Mbits/sec

[ 14] 20.0-22.0 sec 46.7 MBytes 196 Mbits/sec

[ 14] 22.0-24.0 sec 47.0 MBytes 197 Mbits/sec

[ 14] 24.0-26.0 sec 81.5 MBytes 342 Mbits/sec

[ 14] 26.0-28.0 sec 75.9 MBytes 318 Mbits/sec

[ 14] 28.0-30.0 sec 45.5 MBytes 191 Mbits/sec

[ 14] 30.0-32.0 sec 56.2 MBytes 236 Mbits/sec

[ 14] 32.0-34.0 sec 55.5 MBytes 233 Mbits/sec

[ 14] 34.0-36.0 sec 58.0 MBytes 243 Mbits/sec

[ 14] 36.0-38.0 sec 61.0 MBytes 256 Mbits/sec

[ 14] 38.0-40.0 sec 61.6 MBytes 258 Mbits/sec

[ 14] 40.0-42.0 sec 72.0 MBytes 302 Mbits/sec

[ 14] 42.0-44.0 sec 62.6 MBytes 262 Mbits/sec

[ 14] 44.0-46.0 sec 64.3 MBytes 270 Mbits/sec

[ 14] 46.0-48.0 sec 66.1 MBytes 277 Mbits/sec

[ 14] 48.0-50.0 sec 33.6 MBytes 141 Mbits/sec

[ 14] 50.0-52.0 sec 63.0 MBytes 264 Mbits/sec

[ 14] 52.0-54.0 sec 55.7 MBytes 234 Mbits/sec

[ 14] 54.0-56.0 sec 56.9 MBytes 239 Mbits/sec

[ 14] 56.0-58.0 sec 59.5 MBytes 250 Mbits/sec

[ 14] 58.0-60.0 sec 50.7 MBytes 213 Mbits/sec

[ 14] 0.0-60.3 sec 1965 MBytes 273 Mbits/sec

[ 14] MSS size 1448 bytes (MTU 1500 bytes, ethernet)

bwctl: stop_exec: 3445880322.405938

RECEIVER END

11 Feb 2009

By: Dan Gunter and Iwona Sakrejda

Measured between the STAR SRM hosts at NERSC/PDSF and Brookhaven:

- pdsfgrid2.nersc.gov (henceforth, "PDSF")

- stargrid02.rcf.bnl.gov (henceforth, "BNL")

Current data flow is from PDSF to BNL, but plans are to have data flow both ways.

All numbers are in megabits per second (Mb/s). Layer 4 (transport) protocol was TCP. Tests were at least 60 sec. long, 120 sec. for the higher numbers (to give it time to ramp up). All numbers are approximate, of course.

Both sides had recent Linux kernels with auto-tuning. The max buffer sizes were at Brian Tierney's recommended sizes.

From BNL to PDSF

Tool: iperf

- 1 stream: 50-60 Mb/s (but some dips around 5Mb/s)

- 8 or 16 streams: 250-300Mb/s aggregate

Tool: globus-url-copy (see PDSF to BNL for details). This was to confirm that globus-url-copy and iperf were roughly equivalent.

- 1 stream: ~70 Mb/s

- 8 streams: 250-300 Mb/s aggregate. Note: got same number with PDSF iptables turned off.

From PDSF to BNL

Tool: globus-url-copy (gridftp) -- iperf could not connect, which we proved was due to BNL restrictions by temporarily disabling IPtables at PDSF. To avoid any possible I/O effects, ran globus-url-copy from /dev/zero to /dev/null.

- 1 stream: 5 Mb/s

- 8 streams: 40 Mb/s

- 64 streams: 250-300 Mb/s aggregate. Note: got same number with PDSF iptables turned off.

18 Aug 2008 - BNL (stargrid02) - LBLnet (dlolson)

Below are results from iperf tests bnl to lbl. 650 Mbps with very little loss is quite good. For the uninformed (like me), we ran iperf server on dlolson.lbl.gov listening on port 40050, then ran client on stargrid02.rcf.bnl.gov sending udp packets with max rate of 1000 Mbps [olson@dlolson star]$ iperf -s -p 40050 -t 60 -i 1 -u [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 40.0-41.0 sec 78.3 MBytes 657 Mbits/sec 0.012 ms 0/55826 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 41.0-42.0 sec 78.4 MBytes 658 Mbits/sec 0.020 ms 0/55946 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 42.0-43.0 sec 78.4 MBytes 658 Mbits/sec 0.020 ms 0/55911 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 43.0-44.0 sec 76.8 MBytes 644 Mbits/sec 0.023 ms 0/54779 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 44.0-45.0 sec 78.4 MBytes 657 Mbits/sec 0.016 ms 7/55912 (0.013%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 45.0-46.0 sec 78.4 MBytes 658 Mbits/sec 0.016 ms 0/55924 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 46.0-47.0 sec 78.3 MBytes 656 Mbits/sec 0.024 ms 0/55820 (0%) [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 47.0-48.0 sec 78.3 MBytes 657 Mbits/sec 0.016 ms 0/55870 (0%) [stargrid02] ~/> iperf -c dlolson.lbl.gov -t 60 -i 1 -p 40050 -u -b 1000M [ ID] Interval Transfer Bandwidth [ 3] 40.0-41.0 sec 78.3 MBytes 657 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 41.0-42.0 sec 78.4 MBytes 658 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 42.0-43.0 sec 78.4 MBytes 657 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 43.0-44.0 sec 76.8 MBytes 644 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 44.0-45.0 sec 78.4 MBytes 657 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 45.0-46.0 sec 78.4 MBytes 658 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 46.0-47.0 sec 78.2 MBytes 656 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 47.0-48.0 sec 78.3 MBytes 657 Mbits/sec Additional notes: iperf server at bnl would not answer tho we used port 29000 with GLOBUS_TCP_PORT_RANGE=20000,30000 iperf server at pdsf (pc2608) would not answer either.

25 August 2008 BNL - PDSF iperf results, after moving pdsf grid nodes to 1 GigE net

(pdsfgrid5) iperf % build/bin/iperf -s -p 40050 -t 20 -i 1 -u ------------------------------------------------------------ Server listening on UDP port 40050 Receiving 1470 byte datagrams UDP buffer size: 64.0 KByte (default) ------------------------------------------------------------ [ 3] local 128.55.36.73 port 40050 connected with 130.199.6.168 port 56027 [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 0.0- 1.0 sec 78.5 MBytes 659 Mbits/sec 0.017 ms 14/56030 (0.025%) [ 3] 0.0- 1.0 sec 44 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 1.0- 2.0 sec 74.1 MBytes 621 Mbits/sec 0.024 ms 8/52834 (0.015%) [ 3] 1.0- 2.0 sec 8 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 2.0- 3.0 sec 40.4 MBytes 339 Mbits/sec 0.023 ms 63/28800 (0.22%) [ 3] 2.0- 3.0 sec 63 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 3.0- 4.0 sec 73.0 MBytes 613 Mbits/sec 0.016 ms 121/52095 (0.23%) [ 3] 3.0- 4.0 sec 121 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 4.0- 5.0 sec 76.6 MBytes 643 Mbits/sec 0.020 ms 18/54661 (0.033%) [ 3] 4.0- 5.0 sec 18 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 5.0- 6.0 sec 76.8 MBytes 644 Mbits/sec 0.015 ms 51/54757 (0.093%) [ 3] 5.0- 6.0 sec 51 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 6.0- 7.0 sec 77.1 MBytes 647 Mbits/sec 0.016 ms 40/55012 (0.073%) [ 3] 6.0- 7.0 sec 40 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 7.0- 8.0 sec 74.9 MBytes 628 Mbits/sec 0.040 ms 64/53414 (0.12%) [ 3] 7.0- 8.0 sec 64 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 8.0- 9.0 sec 76.0 MBytes 637 Mbits/sec 0.021 ms 36/54189 (0.066%) [ 3] 8.0- 9.0 sec 36 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 9.0-10.0 sec 75.6 MBytes 634 Mbits/sec 0.018 ms 21/53931 (0.039%) [ 3] 9.0-10.0 sec 21 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 10.0-11.0 sec 54.7 MBytes 459 Mbits/sec 0.038 ms 20/38994 (0.051%) [ 3] 10.0-11.0 sec 20 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 11.0-12.0 sec 75.6 MBytes 634 Mbits/sec 0.019 ms 37/53939 (0.069%) [ 3] 11.0-12.0 sec 37 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 12.0-13.0 sec 74.1 MBytes 622 Mbits/sec 0.056 ms 4/52888 (0.0076%) [ 3] 12.0-13.0 sec 24 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 13.0-14.0 sec 75.4 MBytes 633 Mbits/sec 0.026 ms 115/53803 (0.21%) [ 3] 13.0-14.0 sec 115 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 14.0-15.0 sec 77.1 MBytes 647 Mbits/sec 0.038 ms 50/54997 (0.091%) [ 3] 14.0-15.0 sec 50 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 15.0-16.0 sec 75.2 MBytes 631 Mbits/sec 0.016 ms 26/53654 (0.048%) [ 3] 15.0-16.0 sec 26 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 16.0-17.0 sec 78.2 MBytes 656 Mbits/sec 0.039 ms 39/55793 (0.07%) [ 3] 16.0-17.0 sec 39 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 17.0-18.0 sec 76.6 MBytes 643 Mbits/sec 0.017 ms 35/54635 (0.064%) [ 3] 17.0-18.0 sec 35 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 18.0-19.0 sec 76.5 MBytes 641 Mbits/sec 0.039 ms 23/54544 (0.042%) [ 3] 18.0-19.0 sec 23 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 19.0-20.0 sec 78.0 MBytes 654 Mbits/sec 0.017 ms 1/55624 (0.0018%) [ 3] 19.0-20.0 sec 29 datagrams received out-of-order [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec 0.018 ms 19/1044598 (0.0018%) [ 3] 0.0-20.0 sec 864 datagrams received out-of-order [stargrid02] ~/> iperf -c pdsfgrid5.nersc.gov -t 20 -i 1 -p 40050 -u -b 1000M ------------------------------------------------------------ Client connecting to pdsfgrid5.nersc.gov, UDP port 40050 Sending 1470 byte datagrams UDP buffer size: 128 KByte (default) ------------------------------------------------------------ [ 3] local 130.199.6.168 port 56027 connected with 128.55.36.73 port 40050 [ ID] Interval Transfer Bandwidth [ 3] 0.0- 1.0 sec 78.5 MBytes 659 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 1.0- 2.0 sec 74.1 MBytes 621 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 2.0- 3.0 sec 40.4 MBytes 339 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 3.0- 4.0 sec 73.0 MBytes 613 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 4.0- 5.0 sec 76.6 MBytes 643 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 5.0- 6.0 sec 76.8 MBytes 644 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 6.0- 7.0 sec 77.1 MBytes 647 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 7.0- 8.0 sec 74.8 MBytes 628 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 8.0- 9.0 sec 76.0 MBytes 637 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 9.0-10.0 sec 75.6 MBytes 634 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 10.0-11.0 sec 54.6 MBytes 458 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 11.0-12.0 sec 75.7 MBytes 635 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 12.0-13.0 sec 74.1 MBytes 622 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 13.0-14.0 sec 75.4 MBytes 633 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 14.0-15.0 sec 77.1 MBytes 647 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 15.0-16.0 sec 75.2 MBytes 631 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 16.0-17.0 sec 78.2 MBytes 656 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 17.0-18.0 sec 76.6 MBytes 643 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 18.0-19.0 sec 76.4 MBytes 641 Mbits/sec [ ID] Interval Transfer Bandwidth [ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec [ 3] Sent 1044598 datagrams [ 3] Server Report: [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams [ 3] 0.0-20.0 sec 1.43 GBytes 614 Mbits/sec 0.017 ms 19/1044598 (0.0018%) [ 3] 0.0-20.0 sec 864 datagrams received out-of-order

Transfers to/from Birmingham

IntroductionTransfers where either the source or target are on the Birmingham cluster. I am keeping a log of these as they come up. I don't do them too often so it will take a while to accumulate enough data points to discern any patterns…

| Date | Type | Size | Command | Duration | p | rate agg. | rate/p | Source | Destination |

| 2006.9.5 | DAQ | 40Gb | g-u-c | up to 12 hr | 3-5 | 1 MB/s | ~0.2 MB/s | pdsfgrid1,2,4 | rhilxs |

| 2006.10.6 | MuDst | 50 Gb | g-u-c | 3-5 hr | 15 | ~3.5 MB/s | 0.25 MB/s | rhilxs | pdsfgrid2,4,5 |

| 2006.10.20 | event.root geant.root | 500 Gb | g-u-c -nodcau | 38 hr | 9 | 3.7 MB/s | 0.41 MB/s | rhilxs | garchive |

Notes

g-u-c is just shorthand for globus-url-copy

'p' is the total number of simultaneous connections and is the sum of the parameter for g-u-c -p option for all the commands running together

e.g. 4 g-u-c commands with no -p option gives total p=4 but 3 g-u-c commands with -p 5 gives total p=15

Links

May be useful for the beginner?

PDSF Grid info

Documentation

This page will add documents / documentation links and help for Grid beginners or experts. Those documents are either created by us or gathered from the internet.

Getting site information from VORS

VORS (Virtual Organization Resource Selector) provides information about grid sites similar to GridCat. You can find VORS information here.As per information received at a GOC meeting on 8/14/06, VORS information is the to be be the preferred OSG information service. VORS provides information through the HTTP protocol. This can be in plane text format or HTML, both are viewable from a web browser. For the html version use the link:

Virtual Organization Selection

The plain text version may be more important because it can be easily parsed by other programs. This allows for the writing of information service modules for SUMS in a simple way.

Step 1:

Go to the link below in a web browser:

VORS text interface

Note that to get the text version index.cgi is replaced with tindex.cgi. This will bring up a page that looks like this:

238,Purdue-Physics,grid.physics.purdue.edu:2119,compute,OSG,PASS,2006-08-21 19:16:25 237,Rice,osg-gate.rice.edu:2119,compute,OSG,FAIL,2006-08-21 19:17:07 13,SDSS_TAM,tam01.fnal.gov:2119,compute,OSG,PASS,2006-08-21 19:17:10 38,SPRACE,spgrid.if.usp.br:2119,compute,OSG,PASS,2006-08-21 19:17:51 262,STAR-Bham,rhilxs.ph.bham.ac.uk:2119,compute,OSG,PASS,2006-08-21 19:23:12 217,STAR-BNL,stargrid02.rcf.bnl.gov:2119,compute,OSG,PASS,2006-08-21 19:24:11 16,STAR-SAO_PAULO,stars.if.usp.br:2119,compute,OSG,PASS,2006-08-21 19:26:55 44,STAR-WSU,rhic23.physics.wayne.edu:2119,compute,OSG,PASS,2006-08-21 19:29:10 34,TACC,osg-login.lonestar.tacc.utexas.edu:2119,compute,OSG,FAIL,2006-08-21 19:30:23 19,TTU-ANTAEUS,antaeus.hpcc.ttu.edu:2119,compute,OSG,PASS,2006-08-21 19:30:54

This page holds little information about the site its self however it links the site with the resource number of the site. The resource number is the first number that starts each line. It this example site STAR-BNL is resource 217.

Step 2:

To find out more useful information about the site this has to be applied to the link below (note I have already filled in 217 for STAR-BNL):

STAR-BNL VORS Information

The plane text information that comes back will look like this:

#VORS text interface (grid = All, VO = all, res = 217) shortname=STAR-BNL gatekeeper=stargrid02.rcf.bnl.gov gk_port=2119 globus_loc=/opt/OSG-0.4.0/globus host_cert_exp=Feb 24 17:32:06 2007 GMT gk_config_loc=/opt/OSG-0.4.0/globus/etc/globus-gatekeeper.conf gsiftp_port=2811 grid_services= schedulers=jobmanager is of type fork jobmanager-condor is of type condor jobmanager-fork is of type fork jobmanager-mis is of type mis condor_bin_loc=/home/condor/bin mis_bin_loc=/opt/OSG-0.4.0/MIS-CI/bin mds_port=2135 vdt_version=1.3.9c vdt_loc=/opt/OSG-0.4.0 app_loc=/star/data08/OSG/APP data_loc=/star/data08/OSG/DATA tmp_loc=/star/data08/OSG/DATA wntmp_loc=: /tmp app_space=6098.816 GB data_space=6098.816 GB tmp_space=6098.816 GB extra_variables=MountPoints SAMPLE_LOCATION default /SAMPLE-path SAMPLE_SCRATCH devel /SAMPLE-path exec_jm=stargrid02.rcf.bnl.gov/jobmanager-condor util_jm=stargrid02.rcf.bnl.gov/jobmanager sponsor_vo=star policy=http://www.star.bnl.gov/STAR/comp/Grid

From the unix command line the command wget can be used to collect this information. From inside a java application the Socket class can be used to pull this information back as a String, and then parse as needed.

Globus 1.1.x

QuickStart.pdf is for Globus version 1.1.3 / 1.1.4 .

Globus Toolkit Error FAQ

Globus Toolkit Error FAQ

For GRAM error codes, follow this link.

The purpose of this document is to outline common errors encountered after the installation and setup of the Globus Toolkit.

- GRAM Job Submission failed because the connection to the server failed (check host and port) (error code 12)

- error in loading shared libraries

- ERROR: no valid proxy, or lifetime to small (one hour)

- GRAM Job submission failed because authentication with the remote server failed (error code 7)

- GRAM Job submission failed bacause authentication failed: remote certificate not yet valid (error code 7)

- GRAM Job submission failed bacause authentication failed: remote certificate has expired (error code 7)

- GRAM Job submission failed because data transfer to the server failed (error code 10)

- GRAM Job submission failed because authentication failed: Expected target subject name="/CN=host/hostname"

Target returned subject name="/O=Grid/O=Globus/CN=hostname.domain.edu" (error code 7) - Problem with local credentials no proxy credentials: run grid-proxy-init or wgpi first

- GRAM Job submission failed because authentication failed: remote side did not like my creds for unknown reason

- GRAM Job submission failed because the job manager failed to open stdout (error code 73)

or

GRAM Job submission failed because the job manager failed to open stderr (error code 74) - GRAM Job submission failed because the provided RSL string includes variables that could not be identified (error code 39)

- 530 Login incorrect / FTP LOGIN REFUSED (shell not in /etc/shells)

- globus_i_gsi_gss_utils.c:866: globus_i_gsi_gss_handshake: Unable to verify remote side's credentials: Couldn't verify the remote certificate

OpenSSL Error: s3_pkt.c:1031: in library: SSL routines, function SSL3_READ_BYTES: sslv3 alert bad certificate (error code 7) - globus_gsi_callback.c:438: globus_i_gsi_callback_cred_verify: Could not verify credential: self signed certificate in certificate chain (error code 7)

or

globus_gsi_callback.c:424: globus_i_gsi_callback_cred_verify: Can't get the local trusted CA certificate: Cannot find issuer certificate for local credential (error code 7) - SSL3_GET_CLIENT_CERTIFICATE: no certificate returned

- undefined symbol: lutil_sasl_interact

followed by a failure to load a module

/usr/local/globus-2.4.2/etc/grid-info-slapd.conf: line 23: failed to load or initialize module libback_giis.la

- GRAM Job Submission failed because the connection to the server failed (check host and port) (error code 12)

Diagnosis

Your client is unable to contact the gatekeeper specified. Possible causes include:- The gatekeeper is not running

- The host is not reachable.

The gatekeeper is on a non-standard port

Solution

Make sure the gatekeeper is being launched by inetd or xinetd. Review the Install Guide if you do not know how to do this. Check to make sure that ordinary TCP/IP connections are possible; can you ssh to the host, or ping it? If you cannot, then you probably can't submit jobs either. Check for typos in the hostname.

Try telnetting to port 2119. If you see a "Unable to load shared library", the gatekeeper was not built statically, and does not have an appropriate LD_LIBRARY_PATH set. If that is the case, either rebuild it statically, or set the environment variable for the gatekeeper. In inetd, use /usr/bin/env to wrap the launch of the gatekeeper, or in xinetd, use the "env=" option.

Check the $GLOBUS_LOCATION/var/globus-gatekeeper.log if it exists. It may tell you that the private key is insecure, so it refuses to start. In that case, fix the permissions of the key to be read only by the owner.

If the gatekeeper is on a non-standard port, be sure to use a contact string of host:port.

Back to top - error in loading shared libraries

Diagnosis

LD_LIBRARY_PATH is not set.

Solution

If you receive this as a client, make sure to read in either $GLOBUS_LOCATION/etc/globus-user-env.sh (if you are using a Bourne-like shell) or $GLOBUS_LOCATION/etc/globus-user-env.csh (if you are using a C-like shell)

Back to top - ERROR: no valid proxy, or lifetime to small (one hour)

Diagnosis

You are running globus-personal-gatekeeper as root, or did not run grid-proxy-init.

Solution

Don't run globus-personal-gatekeeper as root. globus-personal-gatekeeper is designed to allow an ordinary user to establish a gatekeeper using a proxy from their personal certificate. If you are root, you should setup a gatekeeper using inetd or xinetd, and using your host certificates. If you are not root, make sure to run grid-proxy-init before starting the personal gatekeeper.

Back to top - GRAM Job submission failed because authentication with the remote server failed (error code 7)

Diagnosis

Check the $GLOBUS_LOCATION/var/globus-gatekeeper.log on the remote server. You will probably see something like:

Authenticated globus user: /O=Grid/O=Globus/OU=your.domain/OU=Your Name

Failure: globus_gss_assist_gridmap() failed authorization. rc =1Solution

This indicates that your account is not in the grid-mapfile. Create the grid-mapfile in /etc/grid-security (or wherever the -gridmap flag in $GLOBUS_LOCATION/etc/globus-gatekeeper.conf points to) with an entry pairing your subject name to your user name. Review the Install Guide if you do not know how to do this. If you see "rc = 7", you may have bad permissions on the /etc/grid-security/. It needs to be readable so that users can see the certificates/ subdirectory.

Back to top - GRAM Job submission failed bacause authentication failed: remote certificate not yet valid (error code 7)

Diagnosis

This indicates that the remote host has a date set greater than five minutes in the future relative to the remote host.

Try typing "date -u" on both systems at the same time to verify this. (The "-u" specifies that the time should be displayed in universal time, also known as UTC or GMT.)

Solution

Ultimately, synchronize the hosts using NTP. Otherwise, unless you are willing to set the client host date back, you will have to wait until your system believes that the remote certificate is valid. Also, be sure to check your shell environment to see if you have any time zone variables set.

Back to top - GRAM Job submission failed because authentication failed: remote certificate has expired (error code 7)

Diagnosis

This indicates that the remote host has an expired certificate.

To double-check, you can use grid-cert-info or grid-proxy-info. Use grid-cert-info on /etc/grid-security/hostcert.pem if you are dealing with a system level gatekeeper. Use grid-proxy-info if you are dealing with a personal gatekeeper.

Solution

If the host certificate has expired, use grid-cert-renew to get a renewal. If your proxy has expired, create a new one with grid-proxy-init.

Back to top - GRAM Job submission failed because data transfer to the server failed (error code 10)

Diagnosis

Check the $GLOBUS_LOCATION/var/globus-gatekeeper.log on the remote server. You will probably see something like:

Authenticated globus user: /O=Grid/O=Globus/OU=your.domain/OU=Your Name

Failure: globus_gss_assist_gridmap() failed authorization. rc =1Solution

This indicates that your account is not in the grid-mapfile. Create the grid-mapfile in /etc/grid-security (or wherever the -gridmap flag in $GLOBUS_LOCATION/etc/globus-gatekeeper.conf points to) with an entry pairing your subject name to your user name. Review the Install Guide if you do not know how to do this.

Back to top - GRAM Job submission failed because authentication failed: Expected target subject name="/CN=host/hostname"

Target returned subject name="/O=Grid/O=Globus/CN=hostname.domain.edu" (error code 7)Diagnosis

New installations will often see errors like the above where the expected target subject name has just the unqualified hostname but the target returned subject name has the fully qualified domain name (e.g. expected is "hostname" but returned is "hostname.domain.edu").

This is usually becuase the client looks up the target host's IP address in /etc/hosts and only gets the simple hostname back.

Solution

The solution is to edit the /etc/hosts file so that it returns the fully qualified domain name. To do this find the line in /etc/hosts that has the target host listed and make sure it looks like:

xx.xx.xx.xx hostname.domain.edu hostname

Where "xx.xx.xx.xx" should be the numeric IP address of the host and hostname.domain.edu should be replaced with the actual hostname in question. The trick is to make sure the full name (hostname.domain.edu) is listed before the nickname (hostname).

If this only happens with your own host, see the explanation of the failed to open stdout error, specifically about how to set the GLOBUS_HOSTNAME for your host.

Back to top - Problem with local credentials no proxy credentials: run grid-proxy-init or wgpi first

Diagnosis

You do not have a valid proxy.

Solution

Run grid-proxy-init

Back to top - GRAM Job submission failed because authentication failed: remote side did not like my creds for unknown reason

Diagnosis

Check the $GLOBUS_LOCATION/var/globus-gatekeeper.log on the remote host. It probably says "remote certificate not yet valid". This indicates that the client host has a date set greater than five minutes in the future relative to the remote host.

Try typing "date -u" on both systems at the same time to verify this. (The "-u" specifies that the time should be displayed in universal time, also known as UTC or GMT.)

Solution

Ultimately, synchronize the hosts using NTP. Otherwise, unless you are willing to set the client host date back, you will have to wait until the remote server believes that your proxy is valid. Also, be sure to check your shell environment to see if you have any time zone variables set.

Back to top - GRAM Job submission failed because the job manager failed to open stdout (error code 73)

Or GRAM Job submission failed because the job manager failed to open stderr (error code 74)

Diagnosis

The remote job manager is unable to open a connection back to your client host. Possible causes include:- Bad results from globus-hostname. Try running globus-hostname on your client. It should output the fully qualified domain name of your host. This is the information which the GRAM client tools use to let the jobmanager on the remote server know who to open a connection to. If it does not give a fully qualified domain name, the remote host may be unable to open a connection back to your host.

- A firewall. If a firewall blocks the jobmanager's attempted connection back to your host, this error will result.

- Troubles in the ~/.globus/.gass_cache on the remote host. This is the least frequent cause of this error. It could relate to NFS or AFS issues on the remote host.

It is also possible that the CA that issued your Globus certificate is not trusted by your local host. Running 'grid-proxy-init -verify' should detect this situation.

Solution

Depending on the cause from above, try the following solutions:- Fix the result of 'hostname' itself. You can accomplish this by editing /etc/hosts and adding the fully qualified domain name of your host to this file. See how to do this in the explanation of the expected target subject error. If you cannot do this, or do not want to do this, you can set the GLOBUS_HOSTNAME environment variable to override the result of globus-hostname. Set GLOBUS_HOSTNAME to the fully qualified domain name of your host.

- To cope with a firewall, use the GLOBUS_TCP_PORT_RANGE environment variable. If your host is behind a firewall, set GLOBUS_TCP_PORT_RANGE to the allowable incoming connections on your firewall. If the firewall is in front of the remote server, you will need the remote site to set GLOBUS_TCP_PORT_RANGE in the gatekeeper's environment to the allowable incoming range of the firewall in front of the remote server. If there are firewalls on both sides, perform both of the above steps. Note that the allowable ranges do not need to coincide on the two firewalls; it is, however, necessary that the GLOBUS_TCP_PORT_RANGE be valid for both incoming and outgoing connections of the firewall it is set for.

- If you are working with AFS, you will want the .gass_cache directory to be a link to a local filesystem. If you are having NFS trouble, you will need to fix it, which is beyond the scope of this document.

Install the trusted CA for your certificate on the local system.

Back to top - GRAM Job submission failed because the provided RSL string includes variables that could not be identified (error code 39)

Diagnosis

You submitted a job which specifies an RSL substitution which the remote jobmanager does not recognize. The most common case is using a 2.0 version of globus-job-get-output with a 1.1.x gatekeeper/jobmanager.

Solution

Currently, globus-job-get-output will not work between a 2.0 client and a 1.1.x gatekeeper. Work is in progress to ensure interoperability by the final release. In the meantime, you should be able to modify the globus-job-get-output script to use $(GLOBUS_INSTALL_PATH) instead of $(GLOBUS_LOCATION).

Back to top - 530 Login incorrect / FTP LOGIN REFUSED (shell not in /etc/shells)

Diagnosis

The 530 Login incorrect usually indicates that your account is not in the grid-mapfile, or that your shell is not in /etc/shells.

Solution

If your account is not in the grid-mapfile, make sure to get it added. If it is in the grid-mapfile, check the syslog on the machine, and you may see the /etc/shells message. If that is the case, make sure that your shell (as listed in finger or chsh) is in the list of approved shells in /etc/shells.

Back to top - globus_i_gsi_gss_utils.c:866: globus_i_gsi_gss_handshake: Unable to verify remote side's credentials: Couldn't verify the remote certificate

OpenSSL Error: s3_pkt.c:1031: in library: SSL routines, function SSL3_READ_BYTES: sslv3 alert bad certificate (error code 7)Diagnosis

This error message usually indicates that the server you are connecting to doesn't trust the Certificate Authority (CA) that issued your Globus certificate.

Solution

Either use a certificate from a different CA or contact the administer of the resource you are connecting to and request that they install the CA certificate in their trusted certificates directory.

Back to top - globus_gsi_callback.c:438: globus_i_gsi_callback_cred_verify: Could not verify credential: self signed certificate in certificate chain (error code 7)

Or globus_gsi_callback.c:424: globus_i_gsi_callback_cred_verify: Can't get the local trusted CA certificate: Cannot find issuer certificate for local credential (error code 7)

Diagnosis

This error message indicates that your local system doesn't trust the certificate authority (CA) that issued the certificate on the resource you are connecting to.

Solution

You need to ask the resource administrator which CA issued their certificate and install the CA certificate in the local trusted certificates directory.

Back to top - SSL3_GET_CLIENT_CERTIFICATE: no certificate returned

Diagnosis

This error message indicates that the name in the certificate for the remote party is not legal according local signing_policy file for that CA.

Solution

You need to verify you have the correct signing policy file installed for the CA by comparing it with the one distributed by the CA.

Back to top - undefined symbol: lutil_sasl_interact

Diagnosis

Globus replica catalog was installed along with MDS/Information Services.

Solution

Do not install the replica bundle into a GLOBUS_LOCATION containing other Information Services. The Replica Catalog is also deprecated - use RLS instead.

Back to top

Intro to FermiGrid site for STAR users

The FNAL_FERMIGRID site policy and some documentation can be found here:

http://fermigrid.fnal.gov/policy.html

You must use VOMS proxies (rather than grid certificate proxies) to run at this site. A brief intro to voms proxies is here: Introduction to voms proxies for grid cert users

All users with STAR VOMS proxies are mapped to a single user account ("star").

Technical note: (Quoting from an email that Steve Timm sent to Levente) "Fermigrid1.fnal.gov is not a simple jobmanager-condor. It is emulating the jobmanager-condor protocol and then forwarding the jobs on to whichever clusters have got free slots, 4 condor clusters and actually one pbs cluster behind it too." For instance, I noticed jobs submitted to this gatekeeper winding up at the USCMS-FNAL-WC1-CE site in MonAlisa. (What are the other sites?)

You can use SUMS to submit jobs to this site (though this feature is still in beta testing) following this example:

star-submit-beta -p dynopol/FNAL_FERMIGRID jobDesription.xml

where jobDescription.xml is the filename of your job's xml file.

Site gatekeeper info:

Hostname: fermigrid1.fnal.gov

condor queue is available (fermigrid1.fnal.gov/jobmanager-condor)

If no jobmanager is specified, the job runs on the gatekeeper itself (jobmanager-fork, I’d assume)

[stargrid02] ~/> globus-job-run fermigrid1.fnal.gov

/bin/cat /etc/redhat-release

Scientific Linux Fermi LTS release 4.2 (Wilson)

Fermi worker node info:

[stargrid02] ~/> globus-job-run fermigrid1.fnal.gov/jobmanager-condor /bin/cat /etc/redhat-release

Scientific Linux SL release 4.2 (Beryllium)

[stargrid02] ~/> globus-job-run fermigrid1.fnal.gov/jobmanager-condor /usr/bin/gcc -v

Using built-in specs.

Configured with: ../configure --prefix=/usr --mandir=/usr/share/man --infodir=/usr/share/info --enable-shared --enable-threads=posix --disable-checking --with-system-zlib --enable-__cxa_atexit --disable-libunwind-exceptions --enable-java-awt=gtk --host=i386-redhat-linux

Thread model: posix

gcc version 3.4.4 20050721 (Red Hat 3.4.4-2)

There doesn't seem to be a GNU fortran compiler such as g77 on the worker nodes.

Open question: What is the preferred file transfer mechanism?

In GridCat they list an SRM server at srm://fndca1.fnal.gov:8443/ but I have not made any attempt to use it.

Introduction to voms proxies for grid cert users

The information in a voms proxy is a superset of the information in a grid certificate proxy. This additional information includes details about the VO of the user. For users, the potential benefit is the possibility to work as a member of multiple VOs with a single DN and have your jobs accounted accordingly. Obtaining a voms-proxy (if all is well configured) is as simple as “voms-proxy-init -voms star” (This is of course for a member of the STAR VO).Here is an example to illustrate the difference between grid proxies and voms proxies (note that the WARNING and Error lines at the top don’t seem to preclude the use of the voms proxy – the fact is that I don’t know why those appear or what practical implications there are from the underlying cause – I hope to update this info as I learn more):

[stargrid02] ~/> voms-proxy-info -allWARNING: Unable to verify signature!

Error: Cannot find certificate of AC issuer for vo star

subject : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856/CN=proxy

issuer : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

identity : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

type : proxy

strength : 512 bits

path : /tmp/x509up_u2302

timeleft : 4:10:20

=== VO star extension information ===

VO : star

subject : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

issuer : /DC=org/DC=doegrids/OU=Services/CN=vo.racf.bnl.gov

attribute : /star/Role=NULL/Capability=NULL

timeleft : 4:10:19

[stargrid02] ~/> grid-proxy-info -all

subject : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856/CN=proxy

issuer : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

identity : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

type : full legacy globus proxy

strength : 512 bits

path : /tmp/x509up_u2302

timeleft : 4:10:14

In order to obtain the proxy, the VOMS server for the requested VO must be contacted (with the potential drawback that it introduces a dependency on a working VOMS server that doesn’t exist with a simple grid cert. It is worth further noting that either a VOMS or GUMS server (I should investigate this) will also be contacted by VOMS-aware gatekeepers to authenticate the users at job submission time, behind the scenes. One goal (or consequence at least) of this sort of usage is to eliminate static grid-map-files.)

Something else to note (and investigate): the voms-proxy doesn’t necessarily last as long as the basic grid cert proxy – the voms part can apparently expire independent of the grid-proxy. Consider this example, in which the two expiration times are different:

[stargrid02] ~/> voms-proxy-info -allWARNING: Unable to verify signature!

Error: Cannot find certificate of AC issuer for vo star

subject : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856/CN=proxy

issuer : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

identity : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

type : proxy

strength : 512 bits

path : /tmp/x509up_u2302

timeleft : 35:59:58

=== VO star extension information ===

VO : star

subject : /DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856

issuer : /DC=org/DC=doegrids/OU=Services/CN=vo.racf.bnl.gov

attribute : /star/Role=NULL/Capability=NULL

timeleft : 23:59:58

(Question: What determines the duration of the voms-proxy extension - the VOMS server or the user/client?)

Technical note 1: on stargrid02, the “vomses” file, which lists the URL for VOMS servers, was not in a default location used by voms-proxy-init, and thus it was not actually working (basically, it worked just like grid-proxy-init). I have put an existing vomses file in /opt/OSG-0.4.1/voms/etc and it seems content to use it.

Technical note 2: neither stargrid03’s VDT installation nor the WNC stack on the rcas nodes has VOMS tools. I’m guessing that the VDT stack is too old on stargrid03 and that voms-proxy tools are missing on the worker nodes because that functionality isn't really needed on a worker node.

Job Managers

Several job managers are available as part of any OSG/VDT/Globus deploymenets. They may restrict access to keywords fundamental to job control and efficiency or may not even work.The pages here will documents the needed changes or features.

Condor Job Manager

Condor job manager code is provided as-is for quick code inspection. The version below is from the OSG 0.4.1 software stack.

LSF job manager

LSF job manager code below is from globus 2.4.3.

SGE Job Manager

SGE job manager code was developed by the UK Grid eScience. It is provided as-is for quick code inspection. The version below is as integrated in VDT 1.5.2 (post OSG 0.4.1). Please, note that the version below includes patches provided by the RHIC/STAR VO. Consult SGE Job Manager patch for more information.

Modifying Virtual Machine Images and Deploying Them

Modifying Virtual Machine Images and Deploying Them

The steps:

login to stargrid01

Check that your ssh public key is in $home/.ssh/id_rsa.pub, if not put it there.

Select the base image you wish to modify. You will find the name of the image you are currently using for your cluster by looking inside:

/star/u/lbhajdu/ec2/workspace-cloud-client-010/samples/[cluster discretions].xmlOpen up this file you will find a structure that looks something like the one below. There are two <workspace> blocks one for the gatekeeper and one for the worker nodes. The name of the image for the worker node is in the second block in-between the <image> tags. So for the example below the name would be osgworker-012.

To make a modification to the image we have to mount/deploy that image. Once we know the name, simply type:

./bin/cloud-client.sh --run --name [image name] --hours 50Where [image name] is the name we found in step 3. This image will be up for 50 hours. You will have to save the image before you run out of time, else all of your changes will be lost.

The output of this command will look something like:

[stargrid01] ~/ec2/workspace-cloud-client-010/> ./bin/cloud-client.sh --run --name osgworker-012 --hours 50

(Overriding old GLOBUS_LOCATION '/opt/OSG-0.8.0-client/globus')

(New GLOBUS_LOCATION: '/star/u/lbhajdu/ec2/workspace-cloud-client-010/lib/globus')

SSH public keyfile contained tilde:

- '~/.ssh/id_rsa.pub' --> '/star/u/lbhajdu/.ssh/id_rsa.pub'

Launching workspace.

Workspace Factory Service:

https://tp-vm1.ci.uchicago.edu:8445/wsrf/services/WorkspaceFactoryService

Creating workspace "vm-003"... done.

IP address: 128.135.125.29

Hostname: tp-x009.ci.uchicago.edu

Start time: Tue Jan 13 13:59:04 EST 2009

Shutdown time: Thu Jan 15 15:59:04 EST 2009

Termination time: Thu Jan 15 16:09:04 EST 2009

Waiting for updates.

"vm-003" reached target state: Running

Running: 'vm-003'It will take some time for the command to finish, usually a few minutes. Make sure you do not loose the output for this command. Inside the output there are two pieces of information you must note. They are the hostname and the handle. In this example the hostname is tp-x009.ci.uchicago.edu and the handle is vm-003.

Next log on to the host using the host name from step 4. Note that your ssh public key will be copied to the /root/.ssh/id_rsa.pub. To log on type:

ssh root@[hostname]

Example:

ssh root@tp-x009.ci.uchicago.edu

Next make the change(s) to the image, you wish to make (this step is up to you).

To save the changes you will need the handle from step 2. And you will need to pick a name for the new image. Run this command:

./bin/cloud-client.sh --save --handle [handle name] --newname [new image name]

Where [handle name] is replaced with the name of the handle and [new image name] is replaced with the new image’s name. If you do not use the name option you will overwrite your image. Here is an example with the values from above.

./bin/cloud-client.sh --save --handle vm-003 --newname starworker-sl08f

The output will look something like this:

[stargrid01] ~/ec2/workspace-cloud-client-010/> ./bin/cloud-client.sh --save --handle vm-004 --newname starworker-sl08e

(Overriding old GLOBUS_LOCATION '/opt/OSG-0.8.0-client/globus')

(New GLOBUS_LOCATION: '/star/u/lbhajdu/ec2/workspace-cloud-client-010/lib/globus')

Saving workspace.

- Workspace handle (EPR): '/star/u/lbhajdu/ec2/workspace-cloud-client-010/history/vm-004/vw-epr.xml'

- New name: 'starworker-sl08e'

Waiting for updates.

"Workspace #919": TransportReady, calling destroy for you.

"Workspace #919" was terminated.This is an optional step, because the images can be several GB big you may want to delete the old image with this command:

./bin/cloud-client.sh --delete --name [old image name]This is what it would look like:

(Overriding old GLOBUS_LOCATION '/opt/OSG-0.8.0-client/globus')

(New GLOBUS_LOCATION: '/star/u/lbhajdu/ec2/workspace-cloud-client-010/lib/globus')

Deleting: gsiftp://tp-vm1.ci.uchicago.edu:2811//cloud/56441986/starworker-sl08f

Deleted.To start up a cluster with the new image you will need to modify one of the:

/star/u/lbhajdu/ec2/workspace-cloud-client-010/samples/[cluster discretion].xml

file inside the <workspace> block of the worker node replace <image> with the name of your own image from step 7. You can also set the number of worker node images you wish to bring up by setting the number in the <quantity> tag.

Note: Be careful remember there are usually at least two <workspace> blocks in each xml fie.

Next just bring up the cluster like any other VM cluster. (See my Drupal documentation)

<workspace>

<name>head-node</name>

<image>osgheadnode-012</image>

<quantity>1</quantity>

.

.

.

</workspace>

<workspace>

<name>compute-nodes</name>

<image>osgworker-012</image>

<quantity>3</quantity>

<nic interface=”eth1”>private</nic>

.

.

.

</workspace>

Rudiments of grid map files on gatekeepers

This is intended as a practical introduction to mapfiles for admins of new sites to help get the *basics* working and avoid some common problems with grid user management and accounting.It should be stressed that manually maintaining mapfiles is the most primitive user management technique. It is not scalable and it has been nearly obsoleted by two factors:

1. There are automated tools for maintaining mapfiles (GUMS with VOMS in the background, for instance, but that's not covered here).

2. Furthermore, VOMS proxies are replacing grid certificate proxies, and the authentication mechanism no longer relies on static grid mapfiles, but instead can dynamically authenticate against GUMS or VOMS servers directly for each submitted job.

But let's ignore all that and proceed with good old-fashioned hand edits of two critical files on your gatekeeper:

/etc/grid-security/grid-mapfile

and

$VDT_LOCATION/monitoring/grid3-user-vo-map.txt

(the location of the grid-mapfile in /etc/grid-security is not universal, but that's the default location)

In the grid-mapfile, you'll want entries like the following, in which user DNs are mapped to specific user accounts. You can see from this example that multiple DNs can map to one user account (rgrid000 in this case):

#---- members of vo: star ----#

"/DC=org/DC=doegrids/OU=People/CN=Valeri Fine 224072" fine

"/DC=org/DC=doegrids/OU=People/CN=Wayne Betts 602856" wbetts

#---- members of vo: mis ----#

"/DC=org/DC=doegrids/OU=People/CN=John Rosheck (GridScan) 474533" rgrid000

"/DC=org/DC=doegrids/OU=People/CN=John Rosheck (GridCat) 776427" rgrid000

(The lines starting with '#' are comments and are ignored.)

You see that if you want to support the STAR VO, then you will need to include the DN for every STAR user with a grid cert (though as of this writing, it is only a few dozen, and only a few of them are actively submitting any jobs. Those two above are just a sampling.) You can support multiple VOs if you wish, as we see with the MIS VO. But MIS is a special VO -- it is a core grid infrustructure VO, and the DNs shown here are special testing accounts that you'll probably want to include so that you appear healthy in various monitoring tools.

In the grid3-user-vo-map.txt file, things are only slightly more complicated, and could look like this:

#User-VO map

# #comment line, format of each regular line line: account VO

# Next 2 lines with VO names, same order, all lowercase, with case (lines starting with #voi, #VOc)

#voi mis star

#VOc MIS STAR

#---- accounts for vo: star ----#

fine star

wbetts star

#---- accounts for vo: mis ----#

rgrid000 mis

(Here one must be careful -- the '#' symbol denotes comments, but the two lines starting with #voi and #VOc are actually read by VORS (this needs to be fleshed out), so keep them updated with your site's actual supported VOs.)

In this example, we see that users 'fine' and 'wbetts' are mapped to the star VO, while 'rgrid000' is mapped to the mis VO.

Maintaining this user-to-VO map is not critical to running jobs at your site, but it does have important functions:

1. MonAlisa uses this file in its accounting and monitoring (such as VO jobs per site)

2. VORS advertises the supported VOs at each site based on this file, and users use VORS to locate sites that claim to support their VO... thus if you claim to support a VO that you don't actually support, then sooner or later someone from that VO will submit jobs to your site, which will fail and then THEY WILL REPORT YOU TO THE GOC!

(Don't worry, there's no great penalty, just the shame of having to respond to the GOC ticket. Note that updates to this file can take several hours to be noticed by VORS.)

If you aren't familiar with VORS or MonAlisa, then hop to it. You can find links to both of them here:

http://www.opensciencegrid.org/?pid=1000098

SRM instructions for bulk file transfer to PDSF

These links describe how to do bulk file transfers from RCF to PDSF.

How to run the transfers

The first step is to figure out what files you want to transfer and make some file lists for SRM transfers:At PDSF make subdirectories ~/xfer ~/hrm_g1 ~/hrm_g1/lists

Copy from ~hjort/xfer the files diskOrHpss.pl, ConfigModule.pm and Catalog.xml into your xfer directory.

You will need to contact ELHjort@lbl.gov to get Catalog.xml because it has administrative privileges in it.

Substitute your username for each "hjort" in ConfigModule.pm.

Then in your xfer directory run the script (in redhat8):

pdsfgrid1 88% diskOrHpss.pl

Usage: diskOrHpss.pl [production] [trgsetupname] [magscale]

e.g., diskOrHpss.pl P04ij ppMinBias FullField

pdsfgrid1 89%

Note that trgsetupname and magscale are optional. This script may take a while depending on what you specify. If all goes well you'll get some files created in your hrm_g1/lists directory. A brief description of the files the script created:

*.cmd: Commands to transfer files from RCF disks

*.srmcmd: Commands to transfer files from RCF HPSS

in lists:

*.txt: File list for transfers from RCF disks

*.rndm: Same as *.txt but randomized in order

*.srm: File list for transfer from RCF HPSS

Next you need to get your cert installed in the grid-mapfiles at PDSF and at RCF. At PDSF you do it in NIM. Pull up your personal info and find the "Grid Certificates" tab. Look at mine to see the form of what you need to enter there. For RCF go here:

http://orion.star.bnl.gov/STAR/comp/Grid/Infrastructure/certs-vomrs/

Also, you'll need to copy a file of mine into your directory:

cp ~hjort/hrm_g1/pdsfgrid1.rc ~/hrm_g1/pdsfgrid1.rc

That's the configuration file for the HRM running on pdsfgrid1. When you've got that done you can try to move some files by executing one of the srm-copy.linux commands found in the .cmd or .srmcmd file.

Monitoring transfers

You can tell if transfers are working from the messages in your terminal window.You can monitor the transfer rate on the pdsfgrid1 ganglia page on the “bytes_in” plot. However, it’s also good to verify that rrs is entering the files into the file catalog as they are sunk into HPSS. This can be done with get_file_list.pl:

pdsfgrid1 172% get_file_list.pl -as Admin -keys 'filename' -limit 0 –cond 'production=P06ic' | wc -l

11611

pdsfgrid1 173%

A more specific set of conditions will of course result in a faster query. Note that the “-as Admin” part is required if you run this in the hrm_g1 subdirectory due to the Catalog.xml file. If you don't use it you will query the PDSF mirror of the BNL file catalog instead of the PDSF file catalog.

Running the HRM servers at PDSF

I suggest creating your own subdirectory ~/hrm_g1 similar to ~hjort/hrm_g1. Then copy from my directory to yours the following files:

setup

hrm

pdsfgrid1.rc

hrm_rrs.rc

Catalog.xml (coordinate permissions w/me)

Substitute your username for “hjort” in these files and then start the HRM by doing “source hrm”. Note that you need to run in redhat8 and your .chos file is ignored on grid nodes so you need to chos to redhat8 manually. If successful you should see the following 5 tasks running:

pdsfgrid1 149% ps -u hjort

PID TTY TIME CMD

8395 pts/1 00:00:00 nameserv

8399 pts/1 00:00:00 trm.linux

8411 pts/1 00:00:00 drmServer.linux

8461 pts/1 00:00:00 rrs.linux

8591 pts/1 00:00:00 java

pdsfgrid1 150%

Note that the “hrm” script doesn’t always work depending on the state things are in but it should always work if the 5 tasks shown above are all killed first.

Running the HRM servers at RCF

I suggest creating your own subdirectory ~/hrm_grid similar to ~hjort/hrm_grid. Then copy from my directory to yours the following files:

srm.sh

hrm

bnl.rc

drmServer.linux (create the link)

trm.linux (create the link)

Substitute your username for “hjort” in these files and then start the HRM by doing “source hrm”. If successful you should see the following 3 tasks running:

[stargrid03] ~/hrm_grid/> ps -u hjort

PID TTY TIME CMD

13608 pts/1 00:00:00 nameserv

13611 pts/1 00:00:00 trm.linux