Heavy Flavor

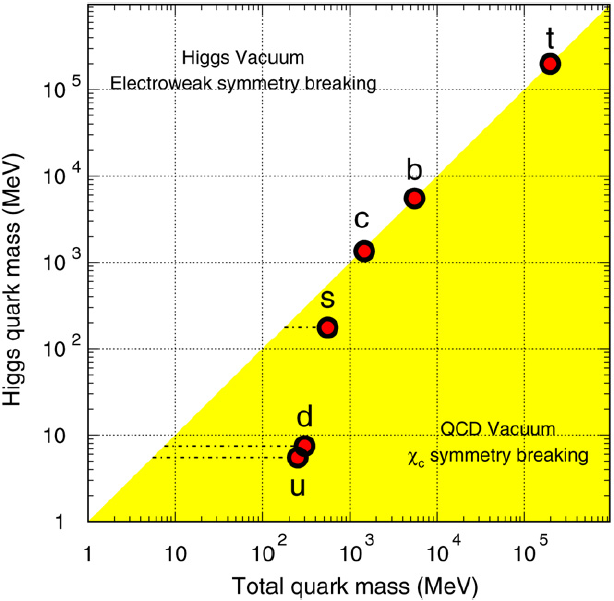

Depending on the energy scale, there are two mechanisms that generate quark masses with different degrees of importance: current quark masses are generated by the electroweak symmetry breaking mechanism (Higgs mass) and spontaneous chiral symmetry breaking leads to the constituent quark masses in QCD (QCD mass). The QCD interaction strongly affects the light quarks (u, d, s) while the heavy quark masses (c, b, t) are mainly determined by the Higgs mechanism. In high-energy nuclear collisions at RHIC, heavy quarks are produced through gluon fusion and qq¯ annihilation. Heavy quark production is also sensitive to the parton distribution function. Unlike the light quarks, heavy quark masses are not modified by the surrounding QCD medium (or the excitations of the QCD medium) and the value of their masses is much higher than the initial excitation of the system. It is these differences between light and heavy quarks in a medium that make heavy quarks an ideal probe to study the properties of the hot and dense medium created in high-energy nuclear collisions.

Depending on the energy scale, there are two mechanisms that generate quark masses with different degrees of importance: current quark masses are generated by the electroweak symmetry breaking mechanism (Higgs mass) and spontaneous chiral symmetry breaking leads to the constituent quark masses in QCD (QCD mass). The QCD interaction strongly affects the light quarks (u, d, s) while the heavy quark masses (c, b, t) are mainly determined by the Higgs mechanism. In high-energy nuclear collisions at RHIC, heavy quarks are produced through gluon fusion and qq¯ annihilation. Heavy quark production is also sensitive to the parton distribution function. Unlike the light quarks, heavy quark masses are not modified by the surrounding QCD medium (or the excitations of the QCD medium) and the value of their masses is much higher than the initial excitation of the system. It is these differences between light and heavy quarks in a medium that make heavy quarks an ideal probe to study the properties of the hot and dense medium created in high-energy nuclear collisions.

Heavy flavor analyses at STAR can be separated into quarkonia, open heavy flavor and heavy flavor leptons.

Abstracts, Presentations and Proceedings

Abstracts

This page is maintained by Gang Wang.

#9995# DNP (fall meeting) 2010

Abstracts for DNP (fall meeting) 2010 (Nov. 2-6, 2010, Santa Fe, NM)

- Wenqin Xu

Title: Extracting bottom quark production cross section from p+p collisions at RHIC

The STAR collaboration has measured the non-photonic electron (NPE) production at high transverse momentum (pT ) at middle rapidity in p + p collisions at sqrt(s) = 200 GeV at the Relativistic Heavy Ion Collider (RHIC). The relative contributions of bottom and charm hadrons to NPE have also been obtained through electron hadron azimuthal

correlation studies. Combining these two, we are able to determine the high pT mid-rapidity electron spectra

from bottom and charm decays, separately.

PYTHIA with different tunes and FONLL calculations have been compared with this measured electron spectrum

from bottom decays to extract the bb-bar differential cross section after normalization to the measured spectrum.

The extrapolation of the total bb-bar production cross section in the whole kinematic range and its dependence

on spectrum shapes from model calculations will also be discussed.

- Yifei Zhang

Title: Open charm hadron reconstruction via hadronic decays in p+p collisions at $sqrt{s}$ = 200 GeV

Heavy quarks are believed to be an ideal probe to study the properties of the QCD medium produced in the relativistic heavy ion collisions. Heavy quark production in elementary particle collisions is expected to be better calculated in the perturbative QCD. Precision understanding on both the charm production total cross section and the fragmentation in p+p collisions is a baseline to further explore the QCD medium via open charm and charmonium in heavy ion collisions.

Early RHIC measurements in p+p collisions which were carried out via semi-leptonic decay electrons provides limited knowledge on the heavy quark production due to the incomplete kinematics, the limited momentum coverage and the mixed contribution from various charm and bottom hadrons in the electron approach. In this talk, we will present

the reconstruction of open charm hadrons (D0 and D*) via the hadronic decays in p+p collisions at $sqrt{s}$ = 200 GeV in the STAR experiment. The analysis is based on the large p+p minimum bias sample collected in RHIC Run9. The Time-Of-Flight detector, which covered 72% of the whole barrel in Run9, was used to improve the decay daughter

identification. Physics implications from this analysis will be presented.

- Xin Li

Title: Non-photonic Electron Measurements in 200 GeV p+p collisions at RHIC-STAR

Compared to the light quarks, heavy quarks are produced early in the collisions and interact very differently with the strongly couple QGP(sQGP) created at RHIC. In addition, their large masses are created mostly from the spontaneous symmetry breaking. All these features make heavy quark an ideal probe to study the sQGP. One of the critical references in these studies is the heavy quark production in p+p collisions, which also provides a crucial test to the pQCD. Measuring electrons from heavy quark semi-leptonic decay (non-photonic electron) is one of the major approaches to study heavy quark production at RHIC.

We will present STAR measurements on the mid-rapidity non-photonic electron production at pT>2 GeV/c in 200 GeV p+p collisions using the datasets from the 2008 and 2005 runs, which have dramatically different photonic backgrounds. We will compare our measurements with the published results at RHIC and also report the status of the analysis at pT<2 GeV/c using the dataset from the 2009 run.

- Jonathan Bouchet

Title: Reconstruction of charmed decays using microvertexing techniques with the STAR Silicon Detectors

Due to their production at the early stages, heavy flavor particles are of interest to study the properties of the matter created in heavy ion collisions. Direct topological reconstruction of $D$ and $B$ mesons, as opposed to indirect methods using semi-leptonic decay channels [1], provides a precise measurement and thus disentangles the $b$ and $c$ quarks contributions [2].

In this talk we present a microvertexing technique used in the reconstruction of $D^{0}$ decay vertex ($D^{0} \rightarrow K^{-}\pi^{+}$) and its charge conjugate. The significant combinatorial background can be reduced by means of

secondary vertex reconstruction and other track cut variables. Results of this method using the silicon detector information of the STAR experiment at RHIC will be presented for the Au+Au system at $\sqrt{s_{NN}}$ = 200 GeV.

[1]A. Abelev et al., Phys. Rev. Lett. {\bf 98} (2007) 192301

[2]N. Armesto et al., Phys. Lett. B{\bf 637} (2006) 362-366.

#9996# Hard Probe 2010

Abstracts for 2010 Hard Probe Meeting (Oct. 10-15, 2010, Eilat, Israel)

- Wei Xie

Heavy quarks are unique probes to study the strongly coupled Quark-Gluon Plasma created at RHIC. Unlike light quarks, heavy quark masses come mostly from spontaneous symmetry breaking, which makes them ideal for studying the medium's QCD properties. Due to their large masses, they are produced early in the collisions and are expected to interact with the medium quite differently from that of light quarks. Detailed studies on the production of open heavy flavor mesons and heavy quarkonium in heavy-ion collisions and the baseline $p+p$ and $d+A$ collisions provide crucial information in understanding the medium's properties. With the large acceptance TPC, Time of Flight, EM Calorimeter and future Heavy-Flavor Tracker, STAR has the capabilities to study heavy quark production in the dense medium in all different directions. In this talk, we will review the current status as well as the future perspectives of heavy quark studies in STAR experiment.

- Zebo Tang

Title: $J/\psi$ production at high pT at STAR

The $c\bar{c}$ bound state $J/\psi$ provides a unique tool to probe the hot dense medium produced in heavy-ion collisions, but to date its production mechanism is not understood clearly neither in heavy-ion collisions nor in hadron hadron collisions. Measurement of $J/\psi$ production at high $p_T$ is particularly interesting since at high $p_T$

the various models give different predictions. More over some model calculations on $J/\psi$ production are only applicable at intermediate/high $p_T$. Besides, high $p_T$ particles are widely used to study the parton-medium interactions in heavy-ion collisions. In this talk, we will present the measurement of mid-rapidity (|y|<1) $J/\psi \rightarrow

e^+e^-$ production at high $p_T$ in p+p and Cu+Cu collisions at 200 GeV, that used a trigger on electron energy deposited in Electromagnetic Calorimeter. The $J/\psi$ $p_T$ spectra and nuclear modification factors will be compared to model calculations to understand its production mechanism and medium modifications. The $J/\psi$-hadron azimuthal angle correlation will be presented to disentangle $B$-mesons contributions to inclusive $J/\psi$. Progresses

from on-going analyses in p+p collisions at 200GeV taken in year 2009 high luminosity run will be also reported.

-

Rosi Reed

Title: $\Upsilon$ production in p+p, d+Au, Au+Au collisions at $\sqrt{{S}_{NN }} = $ 200 GeV in STAR

Quarkonia is a good probe of the dense matter produced in heavy-ion collisions at RHIC because it is produced early in the collision and the production is theorized to be suppressed due to the Debye color screening of the potential between the heavy quarks. A model dependent measurement of the temperature of the Quark Gluon Plasma (QGP) can be determined by examining the ratio of the production of various quarkonia states in heavy ion collisions versus p+p collisions because lattice calculations indicate that the quarkonia states will be sequentially suppressed. Suppression is quantified by calculating ${R}_{AA}$, which is the ratio of the production in p+p scaled by the number of binary collisions to the production in Au+Au. The $\Upsilon$ states are of particular interest because at 200 GeV the effects of feed down and co-movers are smaller than for J/$\psi$, which decreases the systematic uncertainty of the ${R}_{AA} calculation. In addition to hadronic absorption, additional cold nuclear matter effects, such as shadowing of the PDFs, can be determined from d+Au collisions. We will present our results for mid-rapidity $\Upsilon$ production in p+p, as well as our preliminary results in d+Au and Au+Au at $\sqrt{{S}_{NN }}$ = 200 GeV. These results will then be compared with theoretical QCD calculations.

-

Wei Li

Title: Non$-$Photonic Electron and Charged Hadron Azimuthal Correlation in 500 GeV p+p Collisionsions at RHIC

Due to the dead cone effect, heavy quarks were expected to lose less energy than light quarks since the current theory predicted that the dominant energy loss mechanism is gluon radiation for heavy quarks. Whereas non-photonic electron from heavy quark decays show similar suppression as light hadrons at high $p_{T}$ in central Au+Au collisions. It is important to separate the bottom contribution to non-photonic electron for the better understanding of heavy flavor

production and energy loss mechanism in ultra high energy heavy ion collisions. B decay contribution is approximately 50$\%$ at a transverse momentum of $p_{T}$$\geq$5 GeV/c in 200 GeV p+p collisions from STAR results. In this talk, we will present the azimuthal correlation analysis of non-photonic electrons with charged hadrons at $p_{T}$$\geq$6.5 GeV/c in p+p collisions at $\sqrt{s}$ = 500 GeV at RHIC. The results are compared to PYTHIA simulations to disentangle

charm and bottom contribution of semi-leptonic decays to non-photonic electrons.

-

Gang Wang

Title: B/D Contribution to Non-Photonic Electrons and Status of Non-Photonic Electron $v_2$ at RHIC

In contrast to the expectations due to the dead cone effect, non-photonic electrons from decays of heavy quark carrying hadrons show a similar suppression as light hadrons at high $p_{T}$ in central 200 GeV Au+Au collisions at RHIC. It is important to separate the charm and bottom contributions to non-photonic electrons to better understand the heavy flavor production and energy loss mechanism in high energy heavy ion collisions. Heavy quark energy loss and heavy quark evolution in the QCD medium can also lead to an elliptic flow $v_2$ of heavy quarks which can be studied through $v_2$ of non-photonic electrons.

In this talk, we present the azimuthal correlation analysis of non-photonic electrons with charged hadrons at 1.5 GeV/c < $p_{T}$ < 9.5 GeV/c in p+p collisions at $\sqrt{s}$ = 200 GeV at RHIC, with the removal of J/$\Psi$ contribution to non-photonic electrons. The results are compared with PYTHIA simulations to disentangle charm and bottom contributions of semi-leptonic decays to non-photonic electrons. B decay contribution is approximately 50$\%$ at the electron transverse momentum of $p_{T}$ > 5 GeV/c in 200 GeV p+p collisions from STAR results. Incorporating the spectra and energy loss information of non-photonic electrons, we further estimate the spectra and energy loss of the electrons from B/D decays. Status of $v_2$ measurements for non-photonic electrons will also be discussed for 200 GeV Au+Au collisions with RHIC run2007 data.

#9997# APS 2010 April Meeting

Abstracts for 2010 APS April Meeting (Feb. 13-17, 2010, Washington DC)

- Jonathan Bouchet

Title: Performance studies of the Silicon Detectors in STAR towards microvertexing of rare decays

Abstract: Heavy quarks production ($b$ and $c$) as well as their elliptic flow can be used as a probe of the thermalization of the medium created in heavy ions collisions. Direct topological reconstruction of charmed and bottom decays is then needed to obtain this precise measurement. To achieve this goal the silicon detectors of the STAR experiment are explored. These detectors, a Silicon Drift (SVT) 3-layer detector[1] and a Silicon Strip one-layer detector[2] provide tracking very near to the beam axis and allow us to search for heavy flavour with microvertexing methods. $D^{0}$ meson reconstruction including the silicon detectors in the tracking algorithm will be presented for the Au+Au collisions at $\sqrt{s_{NN}}$ = 200 GeV, and physics opportunities will be discussed.

[1]R. Bellwied et al., Nucl. Inst. Methods A499 (2003) 640.

[2]L. Arnold et al., Nucl. Inst. and Methods A499 (2003) 652.

- Matt Cervantes

Title: Upsilon + Hadron correlations at the Relativistic Heavy-Ion Collider (RHIC)

Abstract: STAR has the capability to reconstruct the heavy quarkonium states of both the J/Psi and Upsilon particles produced by the collisions at the Relativistic Heavy Ion Collider (RHIC). The systematics of prompt production of heavy quarkonium is not fully described by current models, e.g. the Color Singlet Model (CSM) and the Color Octect Model. Hadronic activity directly around the heavy quarkonium has been proposed [1] as an experimental observable to measure the radiation emitted off the coloured heavy quark pair during production. Possible insight into the prompt production mechanism of heavy quarkonium can be obtained from this measured activity. Using STAR data from dAu collisions at sqrt(s_NN)= 200 GeV, the high S/B ratio found in Upsilon reconstruction [2] can enable us to perform an analysis of Upsilon + Hadron correlations. We will present our initial investigation of such an analysis.

[1] Kraan, A. C., arXiv:0807.3123.

[2] Liu, H., STAR Collaboration, arXiv:0907.4538.

PWG convener to press the approval button

On this page, we collect the information about which PWG convener to press the final approval button for which conference.

| Conference | Convener |

| 2018 Hot Quarks | Rongrong Ma |

| 2018 Hard Probes | Petr Chaloupka |

| 2018 EJC | Petr Chaloupka |

| 2018 ATHIC | Zebo Tang |

| 2018 Zimanyi School | Petr Chaloupka |

| 2019 Bormio | Rongrong Ma |

| 2019 IIT Indore | Zebo Tang |

| 2019 QCD Moriond | Petr Chaloupka |

| 2019 APS April Meeting | Sooraj Radhakrishnan |

| 2019 QWG | Zebo Tang |

| 2019 FAIRness | Zebo Tang |

| 2019 SQM | Petr Chaloupka |

| 2019 AUM | Sooraj Radhakrishnan |

Presentations

#9997# WWND2010

Jan 2-9, 2010 Winter Workshop on Nuclear Dynamics (Ocho Rios, Jamaica)

- Manuel Calderon: Quarkonia in STAR: Results and Future Plans

- Sarah LaPointe: Heavy Flavor Measurements in STAR and Future Measurements Using the HFT

#9998# DNP/JPS 2009 meeting

Oct. 13-17, 2009 DNP/JPS 2009 meeting (Big Island, Hawaii)

- Daniel Kikola: J/psi production in Au+Au and Cu+Cu collisions at sqrt(sNN) = 200 GeV at STAR

- Chris Powell: Low pT J/psi production in d+Au collisions at sqrt(sNN) = 200 GeV in STAR

- Rosi Reed: Upsilon production in p+p, d+Au, Au+Au collisions at 200 GeV in STAR

- Barbara Trzeciak (poster): Production of high pT J/psi in p+p collisions at sqrt(s) = 200 GeV in STAR

#9999# SQM 2009 meeting

Sept. 27-Oct. 2, 2009 SQM 2009 meeting (Buzios, Brazil)

Proceedings

HF PWG QM2011 analysis topics

Random list of collected topics for HF PWG QM2011 (as 10.8.2010)

Gang Wang: NPE v2 and possible NPE-h correlation

based on 200 GeV data

Wenqin Xu: Non-photonic electron spectrum in available Run10 AuAu data, and calculate the R_AA

Rosi Reed: Upsilon RAA in the 200 GeV

Yifei,David,Xin: Charm hadron measurement via the hadronic decays in both Run9 p+p and

Run10 AuAu 200 GeV collisions

Zebo Tang: High-pT J/psi spectra and correlations in run9 p+p and its R_AA in run10 200GeV Au+Au

Xin Li/ Mustafa Mustafa: Run09 p+p and Run10 Au+Au NPE cross section.

Matt Cervantes: Upsilon+hadron correlations

Chris Powell: low pT J/Psi in run 10 200GeV Au+Au to obtain R_AA and polarization measurement

Barbara Trzeciak: J/psi polarization with large

statistic p+p sample (run 9).

HF PWG Preliminary plots

This page collects the preliminary plots approved by the HF PWG.

1) All the preliminary plots MUST contain a "STAR Preliminary" label.

2) Please include at least pdf and png versions for the figures

3) Where to put the data points: it is recommended to put the data point at the x position whose yield is equal to the averge yield of the bin.

-

Rongrong presented a couple of slides at the PWGC meeting: http://www.star.bnl.gov/protected/lfspectra/marr/Analysis/PlaceDataPoints.pdf.

- See also the classic paper by Lafferty and Wyatt, NIM A355 (1995) 541-547 or see http://inspirehep.net/record/374024.

Open Heavy Flavor

| Year | System | Physics figures | First shown | Link to figures |

| 2014+2016 | Au+Au @ 200 GeV | HFT: D+/- RAA | 2020 HP | plots |

| 2014+2016 | Au+Au @ 200 GeV | HFT: Ds+/- spectra, ratio | 2019 QM |

plots |

| 2016 | Au+Au @ 200 GeV | HFT: D+/- RAA | 2018 QM | plots |

| 2016 | d+Au @ 200 GeV | HFT: D0 | 2018 QM | plots |

| 2014 | Au+Au @ 200 GeV | HFT: D*/D0 ratio | 2018 QM | plots |

| 2014+2016 | Au+Au @ 200 GeV | HFT: D0 v1 | 2018 QM | plots |

| 2014+2016 | Au+Au @ 200 GeV | HFT: non-prompt Jpsi | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | HFT: non-prompt D0 | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | HFT: B/D->e | 2017 QM | plots |

| 2014 2014+2016 |

Au+Au @ 200 GeV | HFT: Lc/D0 Ds/D0 ratio HFT: Lc/D0ratio HFT: Lc/D0 Ds/D0 vs ALICE |

2017 QM 2018 QM 2019 Moriond |

plots plots plots |

| 2014 | Au+Au @ 200 GeV | HFT: Ds RAA and v2 | 2017 CPOD | plots |

| 2014 | Au+Au @ 200 GeV | HFT: D+/- | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | HFT: D0 v3 | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | D0-hadron correlation | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | HFT: D0 RAA HFT: D0 RAA HFT: D0 RAA and v2 |

2019 SQM 2018 QM |

|

Quarkonium

| Year | System | Physics figures | First shown | Link to figures |

| 2018 | isobar @ 200 GeV | Minimum Bias: Jpsi RAA | 2022 QM | plots slides (USTC) slides (UIC) sildes (combined) |

| 2015 | p+p @ 200 GeV | Dimuon: Jpsi with jet activity | 2022 QM | plots slides |

| 2014 | Au+Au @ 200 GeV | Dimuon: Jpsi RAA, low pT | 2022 QM | plots slides |

| 2017 | Au+Au @ 54.4 GeV | Minimum Bias: Jpsi RAA | 2021 SQM | plots slides |

| 2011 | p+p @ 500 GeV | BEMC: Jpsi in jet | 2020 HP | plots |

| 2015 | p+Au @ 200 GeV | BEMC: Jpsi RpA | 2020 HP | plots |

| 2016 2014 2011 |

Au+Au @ 200 GeV | MTD/HT: Upsilon RAA | 2018 QM |

plots |

| 2015 | p+p, p+Au @ 200 GeV | MTD: Jpsi cross-section, RpA | 2017 QM | plots |

| 2015 | p+p @ 200 GeV | MTD: Jpsi polarization | 2017 PANIC | plots |

| 2015 | p+p, p+Au @ 200 GeV | BEMC: Upsilon RpAu | 2017 QM | plots |

| 2014 | Au+Au @ 200 GeV | MTD: Jpsi RAA, v2, Upsilon ratio | 2015 QM 2016 sQM |

plots |

| 2013 | p+p @ 500 GeV | MTD: Jpsi yield vs. event activity |

2015 HP |

plots |

| 2013 | p+p @ 500 GeV | MTD: Jpsi cross-section | 2016 sQM | plots |

| 2012 | U+U @ 193 GeV | MB: low-pT Jpsi excess | 2016 sQM | plots |

| 2012 | U+U @ 193 GeV | MB/BEMC: Jpsi v2 | 2017 QM | plots |

| 2012 | p+p @ 200 GeV | MB/BEMC: Jpsi cross-section, event activity BEMC: Jpsi polarization |

2016 QWG | plots plots |

| 2011 | Au+Au @ 200 GeV | MB/BEMC: Jpsi v2 | 2015 QM | plots |

| 2011 | Au+Au @ 200 GeV | MB: low-pT Jpsi excess | 2016 sQM | plots |

| 2011 | p+p @ 500 GeV | BEMC: Jpsi cross-section | WWND | plots |

| 2011 | p+p @ 500 GeV | HT: Upsilon cross-section HT: Upsilon event activity |

2017 QM 2018 PWRHIC |

plots |

Electrons from Heavy Flavor Decay

| Year | System | Physics figures | First shown | Link to figures |

| 2017 | Au+Au @ 27 & 54.4 GeV | NPE v2 | 2020 HP | plots |

| 2014+2016 | Au+Au @ 200 GeV | HF electron: fraction, RAA, double ratio | 2019 QM | plots |

| 2014 | Au+Au @ 200 GeV | NPE cross-section; RAA (without HFT) | 2017 QM | plots |

| 2012 | p+p @ 200 GeV | NPE-hadron correlation, b fraction | 2016 Santa Fe | plots |

| 2012 | p+p @ 200 GeV | NPE cross-section; udpated RAA | 2015 QM | plots |

Heavy Quark Physics in Nucleus-Nucleus Collisions Workshop at UCLA

We will organize a workshop on heavy quark physics in nucleus-nucleus collisions from January 22-24, 2009. The workshop will be hosted by the Department of Physics and Astronomy, University of California at Los Angeles.

NPE Analyses

PicoDst production requests

This page collects the picoDst (re)production requested made by the HF PWG| Priority | Dataset | Data stream | Special needs | Chain option | Production status | Comments |

| 0 | production_pAu200_2015 | st_physics st_ssdmb |

BEMC | PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:6 | Done with SL18b | Needed for QM2018 |

| 2 | dAu200_production_2016 | st_physics | BEMC, FMS | PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:6 | Benefit QM2018 analysis | |

| 3 | production_pAu200_2015 | st_mtd | BEMC | PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:6 | ||

| 4 | AuAu200_production_2016 AuAu200_production2_2016 |

st_physics | BEMC, FMS | PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:3 | ||

| 5 | AuAu_200_production_2014 AuAu_200_production_low_2014 AuAu_200_production_mid_2014 AuAu_200_production_high_2014 |

st_mtd |

BEMC | mtdMatch, y2014a, PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:3 | ||

| 1 | AuAu_200_production_low_2014 AuAu_200_production_mid_2014 |

st_physics | BEMC | mtdMatch, y2014a, PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:3 | ||

| 6 | production_pp200long_2015 production_pp200long2_2015 production_pp200long3_2015 production_pp200trans_2015 |

st_physics st_ssdmb |

BEMC | mtdMatch, y2015c,PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:6 | ||

| production_pp200_2015 | st_mtd | mtdMatch, y2015c, PicoVtxMode:PicoVtxVpdOrDefault, TpcVpdVzDiffCut:6, PicoCovMtxMode:PicoCovMtxSkip |

Upsilon Analysis

Links related to Upsilon Analysis.

- Upsilon paper page from Pibero.

- Technical Note is located in Attachments to this page.

- TeX source (saved as .txt so drupal doesn't complain) for Technical Note is also in Attachments.

- Upsilon paper drafts are found below.

Combinatorial background subtraction for e+e- signals

It is common to use the formula 2*sqrt(N++ N--) to model the combinatorial background when studying e+e- signals, e.g. for J/psi and Upsilon analyses. We can obtain this formula in the following way.

Assume we have an event in which there are Nsig particles that decay into e+e- pairs. Since each decay generates one + and one - particle, the total number of unlike sign combinations we can make is N+- = Nsig2. To obtain the total number of pairs that are just random combinations, we subtract the number of pairs that came from a real decay. So we have

N+-comb=Nsig2-Nsig=Nsig(Nsig-1)

For the number of like-sign combinations, for example for the ++ combinations, there will be a total of (Nsig-1) pairs that can be made by the first positron, then (Nsig-2) that can be made by the second positron, and so on. So the total number of ++ combinations will be

N++ = (Nsig-1) + (Nsig - 2) + ... + (Nsig - (Nsig-1)) + (Nsig-Nsig)

Where there are Nsig terms. Factoring, we get:

N++ = Nsig2 - (1+2+...+Nsig) = Nsig2 - (Nsig(Nsig+1))/2 = (Nsig2 - Nsig)/2=Nsig(Nsig-1)/2

Similarly,

N-- = Nsig(Nsig-1)/2

If there are no acceptance effects, either the N++ or the N-- combinations can be used to model the combinatorial background by simply multiplying them by 2. The geometric average also works:

2*sqrt(N++ N--) = 2*Nsig(Nsig-1)/sqrt(4) = Nsig(Nsig-1) = N+-comb.

The geometric average can also work for cases where there are acceptance differences, with the addition of a multiplicative correction factor R to take the relative acceptance of ++ and -- pairs into account. So the geometric average is for the case R=1 (similar acceptance for ++ and --).

Estimating Acceptance Uncertainty due to unknown Upsilon Polarization

The acceptance of Upsilon decays depends on the polarization of the Upsilon. We do not have enough statistics to measure the polarization. It is also not clear even at higher energies if there is a definite pattern: there are discrepancies between CDF and D0 about the polarization of the 1S. The 2S and 3S show different polarizations trends than the 1S. So for the purposes of the paper, we will estimate the uncertainty due to the unknown Upsilon polarization using two extremes: fully transverse and fully longitudinal polarization. This is likely an overestimate, but the effect is not the dominant source of uncertainty, so for the paper it is good enough.

There are simulations of the expected acceptance for the unpolarized, longitudinal and transverse cases done by Thomas:

http://www.star.bnl.gov/protected/heavy/ullrich/ups-pol.pdf

Using the pT dependence of the acceptance for the three cases (see page 9 of the PDF) we must then apply it to our measured upsilons. We do this by obtaining the pT distribution of the unlike sign pairs (after subtracting the like-sign combinatorial background) in the Upsilon mass region and with |y|<0.5. This is shown below as the black data points.

.gif)

The data points are fit with a function of the form A pT2 exp(-pT/T), shown as the solid black line (fit result: A=18.0 +/- 8.3, T = 1.36 +/- 0.16 GeV/c). We then apply the correction for the three cases, shown in the histograms (with narrow line width). The black is the correction for the unpolarized case (default), the red is for the longitudinal and the blue is for the transverse case. The raw yield can be obtained by integrating the histogram or the function. These give 89.7 (histo) and 89.9 (fit), which given the size of the errors is a reasonable fit. We can obtain the acceptance corrected yield (we ignore all other corrections here) by integrating the histograms, which give:

- Unpol: 158.9 counts

- Trans: 156.4 counts

- Longi: 163.6 counts

We estimate from this that fully transverse Upsilons should have a yield lower by -1.6% and fully longitudinal Upsilons should have a higher yield by 2.9%. We use this as a systematic uncertainty in the acceptance correction.

In addition, the geometrical acceptance can vary in the real data due to masked towers which are not accounted for in the simulation. We estimate that this variation is of order 25 towers (which is used in the 2007 and 2008 runs as the number of towers allowed to be dynamically masked). This adds 25/4800 = 0.5% to the uncertainty in the geometrical acceptance.

Estimating Drell-Yan contribution from NLO calculation.

Ramona calculated the cross section for DY at NLO and sent the data points to us. These were first shown in the RHIC II Science Workshop, April 2005, in her Quarkonium talk and her Drell-Yan (and Open heavy flavor) talk.

The total cross section (integral of all mass points in the region |y|<5) is 19.6 nb (Need to check if there is an additional normalization with Ramona, but the cross section found by PHENIX using Pythia is 42 nb with 100% error bar, so a 19.6 nb cross section is certainly consistent with this). She also gave us the data in the region |y|<1, where the cross section is 5.24 nb. The cross section as a function of invariant mass in the region |y|<1 is shown below.

.gif)

The black curve includes a multiplication with an error function (as we did for the b-bbar case) and normalized such that the ratio between the blue and the black line is 8.5% at 10 GeV/c to account for the efficiency and acceptance found in embedding for the Upsilon 1s. The expected counts in the region 8-11 are 20 +/- 3, where the error is given by varying the parameters of the error function within its uncertainty. The actual uncertainty is likely bigger than this if we take into account the overall normalization uncertainty in the calculation.

I asked Ramona for the numbers in the region |y|<0.5, since that is what we use in STAR. The corresponding plot is below.

.gif)

The integral of the data points gives 2.5 nb. The integral of the data between 7.875 and 11.125 GeV/c2 is 42.30 pb. The data is parameterized by the function shown in blue. The integral of the function in the same region gives 42.25 pb, so it is quite close to the calculation. In the region 8<m<11 GeV/c2, the integral of the funciton is 38.6 pb. The expected counts with this calculation are 25 for both triggers.

Response to PRD referee comments on Upsilon Paper

First Round of Referee Responses

Click here for second round.

-------------------------------------------------------------------------

Report of Referee A:

-------------------------------------------------------------------------

This is really a well-written paper. It was a pleasure to read, and I

have only relatively minor comments.

We thank the reviewer for careful reading of our paper and for providing

useful feedback. We are pleased to know that the reviewer finds the

paper to be well written. We have incorporated all the comments into a

new version of the draft.

Page 3: Although there aren't published pp upsilon cross sections there

is a published R_AA and an ee mass spectrum shown in E. Atomssa's QM09

proceedings. This should be referenced.

We are aware of the PHENIX results from

E. Atomssa, Nucl.Phys.A830:331C-334C,2009

and three other relevant QM proceedings:

P. Djawotho, J.Phys.G34:S947-950,2007

D. Das, J.Phys.G35:104153,2008

H. Liu, Nucl.Phys.A830:235C-238C,2009

However, it is STAR's policy to not reference our own preliminary data on the manuscript we submit for publication on a given topic, and by extension not to reference other preliminary experimental data on the same topic either.

Page 4, end of section A: Quote trigger efficiency.

The end of Section A now reads:

"We find that 25% of the Upsilons produced at

midrapidity have both daughters in the BEMC acceptance and at least one

of them can fire the L0 trigger. The details of the HTTP

trigger efficiency and acceptance are discussed in Sec. IV"

Figure 1: You should either quote L0 threshold in terms of pt, or plot

vs. Et. Caption should say L0 HT Trigger II threshold.

We changed the figure to plot vs. E_T, which is the quantity that is

measured by the calorimeter. For the electrons in the analysis, the

difference between p_T and E_T is negligible, so the histograms in

Figure 1 are essentially unchanged. We changed the caption as suggested.

Figures 3-6 would benefit from inclusion of a scaled minimum bias spectrum

to demonstrate the rejection factor of the trigger.

We agree that it is useful to quote the rejection factor of the trigger.

We prefer to do so in the text. We added to the description of Figure

3 the following sentence: "The rejection factor achieved with Trigger

II, defined as the number of minimum bias events counted by the trigger scalers

divided by the number events where the upsilon trigger was issued, was

found to be 1.8 x 105."

Figure 9: There should be some explanation of the peak at E/p = 2.7

We investigated this peak, and we traced it to a double counting error.

The problem arose due to the fact that the figure was generated from

a pairwise Ntuple, i.e. one in which each row represented a pair of

electrons (both like-sign and unlike-sign pairs included), each with a

value of E and p, instead of a single electron Ntuple. We had plotted

the value of E/p for the electron candidate which matched all possible

high-towers in the event. The majority of events have only one candidate

pair, so there were relatively few cases where there was double

counting. We note that for pairwise quantities such as opening angle and

invariant mass, each entry in the Ntuple is still different. However,

the case that generated the peak at E/p = 2.7 in the figure was traced

to one event that had one candidate positron track, with its

corresponding high-tower, which was paired with several other electron

and positron candidates. Each of these entries has a different invariant

mass, but the same E/p for the first element of the pair. So its entry

in Figure 9, which happened to be at E/p=2.7, was repeated several times

in the histogram. The code to generate the data histogram in Figure 9

has now been corrected to guarantee that the E/p distribution is made

out of unique track-cluster positron candidates. The figure in the paper

has been updated. The new histogram shows about 5 counts in that

region. As a way to gauge the effect the double counting had on the

E/p=1 area of the figure, there were about 130 counts in the figure at

the E/p=1 peak position in the case with the double-counting error, and

there are about 120 counts in the peak after removing the

double-counting. The fix leads to an improved match between the data

histogram and the Monte Carlo simulations. We therefore leave the

efficiency calculation, which is based on the Monte Carlo Upsilon

events, unchanged. The pairwise invariant mass distribution from which

the main results of the paper are obtained is unaffected by this. We

thank the reviewer for calling our attention to this peak, which allowed

us to find and correct this error.

-------------------------------------------------------------------------

Report of Referee B:

-------------------------------------------------------------------------

The paper reports the first measurement of the upsilon (Y) cross-section

in pp collisions at 200 GeV. This is a key piece of information, both

in the context of the RHIC nucleus-nucleus research program and in its

own right. The paper is rather well organized, the figures are well

prepared and explained, and the introduction and conclusion are clearly

written. However, in my opinion the paper is not publishable in its

present form: some issues, which I enumerate below, should be addressed

by the authors before that.

The main problems I found with the paper have to do with the estimate

of the errors. There are two issues:

The first: the main result is obtained by integrating the counts above

the like-sign background between 8 and 11 GeV in figure 10, quoted to

give 75+-20 (bottom part of table III). This corresponds the sum Y +

continuum. Now to get the Y yield, one needs to subtract an estimated

contribution from the continuum. Independent of how this has been

estimated, the subtraction can only introduce an additional absolute

error. Starting from the systematic error on the counts above background,

the error on the estimated Y yield should therefore increase, whereas

in the table it goes down from 20 to 18.

Thanks for bringing this issue to our attention. It is true that when

subtracting two independently measured numbers, the statistical

uncertainty in the result of the subtraction can only be larger than the

absolute errors of the two numbers, i.e. if C = A - B, and error(A) and

error(B) are the corresponding errors, then the statistical error on C

would be sqrt(error(B)2+error(A)2) which would yield a larger absolute

error than either error(A) or error(B). However, the extraction of the

Upsilon yield in the analysis needs an estimate of the continuum

contribution, but the key difference is that it is not obtained by an

independent measurement. The two quantities, namely the Upsilon yield

and the continuum yield, are obtained ultimately from the same source:

the unlike sign dielectron distribution, after the subtraction of the

like-sign combinatorial background. This fact causes an

anti-correlation between the two yields, the larger the continuum yield,

the smaller the Upsilon yield. So one cannot treat the subtraction of

the continuum yield and the Upsilon yield as the case for independent

measurements. This is why in the paper we discuss that an advantage of

using the fit includes taking automatically into account the correlation

between the continuum and the Upsilon yield. So the error that is

quoted in Table III for all the "Upsilon counts", i.e. the Fitting

Results, the Bin-by-bin Counting, and the Single bin counting, is quoted

by applying the percent error on the Upsilon yield obtained from the

fitting method, which is the best way to take the anti-correlation

between the continuum yield and the Upsilon yield into account. We will

expand on this in section VI.C, to help clarify this point. We thank the referee for

alerting us.

The second issue is somewhat related: the error on the counts (18/54, or

33%) is propagated to the cross section (38/114) as statistical error,

and a systematic error obtained as quadratic sum of the systematic

uncertainties listed in Table IV is quoted separately. The uncertainty on

the subtraction of the continuum contribution (not present in Table IV),

has completely disappeared, in spite of being identified in the text as

"the major contribution to the systematic uncertainty" (page 14, 4 lines

from the bottom).

This is particularly puzzling, since the contribution of the continuum

is even evaluated in the paper itself (and with an error). This whole

part needs to be either fixed or, in case I have misunderstood what the

authors did, substantially clarified.

We agree that this can be clarified. The error on the counts (18/54, or

33%) includes two contributions:

1) The (purely statistical) error on the unlike-sign minus like sign

subtraction, which is 20/75 or 26%, as per Table III.

2) The additional error from the continuum contribution, which we

discuss in the previous comment, and is not just a statistical sum of

the 26% statistical error and the error on the continuum, rather it must

include the anti-correlation of the continuum yield and the Upsilon

yield. The fit procedure takes this into account, and we arrive at the

combined 33% error.

The question then arises how to quote the statistical and systematic

uncertainties. One difficulty we faced is that the subtraction of the

continuum contribution is not cleanly separated between statistical and

systematic uncertainties. On the one hand, the continuum yield of 22

counts can be varied within the 1-sigma contours to be as low as 14 and

as large as 60 counts (taking the range of the DY variation from Fig.

12). This uncertainty is dominated by the statistical errors of the

dielectron invariant mass distribution from Fig. 11. Therefore, the

dominant uncertainty in the continuum subtraction procedure is

statistical, not systematic. To put it another way, if we had much

larger statistics, the uncertainty in the fit would be much reduced

also. On the other hand, there is certainly a model-dependent component

in the subtraction of the continuum, which is traditionally a systematic

uncertainty. We chose to represent the combined 33% percent error as a

statistical uncertainty because a systematic variation in the results

would have if we were to choose, say, a different model for the continuum

contribution, is smaller compared to the variation allowed by the

statistical errors in the invariant mass distribution. In other words,

the reason we included the continuum subtraction uncertainty together in

the quote of the statistical error was that its size in the current

analysis ultimately comes from the statistical precision of our

invariant mass spectrum. We agree that this is not clear in the text,

given that we list this uncertainty among all the other systematic

uncertainties, and we have modified the text to clarify this. Perhaps a

more appropriate way to characterize the 33% error is that it includes

the "statistical and fitting error", to highlight the fact that in

addition to the purely statistical errors that can be calculated from

the N++, N-- and N+- counting statistics, this error includes the

continuum subtraction error, which is based on a fit that takes into

account the statistical error on the invariant mass spectrum, and the

important anti-correlation between the continuum yield and the Upsilon

yield. We have added an explanation of these items in the updated draft of

the paper, in Sec VI.C.

There are a few other issues which in my opinion should be dealt with

before the paper is fit for publication:

- in the abstract, it is stated that the Color Singlet Model (CSM)

calculations underestimate the Y cross-section. Given that the discrepancy

is only 2 sigma or so, such a statement is not warranted. "Seems to

disfavour", could perhaps be used, if the authors really insist in making

such a point (which, however, would be rather lame). The statement that

CSM calculations underestimate the cross-section is also made in the

conclusion. There, it is even commented, immediately after, that the

discrepancy is only a 2 sigma effect, resulting in two contradicting

statements back-to-back.

Our aim was mainly to be descriptive. To clarify our intent, the use of

"underestimate" is in the sense that if we move our datum point lower by the

1-sigma error of our measurement and this value is higher than the top

end of the CSM calculation. We quantify this by saying that the

size of the effect is about 2-sigma. We think that the concise statement

"understimate by 2sigma" objectively summarizes the observation, without

need to use more subjective statements, and we modified

the text in the abstract and conclusion accordingly.

- on page 6 it is stated that the Trigger II cuts were calculated offline

for Trigger I data. However, it is not clear if exactly the same trigger

condition was applied offline on the recorded values of the original

trigger input data or the selection was recalculated based on offline

information. This point should be clarified.

Agreed. We have added the sentence: "The exact same trigger condition was

applied offline on the recorded values of the original trigger input data."

- on page 7 it is said that PYTHIA + Y events were embedded in zero-bias

events with a realistic distribution of vertex position. Given that

zero-bias events are triggered on the bunch crossing, and do not

necessarily contain a collision (and even less a reconstructed vertex),

it is not clear what the authors mean.

We do not know if the statement that was unclear is how the realsitic

vertex distribution was obtained or if the issue pertained to where the analyzed collision comes from.

We will try to clarify both instances. The referee has correctly understood

that the zero-bias events do not necessarily contain a collision.

That is why the PYTHIA simulated event is needed. The zero-bias events

will contain additional effects such as out of time pile-up in the Time

Projection Chamber, etc. In other words, they will contain aspects of

the data-taking environment which are not captured by the PYTHIA events.

That is what is mentioned in the text:

"These zero-bias events do not always have a collision in the given

bunch crossing, but they include all the detec-

tor effects and pileup from out-of-time collisions. When

combined with simulated events, they provide the most

realistic environment to study the detector e±ciency and

acceptance."

The simulated events referred to in this text are the PYTHIA events, and

it is the simulated PYTHIA event, together with the Upsilon, that

provides the collision event to be studied for purposes of acceptance

and efficiency. In order to help clarify our meaning, we have also added

statements to point out that the dominant contribution to the TPC occupancy

is from out of time pileup.

Regarding the realistic distribution of vertices,

this is obtained from the upsilon triggered events (not from the zero-bias events, which

have no collision and typically do not have a found vertex, as the referee correctly

interpreted). We have added a statement to point this out and hopefully this will make

the meaning clear.

- on page 13 the authors state that they have parametrized the

contribution of the bbar contribution to the continuum based on a PYTHIA

simulation. PYTHIA performs a leading order + parton shower calculation,

while the di-electon invariant mass distribution, is sensitive to

next-to-leading order effects via the angular correlation of the the two

produced b quarks. Has the maginuted of this been evaluated by comparing

PYTHIA results with those of a NLO calculation?

We did not do so for this paper. This is one source of systematic

uncertainty in the continuum contribution, as discussed in the previous

remarks. For this paper, the statistics in the dielectron invariant

mass distribution are such that the variation in the shape of the b-bbar

continuum between LO and NLO would not contribute a significant

variation to the Upsilon yield. This can be seen in Fig. 12, where the

fit of the continuum allows for a removal of the b-bbar yield entirely,

as long as the Drell-Yan contribution is kept. We expect to make such

comparisons with the increased statistics available in the run 2009

data, and look forward to including NLO results in the next analysis.

- on page 13 the trigger response is emulated using a turn-on function

parametrised from the like-sign data. Has this been cross-checked with a

simulation? If yes, what was the result? If not, why?

We did not cross check the trigger response on the continuum with a

simulation, because a variation of the turn-on function parameters gave

a negligible variation on the extracted yields, so it was not deemed

necessary. We did use a simulation of the trigger response on simulated

Upsilons (see Fig. 6, dashed histogram).

Finally, I would like to draw the attention of the authors on a few less

important points:

- on page 6 the authors repeat twice, practically with the same words,

that the trigger rate is dominated by di-jet events with two back-to-back

pi0 (once at the top and once near the bottom of the right-side column).

We have changed the second occurrence to avoid repetitiveness.

- all the information of Table I is also contained in Table 4; why is

Table I needed?

We agree that all the information in Table I is contained in Table 4

(except for the last row, which shows the combined efficiency for the

1S+2S+3S), so it could be removed. We have included it for convenience

only: Table I helps in the discussion of the acceptance and

efficiencies, and gives the combined overall correction factors, whereas

the Table IV helps in the discussion of the systematic uncertainties of

each item.

- in table IV, the second column says "%", which is true for the

individual values of various contributions to the systematic uncertainty,

but not for the combined value at the bottom, which instead is given

in picobarn.

Agreed. We have added the pb units for the Combined error at the bottom of the

table.

- in the introduction (firts column, 6 lines from the bottom) the authors

write that the observation of suppression of Y would "strongly imply"

deconfinement. This is a funny expression: admitting that such an

observation would imply deconfinement (which some people may not be

prepared to do), what's the use of the adverb "strongly"? Something

either does or does not imply something else, without degrees.

We agree that the use of "imply" does not need degrees, and we also

agree that some people might not be prepared to admit that such an

observation would imply deconfinement. We do think that such an

observation would carry substantial weight, so we have rephrased that

part to "An observation of suppression of Upsilon

production in heavy-ions relative to p+p would be a strong argument

in support of Debye screening and therefore of

deconfinement"

We thank the referee for the care in reading the manuscript and for all

the suggestions.

Second Round of Referee Responses

> I think the paper is now much improved. However,

> there is still one point (# 2) on which I would like to hear an

> explanation from the authors before approving the paper, and a

> couple of points (# 6 and 7) that I suggest the authors should

> still address.

> Main issues:

> 1) (errors on subtraction of continuum contribution)

> I think the way this is now treated in the paper is adequate

> 2) (where did the subtraction error go?)

> I also agree that the best way to estimate the error is

> to perform the fit, as is now explicitly discussed in the paper.

> Still, I am surprised, that the additional error introduced by

> the subtraction of the continuum appears to be negligible

> (the error is still 20). In the first version of the paper there

> was a sentence – now removed – stating that the uncertainty

> on the subtraction of the continuum contribution was one

> of the main sources of systematic uncertainty!

> -> I would at least like to hear an explanation about

> what that sentence

> meant (four lines from the bottom of page 14)

Response:

Regarding the size of the error:

The referee is correct in observing that the error before

and after subtraction is 20, but it is important to note

that the percentage error is different. Using the numbers

from the single bin counting, we get

75.3 +/- 19.7 for the N+- - 2*sqrt(N++ * N--),

i.e. the like-sign subtracted unlike-sign signal. The purely

statistical uncertainty is 19.7/75.3 = 26%. When we perform

the fit, we obtain the component of this signal that is due

to Upsilons and the component that is due to the Drell-Yan and

b-bbar continuum, but as we discussed in our previous response,

the yields have an anti-correlation, and therefore there is no

reason why the error in the Upsilon yield should be larger in

magnitude than the error of the like-sign subtracted unlike-sign

signal. However, one must note that the _percent_ error does,

in fact, increase. The fit result for the upsilon yield alone

is 59.2 +\- 19.8, so the error is indeed the same as for the

like-sign subtracted unlike-sign signal, but the percent error

is now larger: 33%. In other words, the continuum subtraction

increases the percent error in the measurement, as it should.

Note that if we one had done the (incorrect) procedure of adding

errors in quadrature, using an error of 14.3 counts for the

continuum yield and an error of 19.7 counts for the

background-subtracted unlike-sign signal, the error on the

Upsilon yield would be 24 counts. This is a relative error of 40%, which

is larger than the 33% we quote. This illustrates the effect

of the anti-correlation.

Regarding the removal of the sentence about the continuum

subtraction contribution to the systematic uncertainty:

During this discussion of the continuum subtraction and

the estimation of the errors, we decided to remove the

sentence because, as we now state in the paper, the continuum

subtraction uncertainty done via the fit is currently

dominated by the statistical error bars of the data in Fig. 11,

and is therefore not a systematic uncertainty. A systematic

uncertainty in the continuum subtraction would be estimated,

for example, by studying the effect on the Upsilon yield that

a change from the Leading-Order PYTHIA b-bbar spectrum we use

to a NLO b-bbar spectrum, or to a different Drell-Yan parameterization.

As discussed in the response to point 6), a complete

removal of the b-bbar spectrum, a situation allowed by the fit provided

the Drell-Yan yield is increased, produces a negligible

change in the Upsilon yield. Hence, systematic variations

in the continuum do not currently produce observable changes

in the Upsilon yield. Varying the continuum yield

of a given model within the statistical error bars does, and

this uncertainty is therefore statisitcal. Therefore, we removed the

sentence stating that the continuum subtraction is one

of the dominant sources of systematic uncertainty because

in the reexamination of that uncertainty triggered by the

referee's comments, we concluded that it is more appropriate

to consider it as statistical, not systematic, in nature.

We have thus replaced that sentence, and in its stead

describe the uncertainty in the cross

section as "stat. + fit", to draw attention to the fact that

this uncertainty includes the continuum subtraction uncertainty

obtained from the fit to the data. The statements in the paper

in this respect read (page 14, left column):

It should be noted that

with the statistics of the present analysis, we find that the

allowed range of variation of the continuum yield in the fit is

still dominated by the statistical error bars of the invariant mass

distribution, and so the size of the 33% uncertainty is mainly

statistical in nature. However, we prefer to denote

the uncertainty as “stat. + fit” to clarify that it includes the estimate of the anticorrelation

between the Upsilon and continuum yields obtained

by the fitting method. A systematic uncertainty due to

the continuum subtraction can be estimated by varying

the model used to produce the continuum contribution

from b-¯b. These variations produce a negligible change in

the extracted yield with the current statistics.

We have added our response to point 6) (b-bbar correlation systematics)

to this part of the paper, as it pertains to this point.

> Other issues:

> 3) (two sigma effect)

> OK

> 4) (Trigger II cuts)

> OK

> 5) (embedding)

> OK

> 6) (b-bbar correlation)

> I suggest adding in the paper a comment along the lines of what

> you say in your reply

> 7) (trigger response simulation)

> I suggest saying so explicitly in the paper

Both responses have been added to the text of the paper.

See page 13, end of col. 1, (point 7) and page 14, second column (point 6).

> Less important points:

> 8) (repetition)

> OK

> 9) (Table I vs Table IV)

> OK…

> 10) (% in last line of Table IV)

> OK

> 11) (“strongly imply”)

> OK

We thank the referee for the care in reading the manuscript, and look forward to

converging on these last items.

Upsilon Analysis in d+Au 2008

Upsilon yield and nuclear modification factor in d+Au collisions at sqrt(s)=200 GeV.

PAs: Anthony Kesich, and Manuel Calderon de la Barca Sanchez.

- Dataset QA

- Trigger ID, runs

- Trigger ID = 210601

- ZDC East signal + BEMC HT at 18 (Et>4.3 GeV) + L2 Upsilon

- Total Sampled Luminosity: 32.66 nb^-1; 1.216 Mevents

- http://www.star.bnl.gov/protected/common/common2008/trigger2008/lum_pertriggerid_dau2008.txt

- Trigger ID = 210601

- Run by Run QA

- Integrated Luminosity estimate

- Systematic Uncertainty

- Trigger ID, runs

- Acceptance (Check with Kurt Hill)

- Raw pT, y distribution of Upsilon

- Accepted pT, y distribution of Upsilons

- Acceptance

- Raw pT, eta distribution of e+,e- daughters

- Accepted pT, eta distribution of e+,e- daughters

- Comparison plots between single-electron embedding, Upsilon embedding

- L0 Trigger

- DSM-ADC Distribution (data, i.e. mainly background)

- DSM-ADC Distribution (Embedding) For accepted Upsilons, before and after L0 trigger selection

- Systematic Uncertainty (Estimate of possible calibration and resolution systematic offsets).

- "highest electron/positron Et" distribution from embedding (Accepted Upsilons, before and after L0 trigger selection)

- L2 Trigger

- E1 Cluster Et distribution (data, i.e. mainly background)

- E1 Cluster Et distribution (embedding, L0 triggered, before and after all L2 trigger cuts)

- L2 pair opening angle (cos theta) data (i.e. mainly background)

- L2 pair opening angle (cos theta) embedding. Needs map of (phi,eta)_MC to (phi,eta)_L2 from single electron embedding. Then a map from r1=(phi,eta, R_emc) to r1=(x,y,z) so that one can do cos(theta^L2) = r1.dot(r2)/(r1.mag()*r2.mag()). Plot cos theta distribution for L0 triggered events, before and after all L2 trigger cuts. (Kurt)

- L2 pair invariant mass from data (i.e. mainly background)

- L2 pair invariant mass from embedding. Needs simulation as for cos(theta), so that one can do m^2 = 2 * E1 * E2 * (1 - cos(theta)) where E1 and E2 are the L2 cluster energies. Plot the invariant mass distribution fro L0 triggered events, before and after all L2 trigger cuts. (Check with Kurt)

- PID

- dE/dx

- dE/dx vs p for the Upsilon triggered data

- nsigma_dE/dx calibration of means and sigmas (done by C. Powell for his J/Psi work)

- Cut optimization (Maximization of electron effective signal)

- Final cuts for use in data analysis

- E/p

- E/p distributions for various p bins

- Study of E calibration and resolution between data and embedding (for L0 Trigger systematic uncertainty)

- Resolution and comparison with embedding (for cut efficiency estimation)

- dE/dx

- Yield extraction

- Invariant mass distributions

- Unlike-sign and Like-sign inv. mass

- Like-sign subtracted inv. mass

- Crystal-Ball shapes from embedding/simulation. Crystal-ball parameters to be used in fit

- Fit to Like-sign subtracted inv. mass, using CB, DY, b-bbar

- Contour plot (1sigma and 2sigma) of b-bbar cross section vs. DY cross section

- Upsilon yield estimation and stat. + fit error

- Invariant mass distributions

- Cross section calculation.

- Yield, dN/dy

- Integrated luminosity (for 1/N_events, where N_events were the total events sampled by the L0 trigger)

- Efficiency (Numbers for each state, and cross-section-branching-ratio-weighted average)

- Uncertainty

- pt Distribution (invariant, i.e. 1/N_event 1/2pi, 1/pt dN/dpt dy) in |y|<0.5 vs pt) This might need one to do the CB, DY, bbbar fit in pt bins.

- Nuclear Modification Factor

- Estimation of <Npart> for the dataset, and uncertainty.

- Putting it all together: dN/dy in dAu, Npart, Luminosity (N_events), divided by the pp numbers (dsigma/dy, sigma_pp)

- Plot of R_dAu vs y, comparison with theory

- Plot of R_dAu vs Npart, together with Au+Au

- Plot of R_dAu vs pt. Try to do together with Au+Au (minbias, maybe in centrality bins, but maybe not enough stats)

Upsilon Analysis in p+p 2009

Upsilon cross-section in p+p collisions at sqrt(sNN) = 200 GeV, 2009 data.

PAs: Kurt Hill, Andrew Peterson, Gregory Wimsatt, Anthony Kesich, Rosi Reed, Manuel Calderon de la Barca Sanchez.

- Dataset QA (Andrew Peterson)

- Trigger ID, runs

- Run by Run QA

- Integrated Luminosity estimate

- Systematic Uncertainty

- Acceptance (Kurt Hill)

- Raw pT, y distribution of Upsilon

- Accepted pT, y distribution of Upsilons

- Acceptance

- Raw pT, eta distribution of e+,e- daughters

- Accepted pT, eta distribution of e+,e- daughters

- Comparison plots between single-electron embedding, Upsilon embedding

- L0 Trigger

- DSM-ADC Distribution (data, i.e. mainly background) (Drew)

- DSM-ADC Distribution (Embedding) For accepted Upsilons, before and after L0 trigger selection

- Systematic Uncertainty (Estimate of possible calibration and resolution systematic offsets).

- "highest electron/positron Et" distribution from embedding (Accepted Upsilons, before and after L0 trigger selection)

- L2 Trigger

- E1 Cluster Et distribution (data, i.e. mainly background)

- E1 Cluster Et distribution (embedding, L0 triggered, before and after all L2 trigger cuts)

- L2 pair opening angle (cos theta) data (i.e. mainly background)

- L2 pair opening angle (cos theta) embedding. Needs map of (phi,eta)_MC to (phi,eta)_L2 from single electron embedding. Then a map from r1=(phi,eta, R_emc) to r1=(x,y,z) so that one can do cos(theta^L2) = r1.dot(r2)/(r1.mag()*r2.mag()). Plot cos theta distribution for L0 triggered events, before and after all L2 trigger cuts. (Kurt)

- L2 pair invariant mass from data (i.e. mainly background)

- L2 pair invariant mass from embedding. Needs simulation as for cos(theta), so that one can do m^2 = 2 * E1 * E2 * (1 - cos(theta)) where E1 and E2 are the L2 cluster energies. Plot the invariant mass distribution fro L0 triggered events, before and after all L2 trigger cuts. (Kurt)

- PID (Greg)

- dE/dx

- dE/dx vs p for the Upsilon triggered data

- nsigma_dE/dx calibration of means and sigmas

- Cut optimization (Maximization of electron effective signal)

- Final cuts for use in data analysis

- E/p

- E/p distributions for various p bins

- Study of E calibration and resolution between data and embedding (for L0 Trigger systematic uncertainty)

- Resolution and comparison with embedding (for cut efficiency estimation) (Kurt and Greg)

- dE/dx

- Yield extraction

- Invariant mass distributions

- Unlike-sign and Like-sign inv. mass (Drew)

- Like-sign subtracted inv. mass (Drew)

- Crystal-Ball shapes from embedding/simulation. (Kurt) Crystal-ball parameters to be used in fit (Drew)

- Fit to Like-sign subtracted inv. mass, using CB, DY, b-bbar.

- Contour plot (1sigma and 2sigma) of b-bbar cross section vs. DY cross section. (Drew)

- Upsilon yield estimation and stat. + fit error. (Drew)

- (2S+3S)/1S (Drew)

- Invariant mass distributions

- pT Spectra (Drew)

- Cross section calculation.

- Yield

- Integrated luminosity

- Efficiency (Numbers for each state, and cross-section-branching-ratio-weighted average)

- Uncertainty

- h+/h- Corrections

Upsilon Analysis in p+p 2009 data - Acceptance

- Acceptance (Kurt Hill) - Upsilon acceptance aproximated using a simulation that constructs Upsilons (flat in pT and y), lets them decay to e+e- pairs in the Upsilon's rest frame, and uses detector response functions generated from a single electron embedding to model detector effects. An in depth study of this method will also be included.

- Raw pT, y distribution of Upsilon

- Accepted pT, y distribution of Upsilons

- Acceptance

- Raw pT, eta distribution of e+,e- daughters

- Accepted pT, eta distribution of e+,e- daughters

- Comparison plots between single-electron embedding, Upsilon embedding

Upsilon Analysis in p+p 2009 data - Acceptance

- Acceptance (Kurt Hill)

- Raw pT, y distribution of Upsilon

- Accepted pT, y distribution of Upsilons

- Acceptance

- Raw pT, eta distribution of e+,e- daughters

- Accepted pT, eta distribution of e+,e- daughters

- Comparison plots between single-electron embedding, Upsilon embedding

Upsilon Paper: pp, d+Au, Au+Au

Page to collect the information for the Upsilon paper based on the analysis of

Anthony (4/24):

in data, the electrons were selected via 0<nSigE<3, R<0.02. For pt<5, we fit to 0<adc<303. For pt>5, 303<adc<1000.

In embedding, the only selections are the p range, R<0.02, and eleAcceptTrack. The embedding pt distro was reweighted to match the data.

Anthony (4/5): Added new Raa plot with comparison to strickland's supression models

Anthony (4/4): I attached some dAu cross section plots on this page. The eps versions are on nuclear. The cross sections are as follows:

all: 25.9±4.0 nb

0-2: 1.8±1.7 nb

2-4: 10.9±2.9 nb

4-6. 5.2±5.3 nb

6-8: 0.57±0.59 nb

I expect the cross sections to change once I get new efficiences from embedding, but not by a whole lot.

Drew (4/6): Got Kurt's new lineshapes, efficiencies, and double-ERF parameters today. Uploading the fits to them. I'm not sure I believe the fits...

Bin-by-Bin Counting Cross Section by pT (GeV/c):

|y|<1.0 all: 134.6 ± 10.6 pb

0-2: 27.6 ± 6.3 pb

2-4: 39.1 ± 5.8 pb

4-6: 19.9 ± 3.8 pb

6-8: 13.6 ± 5.1 pb

|y|<0.5 all: 119.2 ± 12.4 pb

0-2: 23.8 ± 6.8 pb

2-4: 35.9 ± 7.4 pb

4-6: 19.0 ± 4.5 pb

6-8: 14.2 ± 4.6 pb

The double ERF is a turn-off from the L2 trigger's mass cut. Kurt used the form: ( {erf*[(m-p1)/p2]+1}*{erf*[(p3-m)/p4]+1} )/2, but I used /4 in the actual fit because each ERF can be at most 2. By fits are also half a bin shifted from Tony's, we'll need to agree on it at some point. The |y|<1 are divided by 2 units in rapidity, and the |y|<0.5 by 1 unit.

Upsilon pp, dAu, AuAu Paper Documents

This page is for collecting the following documents related to the Upsilon pp (2009), dAu (2008) and AuAu (2010) paper:

- Paper Proposal (Most Recent: Version 3)

- New in v3: Now says we're going for PLB and has the E772 and MC plots included. Also has |y|<1.0 results

- Technical Note (Most Recent: Version 6)

- New in v3: AuAu consistency analysis and expanded summary table

- New in v4: Added JPsi study of linewidth and 1S numbers

- New in v6 : Final version for paper as resubmitted to PLB

- Paper Draft (Latest: Version 25)

- New in v26: Updated with changes made in PLB proof

- v25-resub: Version as re-submitted to PLB (no line numbers).

- New in v25: Updated acknowledgements.

- New in v24: Minor changes to discussion or TPC misalignment

- New in v23.1: Added systematics to Fig. 3

- New in v23: Updated with comments from Lanny and Thomas. Changes are in red.

- New in v22: Made changed based on GPC responses to our responses to the referees. Also, all Tables are now correct. Changes are in blue.

- New in v21: Changed in response to PLB referee comments. Changed results to likelihood fits. Added binding energy plot. Tabs. II and III are NOT correct.

- New in v20: ???

- New in v19: Updated with minor comments from Thomas on Nov 25.

- New in v18: Incorporated lost changes from v16. Added 3 UC Davis undergrads to the author list.

- New in v17: A few more changes from GPC comments and addition of AuAu cross sections

- New in v16: Changes from GPC comments after collaboration review

- New in v15: Collaboration review changes

- New in v14: English QA changes

- New in v13: Mostly minor edits suggested by Lanny and Thomas

- New in v12: Updated the MC section to addredd |y|<0.5. Also did some other, minor graphwork on fig 3b

- New in v11: Updated with latest comments from the GPC. Official version before the first GPC meeting

- New in v10: Updated from PWG discussion. Cleaned and enchanced plots

- New in v9: Cleaned up v8

- New in v8: Added analysis of 1S state and discussion of E772

- New in v7: Made many changes based on first round of GPC e-mails. Summaries of changes and responses can be found on the responses sub-page.

- New in v6: Cleaned up most plots. Reworded end of intro. Cleaned up triggering threshold discussion. Added labels for subfigures.

- New in v5.1: Made stylistic clarifications and fixed a few typos. Updated dAu mass spectrum legend to explain grey curve.

- New in v5: PLB formatting and some plot clean-up

- New in v4: E772 results and |y|<1.0 and |y|<0.5 both included for AuAu

Responses to Collaboration comments

- Comments from JINR

- Comments from Tsinghua

- Comments from UCLA

- Comments from Creighton

- Comments from WUT

- Comments from BNL

Thanks to all the people who submitted comments. These have helped to improve the draft. Please find the responses to the comments below.

Comments from JINR (Stanislav Vokal)

1) Page 3, line 40, „The cross section for bottomonium production is smaller than that of charmonium [8-10]...“, check it, is there any cross section for bottomonium production in these papers?

Answer: Both papers report a quarkonium result. The PHENIX papers quote a J/psi cross section of ~178 nb. Our paper from the 2006 data quotes the Upsilon cross section at 114 pb.

2) Page 3, lines 51-52, „compared to s_ccbar approx 550 - 1400 mb [13, 14]). ...“. It should be checked, in [13] s is about 0.30 mb and in [14], Tab.VII, s is about 0.551 – 0.633 mb.“.

Answer: In Ref. 13, the 0.3 mb is for dsigma/dy, not for sigma_cc. To obtain sigma_cc, one has to use a multiplicative factor of 4.7 that was obtained from simulations (Pythia), as stated in that reference. This gives a cross section of ~ 1.4 mb, which is the upper value we quote (1400 \mu b). In reference 14, in Table VIII the low value of 550 \mu b is the lower value we use in the paper. So both numbers we quote are consistent with the numbers from those two references.

3) Page 3, line 78, „...2009 (p+p)...“ and line 80 „20.0 pb-1... “, In Ref. [10] the pp data taken during 2006 were used, 7.9 pb-1, it seems that this data sample was not included in the present analysis. Am I true? If yes – please explain, why? If the data from 2006 are included in the present draft, then add such information in the text, please.

Answer: That is correct: the data from 2006 was not included in the present analysis. There were two major differences. The first difference is the amount of inner material. In 2006 (and 2007), the SVT was still in STAR. In 2008, 2009, and 2010, which are the runs we are discussing in this paper, there was no SVT. This makes the inner material different in 2006 compared to 2009, but it is kept the same in the entire paper. This is the major difference. The inner material has a huge effect on electrons because of bremsstrahlung, and this distorts the line shape of the Upsilons. The second difference is that the trigger in 2006 was different than in 2009. This difference in triggers is not insurmountable, but given the difference in the amount of inner material, it was not worth to try to join the two datasets. We have added a comment to the text about this:

4) Page 4, Fig.1, numbers on the y-axe should be checked, because in [10], Fig.10, are practically the same acounts, but the statistic is 3 times smaller;

Answer: The number that matters is the counts in the Upsilon signal. In Fig. 10 of Ref. 10, there is a lot more combinatorial background (because of the aforementioned issue with the inner material), so when looking at the total counts one sees a similar number than in the present paper. However, in the case of the 2006 data, most of the counts are from background. The actual signal counts in the highest bin of the 2006 data are ~55-30 = 25, whereas the signal counts in the present paper are ~ 50 - 5 = 45 in the highest bin. When you also notice that the 2006 plot had bins that were 0.5 GeV/c^2 wide, compared to the narrower bins of 0.2 GeV/c^2 we are using in Figure 1 (a), it should now be clear that the 2009 data has indeed more statistics.

5) Page 5, line 31, „114 ± 38+23-14 pb [10]“, value 14 should 24;

Answer: Correct. We have fixed this typo. Thank you.

6) Page 5, Fig.2, yee and yY should be identical;

Answer: We will fix the figures to use one symbol for the rapidity of the upsilons throughout the paper.

7) Page 5, Fig.2 – description, „Results obtained by PHENIX are shown as filled tri-angles.“ à diamond;

Answer: Fixed.

8) Page 6, Fig.3a, here should be hollow star for STAR 1S (dAu) as it is in Fig.3b;

Answer: Fixed.

9) Page 8, line 7, „we find RAA(1S) = 0.44 ± ...“ à should be 0.54;

Answer: Fixed.

10) Page 8, lines 9-12, „The ratio of RAA(1S) to RAA(1S+2S+3S) is consistent with an RAA(2S+3S) approximately equal to zero, as can be seen by examining the mass range 10-11 GeV/c2 in Fig. 4.“, it is not clear, check this phrase, please;

Answer: We have modified this phrase to the following: "If 2S+3S states were completely dissociated in Au+Au collisions, then R_AA(1S+2S+3S) would be approximately equal to $R_AA(1S) \times 0.69$. This is consistent with our observed R_AA values, and can also be inferred

11) Page 8, line 26, „CNM“, it means Cold Nuclear Matter suppression? – should be explained in text;

Answer: The explanation of the CNM acronym is now done in the Introduction.

12) Page 9, line 30-31, „The cross section in d+Au collisions is found to be = 22 ± 3(stat. + fit)+4- 3(syst.) nb.“, but there is no such results in the draft before;

Answer: This result is now given in the same paragraph where the corresponding pp cross section is first

stated, right after the description of Figure 1.

13) Page 9, line 34, „0.08(p+p syst.).“ à „0.07(p+p syst.).“, see p.7;

Answer: Fixed. It was 0.08

14) Page 10, Ref [22], should be added: Eur. Phys. J C73, 2427 (2013);

Answer: We added the published bibliography information to Ref [22].

15) Page 10,, Ref [33] is missing in the draft.

Answer: We have now removed it. It was left over from a previous version of the draft which included text that has since been deleted.

Comments from Tsinghua

1) Replace 'Upsilon' in the title and text with the Greek symbol.

Answer: Done.

2) use the hyphen consistently across the whole paper, for example, sometimes you use 'cold-nulcear matter', and at another place 'cold-nuclear-matter'. Another example is 'mid-rapidity', 'mid rapidity', 'midrapidity'...

Answer: On the hyphenation, if the words are used as an adjectivial phrase, then those need to be hyphenated. In the phrase "the cold-nuclear-matter effects were observed", the words "cold-nuclear-matter" are modifying

the word "effects", so they are hyphenated. However, from a previous comment we decided to use the acronym "CNM" for "cold-nuclear matter", which avoids the hyphenation. We now use "mid-rapidity" throughout the paper.

3) For all references, remove the 'arxiv' information if the paper has been published.

Answer: We saw that published papers in PLB do include the arxiv information in their list of references. For the moment, we prefer to keep it there since not all papers are freely available online, but arxiv manuscripts are. We will leave the final word to the journal, if the editors ask us to remove it, then we will do so.

4) Ref. [33] is not cited in the text. For CMS, the latest paper could be added, PRL 109, 222301 (2012).

Answer: Ref [33] was removed. Added the Ref. to the latest CMS paper on Upsilon suppression.

5) For the model comparisons, you may also compare with another QGP suppression model, Y. Liu, et al., PLB 697, 32-36 (2011)

Answer: This model is now included in the draft too, and plotted for comparison to our data in Fig. 5c.

6) page 3, line 15, you may add a reference to lattice calculations for Tc ~ 175 MeV.

Answer: Added a reference to hep-lat/0305025.

7) Fig 1a, \sqrt{s_{NN}} -> \sqrt{s}. In the caption, |y| -> |y_{ee}|

Answer: Fixed.