Run 5

2005 Charged Pion Data / Simulation Comparison

Motivation: Estimates of trigger bias systematic error are derived from simulation. This page compares yields obtained from data and simulation to test the validity of the PYTHIA event generator and our detector geometry model.

Conditions:

- Simulation DB timestamp: dbMk->SetDateTime(20050506,214129). I pick this table up from the DB, rather than from Dave's private directory. Dave changed the timestamps on the files in his directory, so the two files do not match. It turns out that in this case they only differ by one tower (4580), and this tower's status is != 1 in both tables, so there is effectively no difference.

- Data runlist: I use a version of the jet golden run list containing 690 runs. I have heard there is an updated version floating around, but I have yet to get my hands on it.

- Simulation files obtained from production P05ih, including larger event samples from Lidia's recent email (http://www.star.bnl.gov/HyperNews-star/protected/get/starsoft/6437.html) but excluding the 2_3, 45_55, and 55_65 GeV samples.

- This is strictly a charged hadron comparison; there is no dE/dx PID cut. The dE/dx distributions in simulation are way off.

- Cuts: nFitPoints>25 && |dca|<1. && |eta|<1. && |vz|<60. && pt>2. Also for data I require good spin info and relative luminosity information (these conditions are mostly subsumed by the runlist requirement).

Combine PYTHIA partonic pt samples by filling histograms with weight = sample_weight/nevents, using the following sample_weights:

- 3_4 = 1.287;

- 4_5 = 3.117e-1;

- 5_7 = 1.360e-1;

- 7_9 = 2.305e-2;

- 9_11 = 5.494e-3;

- 11_15 = 2.228e-3;

- 15_25 = 3.895e-4;

- 25_35 = 1.016e-5;

- above_35 = 5.299e-7;
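The weighting scheme above can be sketched in a few lines of plain Python. This is only an illustration, not the analysis code: the function name `combine_samples`, the per-sample event counts, and the example pt values are all hypothetical, and the error bars use the usual sum-of-squared-weights convention, which I am assuming matches what the histograms do.

```python
import bisect

# Cross-section weights copied from the list above.
SAMPLE_WEIGHTS = {
    "3_4": 1.287,
    "4_5": 3.117e-1,
    "5_7": 1.360e-1,
    "7_9": 2.305e-2,
    "9_11": 5.494e-3,
    "11_15": 2.228e-3,
    "15_25": 3.895e-4,
    "25_35": 1.016e-5,
    "above_35": 5.299e-7,
}


def combine_samples(pt_by_sample, nevents_by_sample, bin_edges):
    """Fill one pt histogram from all samples, weighting every entry by
    sample_weight / nevents of the sample it came from."""
    nbins = len(bin_edges) - 1
    contents = [0.0] * nbins
    sumw2 = [0.0] * nbins  # sum of squared weights, used for the error bars
    for sample, pts in pt_by_sample.items():
        weight = SAMPLE_WEIGHTS[sample] / nevents_by_sample[sample]
        for pt in pts:
            i = bisect.bisect_right(bin_edges, pt) - 1
            if 0 <= i < nbins:  # ignore underflow and overflow
                contents[i] += weight
                sumw2[i] += weight * weight
    errors = [s ** 0.5 for s in sumw2]
    return contents, errors


# Hypothetical inputs, for illustration only.
pt_by_sample = {"3_4": [2.5, 3.1], "15_25": [2.2, 6.4, 7.0]}
nevents_by_sample = {"3_4": 1000, "15_25": 2000}
contents, errors = combine_samples(
    pt_by_sample, nevents_by_sample, [2.0, 4.0, 6.0, 8.0]
)
```

Note how a single track from a soft sample carries a weight several thousand times larger than a track from a hard sample, so sparsely populated bins from soft samples can dominate the combined histogram.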

Results:

At the moment I've just linked the raw PDFs at the bottom of the page. The index in the title indicates the charge of the particle being studied. The plots are perhaps a bit hard to follow without labels (next on the list), so here's a guide. Page 1 has pt distributions for the triggers in the order listed above. Pages 2-6 are eta distributions, with each page devoted to a single trigger, again in the order given above. The first plot on each page is integrated over all pt, and then the remaining plots separate the distribution into 1 GeV pt slices. Pages 7-11 repeat this structure for phi, and 12-16 do the same for the z-vertex distributions.

Conclusions:

The agreement between data and simulation appears to me to be quite good across the board. The jet-patch triggers are particularly well-modeled. A few notes:

- The HT2 pt distributions (page 1, third plot on top row) look funny in simulation. What's with the spike at 6 GeV in the h- plot?

- HT2 eta distribution on the east side for h+ (page 4) has spikes.

- Phi looks good to me

- Vertex distributions for calo triggers in simulation are awfully choppy, but overall the agreement seems OK.

Average Partonic Pt Carried by Charged Pions

Here's a fragmentation study looking at the ratio of reconstructed charged pion p_{T} and the event partonic p_{T} in PYTHIA. Cuts are

- fabs(mcVertexZ()) < 60

- fabs(vertexZ()) < 60

- geantId() == 8 or 9 (charged pions)

- fabs(etaPr()) < 1

- fabs(dcaGl()) < 1

- fitPts() > 25

Error bars are just the errors on the mean partonic p_{T} in each reconstructed pion p_{T} bin. Next step is to look at the jet simulations to come up with a plot that is more directly comparable to a real data measurement.
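For reference, the "error on the mean" in each pion p_{T} bin can be sketched as below. This is a simplified, unweighted illustration: the function name `profile_partonic_pt` and the example numbers are hypothetical, and a real version would also have to fold in the per-sample weights.

```python
import bisect
import math
from statistics import mean, stdev


def profile_partonic_pt(pairs, bin_edges):
    """pairs: iterable of (pion_pt, partonic_pt).
    Returns, per pion pt bin, (mean partonic pt, error on the mean),
    or None when the bin has fewer than two entries."""
    bins = [[] for _ in range(len(bin_edges) - 1)]
    for pion_pt, partonic_pt in pairs:
        i = bisect.bisect_right(bin_edges, pion_pt) - 1
        if 0 <= i < len(bins):
            bins[i].append(partonic_pt)
    profile = []
    for values in bins:
        if len(values) < 2:
            profile.append(None)
        else:
            # error on the mean = sample standard deviation / sqrt(N)
            profile.append((mean(values), stdev(values) / math.sqrt(len(values))))
    return profile


# Hypothetical (pion pt, partonic pt) pairs in GeV.
pairs = [(2.5, 8.0), (3.0, 10.0), (3.5, 12.0), (5.0, 15.0)]
profile = profile_partonic_pt(pairs, [2.0, 4.0, 6.0])
```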

BBC Vertex

This is a study of 2005 data conducted in March 2006. Ported to Drupal from MIT Athena in October 2007.

Goal: Quantify the relationship between the z-vertex position and the bbc timebin for each event.

Procedure: Plot z-vertex as a function of trigger and bbc timebin. Also, plot distributions of bbc timebins for each run to examine stability. Exclude runs<=6119039 as a result. Fit each vertex distribution with a Gaussian and extract the mean and sigma. Plots are linked at the bottom of the page. See in particular page 8 of run_plots.pdf, which shows the change from 8 bit to 4 bit onlineTimeDifference values.

Timebin 12 had zero counts for each trigger. Summaries of the means and sigmas:

| m | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| mb | 101.7 | 124.1 | 56.3 | 22.72 | -7.835 | -38.89 | -80.48 | -135.6 | - |

| ht1 | 81.09 | 111.8 | 50.75 | 16.88 | -13.49 | -44.57 | -89.36 | -182.8 | - |

| ht2 | 79.86 | 107.2 | 48.93 | 15.76 | -14.27 | -45.17 | -89.76 | -176.7 | - |

| jp1 | 101.3 | 139.3 | 54.8 | 17.68 | -12.95 | -44.05 | -92.4 | -176 | - |

| jp2 | 99.49 | 128.5 | 51.58 | 15.77 | -14.29 | -45.5 | -94.1 | -192.2 | - |

| s | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| mb | 68.85 | 67.48 | 40.4 | 34.26 | 33.31 | 35.91 | 49.32 | 76.29 | - |

| ht1 | 68.99 | 70.9 | 43.06 | 34.71 | 32.49 | 34.86 | 47.42 | 79.98 | - |

| ht2 | 67.64 | 70.13 | 43.06 | 34.76 | 32.55 | 34.98 | 47.26 | 78.22 | - |

| jp1 | 76.49 | 79.85 | 45.44 | 35.6 | 32.56 | 35.34 | 49.69 | 78.1 | - |

| jp2 | 76.18 | 78.01 | 45.73 | 35.79 | 32.87 | 35.68 | 49.37 | 81.21 | - |

Conclusions: Hank started the timebin lookup table on day 119, so this explains the continuous distributions from earlier runs. In theory one could just use integer division by 32 to get binned results before this date, but it would be good to make sure that no other changes were made; e.g., it looks like the distributions are also tighter before day 119. Examining the vertex distributions of the different timebins suggests that using timebins {6,7,8,9} corresponds roughly to a 60 cm vertex cut. A given timebin in this range has a resolution of 30-45 cm.
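A minimal sketch of the timebin logic discussed above. The divisor of 32 is taken straight from the text and has not been checked against the actual lookup table; the function names are hypothetical, and the sigma values are the mb row of the sigma table for timebins 6 through 9.

```python
GOOD_TIMEBINS = {6, 7, 8, 9}  # roughly equivalent to a 60 cm vertex cut

# Sigma of the mb vertex distribution (cm) per timebin, from the table above.
MB_SIGMA = {6: 40.4, 7: 34.26, 8: 33.31, 9: 35.91}


def timebin_from_raw(online_time_difference, divisor=32):
    """Bin a raw time-difference value by integer division.
    The divisor is an assumption based on the text, not the real lookup table."""
    return online_time_difference // divisor


def passes_timebin_cut(timebin):
    return timebin in GOOD_TIMEBINS


# The quoted 30-45 cm resolution holds for every selected timebin.
resolution_ok = all(30.0 <= MB_SIGMA[tb] <= 45.0 for tb in GOOD_TIMEBINS)
```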

Basic QA Plots

Select a trigger and charge combination to view QA summary plots. Each point on a plot is a mean value of that quantity for the given run, while the error is sqrt(nentries). The runs selected are those that passed jet QA, so it's not surprising that things generally look good. Exceptions:

- dE/dx, nSigmaPion, and nHitsFit are all out of whack around day 170. I'll take a closer look to see if I can figure out what went wrong, but it's a small group of runs so in the end I expect to simply drop them.

Data - Monte Carlo Comparison, Take 2

I re-examined the data - pythia comparison in my analysis after some good questions were raised during my preliminary result presentation at today's Spin PWG meeting. In particular, there was some concern over occasionally erratic error bars in the simulation histograms and also some questions about the shape of the z-vertex distributions. Well, the error bars were a pretty easy thing to nail down once I plotted my simulation histograms separately for each partonic pt sample. You can browse all of the plots at http://deltag5.lns.mit.edu/~kocolosk/datamc/samples/

If you look in particular at the lower partonic pt samples you'll see that I have very few counts from simulations in the triggered histograms. This makes perfect sense, of course: any jets that satisfy the jet-patch triggers at these low energies must have a high neutral energy content. Unfortunately, the addition of one or two particles in a bin from these samples can end up dominating that bin because they are weighted so heavily. I checked that this was in fact the problem by requiring at least 10 particles in a bin before I combine it with bins from the other partonic samples. Incidentally, I was already applying this cut to the trigger bias histograms, so nothing changes there. The results are at

http://deltag5.lns.mit.edu/~kocolosk/datamc/cut_10/
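The minimum-entries requirement can be sketched as follows. This is an illustration under stated assumptions, not the code behind the plots: `combine_with_min_entries`, the two weights, and the raw counts are hypothetical, and each sample is represented simply as a weight plus a list of unweighted bin counts.

```python
def combine_with_min_entries(samples, min_entries=10):
    """samples: list of (weight, raw_counts) with one raw count per bin.
    A bin from a given sample is only added to the combined histogram
    if that sample has at least min_entries raw entries in the bin."""
    nbins = len(samples[0][1])
    contents = [0.0] * nbins
    err2 = [0.0] * nbins
    for weight, raw_counts in samples:
        for i, n in enumerate(raw_counts):
            if n < min_entries:
                continue  # too few particles: skip this sample's bin
            contents[i] += weight * n
            err2[i] += weight * weight * n
    return contents, [e ** 0.5 for e in err2]


# Hypothetical soft sample (large weight, few triggered tracks) and
# hard sample (small weight, many tracks).
samples = [
    (1.0e-3, [2, 0, 12]),
    (1.0e-6, [500, 300, 100]),
]
cut_contents, cut_errors = combine_with_min_entries(samples, min_entries=10)
raw_contents, raw_errors = combine_with_min_entries(samples, min_entries=0)
```

In this toy input the first bin drops from 2.5e-3 to 5e-4 once the cut is applied, because the two heavily weighted soft-sample tracks no longer dominate it.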

If I compare e.g. the z-vertex distribution for pi+ from my presentation (on the left) with the same distribution after requiring at least 10 particles per bin (on the right) it's clear that things settle down nicely after the cut:

(The one point on the after plot that still is way too high can be fixed if I up the cut to 15 particles, but in general the plots look worse with the cut that high). Unfortunately, it's also clear that the dip in the middle of the z-vertex distribution is still there. At this point I think it's instructive to dig up a few of the individual distributions from the partonic pt samples. Here are eta (left) and v_z (right) plots for pi+ from the 7_9, 15_25, and above_35 samples (normalized to 2M events from data instead of the full 25M sample because I didn't feel like waiting around):

7_9:

15_25:

above_35:

You can see that as the events get harder and harder there's actually a bias towards events with *positive* z vertices. At the same time, the pseudorapidity distributions of the harder samples are more nearly uniform around zero. I guess what's happening is that the jets from these hard events are emitted perpendicular to the beam line, and so in order for them to hit the region of the BEMC included in the trigger the vertices are biased to the west.

So, that's all well and good, but we still have the case that the combined vertex distribution from simulation does not match the data. The implication from this mismatch is that the event-weighting procedure is a little bit off; maybe the hard samples are weighted a little too heavily? I tried tossing out the 45_55 and 55_65 samples, but it didn't improve matters appreciably. I'm open to suggestions, but at the same time I'm not cutting on the offline vertex position, so this comparison isn't quite as important as some of the other ones.

One other thing: while I've got your attention, I may as well post the agreement for MB triggers since I didn't bother to show it in the PPT today. Here are pt, eta, phi, and vz distributions for pi+. The pt distribution in simulation is too hard, but that's something I've shown before:

Conclusions:

New data-mc comparisons requiring at least 10 particles per sample bin in simulation result in improved error bars and less jittery simulation distributions. The event vertex distribution in simulation still does not match the data, and a review of event vertex distributions from individual samples suggests that perhaps the hard samples are weighted a bit too heavily.

Effect of Triggers on Relative Subprocess Contributions

These histograms plot the fraction of reconstructed charged pions in each pion pT bin arising from gg, qg, and qq scattering. I use the following cuts:

- fabs(mcVertexZ()) < 60

- fabs(vertexZ()) < 60

- geantId() == 8 or 9 (charged pions)

- fabs(etaPr()) < 1

- fabs(dcaGl()) < 1

- fitPts() > 25

I analyzed Pythia samples from the P05ih production in partonic pT bins 3_4 through 55_65 (excluded minbias and 2_3). The samples were weighted according to partonic x-sections and numbers of events seen and then combined. StEmcTriggerMaker provided simulations of the HT1 (96201), HT2 (96211), JP1 (96221), and JP2 (96233) triggers. Here are the results. The solid lines are MB and are identical in each plot, while the dashed lines are the yields from events passing a particular software trigger. Each image is linked to a full-resolution copy:

Conclusions

- Imposing an EMC trigger suppresses gg events and enhances qq, particularly for transverse momenta < 6 GeV/c. The effect on qg events changes with pT. The explanation is that the ratio (pion pT / partonic pT) is lower for EMC triggered events than for minimum bias.

- High threshold triggers change the subprocess composition more than low-threshold triggers.

- JP1 is the least-biased trigger according to this metric. There aren't many JP1 triggers in the real data, though, as it was typically prescaled by ~30 during the 2005 pp run. Most of the stats in the real data are in JP2.

Old Studies

Outdated or obsolete studies are archived here

First Look at Charged Pion Trigger Bias

Motivation:

The charged pion A_LL analysis selects pions from events triggered by the EMC. This analysis attempts to estimate the systematic bias introduced by that selection.

Conditions:

- Simulation files, database timestamps, and selection cuts are the same as the ones used in the 2005 Charged Pion Data / Simulation Comparison

- Polarized PDFs are incorporated into simulation via the framework used by the jet group. In particular, only GRSV-std is used as input, since LO versions of the other scenarios were not available at the time.

- Errors on A_LL are calculated according to Jim Sowinski's recipe.

Plots:

Conclusion:

The BBC trigger has a negligible effect on the asymmetries, affirming its use as a "minimum-bias" trigger. The EMC triggers introduce a positive bias of as much as 1.0% in both asymmetries. The positive bias is more consistent in JP2; the HT2 asymmetries are all over the map.

First Look at Single-spin Asymmetries

This is a study of 2005 data conducted in March 2006. Ported to Drupal from MIT Athena in October 2007.

eL_asymmetries.pdf

phi_asymmetries.pdf

I also increment 20 separate histograms with (asymmetry/error) for each fill and then fit the resulting distribution with a Gaussian. Ideally the mean of this Gaussian should be centered at zero and the width should be exactly 1. The results are in asymSummaryPlot.pdf
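A minimal sketch of the per-fill pull check described above. It uses the sample mean and standard deviation of asymmetry/error as a stand-in for the Gaussian fit, which is a simplification; the function name `pull_summary` and the example numbers are hypothetical.

```python
from statistics import mean, stdev


def pull_summary(asymmetries, errors):
    """Return (mean, width) of the asymmetry/error distribution.
    For purely statistical fluctuations the mean should be
    consistent with 0 and the width with 1."""
    pulls = [a / e for a, e in zip(asymmetries, errors) if e > 0]
    return mean(pulls), stdev(pulls)


# Hypothetical per-fill asymmetries and their statistical errors.
fill_asymmetries = [0.01, -0.02, 0.03, -0.02]
fill_errors = [0.01, 0.01, 0.02, 0.02]
pull_mean, pull_width = pull_summary(fill_asymmetries, fill_errors)
```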

Finally, a summary of single-spin asymmetries integrated over all data. 2-sigma effects are highlighted in bold:

| + | MB | HT1 | HT2 | JP1 | JP2 |

| Y | 0.0691 +/- 0.0775 | 0.0069 +/- 0.0092 | -0.0038 +/- 0.0126 | 0.0086 +/- 0.0104 | 0.0116 +/- 0.0069 |

| B | -0.0809 +/- 0.0777 | -0.0019 +/- 0.0092 | -0.0218 +/- 0.0126 | 0.0067 +/- 0.0104 | -0.0076 +/- 0.0069 |

| - | MB | HT1 | HT2 | JP1 | JP2 |

| Y | -0.0206 +/- 0.0767 | **-0.0193 +/- 0.0092** | -0.0158 +/- 0.0130 | -0.0035 +/- 0.0101 | 0.0061 +/- 0.0070 |

| B | 0.0034 +/- 0.0769 | -0.0021 +/- 0.0092 | 0.0006 +/- 0.0130 | -0.0164 +/- 0.0101 | **-0.0147 +/- 0.0070** |

Conclusions: The jet group sees significant nonzero single-spin asymmetries in Yellow JP2 (2.5 sigma) and Blue JP1 (4 sigma). I do not see these effects in my analysis. I do see a handful of 1 sigma effects and two asymmetries for negatively charged hadrons that just break 2 sigma, but in general these numbers are consistent with zero. I also do not see any significant dependence on track phi.
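As a cross-check of the statement about 2 sigma effects, the significance of each entry in the summary tables is just the value divided by its error. The numbers below are copied from the tables; the variable and function names are only for illustration.

```python
TRIGGERS = ["MB", "HT1", "HT2", "JP1", "JP2"]

# (charge, beam) -> list of (asymmetry, error), in TRIGGERS order.
ASYMMETRIES = {
    ("+", "Y"): [(0.0691, 0.0775), (0.0069, 0.0092), (-0.0038, 0.0126),
                 (0.0086, 0.0104), (0.0116, 0.0069)],
    ("+", "B"): [(-0.0809, 0.0777), (-0.0019, 0.0092), (-0.0218, 0.0126),
                 (0.0067, 0.0104), (-0.0076, 0.0069)],
    ("-", "Y"): [(-0.0206, 0.0767), (-0.0193, 0.0092), (-0.0158, 0.0130),
                 (-0.0035, 0.0101), (0.0061, 0.0070)],
    ("-", "B"): [(0.0034, 0.0769), (-0.0021, 0.0092), (0.0006, 0.0130),
                 (-0.0164, 0.0101), (-0.0147, 0.0070)],
}


def significant_entries(threshold=2.0):
    """Return the (charge, beam, trigger) keys with |asymmetry / error| >= threshold."""
    flagged = set()
    for (charge, beam), row in ASYMMETRIES.items():
        for trigger, (asym, err) in zip(TRIGGERS, row):
            if abs(asym / err) >= threshold:
                flagged.add((charge, beam, trigger))
    return flagged


two_sigma = significant_entries(2.0)
```

With these inputs only the negative-charge Yellow HT1 and Blue JP2 entries reach 2 sigma, both at about 2.1.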

Inclusive Charged Pion Cross Section - First Look

Correction factors are derived from simulation by taking the ratio of reconstructed primary tracks matched to MC pions to the number of MC pions. Specifically, the following cuts are applied:

Monte Carlo

- |event_vz| < 60.

- |eta| < 1.

- nhits > 25

- geantID == 8 or 9 (charged pions)

Matched Reco Tracks

- |event_vz|<60.

- |reco eta| < 1.

- |global DCA| < 1.

- reco fit points > 25

- geantID of matched track == 8 or 9
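The two cut lists and the resulting correction factor can be sketched as below. Function and argument names are hypothetical, the counts are made up, and binning details (for example whether the numerator is binned in reconstructed or MC pt) are left out.

```python
CHARGED_PION_GEANT_IDS = {8, 9}


def mc_pion_passes(event_vz, eta, nhits, geant_id):
    """Denominator: Monte Carlo charged pions."""
    return (abs(event_vz) < 60.0 and abs(eta) < 1.0 and nhits > 25
            and geant_id in CHARGED_PION_GEANT_IDS)


def matched_reco_passes(event_vz, reco_eta, global_dca, fit_points,
                        matched_geant_id):
    """Numerator: reconstructed primary tracks matched to an MC pion."""
    return (abs(event_vz) < 60.0 and abs(reco_eta) < 1.0
            and abs(global_dca) < 1.0 and fit_points > 25
            and matched_geant_id in CHARGED_PION_GEANT_IDS)


def correction_factors(n_matched_reco, n_mc_pions):
    """Per-bin ratio (matched reco tracks) / (MC pions); None for empty bins."""
    return [reco / mc if mc > 0 else None
            for reco, mc in zip(n_matched_reco, n_mc_pions)]


# Hypothetical per-bin counts.
factors = correction_factors([80, 45, 0], [100, 50, 0])
```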

There is currently a bug in StDetectorDbMaker that makes it difficult to retrieve accurate prescales using only a catalog query for the filelist. This affects the absolute scale of each cross section and data points for HT1 and JP1 relative to the other three triggers. It's probably a 10%-20% effect for HT1 and JP1. With that in mind, here's what I have so far:

This plot is generated from a fraction of the full dataset; I stopped my jobs when I discovered the prescales bug.

The cuts used to select good events from the data are:

- golden run list, version c

- |vz| < 60.

- Right now I am only using the first vertex from each event, but it's easy for me to change

The cuts used to select pion tracks are the same as the ones used for "Matched Reco Tracks", except for the PID cut of course. For PID I require that the dE/dx value of the track is between -1 and 2 sigma away from the mean for pions.

As always, comments are welcome.

Single-Spin Asymmetries by BBC timebin

This is a study of 2005 data conducted in May 2006. Ported to Drupal from MIT Athena in October 2007.

Hi jetters. Mike asked me to plot the charged track / pion asymmetries in a little more detail. The structure is the same as before; each column is a trigger, and the four rows are pi+/Yellow, pi+/Blue, pi-/Yellow, pi-/Blue. I've split up the high pt pion sample (2 < pT < 12 GeV) and plotted single-spin asymmetries for timebins 7, 8, and 9 separately versus pT and phi. The plots and summaries are linked at the bottom of the page.

2 sigma effects are highlighted in yellow, 3 sigma in red. There are no 3 sigma asymmetries in the separate samples, although pi-/B/JP1 is 3 sigma above zero in the combined sample. Here's a table of all effects over 2 sigma:

| timebin | charge | trig | asym | effect |

| 8 | + | HT1 | Y | +2.2 |

| 9 | + | JP1 | B | +2.07 |

| 9 | - | JP1 | B | +2.45 |

| 7-9 | - | JP1 | B | +3.15 |

If you compare these results with the ones I had posted back in March (First Look at Single-spin Asymmetries), you'll notice the asymmetries have moved around a bit for the combined sample. The dominant effect there was the restriction to the new version of Jim's golden run list. The list I had been using before had at least two runs with spotty timebin info for board 5; see e.g.,

http://www.star.bnl.gov/HyperNews-star/protected/get/jetfinding/355/1/1/1.html

and ensuing discussion. I'm in the process of plotting asymmetries for charged tracks below 2 GeV in 200 MeV pT bins and will post those results here when I have them.

SPIN 2006 Preliminary Result

Event Selection Criteria

- run belongs to golden run list, version c

- BBC timebin belongs to {7,8,9}

- spinDB QA requires: isValid(), isPolDirLong(), !isPolDirTrans(), !isMaskedUsingBX48(x48), offsetBX48minusBX7(x48, x7)==0

- ignore additional vertices

- trigger = MB || JP1 || JP2

- |eta| < 1.

- |global DCA| < 1.

- nFitPoints > 25

- flag > 0

- nSigmaPion is in the range [-1,2]

- Random Patterns

- First Look at Charged Pion Trigger Bias (needs to be updated with more deltaG scenarios, should take ~1 day)

- 2005 Charged Pion Data / Simulation Comparison

- Asymmetries for near-side and away-side pions

- Background from PID Contamination

- Data - Monte Carlo Comparison, Take 2

The following are links to previous studies, some of which are outdated at this point:

Single-Spin Asymmetries by BBC timebin

BBC Vertex

Kasia's estimate of beam background effect on relative luminosities

Kasia's estimate of systematic error due to non-longitudinal polarization

Asymmetries for near-side and away-side pions

Summary:

I associated charged pions from JP2 events with the jets that were found in these events. If a jet satisfied a set of cuts (including the geometric cut to exclude non-trigger jets), I calculated a deltaR from this jet for each pion in my sample. Then I split my sample into near-side and away-side pions and calculated an asymmetry for each.

Jet cuts:

- R_T < 0.95

- JP2 hardware, software, and geometric triggers satisfied

Note: all plots are pi- on the left and pi+ on the right

This first set of plots shows eta(pion)-eta(jet) on the x axis and phi(pion)-phi(jet) on the y axis. You can see the intense circle around (0,0) from pions inside the jet cone radius as well as the regions around the top and bottom of the plots from the away-side jet:

Next I calculate deltaR = sqrt(deta*deta + dphi*dphi) for both samples. Again you can see the sharp cutoff at deltaR=0.4 from the jetfinder:

Asymmetries


My original asymmetries for JP2 without requiring a jet in the event:

After requiring a jet in the event I get

Now look at the asymmetry for near-side pions, defined by a cone of deltaR<0.4:

And similarly the asymmetries for away-side pions, defined by deltaR>1.5:

Conclusions: No showstoppers. The statistics for away-side pions are only about a factor of 2 worse than the stats for near-side (I can post the exact numbers later). The asymmetries are basically in agreement with each other, although the first bin for pi+ and the second bin for pi- do show 1 sigma differences between near-side and away-side.
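For reference, the near-side / away-side split used in this study can be sketched as below. The deltaR formula and the 0.4 and 1.5 thresholds come from the text; wrapping dphi into (-pi, pi] is my assumption about how the angle difference is handled, and the function names are hypothetical.

```python
import math


def delta_phi(phi1, phi2):
    """Difference phi1 - phi2 wrapped into (-pi, pi]."""
    d = phi1 - phi2
    while d > math.pi:
        d -= 2.0 * math.pi
    while d <= -math.pi:
        d += 2.0 * math.pi
    return d


def delta_r(eta_pion, phi_pion, eta_jet, phi_jet):
    deta = eta_pion - eta_jet
    dphi = delta_phi(phi_pion, phi_jet)
    return math.sqrt(deta * deta + dphi * dphi)


def classify(dr, near_max=0.4, away_min=1.5):
    """'near' for deltaR < 0.4, 'away' for deltaR > 1.5, otherwise None."""
    if dr < near_max:
        return "near"
    if dr > away_min:
        return "away"
    return None


near_example = classify(delta_r(0.1, 0.2, 0.0, 0.0))
away_example = classify(delta_r(0.0, 3.1, 0.0, 0.0))
wrapped_example = classify(delta_r(0.0, 3.0, 0.0, -3.0))
```

The last example shows why the wrapping matters: a pion at phi = 3.0 and a jet at phi = -3.0 are only about 0.28 rad apart, so the pion is near-side even though the naive difference is 6.0.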

Background from PID Contamination

Summary: The goal of this analysis is to estimate the contribution to A_LL from particles that aren't charged pions but nevertheless make it into my analysis sample. So far I have calculated A_LL using a different dE/dx window that should pick out mostly protons and kaons, and I've estimated the fraction of particles inside my dE/dx window that are not pions by using a multi-Gaussian fit in each pt bin. I've assumed that this fraction is not spin-dependent.

Points to remember:

- my analysis cuts on -1 < nSigmaPion < 2

pi- is on the left, pi+ on the right. Each row is a pt bin corresponding to the binning of my asymmetry measurement. The red Gaussian corresponds to pions, green is protons and kaons, blue is electrons. So far I've let all nine parameters float. I tried fixing the mean and width of the pion Gaussian at 0. and 1., respectively, but that made for a worse overall fit. So far, the fit results for the first two bins seem OK.

2 < pt < 4:

4 < pt < 6:

6 < pt < 8:

8 < pt < 10:

I extracted the integral of each curve from -1..2 and got the following fractional contributions to the total integral in this band:

| pi- bin | pion | p/K | electron |

| 2-4 | 0.91 | 0.09 | 0.01 |

| 4-6 | 0.92 | 0.05 | 0.03 |

| 6-8 | 0.78 | 0.07 | 0.15 |

| 8-10 | 0.53 | 0.46 | 0.01 |

| pi+ bin | pion | p/K | electron |

| 2-4 | 0.90 | 0.09 | 0.01 |

| 4-6 | 0.91 | 0.06 | 0.03 |

| 6-8 | 0.68 | 0.06 | 0.26 |

| 8-10 | 0.88 | 0.05 | 0.08 |
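The fractions in these tables come from integrating each fitted Gaussian over the nSigmaPion window [-1, 2]. A minimal sketch of that integration is below; the (amplitude, mean, sigma) values are hypothetical placeholders, not the actual fit results, and the function names are only for illustration.

```python
import math


def gaussian_integral(amplitude, mean, sigma, lo, hi):
    """Integral of amplitude * exp(-0.5 * ((x - mean) / sigma)**2) from lo to hi."""
    def cdf(x):
        return 0.5 * (1.0 + math.erf((x - mean) / (sigma * math.sqrt(2.0))))
    return amplitude * sigma * math.sqrt(2.0 * math.pi) * (cdf(hi) - cdf(lo))


def window_fractions(components, lo=-1.0, hi=2.0):
    """components: name -> (amplitude, mean, sigma).
    Returns each component's share of the total integral in [lo, hi]."""
    integrals = {name: gaussian_integral(a, m, s, lo, hi)
                 for name, (a, m, s) in components.items()}
    total = sum(integrals.values())
    return {name: value / total for name, value in integrals.items()}


# Hypothetical fit parameters in units of nSigmaPion.
components = {
    "pion": (100.0, 0.0, 1.0),
    "p/K": (40.0, -2.0, 1.0),
    "electron": (5.0, 3.5, 1.0),
}
fractions = window_fractions(components)
```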

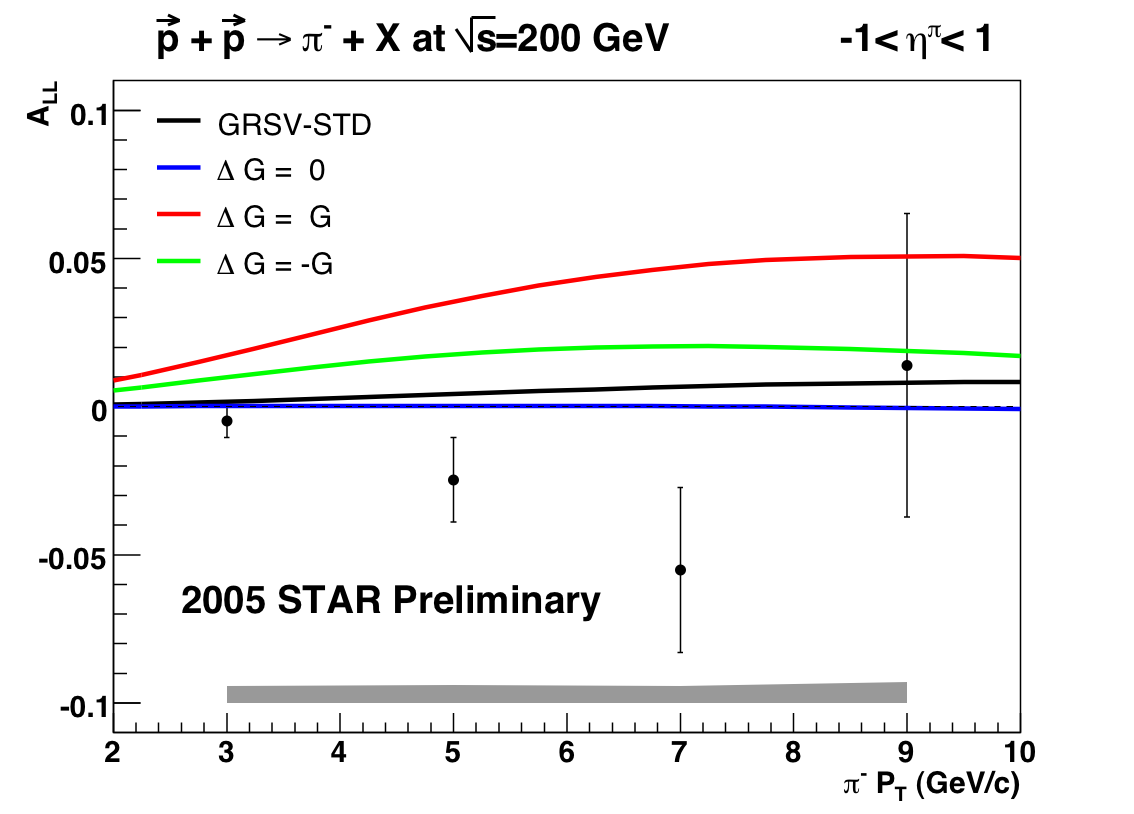

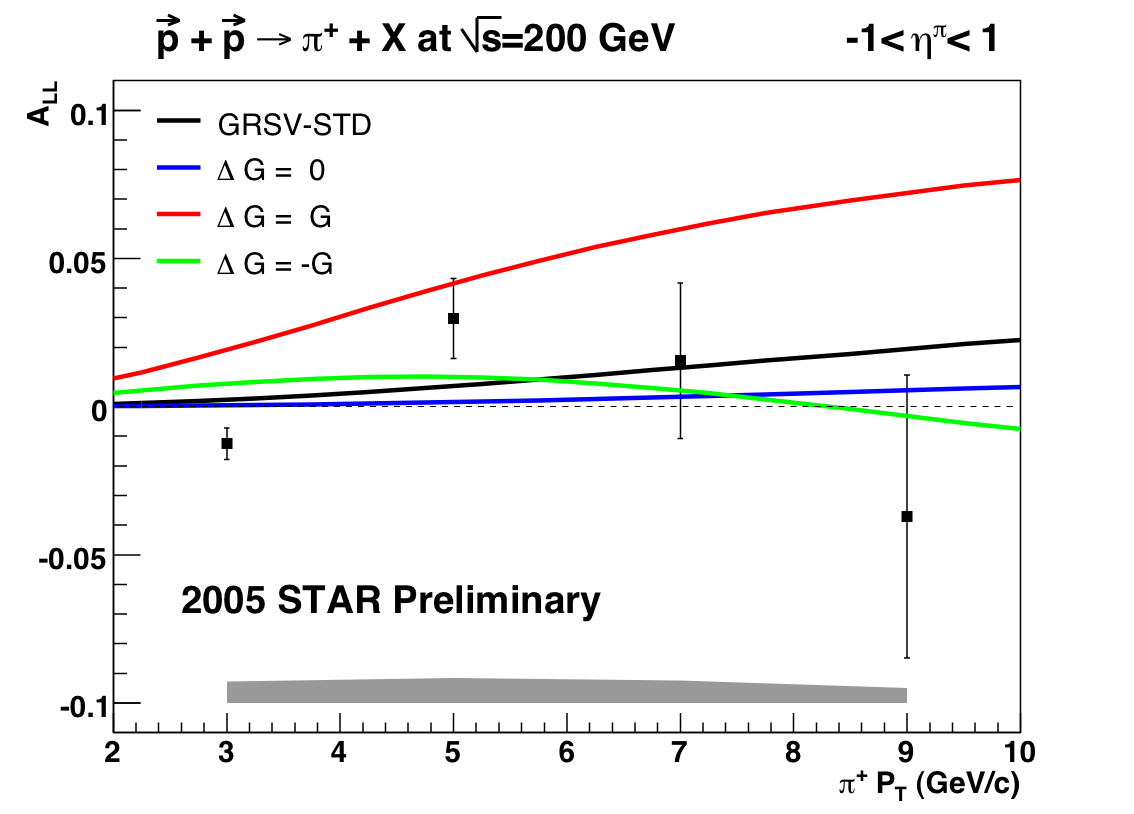

I repeated my A_LL analysis changing the dE/dx window to [-inf,-1] to select a good sample of protons and kaons. The A_LL I calculate for a combined MB || JP1 || JP2 trigger (ignore the theory curves) is

For comparison, the A_LL result for the pion sample looks like

I know it looks like I must have the p/K plots switched, but I rechecked my work and everything was done correctly. Anyway, since p/K is the dominant background, the next step is to use this as the A_LL for the background and use the final contamination estimates from the fits to get a systematic on the pion measurement.

Random Patterns

Triggers are:

| mb | ht1 |

| ht2 | jp1 |

| jp2 | all |

Conclusions: Sigmas of these distributions are ~equal to the statistical error on A_LL. Means are always within 1 sigma of zero.

Systematic Error Table

I've included an Excel spreadsheet with currently assigned systematic errors as an attachment.