TPC Gating Grid Transparency study

Updated on Thu, 2024-05-09 12:07. Originally created by genevb on 2024-05-08 15:01.

The first test was conducted on 2024-05-03 at approximately 11:30-11:45 am EDT (BNL time), covering runs 25124020-25124026. Outside of the test, C-AD had been performing luminosity leveling to keep our BBC coincidence rates at ~40 kHz, implying that the beams were mis-steered to some degree. During the test, we asked C-AD not to perform any luminosity leveling, and over that period there was very little observable change in beam conditions anyway.

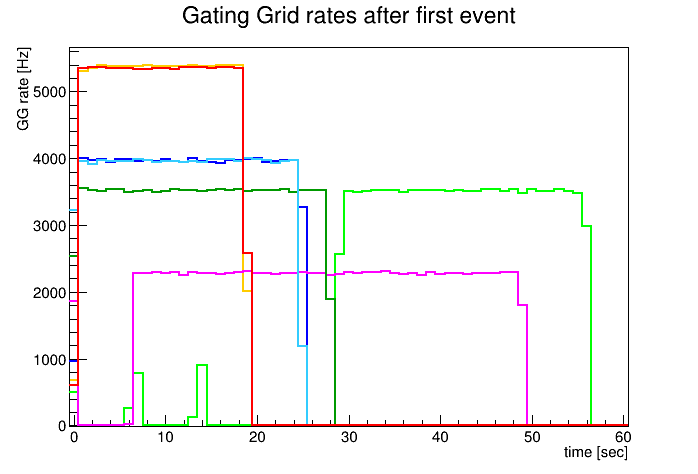

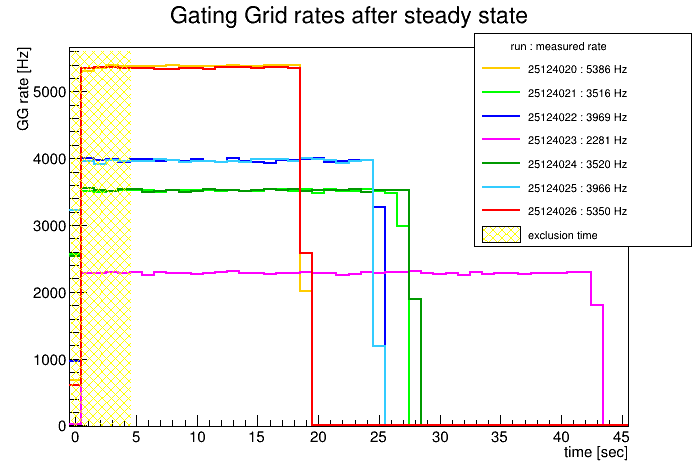

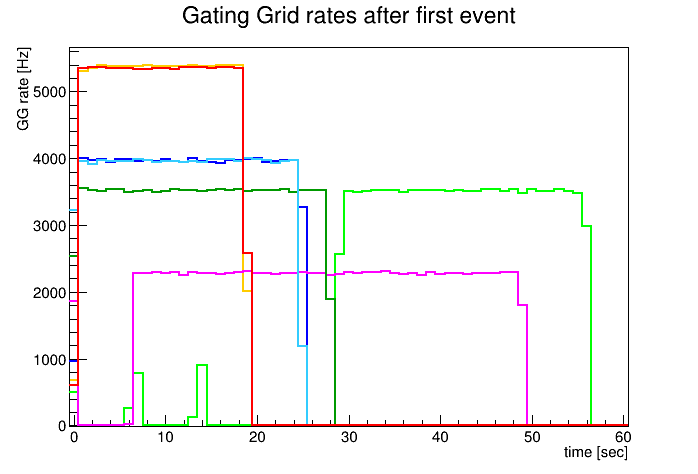

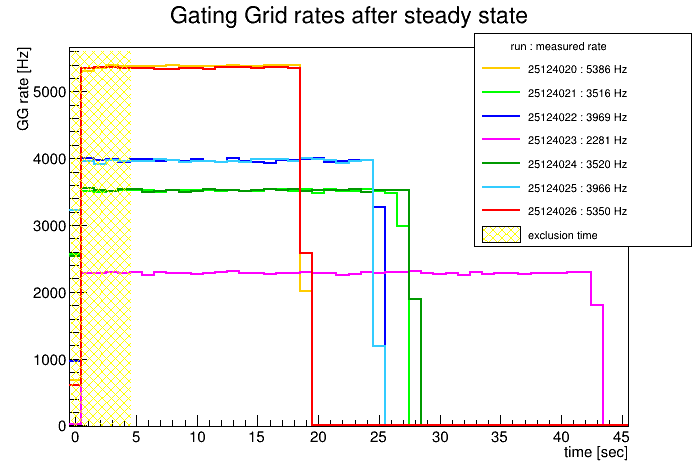

For analysis, I needed to exclude the first few seconds of the runs, to make sure that I was looking at data in a steady state of any possible ion backflow (IBF). The plot on the left shows the number of events recorded per second (found by grepping timestamps from quickly producing all the data without any tracking) as a function of time since the first event of the run. Some of the runs took some time to get settled with their prescales, so I manually offset those to the first partial second of the eventual acquisition speed. This is shown on the right below, including the first 4 full seconds of running at speed, which I elected to ignore, and including (in the legend) the results of fitting the flat-top for each run to obtain the average DAQ (and thereby Gating Grid) rate. For subsequent running, I created instances of the Calibrations_tpc / tpcStatus table that ignore the TPC during that portion of runtime.
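As a rough illustration of the rate extraction described above, here is a minimal Python sketch. It assumes integer-second event timestamps, and it replaces the flat-top fit with a plain average (the unweighted least-squares fit of a constant). The function names and the synthetic counts are hypothetical and are not taken from the actual analysis.

```python
from collections import Counter


def events_per_second(timestamps):
    """Count events in each whole second since the first event of the run."""
    t0 = min(timestamps)
    counts = Counter(int(t - t0) for t in timestamps)
    n_seconds = max(counts) + 1
    return [counts.get(i, 0) for i in range(n_seconds)]


def flat_top_rate(per_second, skip_full_seconds=4):
    """Average rate on the flat-top.

    Drops the first (partial) second, the next `skip_full_seconds` full
    seconds, and the last (possibly partial) second of the run.
    """
    flat = per_second[1 + skip_full_seconds:-1]
    if not flat:
        raise ValueError("run too short to have a flat-top")
    return sum(flat) / len(flat)


# Synthetic example (hypothetical numbers): a partial first second,
# four full seconds that get ignored, a flat-top, and a partial last second.
counts_in = [3, 5, 6, 7, 8, 10, 10, 10, 10, 10, 2]
timestamps = [1000 + s for s, n in enumerate(counts_in) for _ in range(n)]

per_second = events_per_second(timestamps)
rate = flat_top_rate(per_second)
print(f"flat-top rate: {rate:.1f} events/s")
```

In this sketch the ignored window is counted from the first event of the run; for runs that needed time to settle their prescales, the timestamps would first have to be offset by hand, as described above.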

Before processing for SpaceCharge analysis, I calibrated a BeamLine and put that in the database. Based on the BeamLine calibration, I saw that I could use a VPD-TPC agreement cut on vertex z within ±10 cm.
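A minimal sketch of such a vertex agreement cut is below, assuming the cut is applied to the difference between the TPC and VPD vertex z positions. The function name, argument names, and the inclusive boundary are illustrative assumptions, not the actual analysis code.

```python
def passes_vz_agreement(vz_tpc_cm, vz_vpd_cm, max_diff_cm=10.0):
    """Return True if the TPC and VPD vertex z positions agree within the cut.

    Assumption: the cut is on |vz_TPC - vz_VPD| and includes the boundary.
    """
    return abs(vz_tpc_cm - vz_vpd_cm) <= max_diff_cm


# Hypothetical vertex pairs (TPC z, VPD z) in cm
candidates = [(5.0, -3.0), (20.0, 5.0), (-42.0, -37.5)]
kept = [pair for pair in candidates if passes_vz_agreement(*pair)]
print(kept)
```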

I adjusted the SpaceCharge-measuring code to run until it reached 22k good-quality tracks. Here are the numbers of events I had to process to reach that:

25124020 : (91961 processed - 22103 excluded) = 69858 included

25124021 : (89162 processed - 19172 excluded) = 69990 included

25124022 : (87734 processed - 16861 excluded) = 70873 included

25124023 : (81535 processed - 11039 excluded) = 70496 included

25124024 : (85283 processed - 16654 excluded) = 68629 included

25124025 : (87887 processed - 19015 excluded) = 68872 included

25124026 : (92481 processed - 22027 excluded) = 70454 included

=> typically 1 good quality track per ~3 included events

(I chose 22k tracks because I realized that would keep me below the 100k events I had available to process; it appears I may have been able to push to 24k with the cuts I was using, but that increase would be unlikely to change my findings.)
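The bookkeeping in the run list above can be cross-checked with a short Python sketch. The event counts are copied from that list; the only assumption is that each run stopped at exactly 22,000 good-quality tracks, which is what the included-events-per-track ratio is computed against.

```python
TARGET_TRACKS = 22_000  # assumed exact stopping point for every run

# run number: (events processed, events excluded), copied from the list above
runs = {
    25124020: (91961, 22103),
    25124021: (89162, 19172),
    25124022: (87734, 16861),
    25124023: (81535, 11039),
    25124024: (85283, 16654),
    25124025: (87887, 19015),
    25124026: (92481, 22027),
}


def summarize(runs, target_tracks=TARGET_TRACKS):
    """Return {run: (included events, included events per good track)}."""
    summary = {}
    for run, (processed, excluded) in runs.items():
        included = processed - excluded
        summary[run] = (included, included / target_tracks)
    return summary


summary = summarize(runs)
for run, (included, per_track) in summary.items():
    print(f"{run}: {included} included, {per_track:.2f} included events per track")
```

Under that assumption, every run comes out between 3.1 and 3.3 included events per good-quality track, consistent with the "~3" quoted above.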

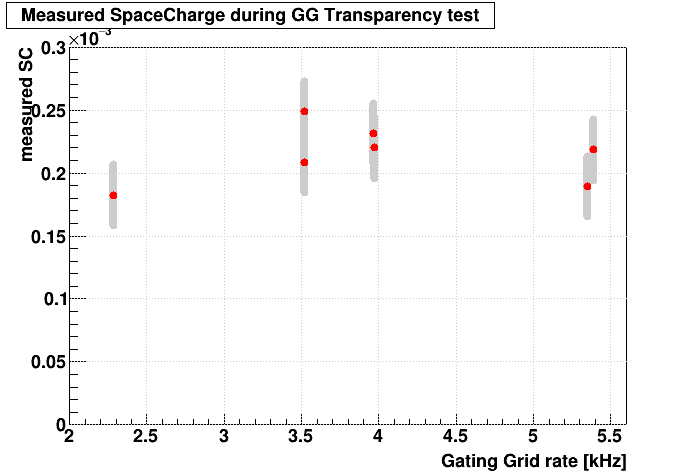

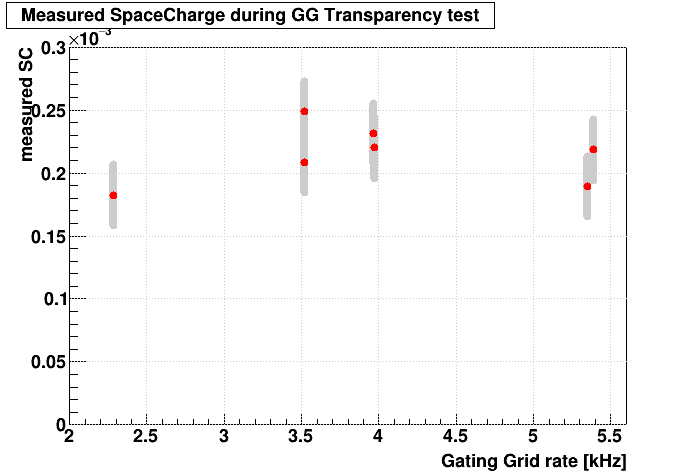

I also modified the histograms used to find SpaceCharge to have 200 bins over [-0.025,0.025]. The error reported on the fit of SpaceCharge was approximately 2.4e-5 in all seven cases. Here are my results showing that error in grey:

The great news is that there is no apparent effect due to opening the gating grid at high rates!

-Gene
