Change in simulation code (RT #3371)

Updated on Fri, 2018-11-16 11:27. Originally created by genevb on 2018-11-16 11:09.

This blog post is in support of RT ticket 3371.

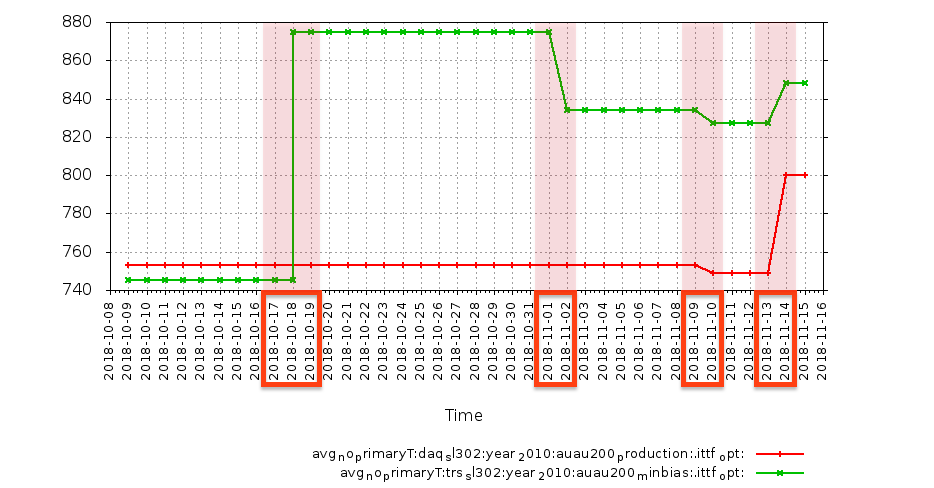

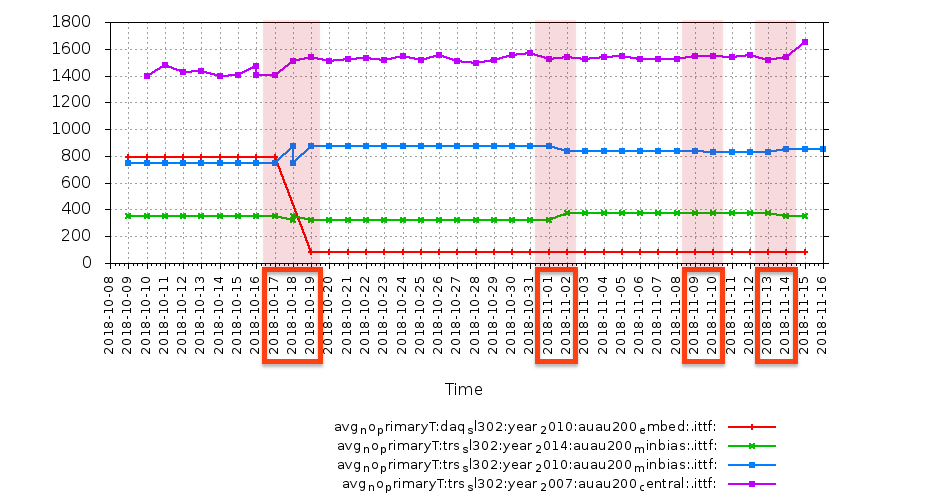

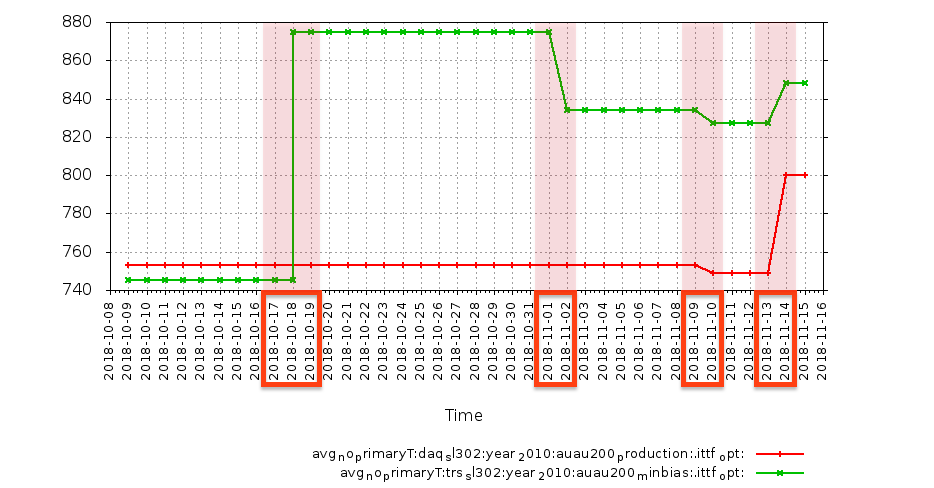

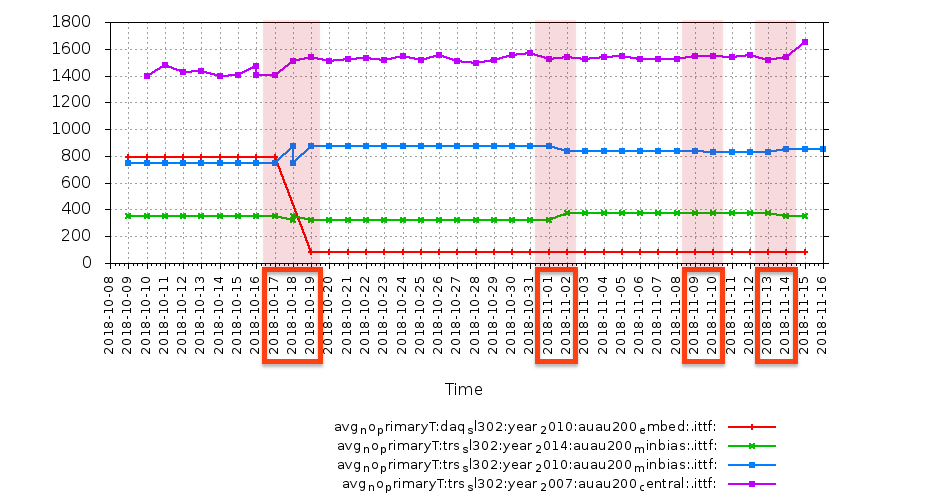

Both plots below show the average number of reconstructed primary tracks per event from nightly test data.

(NB: the nightly tests were run twice on October 18th. DEV did not initially compile because of missing code from Yuri, so it was re-compiled and the nightly tests were re-run the same day. As a result, the plots sometimes show two values for the 18th, and because the timestamps are not fine-grained enough to order them, the plotting code did not necessarily connect the two in the correct order.)

The top plot is real data (red) and simulated data (green), both for Run 10 AuAu200 nightly tests.

The lower plot shows simulated data for Run 10 AuAu200 (blue), simulated data for Run 7 AuAu200 (purple), simulated data for Run 14 AuAu200 (green), and embedded data for Run 10 AuAu200 (red).

(NB: the Run 7 simulation uses a non-fixed random number seed, which explains the volatility, but there is nevertheless a clear step in the results.)

I have highlighted the following events in chronological order:

- October 17th commit by Yuri to "Restore update for Run XVIII dE/dx calibration removed by Gene on 08/07/2018"

- November 1st commit by Yuri for RT ticket 3369, resolving an issue Yuri introduced in February 2017, believed to be the key issue for embedding to match real data as brought forward by the JetCorr PWG

- November 9th commit by Victor to try to resolve the reconstruction issue Victor introduced in June 2016, believed to be the key issue for inefficient track reconstruction as brought forward by the JetCorr PWG

- November 13th commit by Victor to retract a possible enhancement that he committed on November 9th at the same time as the issue resolution codes, leaving only the issue resolution codes in place

- They affect simulation & embedding, not real data reconstruction (unlike Victor's commits, which affect everything), and they affect simulation & embedding by non-trivial percentages that seem very likely to affect efficiency calculations. This has not been proven, but the 17% modification to reconstructed primaries seen in Run 10 simulation is unlikely to mean that only edge-case tracks are affected, and what happened to Run 10 embedding needs understanding too. Which simulation is better: before or after this commit? Do we have a reason for concern about all simulations before this commit?

- They affect all existing years' simulations; their impact is pervasive.

- They affect simulation using the older "TRS" TPC slow simulator (as was used for the Run 7 simulation shown), not just the newer "TpcRS" slow simulator. This indicates that the relevant modification is somewhere deeper in the code than at the level of TpcRS (TRS is an independent code). Fixing fundamental code later, after production starts, such that the fixes do not alter reconstruction, may be non-trivial. There is considerable value in fixing this before it goes into any settled libraries.

- Official productions are not the only users of the simulation and reconstruction codes; there are others doing high priority development work. Making everyone wait for a resolution to known issues needs good justification.
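For anyone wanting to put a number on a step like the ones in the plots, here is a minimal sketch of the arithmetic: the relative change in the mean number of reconstructed primaries per event across a given day. The function name `relative_step`, the data layout, and all of the numbers are hypothetical placeholders; they are not values read from the nightly test plots, and this is not the code that made those plots.

```python
from datetime import date
from statistics import mean


def relative_step(series, commit_day):
    """Return the fractional change in the mean value across commit_day.

    series: iterable of (date, value) pairs, one per nightly test.
    Values dated before commit_day count as "before"; the rest as "after".
    """
    before = [v for d, v in series if d < commit_day]
    after = [v for d, v in series if d >= commit_day]
    if not before or not after:
        raise ValueError("need at least one point on each side of the commit")
    return (mean(after) - mean(before)) / mean(before)


# Placeholder numbers for illustration only; NOT taken from the plots.
nightly = [
    (date(2018, 1, 1), 200.0),
    (date(2018, 1, 2), 200.0),
    (date(2018, 1, 3), 210.0),
    (date(2018, 1, 4), 210.0),
]

step = relative_step(nightly, date(2018, 1, 3))
print(f"{step:+.1%}")  # prints "+5.0%"
```

Averaging over several nights on each side matters most for a sample like the Run 7 simulation, where the non-fixed seed makes any single night's value noisy.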

-Gene
