- leun's home page


FMS Simulation Plan

FMS Simulation for run 11 and run 12 analyses

The ongoing and soon-to-begin FMS analyses clearly need full simulation. My guess is that, at least in the short run, this will not go through the official production chain, for various practical reasons.

Assuming we proceed as in the past by producing "private" simulation samples, I would like to discuss what needs to be done and how.

1. Pythia version and primordial kT

Pythia 6.4 provides a better treatment of photon production, which may be an important difference for my own analysis.

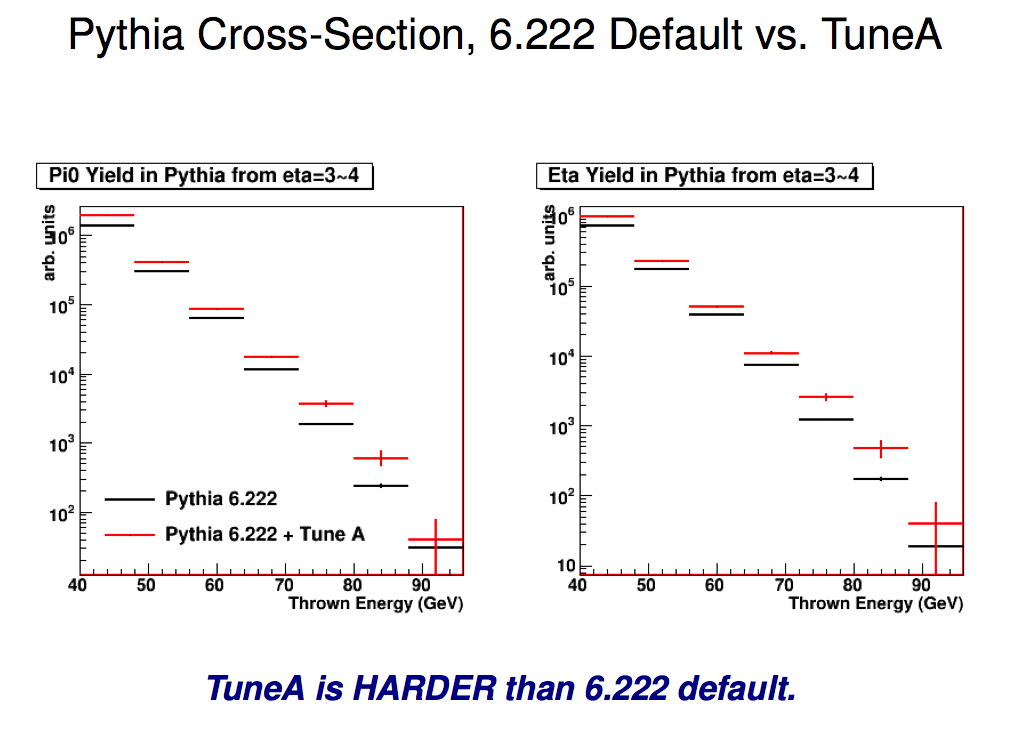

The problem is that the cross-section is too hard compared to the data, but it seems using tuneA should alleviate at least some of this issue. In addition, we had to weight the cross-section anyway, and in some sense having a harder spectrum actually makes it easier to live with smaller simulation sample, as long as we can put correct weights.

Fig. 1. Previous analysis, cross-section with tuneA, both version 6.222
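A minimal sketch of the weighting idea, assuming both the data and the simulated spectrum have been fit with the same power-law form. The functional form and every parameter value below are hypothetical placeholders, not fits from Fig. 1.

```python
def power_law(pt: float, norm: float, p0: float, n: float) -> float:
    """Hypothetical spectrum parametrization: norm * (1 + pt/p0)^(-n)."""
    return norm * (1.0 + pt / p0) ** (-n)


def spectrum_weight(pt: float, data_pars: tuple, sim_pars: tuple) -> float:
    """Per-event weight mapping the simulated spectrum onto the data.

    data_pars and sim_pars are (norm, p0, n) tuples from hypothetical fits.
    """
    return power_law(pt, *data_pars) / power_law(pt, *sim_pars)


# Placeholder fits: the simulation has a smaller exponent, i.e. a harder spectrum.
DATA_PARS = (1.0, 1.5, 9.0)
SIM_PARS = (1.0, 1.5, 8.0)

for pt in (2.0, 4.0, 8.0):
    print(pt, spectrum_weight(pt, DATA_PARS, SIM_PARS))
```

Because the simulated spectrum is harder, the weights fall with pT: high-pT events are over-produced and individually down-weighted, which is what lets a smaller sample still populate the high-pT bins.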

I previously also looked into where these forward events come from.

Job 1: Compare the pi0 cross-section and the origin of the pi0 (hard scattering vs. beam remnant) between 6.22 default and 6.4+tuneA, also as a function of "primordial" kT (MSTP(91), PARP(91)).
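In Pythia 6, MSTP(91)=1 selects a Gaussian primordial kT distribution whose width is set by PARP(91), with an upper cut-off. The sketch below only illustrates what that width controls, assuming the density is proportional to exp(-kT^2/sigma^2) d^2kT (so <kT^2> = sigma^2 before the cut-off); the exact convention should be checked against the Pythia manual, and the function names are hypothetical.

```python
import math
import random


def sample_primordial_kt(sigma: float, kt_max: float, rng: random.Random) -> tuple:
    """Draw (kx, ky) in GeV from a 2D Gaussian ~ exp(-kT^2/sigma^2), rejecting kT > kt_max.

    Each component has standard deviation sigma/sqrt(2), so <kT^2> = sigma^2
    when the cut-off is far above sigma.
    """
    comp_sigma = sigma / math.sqrt(2.0)
    while True:
        kx = rng.gauss(0.0, comp_sigma)
        ky = rng.gauss(0.0, comp_sigma)
        if math.hypot(kx, ky) <= kt_max:
            return kx, ky


def mean_kt2(sigma: float, kt_max: float, n: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of <kT^2> for a given width and cut-off."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        kx, ky = sample_primordial_kt(sigma, kt_max, rng)
        total += kx * kx + ky * ky
    return total / n
```

Scanning `sigma` over the PARP(91) values of interest shows how quickly the mean transverse kick grows, which is the knob Job 1 would vary.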

My guess is that we will run the latter and weight the distribution somehow, although since the FMS has a far larger acceptance than the FPD, I suspect the simple pi0/eta weighting used in the past might be inadequate.
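One possible extension beyond a single pT-dependent weight per species is a weight map binned in two variables, for example (pT, eta), per species. A minimal sketch; the class name, the bin edges, and the weight values are hypothetical placeholders, and returning 1.0 outside the map is a design choice, not an established convention.

```python
from bisect import bisect_right


class BinnedWeights:
    """Per-species weight map binned in (pT, eta), with half-open bins [lo, hi)."""

    def __init__(self, pt_edges, eta_edges):
        self.pt_edges = list(pt_edges)
        self.eta_edges = list(eta_edges)
        self.tables = {}  # species -> table[pt_bin][eta_bin]

    def set_table(self, species, table):
        n_pt, n_eta = len(self.pt_edges) - 1, len(self.eta_edges) - 1
        if len(table) != n_pt or any(len(row) != n_eta for row in table):
            raise ValueError("table shape does not match bin edges")
        self.tables[species] = table

    @staticmethod
    def _find_bin(edges, x):
        i = bisect_right(edges, x) - 1
        return i if 0 <= i < len(edges) - 1 else None

    def weight(self, species, pt, eta):
        """Weight for one particle; 1.0 outside the map or for unknown species."""
        table = self.tables.get(species)
        i = self._find_bin(self.pt_edges, pt)
        j = self._find_bin(self.eta_edges, eta)
        if table is None or i is None or j is None:
            return 1.0
        return table[i][j]


# Placeholder binning and weights, for illustration only.
bw = BinnedWeights(pt_edges=[2.0, 4.0, 8.0], eta_edges=[2.5, 3.2, 4.0])
bw.set_table("pi0", [[1.1, 0.9], [0.8, 0.7]])
```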

We also need to decide what collision energy to run Pythia at. Eventually we will of course need both 200 and 500 GeV, but we do need to set some priorities.

We also have to think more carefully about what information we need to save from Pythia. Previously, I generated a separate tree to store how many pi0s and etas were thrown, since some of them were in events that didn't pass the Pythia filter and were not recorded otherwise. Knowing these things in advance will make things much easier down the road.
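The separate-tree bookkeeping described above amounts to counting thrown particles before the filter decision, so that filter efficiencies can be recovered later. A minimal sketch, assuming each event is reduced to a list of species names; that event format and the class name are hypothetical stand-ins for the real Pythia record and tree.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ThrownBookkeeping:
    """Counts thrown particles for every event, whether or not the filter keeps it."""
    species: tuple = ("pi0", "eta")
    n_events: int = 0
    n_accepted: int = 0
    thrown: Counter = field(default_factory=Counter)
    thrown_in_accepted: Counter = field(default_factory=Counter)

    def record(self, particles, accepted: bool) -> None:
        """particles: species names for one generated event (hypothetical format)."""
        self.n_events += 1
        counts = Counter(p for p in particles if p in self.species)
        self.thrown.update(counts)
        if accepted:
            self.n_accepted += 1
            self.thrown_in_accepted.update(counts)

    def filter_efficiency(self, species: str) -> float:
        """Fraction of thrown particles of this species that ended up in kept events."""
        total = self.thrown[species]
        return self.thrown_in_accepted[species] / total if total else 0.0


bk = ThrownBookkeeping()
bk.record(["pi0", "pi0", "gamma"], accepted=True)
bk.record(["pi0", "eta"], accepted=False)
```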

2. Pythia filter (trigger emulation)

Given the size of the detector, we need an aggressive filter at the Pythia level if we hope to produce ~pb^-1 worth of simulation. (This holds even if we don't run the full Cherenkov simulation; in fact, I'm 99% sure we can't run it anyway.)
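The arithmetic behind the need for a filter: for a generated cross-section sigma, an integrated luminosity L requires N = L * sigma generated events, with 1 mb = 10^9 pb. A minimal sketch; the cross-section and filter efficiency are inputs to be supplied, not numbers from this plan, and the function names are hypothetical.

```python
MB_TO_PB = 1.0e9  # 1 mb = 1e9 pb


def events_needed(lumi_inv_pb: float, sigma_mb: float) -> float:
    """Generated events N = L * sigma for an integrated luminosity L in pb^-1."""
    return lumi_inv_pb * sigma_mb * MB_TO_PB


def events_to_simulate(lumi_inv_pb: float, sigma_mb: float, filter_eff: float) -> float:
    """Events that pass the Pythia-level filter and must go through GEANT."""
    return events_needed(lumi_inv_pb, sigma_mb) * filter_eff
```

For example, a 1 mb generated cross-section already needs 10^9 generated events per pb^-1; the filter leaves that count (and hence the equivalent luminosity) unchanged, provided the number of generated events is recorded, but cuts the GEANT load by its efficiency.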

It seems that Jinguo added some functionality to Akio's "custom" Pythia event reader so that we can very roughly emulate board sums (at least in patch size) or jet patches. These are tedious but straightforward to code, so we can likely improve them fairly quickly once we know what we want.
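A rough sketch of patch-sum emulation on a rectangular grid of cell energies. The grid is a hypothetical stand-in for the real FMS geometry, and the patch sizes, steps, and thresholds are placeholders: non-overlapping tiles (step equal to size) mimic board sums, while larger, overlapping windows (step smaller than size) mimic jet patches.

```python
def patch_sums(grid, size, step):
    """Sum cell energies in size x size windows moved by `step` cells.

    grid: rectangular list of rows of cell energies in GeV (hypothetical layout).
    """
    n_rows, n_cols = len(grid), len(grid[0])
    sums = []
    for r in range(0, n_rows - size + 1, step):
        for c in range(0, n_cols - size + 1, step):
            sums.append(sum(grid[r + i][c + j] for i in range(size) for j in range(size)))
    return sums


def fires(grid, size, step, threshold):
    """True if any patch sum exceeds the threshold."""
    return any(s > threshold for s in patch_sums(grid, size, step))


grid = [[0.0] * 4 for _ in range(4)]
grid[1][1], grid[1][2] = 3.0, 2.0  # a cluster straddling two tiles
```

With this grid, fixed 2x2 tiles see 3.0 and 2.0 GeV separately and miss a 4 GeV threshold, while sliding 2x2 windows catch the full 5.0 GeV, which is the kind of difference the emulation needs to get right.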

Job 2: Figure out the proper Pythia filters. Do we need multiple versions depending on the analysis?

For instance, I am interested in (the hopeless pursuit of) photons, and I suspect this means I will need both board sum and jet patch filters to understand the signal and the jetty background. Naively, emulating the actual trigger mix would seem the obvious thing to do, but I'm not sure it is the best approach.
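One way to emulate a trigger mix without committing to a single filter is to keep an event if any emulated trigger fires and store a bitmask of which ones did, so each analysis can select its own subset later. A minimal sketch; the trigger names, event fields, and thresholds are hypothetical placeholders.

```python
from typing import Callable, Dict

TriggerFn = Callable[[dict], bool]  # hypothetical: event summary -> fired?


def evaluate_triggers(event: dict, triggers: Dict[str, TriggerFn]) -> int:
    """Return a bitmask with bit i set if the i-th trigger (in dict order) fired."""
    mask = 0
    for bit, fn in enumerate(triggers.values()):
        if fn(event):
            mask |= 1 << bit
    return mask


def keep_event(mask: int) -> bool:
    """Keep the event if any emulated trigger fired (logical OR of the mix)."""
    return mask != 0


# Placeholder trigger definitions; thresholds in GeV are illustrative only.
TRIGGERS: Dict[str, TriggerFn] = {
    "board_sum": lambda ev: ev["max_board"] > 1.5,
    "jet_patch": lambda ev: ev["max_jp"] > 3.0,
}
```

Storing the mask rather than only the keep/drop decision is one possible answer to whether we need separate samples per analysis: a single OR-filtered sample can serve several analyses.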

3. GSTAR shower simulation

Eventually we will need a reasonable substitute user routine for the full Cherenkov simulation if we hope to get a sample large enough for efficiency corrections and background studies. As far as I can tell, we mainly need the correct shower shape and energy dependence. Perhaps we should try what Carl suggested: multi-segment Pb-glass with dE/dx, weighting each segment appropriately.
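Carl's suggestion could be prototyped as a weighted sum of dE/dx deposits per longitudinal segment of a cell. In this sketch the segment boundaries, the weights, and the (depth, dE) step format are hypothetical; in practice the weights would be tuned so the summed response reproduces the full Cherenkov simulation's energy dependence and shower shape.

```python
def segment_index(depth_cm: float, segment_edges_cm) -> int:
    """Return the longitudinal segment containing depth_cm, or -1 if outside."""
    for i in range(len(segment_edges_cm) - 1):
        if segment_edges_cm[i] <= depth_cm < segment_edges_cm[i + 1]:
            return i
    return -1


def weighted_response(steps, segment_edges_cm, weights) -> float:
    """Approximate a Cherenkov-like signal from dE/dx steps in one cell.

    steps: iterable of (depth_cm, dE_GeV) pairs (hypothetical format).
    weights: one multiplicative weight per segment (placeholders to be tuned).
    """
    if len(weights) != len(segment_edges_cm) - 1:
        raise ValueError("need one weight per segment")
    total = 0.0
    for depth, de in steps:
        i = segment_index(depth, segment_edges_cm)
        if i >= 0:
            total += weights[i] * de
    return total


EDGES = [0.0, 15.0, 30.0, 45.0]  # placeholder segment boundaries
WEIGHTS = [1.0, 0.8, 0.5]        # placeholder per-segment weights
```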

Job 3: Come up with an alternative user routine that captures the essence of the Cherenkov shower.

My understanding is that Steve and Chris probably know the most about this, and also have the best tools. We also need to decide whether we will stick with GSTAR, or go with Geant4 so as to avoid the thin volume issue.
