Update on TMVA Regression with weight:0

Updated on Thu, 2020-01-09 13:57. Originally created by rsalinas on 2020-01-07 18:43.

Weighting

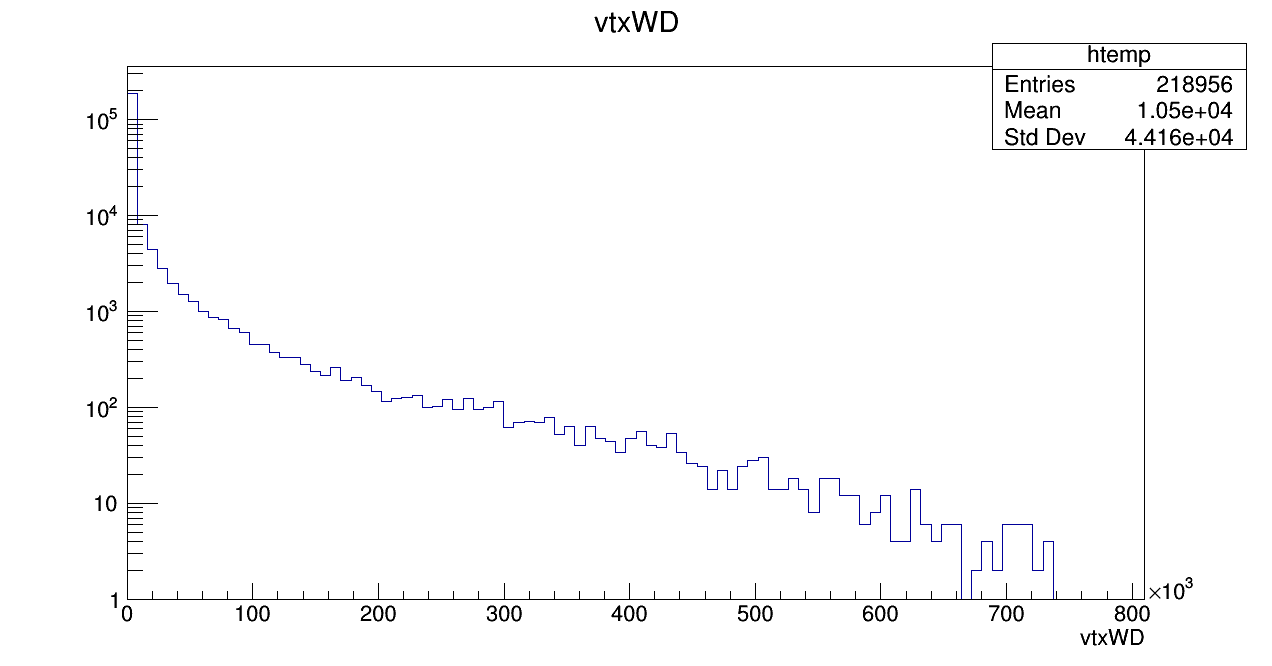

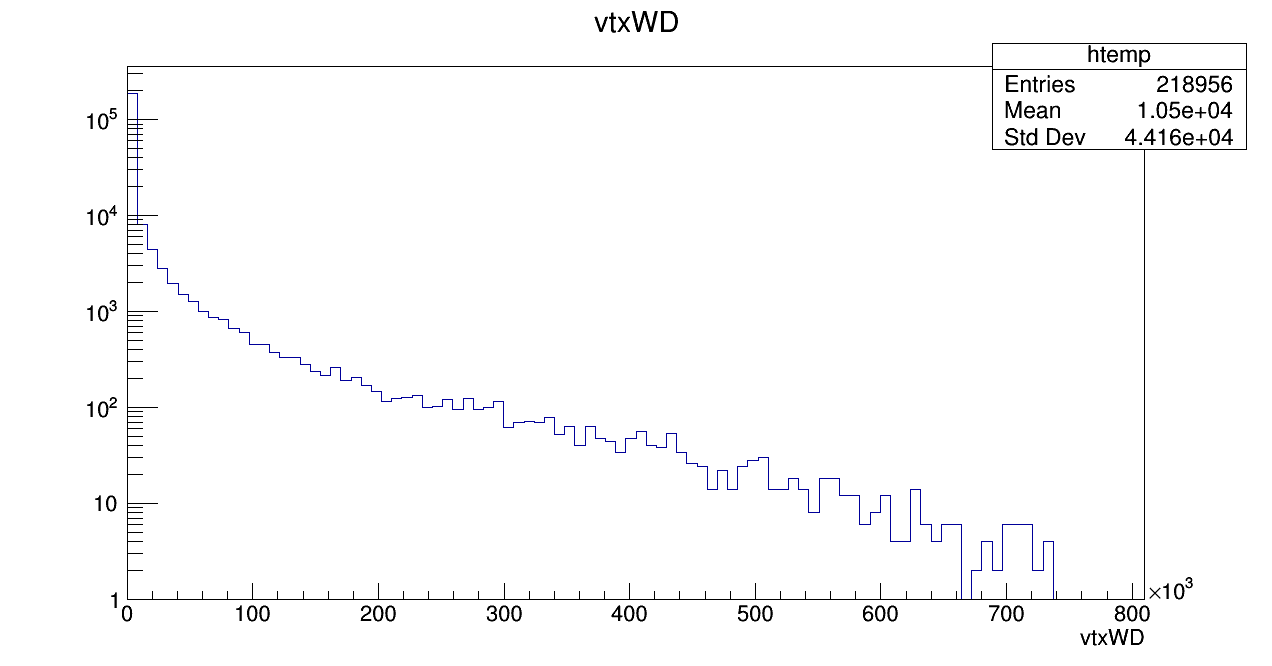

In this regression, I added a weight branch to the TTree containing a weight taken from the embedding. The weight consisted of the partonic weight for the matched detector jet, the kt weight, and the VPD vertex weight. It was only saved to the TTree once a detector jet was matched to a particle jet. Here is a plot of what the weight looked like for all the events:

Regression

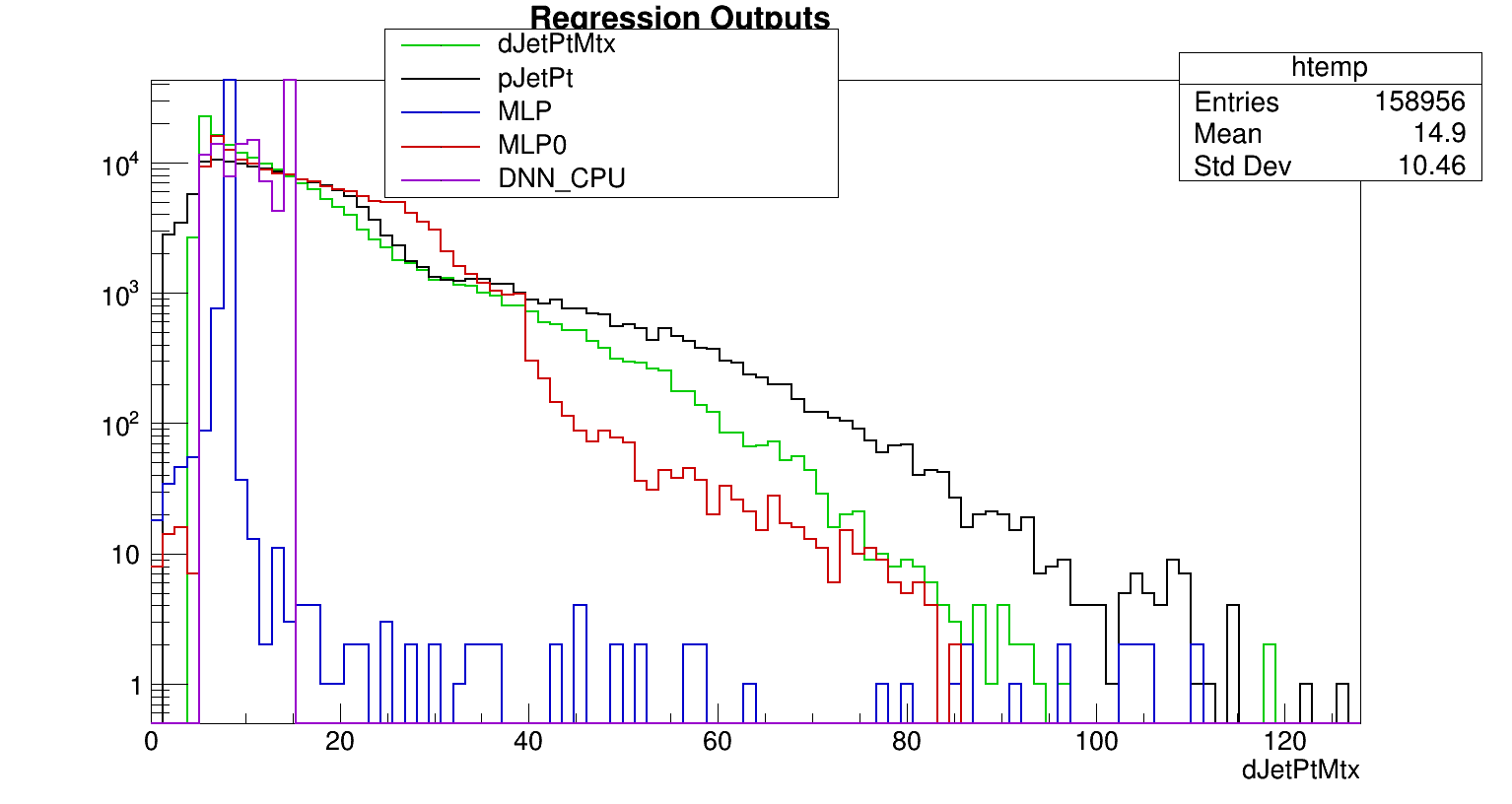

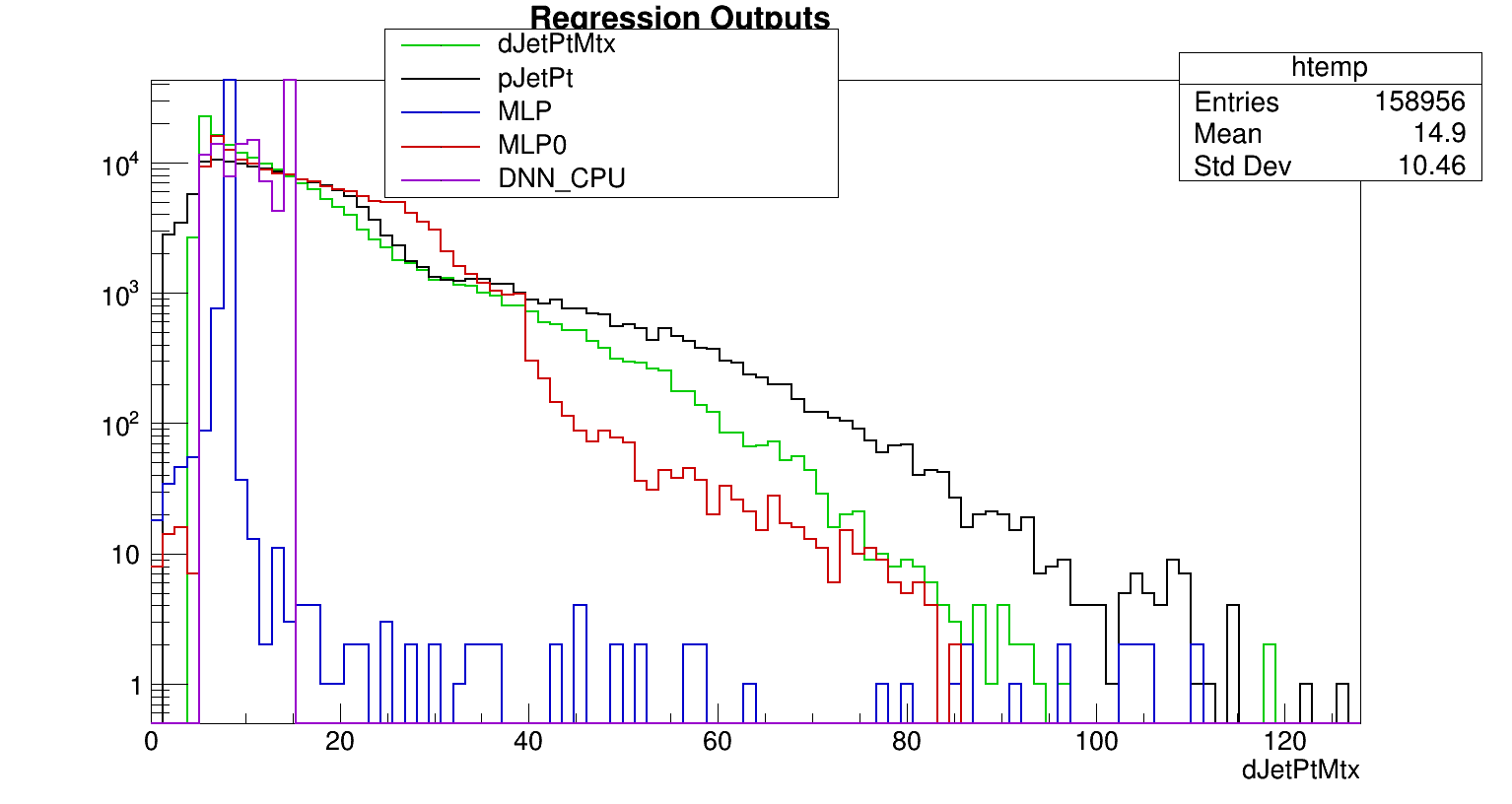

The weight was then passed to the regression via "SetWeightExpression()" to assign an individual weight to each event saved in the TTree. Whether the event-by-event weighting is applied to the input variables, the regression target, or both is still not clear to me, but based on how the regression looks I suspect it is applied to the input variables. Here is the regression training output, using 60,000 out of 218,958 events, for three methods (MLP, MLP0, DNN_CPU), along with the input variables and regression target:

MLP

- Neuron type: tanh

- Hidden layers: 2

- Number of neurons per layer: 20, 20

- Learning rate: 0.01

- Test rate: 10 (every 10 epochs)

- Training method: Back Propagation (BP)

MLP0

- Neuron type: tanh

- Hidden layers: 1

- Number of neurons per layer: 30

- Learning rate: 0.001

- Test rate: 10 (every 10 epochs)

- Training method: BFGS

DNN_CPU

- Neuron type: tanh

- Hidden layers: 3

- Number of neurons per layer: 50, 50, 50

- Learning rate: 0.01, 0.001, 0.0001

- Test rate: 10, 5, 5
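For reference, the settings listed above can be written as TMVA-style option strings of the kind passed when booking a method. The sketch below is plain Python that only assembles the strings. The helper names are illustrative, and the DNN keys (`Layout`, `TrainingStrategy`, `TestRepetitions`, `Architecture`) and the trailing `LINEAR` output layer are assumptions based on the usual TMVA regression examples, not taken from the actual macro, so they should be checked against the TMVA Users Guide.

```python
# Sketch only: assemble TMVA-style option strings from the settings in the post.
# Helper names are hypothetical; nothing here calls ROOT or TMVA.

def mlp_options(neuron_type, hidden_layers, learning_rate, test_rate, training_method):
    """Join MLP settings into a colon-separated 'Key=Value' option string."""
    parts = [
        f"NeuronType={neuron_type}",
        "HiddenLayers=" + ",".join(str(n) for n in hidden_layers),
        f"LearningRate={learning_rate}",
        f"TestRate={test_rate}",
        f"TrainingMethod={training_method}",
    ]
    return ":".join(parts)


def dnn_options(activation, hidden_layers, learning_rates, test_rates):
    """Build a DNN option string with one training phase per learning rate.

    Assumption: a LINEAR output layer is appended for regression, and the
    post's 'test rate' maps onto the TestRepetitions key.
    """
    layout = ",".join(f"{activation.upper()}|{n}" for n in hidden_layers) + ",LINEAR"
    phases = "|".join(
        f"LearningRate={lr},TestRepetitions={tr}"
        for lr, tr in zip(learning_rates, test_rates)
    )
    return f"Layout={layout}:TrainingStrategy={phases}:Architecture=CPU"


MLP = mlp_options("tanh", [20, 20], 0.01, 10, "BP")
MLP0 = mlp_options("tanh", [30], 0.001, 10, "BFGS")
DNN_CPU = dnn_options("tanh", [50, 50, 50], [0.01, 0.001, 0.0001], [10, 5, 5])

for name, opts in [("MLP", MLP), ("MLP0", MLP0), ("DNN_CPU", DNN_CPU)]:
    print(f"{name}: {opts}")
```

Keeping the settings in one place like this makes it easier to change a single value (for example the learning rate) and rebook the method without retyping the whole string.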

pJetPt: particle jet pt

dJetPtMtx: pt of the detector jet matched to the particle jet
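On the open question of where the event weight enters: in weighted training generally, a per-event weight usually multiplies that event's contribution to the loss and leaves the input variables and the target unchanged. Whether TMVA does exactly this here is an assumption that should be checked against the TMVA Users Guide. The sketch below, in plain Python with made-up numbers (the names `weighted_mse`, `predicted`, and `p_jet_pt` are illustrative and not from the analysis code), shows what that convention would look like:

```python
# Sketch only: a weighted mean squared error, where each event's weight
# scales its squared error. Inputs and targets themselves are never modified.

def weighted_mse(predictions, targets, weights):
    """Return sum(w * (pred - target)^2) / sum(w)."""
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("sum of weights must be positive")
    weighted_errors = sum(
        w * (p - t) ** 2 for p, t, w in zip(predictions, targets, weights)
    )
    return weighted_errors / total_weight


# Hypothetical toy values standing in for regression outputs and pJetPt.
predicted = [10.0, 20.0, 30.0]
p_jet_pt = [12.0, 20.0, 27.0]

# Equal weights: every event counts the same.
uniform = weighted_mse(predicted, p_jet_pt, [1.0, 1.0, 1.0])

# One event with a much larger weight dominates the loss.
skewed = weighted_mse(predicted, p_jet_pt, [100.0, 1.0, 1.0])

print(uniform, skewed)
```

Under this convention, a few events with very large weights would dominate what the network is asked to fit, which is one possible reason a weighted training can look very different from an unweighted one.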

Conclusion

Looking at the regression, all three methods behaved terribly once the weight was incorporated. I believe the weight is being applied to the input variables (eta, dJetPtMtx), with the regression then trying to re-create pJetPt from them. However, I am not sure, and I would need to read the TMVA manual carefully and experiment. Relatively speaking, the only method that didn't behave completely terribly is MLP0. There are several factors to consider, including whether the weighting is applied event-by-event only to the input variables or to both the input variables and the regression target. The following are a few settings that could be tweaked to improve the regression:

- Learning rate

- Number of training events

- Epoch testing

- Neuron type

- Change weight (?)
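For the last item, one quick check before changing the weight is how many events effectively contribute once the weights are applied. The sketch below uses the Kish effective sample size, (Σw)² / Σw², in plain Python. The weight lists are invented for illustration and are not the embedding weights, and the function name is hypothetical.

```python
# Sketch only: Kish effective sample size for a list of event weights.
# A value far below the number of events means a few events carry most
# of the total weight.

def effective_events(weights):
    """Return (sum w)^2 / sum(w^2)."""
    sum_w = sum(weights)
    sum_w2 = sum(w * w for w in weights)
    if sum_w2 == 0:
        raise ValueError("at least one weight must be non-zero")
    return sum_w * sum_w / sum_w2


# Invented examples: equal weights versus a steeply falling weight spectrum.
flat = [1.0] * 1000
steep = [1.0] * 10 + [1e-4] * 990

n_flat = effective_events(flat)
n_steep = effective_events(steep)

print(n_flat, n_steep)
```

If the real weight distribution gives an effective count much smaller than the 60,000 training events, then rescaling or clipping the weight, or training on more events, may be worth trying alongside the other settings in the list.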

In retrospect, this is a step forward, as it gives me insight into what works and what doesn't.
