Update on TMVA Regression with weight:1

Updated on Thu, 2020-01-09 14:37. Originally created by rsalinas on 2020-01-09 14:35.

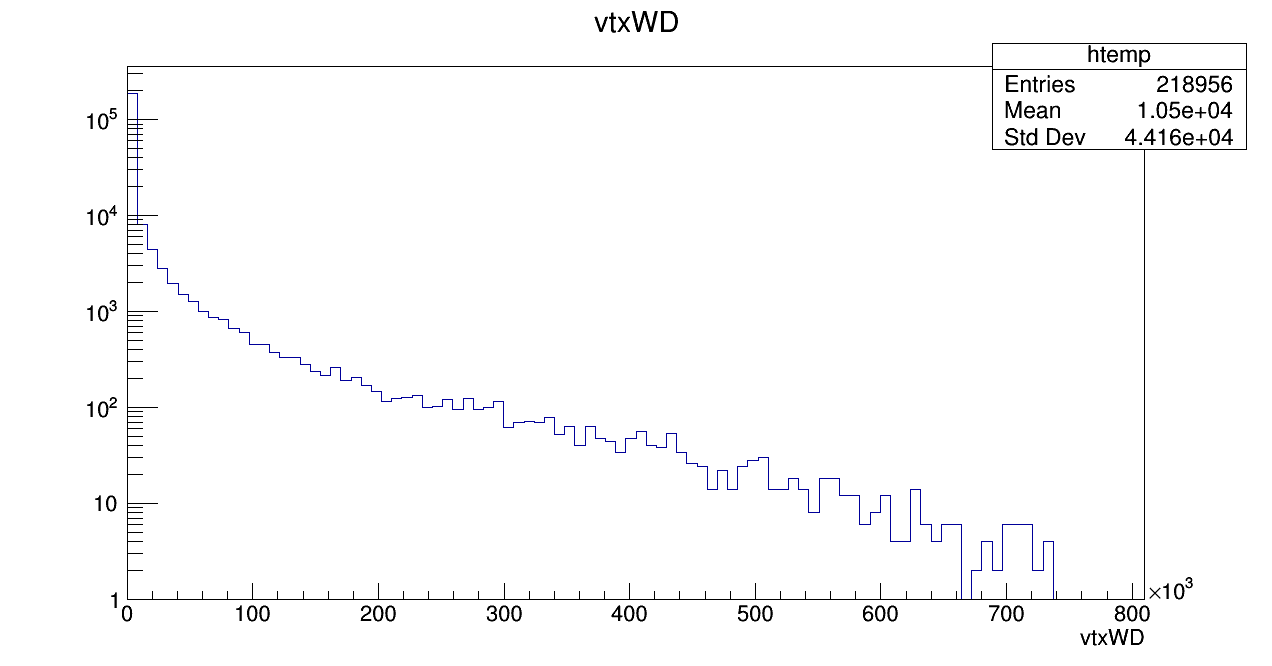

This is an update on the regression, using the same weight (vtxWD) as in the first update.

Here it is again for reference

Regression

This time around, two different instances of the MLP method were used for the regression:

MLP

- Neuron activation type: tanh

- Neuron input type: Sum (dictates how the inputs to each neuron are combined)

- NCycles: 10000

- Hidden Layers: N + 100 (N is the number of input variables, 2 in this case)

- Learning rate: 0.02

- Training method: BFGS

MLP_0

- Neuron activation type: tanh

- Neuron input type: Sum (dictates how the inputs to each neuron are combined)

- NCycles: 500

- Hidden Layers: N, N-1 (N is the number of input variables, 2 in this case)

- Learning rate: 0.02

- Training method: BFGS
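For reference, the two configurations above can be written as TMVA option strings. This is only a sketch: the helper `mlp_options` is a hypothetical name, and the actual booking code is not part of this post. The option keys (`NeuronType`, `NeuronInputType`, `NCycles`, `HiddenLayers`, `LearningRate`, `TrainingMethod`) are the standard TMVA MLP options, and the values are the ones listed above.

```python
def mlp_options(neuron_type, neuron_input_type, n_cycles,
                hidden_layers, learning_rate, training_method):
    """Join MLP settings into a colon-separated TMVA option string."""
    settings = [
        ("NeuronType", neuron_type),
        ("NeuronInputType", neuron_input_type),
        ("NCycles", n_cycles),
        ("HiddenLayers", hidden_layers),
        ("LearningRate", learning_rate),
        ("TrainingMethod", training_method),
    ]
    return ":".join(f"{key}={value}" for key, value in settings)


# Values taken from the two lists above.
MLP_OPTIONS = mlp_options("tanh", "sum", 10000, "N+100", 0.02, "BFGS")
MLP_0_OPTIONS = mlp_options("tanh", "sum", 500, "N,N-1", 0.02, "BFGS")

print(MLP_OPTIONS)
print(MLP_0_OPTIONS)

# With PyROOT these strings would typically be passed to the factory,
# for example (factory and dataloader are assumed to exist already):
#   factory.BookMethod(dataloader, ROOT.TMVA.Types.kMLP, "MLP", MLP_OPTIONS)
#   factory.BookMethod(dataloader, ROOT.TMVA.Types.kMLP, "MLP_0", MLP_0_OPTIONS)
```

Keeping both strings next to each other makes it easy to see that only `NCycles` and `HiddenLayers` differ between the two methods.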

The biggest differences between these two methods are the number of neurons and the number of training cycles. This leads to a large discrepancy between the results of the two methods.
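To put the size difference in numbers, here is a rough count of neurons and weights for the two hidden-layer settings with N = 2. It is a sketch under stated assumptions: fully connected layers, one bias node feeding every layer after the input, and a single regression target. The function names are hypothetical and only the forms `N`, `N+k`, `N-k` and a plain integer are handled.

```python
def layer_size(expr, n_vars):
    """Evaluate one hidden-layer entry: "N", "N+k", "N-k" or a plain integer."""
    expr = expr.replace(" ", "")
    if "N" not in expr:
        return int(expr)
    offset = expr.replace("N", "", 1)  # "" for "N", "+100" for "N+100", "-1" for "N-1"
    return n_vars + (int(offset) if offset else 0)


def hidden_layer_sizes(spec, n_vars):
    """Expand a comma-separated spec such as "N,N-1" into a list of neuron counts."""
    return [layer_size(part, n_vars) for part in spec.split(",")]


def count_weights(hidden, n_inputs, n_outputs=1):
    """Count weights, assuming dense layers plus one bias node per source layer."""
    layers = [n_inputs] + hidden + [n_outputs]
    return sum((src + 1) * dst for src, dst in zip(layers, layers[1:]))


N_VARS = 2  # two input variables, as stated above

mlp_hidden = hidden_layer_sizes("N+100", N_VARS)   # [102]
mlp0_hidden = hidden_layer_sizes("N,N-1", N_VARS)  # [2, 1]

print("MLP:  ", mlp_hidden, count_weights(mlp_hidden, N_VARS), "weights")
print("MLP_0:", mlp0_hidden, count_weights(mlp0_hidden, N_VARS), "weights")
```

Under these assumptions MLP has 409 weights against 11 for MLP_0, on top of 20 times as many training cycles.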

However, this is an improvement over last time, as the outputs of both methods now somewhat resemble the pJetPt with the weight included.
