Investigating the refmult distribution in QA-1 for Run11 Au+Au 200 GeV (2015-07-26)

Motivation:

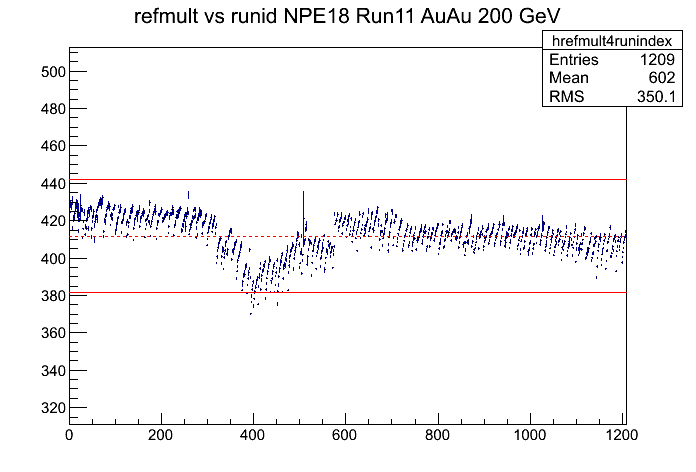

Runs 12138081 to 12145020 (May 18-25) show a dip in the refmult distribution, as shown in the figure above (x-axis: run index, ordered by non-empty run number; y-axis: refmult).
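
The run numbers and dates quoted above are consistent with a run number laid out as a two-digit run-year prefix, a three-digit day of year, and a three-digit sequence number. A minimal sketch of that decoding follows; the layout is an assumption inferred from the two run numbers in this post, and the calendar year is passed in explicitly rather than derived from the prefix.

```python
from datetime import date, timedelta


def run_number_to_date(run_number: int, calendar_year: int) -> date:
    """Decode the calendar date from a run number.

    Assumes (not stated in the post) the layout YYDDDNNN:
    run-year prefix, day of year, sequence number within the day.
    """
    day_of_year = (run_number // 1000) % 1000
    if not 1 <= day_of_year <= 366:
        raise ValueError(f"unexpected day of year in run {run_number}")
    return date(calendar_year, 1, 1) + timedelta(days=day_of_year - 1)


# First and last run of the period with the dip.
first_day = run_number_to_date(12138081, 2011)
last_day = run_number_to_date(12145020, 2011)
print(first_day.isoformat(), last_day.isoformat())
```

With this reading, the two boundary runs land on May 18 and May 25, 2011, matching the dates given in the text.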

The dip is present in all sections.
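
One simple way to pick out such a period automatically is to compare each run's mean refmult with the median over all runs. The sketch below is illustrative only: the function name, the 5% threshold, and all numerical values are made up for the example and are not taken from the QA plots; the run numbers other than 12138081 and 12140029 are placeholders.

```python
from statistics import median


def flag_low_runs(mean_refmult_by_run, rel_drop=0.05):
    """Return the runs whose mean refmult is more than rel_drop below the median.

    The median is used as the reference so that a minority of low runs
    does not pull the reference down with it.
    """
    reference = median(mean_refmult_by_run.values())
    threshold = (1.0 - rel_drop) * reference
    return [run for run, mean in sorted(mean_refmult_by_run.items())
            if mean < threshold]


# Hypothetical per-run mean refmult values, for illustration only.
example = {
    12137010: 400.0,
    12137020: 401.5,
    12138081: 370.0,
    12140029: 368.5,
    12146010: 399.0,
}
print(flag_low_runs(example))
```

A median reference only works while the affected runs are a minority of the sample; for a longer bad period a fixed reference from known-good runs would be needed.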

Original post:

drupal.star.bnl.gov/STAR/blog/yili/qa-1-run11-auau-200-gev-2015-07-26

Hui Wang's centrality study showed that, compared with MC, the refmult distribution fails to match the MC shape at the large-refmult end, while it agrees well at smaller refmult. See slide 14 in www.star.bnl.gov/protected/bulkcorr/wanghui6/my_talks/Run11_200GeV_refMult.pdf

Some QA plots from Yi Guo: drupal.star.bnl.gov/STAR/system/files/Run11AuAu200QA_additional.pdf

Dataset:

Au+Au 200 GeV run11

trigger:

NPE_18 (350503, 350513)

Centrality 0-20% from StRefMultCorr

Discussion:

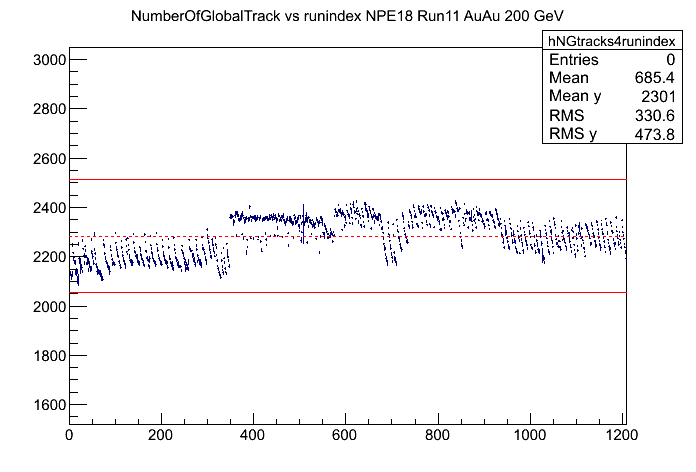

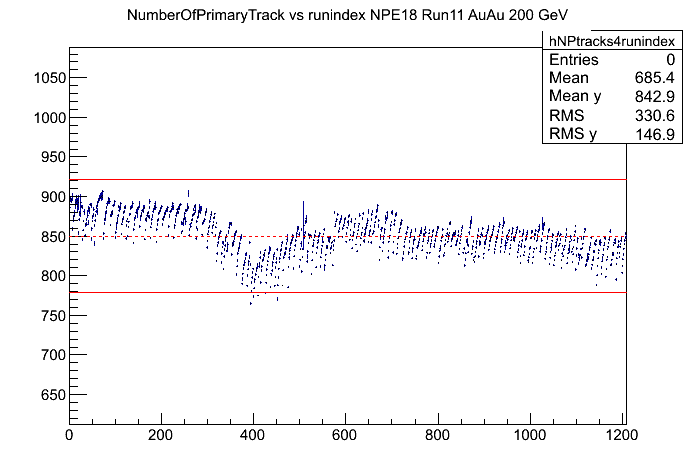

The dip appears only in primary tracks, not in global tracks or in other detectors.

Number of Global tracks

Number of Primary tracks

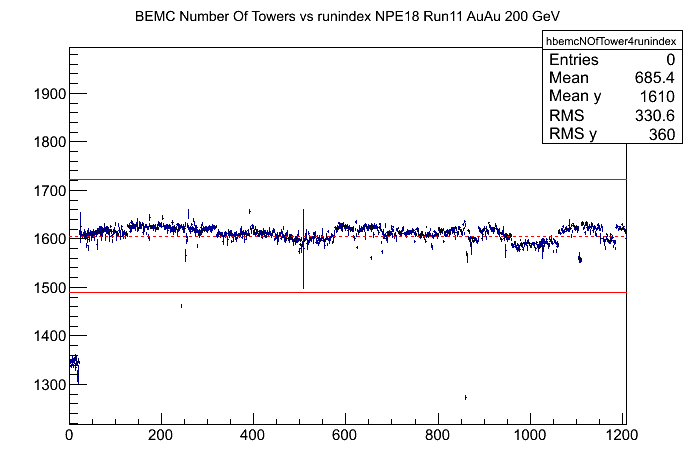

The dip is not present in the number of BEMC towers hit.

TPC gas pressure (PT_1 in online.star.bnl.gov/dbPlotsRun11/, thanks to L. C. De Silva) around the period of May 18-25: no difference is observed relative to the week before or the week after.

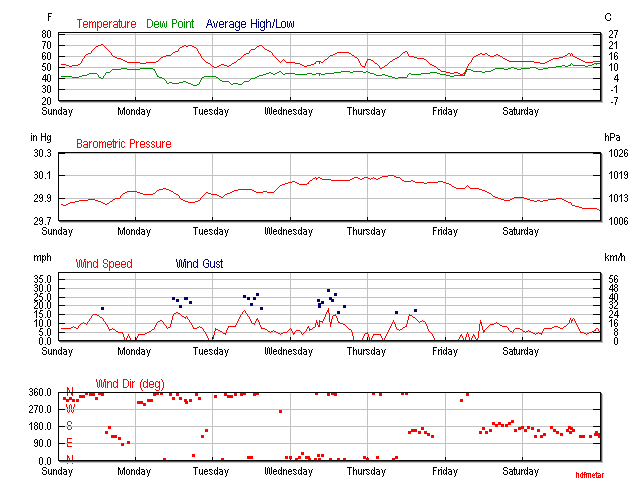

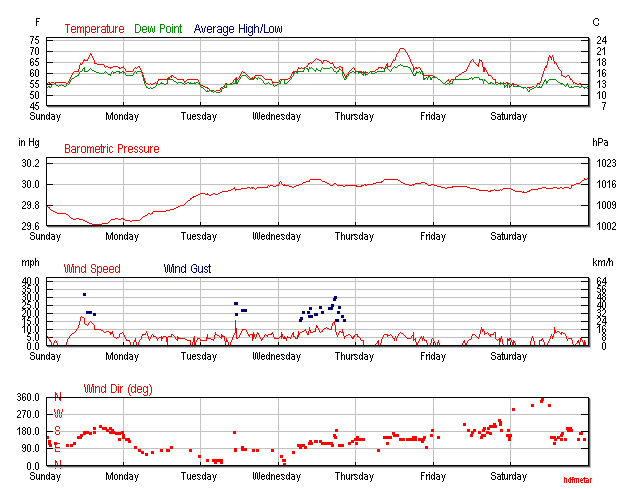

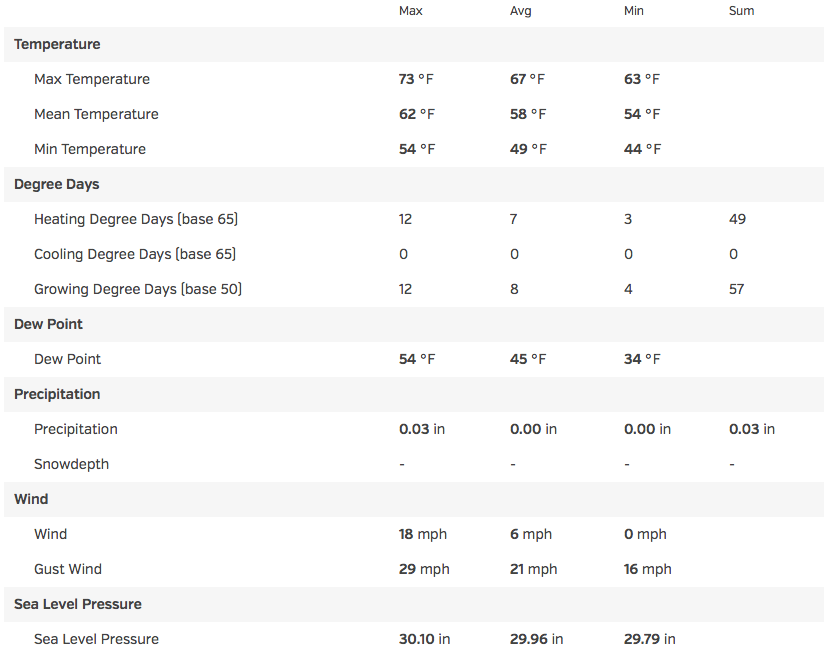

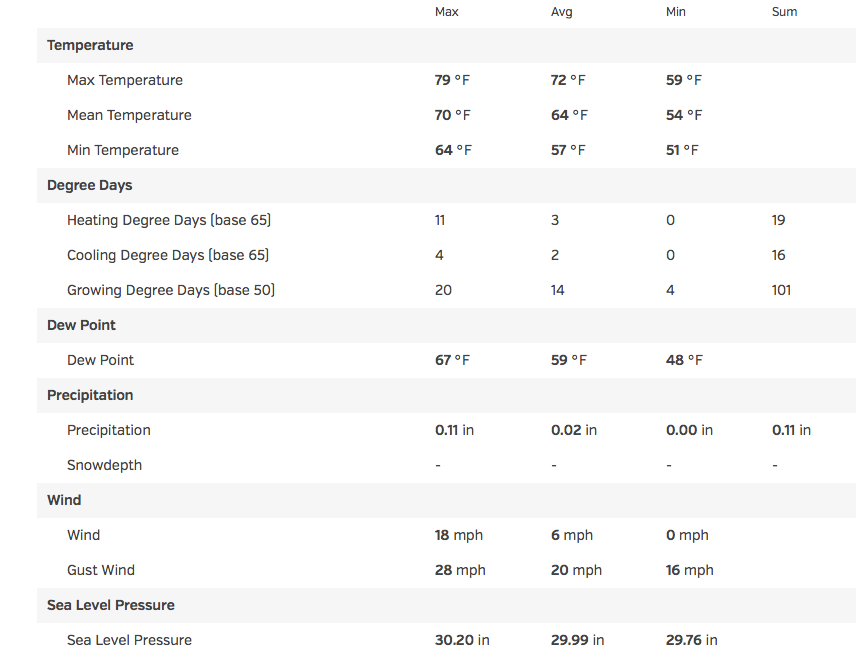

Weather condition:

[Two sets of weather plots, each with three panels: a week before | week of May 15-21 | a week after]

The weather was more or less stable, except for 1.6 inches and 1.2 inches of rain on May 17 and May 18, respectively.

Shift log

Right before run 12138081:

May 18, 2011

| 16:29

General |

Note from Jeff:

He updated the trigger: adding 3 triggers for the high towers which included the FTPC, reduced the minbias rate by ~ 10% and increased the rate on the high-tower triggers.

- Matt Lamont |

| 21:39

General |

Runs 12138077-12138080 were unsuccessful because the presence of FTPC caused lots of problems. We called Jeff and he is looking into it. For now, we are taking data without FTPC.

- Oleksandr Grebenyuk |

| 21:50

General |

Run 12138081 - This is probably a good run, although it has stopped by itself with an EVB01 error. Nevertheless, I will mark it as a good run.

- Oleksandr Grebenyuk |

In the middle of the period, things started to improve.

May 20, 2011

| 10:50

General |

Run 12140029 - AuAu200_production_2011: 628K events. Run stopped so Jeff can implement future protection.

- Matt Lamont |

| 12:31

General |

Run 12140042 - AuAu200_production_2011

2x10^7 triggers requested turned into 1.2x10^7 minbias with the future protection aborts added by Jeff. Interestingly, although we asked for 2x10^7 triggers, the run didn't stop of its own accord. It got to ~ 50 short of this and then no more new triggers came through. A beam dump has just been issued so I will keep my eyes on this during the next fill. |

May 21, 2011

| 07:29 (07:29) General |

Summary Report - Morning Shift

EVB05 has been out since the evening shift, and remains out at Jeff's instruction. As Matt noted in the day shift log, runs no longer stop when the requested number of triggers are reached: no more events are taken but the run has to be stopped manually. - Thomas Burton |

| 08:42

General |

Run 12141027 - AuAu200_production_2011: 318K triggers requested, 174K minbias triggers recorded.

Again, the future-guardian trigger was running at 100% dead for the Scaler rate. It started at 98% dead but after 60K triggers, it went to 100% dead. This also causes the number of minbias aborts to stop, meaning that some of the run has future protection and some of the run doesn't.

- Matt Lamont |

| 09:46

General |

Future-guardian trigger issue:

With the first run in this fill, I noticed that the future-guardian trigger went 100% dead some way into the run. The future-guardian and TPX deadtime should sum to 100% but the TPX was still running at 2-3% dead when the future-guardian trigger was 100% dead. When this goes dead, the number of events aborted in the minbias trigger no longer increases which is a problem because for that particular run, some of it is future protected, some of it not. We did some more tests and the final run with the future-guardian in was run 12141030. After talking to Jeff about this on his cell, we decided just to remove this trigger for now and only to bring it back once he has had a chance to look at it. I will try and figure out if this was always the case with this trigger (which was introduced yesterday) or is only recently introduced.

- Matt Lamont |
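
The consistency condition described in this log entry (future-guardian and TPX dead times summing to 100%) can be written as a one-line check. This is only a sketch of the arithmetic: the function name and the 1% tolerance are assumptions, and the example values pair the 98% and 2-3% figures quoted in the log entries above.

```python
def deadtimes_consistent(future_guardian_dead_pct: float,
                         tpx_dead_pct: float,
                         tolerance_pct: float = 1.0) -> bool:
    """True if the two dead-time percentages sum to 100% within tolerance.

    The tolerance is an illustrative choice, not a value from the shift log.
    """
    total = future_guardian_dead_pct + tpx_dead_pct
    return abs(total - 100.0) <= tolerance_pct


# Start of the run: future-guardian at 98% dead, TPX at about 2% dead.
print(deadtimes_consistent(98.0, 2.0))
# Later in the run: future-guardian at 100% dead while TPX is still 2-3% dead.
print(deadtimes_consistent(100.0, 2.5))
```

The second case fails the check, which is the symptom reported in the log.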

| 09:53

General |

Run 12141032 - AuAu200_production_2011: 530K events recorded with no future_guardian trigger.

We noticed a strange distribution in the barrel pre-shower and we have stopped the run and will do a 'BEMC Prepare for Physics' to see if this clears the problem.

- Matt Lamont |

| 10:14

General |

Future_guardian trigger - more info:

So, by plotting the trigger info of just the future_guardian trigger (RunLog Browser -> Trigger details -> Display plots), I traced the first bad run to run number 12140052. This was taken at 19:00 yesterday evening. Note that there were 4 good runs with the future-guardian trigger in before this problem occurred and that for this run, the 100% dead time (and hence event rate of 0 Hz for this trigger) occurred about 12 minutes into a 50 minute run.

- Matt Lamont |

| 16:20 (16:29) General |

Summary Report - Afternoon Shift

So, from the start of the run today, I noticed that there was a problem with the future_guardian trigger in that it was going 100% dead which meant that no min bias events were being aborted. After some diagnosis, this started happening yesterday evening. Details are in the shift log at the start of the shift. It has been taken out until further notice. Also, I noticed that the UPC_main trigger was not taking any data. This was traced to starting in the day shift yesterday and Geary noticed that it was because the trigger condition required the BBC-W to be both on and off. I have changed this back to what it was (BBC-W off) when it was working and it appears to be working now again. - Matt Lamont |

May 22, 2011

| 10:07

General |

Run 12142028 - AuAu200_production_2011: 10^6 triggers.

Back to the future(_guardian). Zhangbu has made a change to this trigger, adding extra protection, and it seemed to work. It never got 100% dead and aborts continued throughout the run. Zhangbu will make an entry to the shiftlog detailing what he did. One issue remains with the trigger that if you ask for 10^6 triggers, say, then the run stops 50 triggers short but never actually stops itself, a manual intervention has to be made. Jeff thinks that this is some logic issue and until this is fixed, we just have to keep an eye on it.

- Matt Lamont |

| 10:10

General |

Run 12142029 - Put in Future_guardian = .not.minbias&TOFmult4&ZDC_COIN&ZDC_TAC&TPC_busy the additional .not.minbias makes sure that future_guardian acts on pile-up interaction during TPC_busy and not just any TPC_busy.

- Zhangbu Xu |
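
Read literally, the trigger expression quoted in this entry is a plain AND of five conditions, the first one negated. A minimal sketch of that reading follows; the Python argument names are chosen for the example, and the real trigger is of course implemented in hardware and trigger configuration, not in code like this.

```python
def future_guardian(minbias: bool, tof_mult4: bool, zdc_coin: bool,
                    zdc_tac: bool, tpc_busy: bool) -> bool:
    """Literal reading of .not.minbias & TOFmult4 & ZDC_COIN & ZDC_TAC & TPC_busy."""
    return (not minbias) and tof_mult4 and zdc_coin and zdc_tac and tpc_busy


# A collision-like signal arriving while the TPC is busy and no minbias
# trigger has fired: the guardian fires.
print(future_guardian(False, True, True, True, True))
# The same signals with minbias set: the added .not.minbias term vetoes it.
print(future_guardian(True, True, True, True, True))
```

The second case is the one the added `.not.minbias` term is meant to exclude.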

| 10:32

General |

Run 12142029 - AuAu200_production_2011. After 1.4x10^6 triggers, future_guardian again went 100% dead. This lasted a lot longer than in the past.

Zhangbu has noticed that every time there is an abort, a message is written to the console. Does this also have something to do with it? We will start a new run with just 10^6 triggers.

- Matt Lamont |

| 10:51

General |

Run 12142030 - AuAu200_production_2011: ~ 600 kHz triggers before the future_guardian went 100% dead so the run was stopped then.

- Matt Lamont |

| 10:53

General |

Run 12142031 - AuAu200_production_2011: future_guardian went 100% dead after ~ 380K triggers.

- Matt Lamont |

Run 12142032 did not include future_guardian.

| 16:26 (16:29) General |

Summary Report - Afternoon Shift

Zhangbu had an idea for fixing the future guardian trigger (implementing the current logic with .not.minbias) and initially it seemed to work but then it didn't work after all so we kept it out. The issue that Tom noted with the high dead times of the trigger were there for us intermittently. Sometimes the rebooting of the trigger on the RTS console worked, other times it didn't. We're not sure of the solution right now. Zhangbu reduced the rate of the minbias protected trigger from 960 to 800 but this doesn't seem to have had much of an impact. - Matt Lamont |

May 23, 2011

| 11:51

General |

Run 12143054 - AuAu200_production_2011: 440K events.

Future_guardian was added in again at the request of Jeff but it didn't work. Run stopped and we will try again.

- Matt Lamont |

| 11:56

General |

Run 12143055 - AuAu200_production_2011: 205K events.

Run stopped due to error in future_guardian and with the TOF (CANBUS error).

- Matt Lamont |

| 12:13

General |

Run 12143056 - AuAu200_production_2011: 800K triggers.

Run stopped as future_guardian didn't work. Will re-start with another test.

- Matt Lamont |

| 12:42

General |

Run 12143058 - AuAu200_production_2011: 10^6 triggers.

Future_guardian in again, but again went 100% dead.

- Matt Lamont |

After that, future_guardian was taken out again until May 24.

May 24, 2011

| 11:13

General |

Run 12144031 - AuAu200_production_2011. 1M triggers with future-guardian included

- Christopher Powell |

| 11:15

General |

Run 12144032 - AuAu200_production_2011 with 80k triggers. Run stopped to include new triggers created by Jeff.

- Christopher Powell |

| 11:56

General |

Run 12144033 - AuAu200_production_2011. 2 M triggers (future-guardian included)

- Christopher Powell |

Runs 12144034, 12144035, and later runs included future_guardian.

| 21:38

General |

Run 12144050 - Good production run without FTPC, 50k events. Stopped to include FTPC when the rate decreased.

The 'DAQ rate' is 350 Hz, this seems too low to me. Is this an effect of the future protection? I think the data are good, so we will continue running like this.

- Oleksandr Grebenyuk |

future_guardian was still included for these runs.

May 25, 2011

| 07:31 (07:29) General |

Summary Report - Morning Shift

We had 3 fills this shift. 2 normal and 1 short. This last short fill is ending early for APEX. FTPC tripped a couple of times. TPX sector 3 had some trouble, but after a reboot and 2 run-restarts was able to correct itself. Due to the future-protection trigger, the number of events actually recorded vs asked for is notably different. This appears to be a function of luminosity. - Dylan |

Run 12145020 was the last run before APEX.

At the end of the bad period there was an APEX day, after which a new trigger(?) file was put in place. Afterwards, the refmult went back to normal.

May 26, 2011

| 16:35 (16:29) General |

Summary Report - Afternoon Shift

Production tier1 file was updated to TRG_110526. - Christopher Powell |

It seems these changes in the trigger happened at the same time as the changes in the primary-track quantities. It is not clear to me why a trigger change would have any effect on TPC primary tracks.

