FST Operation Log May 10 - July 4 2023

July 3 Mon

Run23 Bi-weekly LAT Monitoring 1

1. List 2 generated based on 24183011-24184013

List of APVs that are "problematic" after the adjustment

RDO_ARM_GROUP_APV_TBHigh: avgFracTBHigh-5*errfH > avgFracTBMid+5*errfM; LAT0 -> LAT1

2.Summary:

a) All attempts were successful

b) Three new APVs are in List 2; will be carefully checked in the next Bi-weekly LAT monitoring

c) No need to revert

=============================================================================================

July 2 Sun

A standard procedure with quantification of monitoring the time bin plots and latency settings is established.

This is the first time to do this.

Run23 Bi-weekly LAT Monitoring 1

1. List 1 generated based on 24178044-24181023 (pre-adjustment)

List of APVs to change (List 1)

=============================================================================================

June 24 Sat

We had enough data with 9 time bin for diagnostic purposes and switched back to 3 time bin mode.

1. Updated the firmware

2. The LAT setting is changed in order to move the pulse maxima from bin 4 (middle bin when under 9 time bin settings) to bin 1 (middle bin when under 3 time bin settings)

3. Alter the dead time (busy registry) from 720 back to 300

4. Take a pedestal run for calibration

5. Take a PedAsPhys to check the noise

Some offline study was done during these days for 9 time bin. The plots can be found here.

The conclusions we are able to draw for now:

1. In terms, the FST timing is not drifting rapidly. There are sudden drifts here and there due to update of pedestals, new fill etc, but tends to go back.

2. The 3 time bin that are included under the 3 time bin settings has a fraction (among the entire signal) of over 95% for all APVs all the time, with one exception of APVs corresponds to a sector. These 4 APVs have fractions of over 90%, which is also acceptable.

=============================================================================================

June 22 Thur

We missed the step of changing the dead time (busy registry) (from 300->720). Tonko did this in the early morning. After that the FST was able to run in 9 time bin mode.

=============================================================================================

June 21 Wed

For diagnostic purposes, the FST is decided to run in 9 time bin mode for some time.

1. Gerard updated the firmware

2. The LAT setting is changed in order to move the pulse maxima from bin 1 (middle bin when under 3 time bin settings) to bin 4 (middle bin when under 9 time bin settings)

3. Take a pedestal run for calibration

4. Take a PedAsPhys to check the noise

The maxTB plots on the Online Monitor are also modified accordingly (numTimeBin changed from 3 to 9)

=============================================================================================

June 15 Thur

Latency on the following APVs are adjusted:

(RDO_ARM_PORT/GROUP_APV)

2_1_0_x: 89 -> 90 (x=4,5)

2_1_1_5: 90 -> 89

5_1_0_x: 88 -> 89 (x=2,3)

=============================================================================================

June 11 Sun

The FST desktop in the control room was rebooted under the hope that it may fix the display issue. Did not succeed.

=============================================================================================

June 8 Thur

1. Print the new shift-crew manual.

2. The entire STAR was brought back; procedure started at noon

3. FST encountered the following issue:

To double check, I went in and checked the the MPOD crates. I switched off and back on to cycle the procedure. Then the "emergency shutdown" procedure (the "shutdown" on the FST control GUI) was went over. However, later the FST refuse to ramp up. It turns out that

1) The MPOD crates seemed to lack power supply even when the hard switch was "ON" (lights were all off)\

Solution: cycle the hard switch again

2) On the FST monitoring desktop, the GUI is not displaying the "detector status" correctly when ramping up. It should be "FST IS ON (NOT READY)", but instead showing "FST IS OFF". It returns back to normal when the ramping is completed.

On the other hand, experts' remote access to the GUI was normal. We left a note to the shift saying that ignore this inconsistency for now.

=============================================================================================

June 7 Wed

1. In the morning, the MPOD crates are turned off to secure the work on TPC chill water. It was later turned back on in the afternoon.

2. Due to the severe air pollution, the entire STAR was shut down temporarily until further notice. Only the gas system is kept on.

FST MPOD crates hard switches were left on when the power supply was cut off

FST cooling system was left on and the power supply was not cut.

3. Update on the shift-crew manual (change of wording on the shift-crew GUI, contact list, and date; add the "Power Supply Off" chapter). The expert will print it out next time he goes to the control room.

=============================================================================================

May 30 Tue

Maintenance day today. Had a chance to go in.

1. Refilled the coolant and switched the pump 1->2.

2. Current estimation of leak rate is ~0.5%/day (based on last 3 valid data points)

=============================================================================================

May 29 Mon

More APVs latency adjusted:

Form: (RDO_ARM_PORT/GROUP_APV)

1_1_1_x: 88 -> 89 (x = 4, 6, 7)

2_1_0_5: 90 -> 89

2_1_1_x: 89 -> 90 (x = 5, 6, 7)

3_1_1_7: 88 -> 89

5_1_0_x: 89 -> 88 (x = 2, 3)

Here is a summary of all adjustment on latency on May 27 and 29.

=============================================================================================

May 28 Sun

1. Double checked the latency by APVs. New APVs need adjustment found, which is most likely caused by the "FST not off during beam dump" incident yesterday.

2. Changed the wording of the Shift Crew GUI:

"FST IS READY" -> "FST IS READY FOR DATA TAKING"

"FST IS ON" -> "FST IS ON (NOT READY)"

=============================================================================================

May 27 Sat

1. Checked and adjusted the latency settings on the APVs:

Form: (RDO_ARM_PORT/GROUP_APV)

2. A DO forgot to turn off the FST before the beam dump; the co-DO checked the status, but mistook "FST IS READY" as "FST is ready for beam dump" (instead of ready of data taking).

This caused one time trips on all HV channels.

Pedestals are checked; no issue found.

=============================================================================================

May 26 Fri

Local test for adding plots to the Shift QA Histograms complete. Pull request sent and implemented online.

288 (Max Time Bin by APVs) + 1 (Time bin fraction vs global apv index)

Hints from Jeff when debugging locally:

1. Every year uses different trigger setups

For Run21,22, use st_fwd_adc*daq; for Run23, use st_forphoto_adc*daq or st_upcjet_adc*daq

2. Most plots require ADC information. Therefore it is more efficient to us st*adc*daq files.

=============================================================================================

May 24 Wed

We have decided that FST is safe to include in the runs.

=============================================================================================

May 22 Mon

A re-commissioning plan is carried out, which includes the following items (in order):

1. Latency setting (Non zero suppressed)

- Intention of adding Max Time Bin (by APVs) plots to the Shift QA Histograms

2. Check online pedestal and CMN substraction (both zero suppressed and non zero suppressed)

3. Take data for alignment (this can be done any time, needs low luminosity, B=0,0.5T)

=============================================================================================

May 21 Sun

Re-commissioning for the FST is brought up during the operation daily meeting.

=============================================================================================

May 20 Sat

We checked the HV current and pedestal/noise. It is consistent with the "Winter status". We'll keep an eye on this.

=============================================================================================

May 19 Fri

1. Miscommunication happened between the MCR and the STAR control room around 22:30, leading to unexpected beam abort. FST and other detectors were on. Triggered immediate trip. Cleared by power cycling (done by DO). No emergency call to the expert. ShiftLog

2. sTGC started to trip with normal run condition; sTGC expert found damage to their detector. No such trip observed in FST. We'll keep an eye on this.

=============================================================================================

May 16 Tue

1. After the magnet is brought down

1.1 Ramp up the voltage

1.2 Take 2 short pedestal runs

1.2 Turn off the FST

No trip is found. The pedestal and noise is normal.

2. Waiting for the access to the cooling manifold:

The techs removed the pole-tip, mounted the scaffolding.

They have tested the leak and found that one of the connectors for the TPC cooling loop cracked, which was the leak.

The plastic connector was replaced by a cooper one.

The new connector was proven to be good by the pressure test.

The TPC cooling is turned on and therefore the FST PPB had proper cooling.

We are granted to get on the scaffolding

3. Alternately turn off/on valves for the NOVEC

3.1 turned off the valves (returns+supplies) on the "left half", slowly. Then hold it for a while. Check the return/supply flow readout.

3.2 turned on the valves (returns+supplies) on the "left half", slowly; turned off the valves (returns+supplies) on the "right half", slowly; then hold it for a while. Check the return/supply readout.

3.3 turned on the valves (returns+supplies). Check the return/supply flow readout.

4. "Measure the temperature"

4.1 Turn on the FST and ramp up the HV

4.2 Take a 1.5 min (short) pedestal run

4.3 Take a 15 min (long) pedestal run

4.4 Check the temperature

The yellow alarm goes away. The pedestal and the noise is normal.

5. Verify that releasing the coolant tank pressure won't cause the coolant not flowing in the tubes

Summarize: all FST issue is fixed. Pole-tip is to be inserted back tomorrow (May 17)

=============================================================================================

May 13 Sat

1.HV is ramp-uped and the trips go away by themselves. Strongly suspecting this is cause by the leak.

Currents are normal in those previously tripped channels, as well as the pedestals and noises.

Note: the remaining issue is yellow temperature alarm on Disk 2 RDO4 Module 8,10,12

=============================================================================================

May 11 Thur

1. Xu has verified the mapping of the temperature entries. This can also be found on daqman: /RTS/conf/fst/fst_rdo_conf.txt

The overheating readout all end with _3, which corresponds to the PPBs. Two possibilities are:

1) STAR Hall has higher temperature

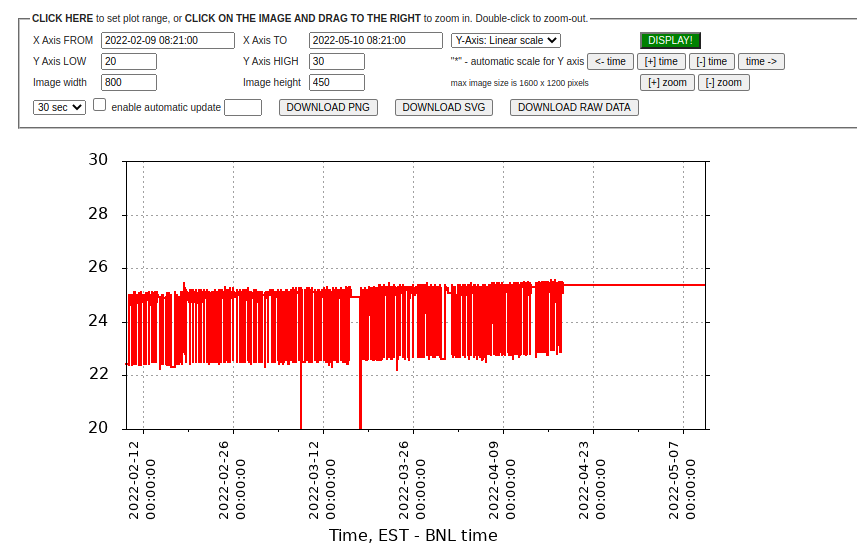

Last year vs now

2) The cooling loop for the PPBs are NOT connected (uses TPC chilling water)

Bill called Bob, and he learnt from him that these should be connected during the run, and they were disconnected somehow by someone at some point.

2. The aforementioned cooling tubes were connected by Bill and Prashanth. This fixed the yellow alarms on the temperature of the PPBs.

3. New trips are found on MPOD 3 u3,4,5, later that night also MPOD 3 u2.

4. Some inner sectors are found to be overheated; yellow alarm is triggered

The corresponding outer sectors also have higher than normal temperature, but not high enough to trigger the alarm

5. We restarted the pump and released the the pressure in the NOVEC tank multiple times. Alarms on Disk 1 went away.

6. We switched the pump in an attempt to have larger flows. It did not help on the issue for RDO4

7. Tried manually ramp up the voltage in the problematic channels (MPOD3 u2,3,4,5). u4 and u5 can be ramp up slowly to the target voltage. No luck in u 2,3

8. Checked the pedestals and noise in the modules with high temperatures; they are consistent w

=============================================================================================

May 10 Wed

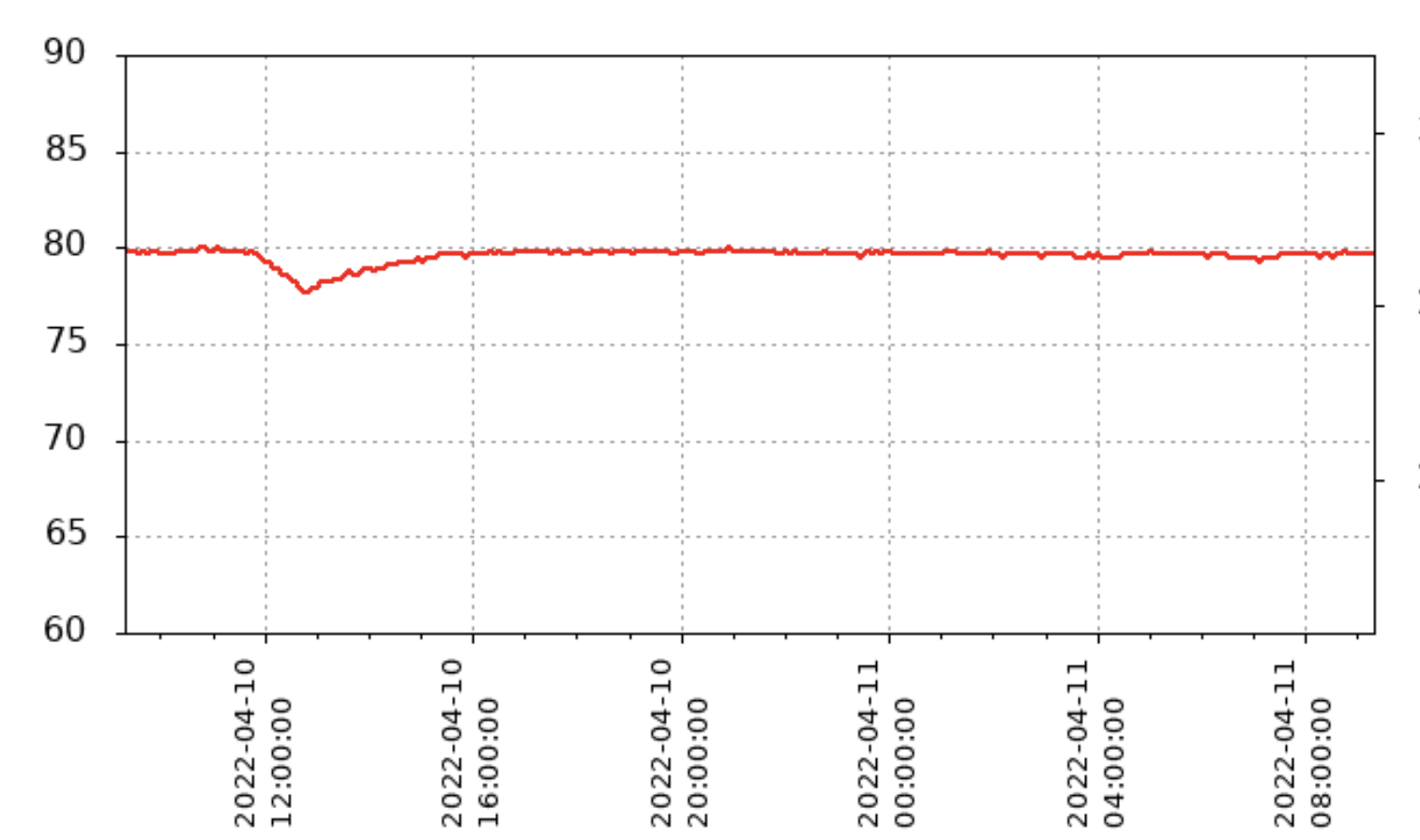

Some of the temperature readouts are found to be high compared to last year run time, and is triggering Yellow Alarms. E.g, on the Online Status Viewer:

The Online Status Viewer is monitoring the temperature of the FST modules (inner and outer sectors separately), temperature of the T-Board, and the PPBs. We are waiting to verify the mapping (for the last number in X_X_X_X) with the experts.

- zyzhang's blog

- Login or register to post comments