Run 5

2005 Beamline Calibration

Information about how this calibration is done, and links to other years, can be found at BeamLine Constraint.

With the large number of special triggers in the 2005 pp data, I need to be judicious about running the calibration pass. I want to be sure that the triggers I use do not bias the position of the reconstructed vertex in the transverse plane for a given z. As the DAQ files come and go quickly from disk, I also want to be able to do this study from the event.root and/or MuDst.root files on disk.

Using event.root and MuDst.root files

It would be nice to use the already-produced event.root files from FastOffline to do the beamline calibration. The first question to ask is, was the chain used for FastOffline sufficient for doing this?

FastOffline has been running with the following chain:

P2005 ppOpt svt_daq svtD EST pmdRaw Xi2 V02 Kink2 CMuDst OShortR

This causes StMagUtilities to run with the following options:

StMagUtilities::ReadField Version 2D Mag Field Distortions + Padrow 13 + Twist + Clock + IFCShift + ShortedRing

I believe these are indeed the distortion corrections we want turned on (no SpaceCharge or GridLeak, whose distortions should smear, but not bias, the vertex positions).

Our old BFC chain for doing this calibration was:

ppOpt,ry2001,in,tpc_daq,tpc,global,-Tree,Physics,-PreVtx,FindVtxSeed,NoEvent,Corr2

The old chain uses Corr2, but we're now using Corr3. The only difference between these is OBmap2D now, versus OBmap then, which is a good thing. Also, we now have the SVT turned on, but it isn't used in the tracks used to find the primary vertex (global vs. estGlobal), so this is no different from TPC-only. I have not yet come to an understanding of whether PreVtx (which is ON for the FastOffline chain) would do anything negative. At this point, it seems acceptable to me.

In order to use the FastOffline files, I modified StVertexSeedMaker to be usable for StEvent (event.root files) in the form of StEvtVtxSeedMaker, and for MuDst (MuDst.root files) in the form of StMuDstVtxSeedMaker. The latter may be an issue in that it introduces a dependency upon loading the MuDst libs when loading the StPass0CalibMaker lib (the workaround is to add the "MuDST" option to the chain).

Testing trigger biases

Using the FastOffline files, I wanted to see which triggers would be usable. The obvious choice is the ppMinBias trigger, but these are not heavily recorded: there may be perhaps 1000 per run, so fills with just a few runs may have insufficient statistics for a good measurement of the beamline. Essentially, ALL ppMinBias triggers would need to be examined, which means ALL pp files with these triggers! While performing the pass on the data files does not take long, getting every file off HPSS and providing disk space would be an issue.

Discussions with pp analysis folks indicated that several other triggers may lead to preferential z locations of collision vertices (towards one end or the other), but no transverse biases. This is acceptable, but I wanted to be sure it was true. I spent some time looking at the FastOffline files to compare the different triggers, but kept running into the problem of insufficient statistics from the ppMinBias triggers (the prime reason for using the special triggers!). Here's an example of a comparison between the triggers:

X vs. Z, and Y vs. Z:

(black points are profiles of vertices from ppMinBias triggers 96011, red are special triggers 962xx, where xx=[11,21,33,51,61,72,82])
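The kind of comparison described above can be sketched in a few lines of Python. This is only an illustration, not the code used for the plots: the function names, the z range of ±100 cm, and the number of bins are all assumptions. It builds a profile (mean x and its error in bins of z) for each trigger class and reports the largest difference between the two profiles in units of the combined error.

```python
from collections import defaultdict
from math import isfinite, sqrt


def profile(vertices, z_min=-100.0, z_max=100.0, n_bins=10):
    """Profile of x vs. z: {bin index: (mean x, error on mean, count)}.

    `vertices` is an iterable of (z, x) pairs; range and binning are
    hypothetical defaults, not values taken from the calibration code.
    """
    width = (z_max - z_min) / n_bins
    sums = defaultdict(lambda: [0, 0.0, 0.0])  # count, sum(x), sum(x^2)
    for z, x in vertices:
        if not (z_min <= z < z_max):
            continue
        acc = sums[int((z - z_min) / width)]
        acc[0] += 1
        acc[1] += x
        acc[2] += x * x
    result = {}
    for b, (n, sx, sxx) in sums.items():
        mean = sx / n
        var = max(sxx / n - mean * mean, 0.0)
        # A single entry carries no information about the spread.
        err = sqrt(var / n) if n > 1 else float("inf")
        result[b] = (mean, err, n)
    return result


def max_pull(prof_a, prof_b):
    """Largest |mean_a - mean_b| / combined error over bins filled in both."""
    pulls = []
    for b in prof_a.keys() & prof_b.keys():
        mean_a, err_a, _ = prof_a[b]
        mean_b, err_b, _ = prof_b[b]
        err = sqrt(err_a ** 2 + err_b ** 2)
        if isfinite(err) and err > 0:
            pulls.append(abs(mean_a - mean_b) / err)
    return max(pulls) if pulls else None
```

A transverse trigger bias would show up as pulls well above what statistical scatter allows; with few ppMinBias vertices the errors are large and the test has little power, which is the statistics problem noted above.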

First Calibrations pass

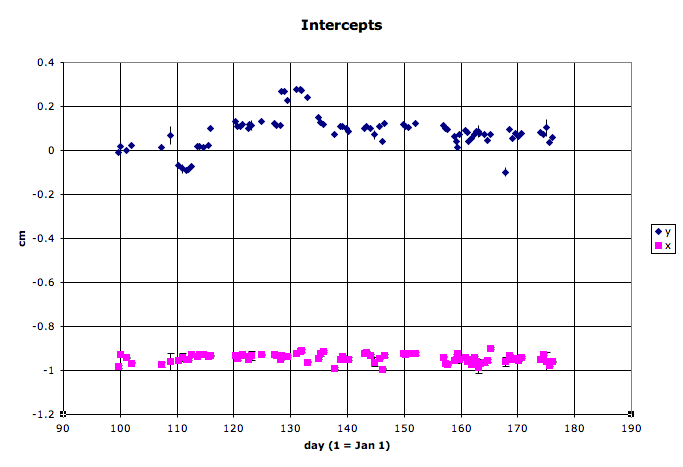

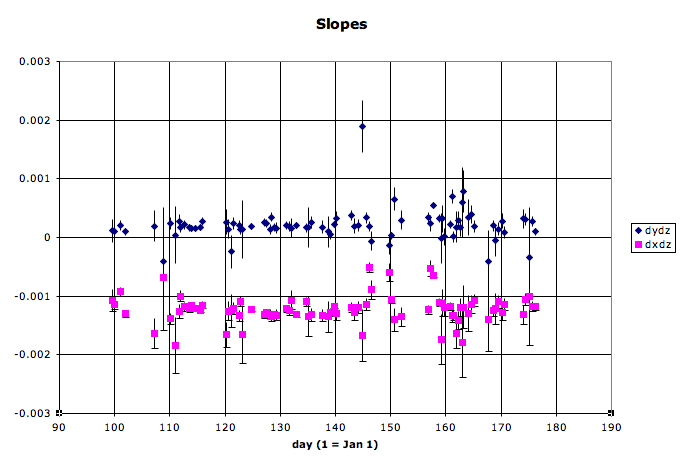

Using minbias+special triggers from about 1000 MuDst files available on disk, here's what we get:

This can be compared to similar plots for Run 4 (pp), Run 3 (200 GeV dAu and pp), and Run 2 (200 GeV pp).

The trigger IDs I used were:

96201 : bemc-ht1-mb

96211 : bemc-ht2-mb

96221 : bemc-jp1-mb

96233 : bemc-jp2-mb

96251 : eemc-ht1-mb

96261 : eemc-ht2-mb

96272 : eemc-jp1-mb-a

96282 : eemc-jp2-mb-a

96011 : ppMinBias

20 : Jpsi

22 : upsilon
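For bookkeeping, the trigger list above can be held in a small lookup table. The following is a minimal Python sketch; the names `CALIB_TRIGGERS` and `fired_calibration_trigger` are hypothetical and do not correspond to anything in the STAR libraries.

```python
# Trigger IDs accepted for the beamline calibration, copied from the list above.
CALIB_TRIGGERS = {
    96201: "bemc-ht1-mb",
    96211: "bemc-ht2-mb",
    96221: "bemc-jp1-mb",
    96233: "bemc-jp2-mb",
    96251: "eemc-ht1-mb",
    96261: "eemc-ht2-mb",
    96272: "eemc-jp1-mb-a",
    96282: "eemc-jp2-mb-a",
    96011: "ppMinBias",
    20: "Jpsi",
    22: "upsilon",
}


def fired_calibration_trigger(event_trigger_ids):
    """True if any of the event's trigger IDs is in the accepted set."""
    return any(tid in CALIB_TRIGGERS for tid in event_trigger_ids)
```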

Calibrating the current pp data for 2005 will mean processing about 5000 files, daq or MuDst.

Full Calibrations pass

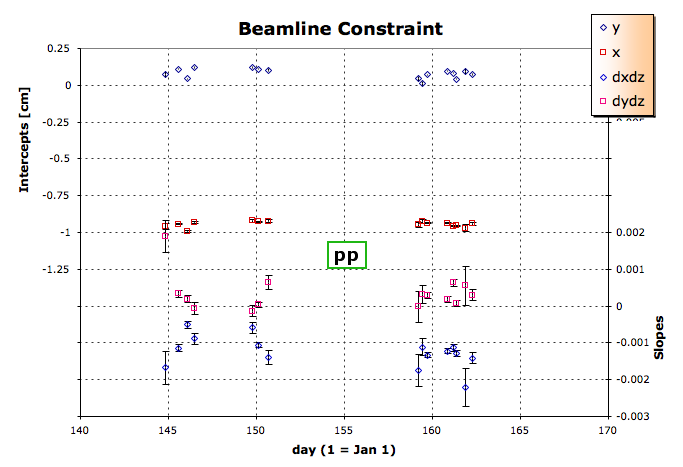

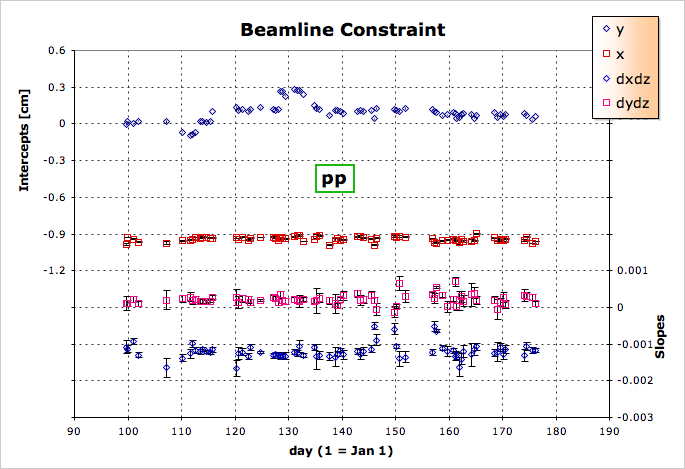

To make sure we had all the final calibrations in place (TPC drift velocity in particular), we ran the calibration pass over the DAQ files. This produced the following intercepts and slopes from the full pass and aggregation:

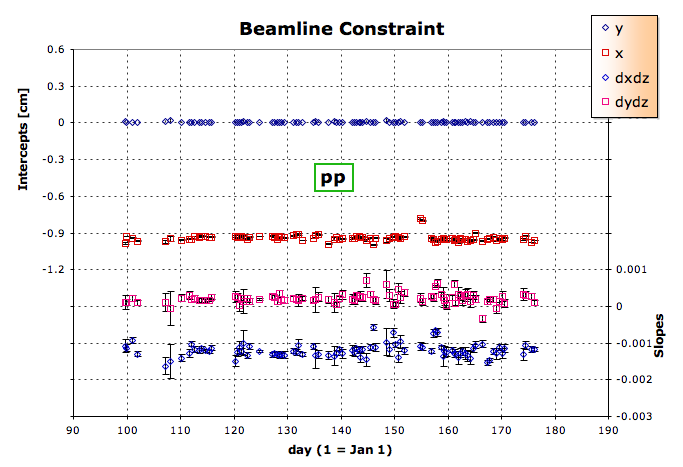
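To make "intercepts and slopes" concrete: the beamline is described by a straight line in each transverse coordinate, x(z) = x0 + dxdz·z and likewise for y. The sketch below is a generic unweighted least-squares line fit in Python, with parameter errors estimated from the scatter of the residuals. It is an illustration under those assumptions and is not claimed to be the algorithm used inside StVertexSeedMaker; the function name `fit_line` is hypothetical.

```python
from math import sqrt


def fit_line(points):
    """Least-squares fit of x = x0 + dxdz * z to (z, x) pairs.

    Returns (x0, err_x0, dxdz, err_dxdz). Errors come from the residual
    scatter, so at least three points with distinct z are needed.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")
    sz = sum(z for z, _ in points)
    sx = sum(x for _, x in points)
    szz = sum(z * z for z, _ in points)
    szx = sum(z * x for z, x in points)
    det = n * szz - sz * sz
    if det == 0:
        raise ValueError("all points share the same z")
    dxdz = (n * szx - sz * sx) / det
    x0 = (szz * sx - sz * szx) / det
    # Residual variance with n - 2 degrees of freedom.
    s2 = sum((x - (x0 + dxdz * z)) ** 2 for z, x in points) / (n - 2)
    err_x0 = sqrt(s2 * szz / det)
    err_dxdz = sqrt(s2 * n / det)
    return x0, err_x0, dxdz, err_dxdz
```

The same function applied to (z, y) pairs gives y0 and dydz.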

I removed a few outliers and came up with this set, which went into the DB:

Jerome reminds me that we are still missing the high-energy pp run, sqrt(s)=410 GeV. Lidia reminds me we are missing the ppTrans data. So, I did those two and added a few statistics to fills we had already done. The result is this:

mktxt2005.txt

#!/bin/csh

set sdir = ./StarDb/Calibrations/rhic

set tdir = ./txt

set files = `ls -1 $sdir/*.C`

set vs = (x0 err_x0 y0 err_y0 dxdz err_dxdz dydz err_dydz stats)

set len = 0

set ofile = ""

set dummy = "aa bb"

# Necessary instantiation to make each element of oline a string with spaces

set oline = ( \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
"aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" "aa bb" \
)

set firstTime = 1

set newString = ""

foreach vv ($vs)

set ofile = $tdir/${vv}.txt

touch $ofile

rm $ofile

touch $ofile

set va = "row.$vv"

set fnum = 0

foreach file ($files)

@ fnum = $fnum + 1

set vb = `cat $file | fgrep $va`

set vc = $vb[3]

@ len = `echo $vc | wc -c` - 1

set vd = `echo $vc | colrm $len`

echo $vd >> $ofile

if ($firstTime) then

set newString = "$vd"

else

set newString = "${oline[${fnum}]} $vd"

endif

set oline[$fnum] = "$newString"

end

set firstTime = 0

end

set ff = (date.time days)

set vf

foreach fff ($ff)

set vg = $tdir/${fff}.txt

set vf = ($vf $vg)

touch $vg

rm $vg

touch $vg

end

set ofile = ${tdir}/full.txt

touch $ofile

rm $ofile

touch $ofile

set days = 0

set frac = 0

set fnum = 0

foreach file ($files)

@ len = `echo $file | wc -c` - 18

set dt = `echo $file | colrm 1 $len | colrm 16`

set year = `echo $file | colrm 1 $len | colrm 5`

@ len = $len + 4

set month = `echo $file | colrm 1 $len | colrm 3`

@ len = $len + 2

set day = `echo $file | colrm 1 $len | colrm 3`

@ len = $len + 3

set hour = `echo $file | colrm 1 $len | colrm 3`

@ len = $len + 2

set min = `echo $file | colrm 1 $len | colrm 3`

@ len = $len + 2

set sec = `echo $file | colrm 1 $len | colrm 3`

set modi = 0

# Feb has 28 = 31-3 days (2005 is not a leap year)

if ($month > 2) @ modi += 3

# Apr,Jun,Sep,Nov have 30 = 31-1 days

if ($month > 4) @ modi += 1

if ($month > 6) @ modi += 1

if ($month > 9) @ modi += 1

if ($month > 11) @ modi += 1

echo $modi

# convert to seconds since 01-Jan-2005 EST (5 hours behind GMT)

@ year = $year - 2005

@ month = ($month + ($year * 12)) - 1

@ day = $day + ($month * 31) - $modi

@ hour = ($hour + ($day * 24)) - 5

@ min = $min + ($hour * 60)

@ sec = $sec + ($min * 60)

# convert to days since 01-Jan-2005 EST (5 hours behind GMT)

@ days = $sec / 86400

@ sec = $sec - ($days * 86400)

@ frac = ($sec * 10000) / 864

set fra = "$frac"

set len = `echo $fra | wc -c`

@ len = 7 - $len

while ($len > 0)

set fra = "0${fra}"

@ len = $len - 1

end

echo $dt >> $vf[1]

echo ${days}.$fra >> $vf[2]

@ fnum = $fnum + 1

echo "${days}.$fra ${oline[${fnum}]} $dt" >> $ofile

end
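As a cross-check of the hand-rolled date arithmetic in the script above, the same two steps (pulling a `row.<name>` value out of a table file, and turning the `YYYYMMDD.HHMMSS` stamp at the end of the file name into fractional days) can be sketched in Python with the standard library handling the calendar. The function names and the example file name are hypothetical. Note also that the csh script counts the day of the month from 1, so its values are offset from the elapsed time computed here.

```python
import re
from datetime import datetime, timedelta

# File names are assumed to end in YYYYMMDD.HHMMSS.C, as the script expects.
STAMP = re.compile(r"(\d{8}\.\d{6})\.C$")
EPOCH = datetime(2005, 1, 1)        # 00:00 on 1 January 2005, taken as EST
EST_OFFSET = timedelta(hours=5)     # EST is 5 hours behind GMT


def days_since_epoch(filename):
    """Elapsed days since 00:00 EST on 1 Jan 2005 for a GMT-stamped file."""
    match = STAMP.search(filename)
    if match is None:
        raise ValueError(f"no timestamp in {filename!r}")
    stamp_gmt = datetime.strptime(match.group(1), "%Y%m%d.%H%M%S")
    return (stamp_gmt - EST_OFFSET - EPOCH) / timedelta(days=1)


def read_row_value(text, name):
    """Return the value assigned on a line like 'row.x0 = 0.25;', or None.

    Unlike the fgrep in the script, this requires 'row.<name>' to be the
    first word on the line.
    """
    for line in text.splitlines():
        words = line.split()
        if len(words) >= 3 and words[0] == f"row.{name}":
            return float(words[2].rstrip(";"))
    return None
```

For example, `days_since_epoch("vertexSeed.20050412.170000.C")` corresponds to noon EST on 12 April 2005.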

queryNevtsPerRun.txt

-- N evts per run, where N is the divisor (10000.0) near the end of the last line

select floor(bi.blueFillNumber) as fill,

sum(ts.numberOfEvents/ds.numberOfFiles) as gdEvtPerFile,

ds.numberOfFiles,

sum(dt.numberOfEvents*ts.numberOfEvents/ds.numberOfEvents) as gdInFile,

dt.file, rd.glbSetupName

--

--

from daqFileTag as dt

left join l0TriggerSet as ts on ts.runNumber=dt.run

left join runDescriptor as rd on rd.runNumber=dt.run

left join daqSummary as ds on ds.runNumber=dt.run

left join beamInfo as bi on bi.runNumber=dt.run

left join detectorSet as de on de.runNumber=dt.run

left join runStatus as rs on rs.runNumber=dt.run

left join magField as mf on mf.runNumber=dt.run

--

--

-- where (bi.blueFillNumber=3458 or bi.blueFillNumber=3459)

where rd.runNumber>6083000

and ds.numberOfFiles>1

and de.detectorID=0

and mf.scaleFactor!=0

-- USING pp-minbias triggers to identify nevents/file

and (ts.name='ppMinBias' or

ts.name='bemc-ht1-mb' or ts.name='bemc-ht2-mb' or

ts.name='bemc-jp1-mb' or ts.name='bemc-jp2-mb' or

ts.name='eemc-ht1-mb' or ts.name='eemc-ht2-mb' or

ts.name='eemc-jp1-mb-a' or ts.name='eemc-jp2-mb-a' or

ts.name='upsilon' or ts.name='Jpsi')

-- and rs.shiftLeaderStatus=0

and rs.shiftLeaderStatus<=0

and bi.entryTag=0

-- USE DAQ100 filestreams

and ((dt.fileStream between 101 and 104) or (dt.fileStream between 201 and 204))

-- and dt.file like 'st_physics_6%'

-- USE these trigger setups

and (rd.glbSetupName='ppProduction' or rd.glbSetupName='ppProductionMinBias')

--

--

group by dt.file

having (@t4:=sum(dt.numberOfEvents*ts.numberOfEvents/ds.numberOfEvents))>60

and (@t3:=mod(avg(dt.filesequence+(10*dt.fileStream)),(@t2:=floor(sum(ts.numberOfEvents)/10000.0)+1)))=0

;
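The `having` clause of the query above is compact, so here is my reading of it as a minimal Python sketch. The function and parameter names are hypothetical, and the interpretation (in particular that `sum(ts.numberOfEvents)` acts as the total number of selected-trigger events in the run) is an assumption based on the query text alone, not on the database schema.

```python
from math import floor


def file_stride(total_trigger_events, target_events=10000.0):
    """Keep every k-th file, with k = floor(total / target) + 1."""
    return floor(total_trigger_events / target_events) + 1


def keep_file(file_sequence, file_stream, good_events_in_file,
              total_trigger_events, min_good_events=60,
              target_events=10000.0):
    """Mimic the two `having` conditions for a single DAQ file.

    good_events_in_file stands in for
    sum(dt.numberOfEvents * ts.numberOfEvents / ds.numberOfEvents),
    i.e. the estimated number of selected-trigger events in the file.
    """
    if good_events_in_file <= min_good_events:
        return False
    stride = file_stride(total_trigger_events, target_events)
    return (file_sequence + 10 * file_stream) % stride == 0
```

With a run total of 25000 selected-trigger events the stride is 3, so roughly one file in three is kept and the yield stays below about 10000 events per run; runs with fewer than 10000 such events keep every file that passes the 60-event cut.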