Simulation

Welcome to the Simulation Pages!

Please note that most of the material posted before October 2006 is located at the older web site, which we'll keep for reference for the time being. See the sections below for the most recent additions and information.

For making a new simulation production request, please consult the STAR Simulations Requests interface.

Adding a New Detector to STAR

The STAR Geometry Model

Geometry Definition

Users wishing to develop and integrate new detector models into the STAR framework will be interested in the following links:

- The STAR AgML reference guide.

- The STAR AgML example shapes.

- List of Default AgML Materials

- The AgML Tutorials are a good place to get started with AgML development.

- An annotated example, the AgML Example: The Beam Beam Counters, is also available.

- Another example, the FGT geometry

Tracking Interface (Stv)

Exporting detector hits

- Implement a hit class based on StEvent/StHit

- Implement a hit collection

- Implement an iterator over your hit collection based on StEventUtilities/StHitIter

- Add your hit iterator to StEventUtilities/StEventHitIter
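The four steps above can be pictured with a small standalone C++ sketch. It deliberately does not use the real StHit or StHitIter classes, whose interfaces are not described on this page: MyHit, MyHitCollection and MyHitIter are hypothetical names, and grouping hits by detector module is an assumed layout. It only illustrates the idea of an iterator that hands out one hit at a time from a nested collection.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical hit record; a real STAR hit class would derive from StHit.
struct MyHit {
    float x, y, z;  // hit position
    int idTruth;    // simulated particle principally responsible for the hit
};

// Hypothetical collection: hits grouped by detector module (assumed layout).
struct MyHitCollection {
    std::vector<std::vector<MyHit>> modules;
};

// Walks every hit in every module, skipping empty modules. This mimics the
// idea of a hit iterator only; it is not the StHitIter interface.
class MyHitIter {
public:
    explicit MyHitIter(const MyHitCollection& coll) : coll_(coll) { skipEmptyModules(); }

    bool done() const { return module_ >= coll_.modules.size(); }

    const MyHit& get() const {
        assert(!done());
        return coll_.modules[module_][hit_];
    }

    void next() {
        assert(!done());
        if (++hit_ >= coll_.modules[module_].size()) {
            ++module_;
            hit_ = 0;
            skipEmptyModules();
        }
    }

private:
    void skipEmptyModules() {
        while (module_ < coll_.modules.size() && coll_.modules[module_].empty()) ++module_;
    }

    const MyHitCollection& coll_;
    std::size_t module_ = 0;  // current module index
    std::size_t hit_ = 0;     // current hit index within the module
};
```

A loop of the form `for (MyHitIter it(coll); !it.done(); it.next())` then visits every hit exactly once, with empty modules skipped.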

Implementing a custom seed finder

ID Truth

ID truth is an ID which enables us to determine which simulated particle was principally responsible for the creation of a hit in a detector, and eventually for the physics objects (clusters, points, tracks) which are formed from those hits. The StHit class has a member function which takes two arguments:

- idTru -- the primary key of the simulated particle, i.e. "track_p" in the g2t hit structure

- qaTru -- the quality of the truth value, i.e. a measure of how much of the hit, cluster, point or track comes from its dominant contributor.

When hits are built up into clusters, the clustering algorithm should set the idTruth value for the cluster based on the dominant contributor among the hits which make up the cluster.

When clusters are associated into space points, the point-finding algorithm should set the idTruth value for the point. If two clusters with two different idTruth values are combined, you should set idTruth = 0.
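The clustering and point-finding rules above can be sketched in a few lines of C++. This is a minimal standalone illustration, not STAR code: the Contribution and Truth types are hypothetical, choosing the dominant contributor by summed energy deposit is an assumption (counting hits per particle would be another reasonable reading), and both storing qaTruth as a percentage and taking the lower of the two qualities when clusters agree are likewise assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <vector>

// Hypothetical: one simulated particle's contribution to a hit or cluster.
struct Contribution {
    int idTruth;  // primary key of the simulated particle ("track_p" in g2t)
    double dE;    // energy it deposited (weighting by dE is an assumption)
};

// Hypothetical truth record: dominant particle and a quality value.
struct Truth {
    int idTruth;  // 0 means "no unique truth"
    int qaTruth;  // assumed here: percent of the total deposit from idTruth
};

// Cluster rule: the dominant contributor among the hits sets the idTruth.
Truth clusterTruth(const std::vector<Contribution>& contributions) {
    std::map<int, double> depositPerParticle;
    double total = 0.0;
    for (const Contribution& c : contributions) {
        assert(c.dE >= 0.0);  // deposits are expected to be non-negative
        depositPerParticle[c.idTruth] += c.dE;
        total += c.dE;
    }
    if (total <= 0.0) return Truth{0, 0};

    int bestId = 0;
    double bestDE = -1.0;
    for (const auto& entry : depositPerParticle) {
        if (entry.second > bestDE) {
            bestId = entry.first;
            bestDE = entry.second;
        }
    }
    return Truth{bestId, static_cast<int>(100.0 * bestDE / total + 0.5)};
}

// Point rule: two clusters with different idTruth values give idTruth = 0.
Truth pointTruth(const Truth& a, const Truth& b) {
    if (a.idTruth != b.idTruth) return Truth{0, 0};
    return Truth{a.idTruth, std::min(a.qaTruth, b.qaTruth)};  // assumed quality rule
}
```

For example, a cluster whose deposit comes 3 parts from particle 7 and 1 part from particle 3 gets idTruth = 7 and, under the percentage assumption, qaTruth = 75.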

Interface to Starsim

The interface between starsim and reconstruction is briefly outlined here


Information about geometries used in production and which geometries to use in simulations may be found in the following links:

- Existing Geometry Tags used in Production

- The STAR Geometry in simulation & reconstruction page contains useful information on the detector configurations associated with each unique geometry tag. Production geometry tags state the year for which the tag is valid and a letter indicating the revision level of the geometry. For example, "y2009c" indicates the third revision of the 2009 configuration of the STAR detector. Users wishing to run starsim in their private areas are encouraged to use the most recent revision for the year whose data they want to compare with.
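The tag naming convention described above can be made concrete with a short sketch. It assumes tags have the form y followed by a four-digit year and optionally one lowercase revision letter, with a = 1, b = 2, c = 3 (so y2009c is revision 3, as stated above); treating a tag without a letter, such as y2012, as revision 0 is an assumption. parseTag and latestRevision are hypothetical helpers, not part of any STAR library.

```cpp
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

struct GeometryTag {
    int year;      // e.g. 2009
    int revision;  // 'a' -> 1, 'b' -> 2, 'c' -> 3; 0 if no letter (assumption)
};

// Parse a production tag of the assumed form y<YYYY>[letter], e.g. "y2009c".
bool parseTag(const std::string& tag, GeometryTag& out) {
    if (tag.size() < 5 || tag[0] != 'y') return false;
    for (int i = 1; i <= 4; ++i) {
        if (!std::isdigit(static_cast<unsigned char>(tag[i]))) return false;
    }
    out.year = std::stoi(tag.substr(1, 4));
    if (tag.size() == 5) {
        out.revision = 0;
        return true;
    }
    if (tag.size() == 6 && tag[5] >= 'a' && tag[5] <= 'z') {
        out.revision = tag[5] - 'a' + 1;
        return true;
    }
    return false;
}

// Return the tag with the highest revision for the given year ("" if none).
std::string latestRevision(const std::vector<std::string>& tags, int year) {
    std::string best;
    int bestRevision = -1;
    for (const std::string& t : tags) {
        GeometryTag g{};
        if (parseTag(t, g) && g.year == year && g.revision > bestRevision) {
            best = t;
            bestRevision = g.revision;
        }
    }
    return best;
}
```

With such a helper, picking the most recent revision for a year is a single call, e.g. latestRevision(tags, 2009).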

Comparisons between the original AgSTAR model and the new AgML model of the detector may be found here:

- The AgML vs AgSTAR Comparison demonstrates the level of equivalence between the AgML and the original AgSTAR geometries.

- A HIT-by-HIT comparison between AgML and AgSTAR geometries for tag y2009c, for simple simulations run in starsim.

- The AgML vs AgSTAR tracking comparison.

AgML Project Overview and Readiness for 2012

HOWTO Use Geometries defined in AgML in STARSIM

AgML geometries are available for use in simulation using the "eval" libraries.

$ starver eval

The geometries themselves are available in a special library, which is set up for backwards compatibility with starsim. To use the geometries, load the "xgeometry.so" library in a starsim session, either interactively or in a macro:

starsim> detp geom y2012

starsim> gexe $STAR_LIB/xgeometry.so

starsim> gclos all

HOWTO Use Geometries defined in AgML in the Big Full Chain

AgML geometries may also be used in reconstruction. To access them, the "agml" flag should be provided in the chain being run:

e.g.

root [0] .L bfc.C

root [1] bfc(nevents,"y2012 agml ...", inputFile);

Geometry in Preparation: y2012

Major changes:

1. Support cone, ftpc, ssd, pmd removed.

2. Inner Detector Support Module (IDSM) added

3. Forward GEM Tracker (FGTD) added

Use of AgML geometries within starsim:

$ starver eval

$ starsim

starsim> detp geom y2012

starsim> gexe $STAR_LIB/xgeometry.so

starsim> gclos all

Use of AgML geometries within the big full chain:

$ root4star

root [0] .L bfc.C

root [1] bfc(0,"y2012 agml ...",inputFile);

|

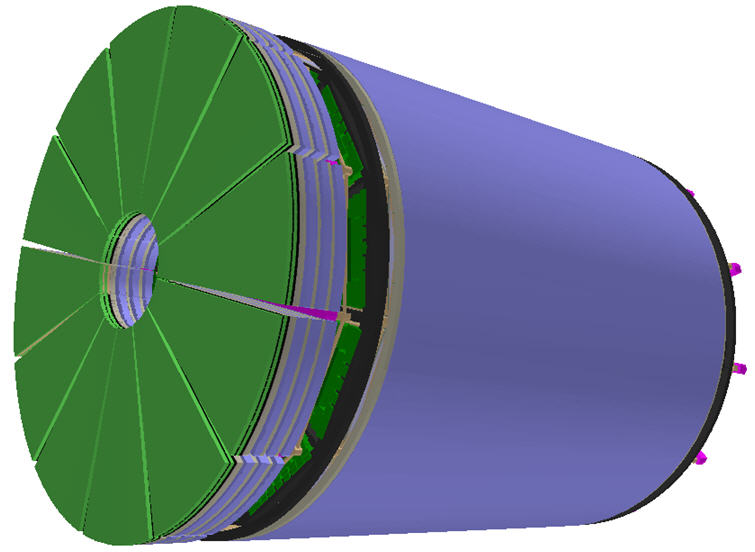

Current (10/24/2011) configuration of the IDSM with FGT inside --  |

|

AgML Example: The Beam Beam Counters

<Document file="StarVMC/Geometry/BbcmGeo/BbcmGeo.xml">
<!--
Every AgML document begins with a Document tag, which takes a single "file"
attribute as its argument.
-->

<Module name="BbcmGeo" comment=" is the Beam Beam Counter Modules GEOmetry " >
<!--
The Module tag declares an AgML module. The name should consist of a four
letter acronym, followed by the word "geo" and possibly a version number.

e.g. BbcmGeo, EcalGeo6, TpceGeo3a, etc...

A mandatory comment attribute provides a short description of which detector
is implemented by the module.
-->

<Created date="15 march 2002" />
<Author name="Yiqun Wang" />
<!-- The Created and Author tags accept a free-form date and author, for the
purposes of documentation. -->

<CDE>AGECOM,GCONST,GCUNIT</CDE>
<!-- The CDE tag provides some backwards compatibility features with starsim.
AGECOM, GCONST and GCUNIT are fine for most modules. -->

<Content>BBCM,BBCA,THXM,SHXT,BPOL,CLAD</Content>
<!-- The Content tag should declare the names of all volumes which are
declared in the detector module, as a comma-separated list. -->

<Structure name="BBCG" >
<var name="version" />
<var name="onoff(3)" />
<var name="zdis(2)" />
</Structure>
<!-- The Structure tag declares an AgML structure. It is similar to a C
struct, but has some important differences which will be illustrated
later. The members of a Structure are declared using the var tag. By
default, the type of a var will be a float.

Arrays are declared by enclosing the dimensions of the array in
parentheses. Only 1D and 2D arrays are supported, e.g.

<var name="x(3)" /> allowed
<var name="y(3,3)" /> allowed
<var name="z(4,4,4)" /> not allowed

Types may be declared explicitly using the type parameter as below.
Valid types are int, float and char. char variables should be limited
to four-character strings for backwards compatibility with starsim.
Arrays of chars are allowed, in which case you may treat the variable
as a string of length Nx4, where N is the dimension of the array.
-->

<Structure name="HEXG">
<var name="type" type="float" />
<var name="irad" type="float" />
<var name="clad" type="float" />
<var name="thick" type="float" />
<var name="zoffset" type="float" />
<var name="xoffset" type="float" />
<var name="yoffset" type="float" />
</Structure>

<varlist type="float">
actr,srad,lrad,ztotal,x0,y0,theta0,phi0,xtrip,ytrip,rtrip,thetrip,rsing,thesing
</varlist>
<!-- The varlist tag allows you to declare a list of variables of a stated type.
The variables will be in scope for all volumes declared in the module.

Variables may be initialized using the syntax
var1/value1/ , var2/value2/, var3, var4/value4/ ...

Arrays of 1 or 2 dimensions may also be declared. The Assign tag may
be used to assign values to the arrays:

<Assign var="ARRAY" value="{1,2,3,4}" />
-->

<varlist type="int">I_trip/0/,J_sing/0/</varlist>

<Fill name="BBCG" comment="BBC geometry">
<var name="Version" value="1.0" comment=" Geometry version " />
<var name="Onoff" value="{3,3,3}" comment=" 0 off, 1 west on, 2 east on, 3 both on: for BBC,Small tiles,Large tiles " />
<var name="zdis" value="{374.24,-374.24}" comment=" z-coord from center in STAR (715/2+6*2.54+1=373.8) " />
</Fill>
<!-- The members of a structure are filled inside of a Fill block. The Fill
tag specifies the name of the structure being filled, and accepts a
mandatory comment for documentation purposes.

The var tag is used to fill the members of the structure. In this
context, it accepts three arguments: the name of the structure member,
the value which should be filled, and a mandatory comment for
documentation purposes.

The names of variables, structures and structure members are
case-insensitive.

1D arrays are filled using a comma-separated list of values contained in
curly brackets, e.g.

value="{1,2,3,4,5}"

2D arrays are filled using a comma- and semicolon-separated list of values,
where each semicolon ends one row. For example,

value="{11,12,13,14,15;
21,22,23,24,25;
31,32,33,34,35;}"

fills an array dimensioned as A(3,5).
-->

<Fill name="HEXG" comment="hexagon tile geometry" >
<var name="Type" value="1" comment="1 for small hex tile, 2 for large tile " />
<var name="irad" value="4.174" comment="inscribing circle radius =9.64/2*sin(60)=4.174 " />
<var name="clad" value="0.1" comment="cladding thickness " />
<var name="thick" value="1.0" comment="thickness of tile " />
<var name="zoffset" value="1.5" comment="z-offset from center of BBCW (1), or BBCE (2) " />
<var name="xoffset" value="0.0" comment="x-offset center from beam for BBCW (1), or BBCE (2) " />
<var name="yoffset" value="0.0" comment="y-offset center from beam for BBCW (1), or BBCE (2) " />
</Fill>

<Fill name="HEXG" comment="hexagon tile geometry" >
<var name="Type" value="2" comment="1 for small hex tile, 2 for large tile " />
<var name="irad" value="16.697" comment="inscribing circle radius (4x that of small one) " />
<var name="clad" value="0.1" comment="cladding of tile " />
<var name="thick" value="1.0" comment="thickness of tile " />
<var name="zoffset" value="-1.5" comment="z-offset from center of BBCW (1), or BBCE (2) " />
<var name="xoffset" value="0.0" comment="x-offset center from beam for BBCW (1), or BBCE (2) " />
<var name="yoffset" value="0.0" comment="y-offset center from beam for BBCW (1), or BBCE (2) " />
</Fill>

<Use struct="BBCG"/>
<!-- An important difference between AgML structures and C structs is that
only one instance of an AgML structure is allowed in a geometry module,
and there is no need for the user to create it; it is automatically
generated. The Fill blocks store multiple versions of this structure
in an external name space. In order to access the different versions
of a structure, the Use tag is invoked.

Use takes one mandatory attribute: the name of the structure to use.
By default, the first set of values declared in a Fill block will
be loaded, as above.

The Use tag may also be used to select the version of the structure
which is loaded.

Example:

<Use struct="hexg" select="type" value="2" />

The above example loads the version of the HEXG structure whose TYPE
member equals 2, i.e. the second Fill block declared above.

NOTE: The behavior of a structure is not well defined before the
Use operator is applied.
-->

<Print level="1" fmt="'BBCMGEO version ', F4.2" >
bbcg_version
</Print>
<!-- The Print statement takes a print "level" and a format descriptor "fmt". The
format descriptor follows the Fortran formatting convention.

(N.B. Print statements have not been implemented in the ROOT export,
as they use Fortran format descriptors.)
-->

<!-- small kludge x10000 because ROOT will cast these to (int) before computing properties -->
<Mixture name="ALKAP" dens="1.432" >
<Component name="C5" a="12" z="6" w="5 *10000" />
<Component name="H4" a="1" z="1" w="4 *10000" />
<Component name="O2" a="16" z="8" w="2 *10000" />
<Component name="Al" a="27" z="13" w="0.2302 *10000" />
</Mixture>
<!-- Mixtures and Materials may be declared within the module. This one is not
a good example, as a workaround is being used to avoid some issues
with ROOT vs GEANT compatibility. -->

<Use struct="HEXG" select="type" value="1 " />
srad = hexg_irad*6.0;
ztotal = hexg_thick+2*abs(hexg_zoffset);

<Use struct="HEXG" select="type" value="2 " />
lrad = hexg_irad*6.0;
ztotal = ztotal+hexg_thick+2*abs(hexg_zoffset); <!-- hexg_zoffset is negative for Large (type=2) -->

<!-- AgML has limited support for expressions, in the sense that anything which
is not an XML tag is passed (with minimal parsing) directly to the C++
or Mortran compiler. A few things are notable in the above lines.

(1) Lines may be optionally terminated by a ";", but...
(2) There is no mechanism to break long lines across multiple lines.
(3) The members of a structure are accessed using an "_", e.g.
hexg_irad above refers to the IRAD member of the HEXG structure
loaded by the Use tag.
(4) Several intrinsic functions are available: abs, cos, sin, etc.
-->

<Create block="BBCM" />
<!-- The Create operator creates the volume specified in the "block"
parameter. When the Create operator is invoked, execution branches
to the block of code for the specified volume; in this case, the
Volume named BBCM below. -->

<If expr="bbcg_OnOff(1)==1|bbcg_OnOff(1)==3">
<Placement block="BBCM" in="CAVE"
x="0"
y="0"
z="bbcg_zdis(1)"/>
<!-- After the volume has been created, it is positioned within another
volume in the STAR detector. The mother volume may be specified
explicitly with the "in" attribute.

The position of the volume is specified using the x, y and z attributes.

An additional attribute, konly, is used to indicate whether or
not the volume is expected to overlap another volume at the same
level in the geometry tree. konly="ONLY" indicates no overlap and
is the default value. konly="MANY" indicates overlap is possible.

For more info on ONLY vs MANY, consult the GEANT 3 manual.
-->
</If>

<If expr="bbcg_OnOff(1)==2|bbcg_OnOff(1)==3" >
<Placement block="BBCM" in="CAVE"
x="0"
y="0"
z="bbcg_zdis(2)">
<Rotation alphay="180" />
</Placement>
<!-- Rotations are specified as additional tags contained within a
Placement block of code. The translation of the volume will
be performed first, followed by any rotations, evaluated in
the order given. -->
</If>

<Print level="1" fmt="'BBCMGEO finished'"></Print>

<!--
Volumes are the basic building blocks in AgML. They represent the
unpositioned elements of a detector setup. They are characterized by
a material, medium, a set of attributes, and a shape.
-->

<!-- === V o l u m e B B C M === -->

<Volume name="BBCM" comment="is one BBC East or West module">
<Material name="Air" />
<Medium name="standard" />
<Attribute for="BBCM" seen="0" colo="7" />
<!-- The material, medium and attributes should be specified first. If
omitted, the volume will inherit the properties of the volume which
created it.

NOTE: Be careful when you reorganize a detector module. If you change
where a volume is created, you potentially change the properties
which that volume inherits.
-->

<Shape type="tube"
rmin="0"
rmax="lrad"
dz="ztotal/2" />
<!-- After specifying the material, medium and/or attributes of a volume,
the shape is specified. The Shape is the only property of a volume
which *must* be declared. Further, it must be declared *after* the
material, medium and attributes.

Shapes may be any one of the 16 basic shapes in GEANT 3. A future
release will add extrusions and composite shapes to AgML.

The actual volume (GEANT 3, GEANT 4, TGeo, etc.) will be created at
this point.
-->

<Use struct="HEXG" select="type" value="1 " />
<If expr="bbcg_OnOff(2)==1|bbcg_OnOff(2)==3" >
<Create block="BBCA" />
<Placement block="BBCA" in="BBCM"
x="hexg_xoffset"
y="hexg_yoffset"
z="hexg_zoffset"/>
</If>

<Use struct="HEXG" select="type" value="2 " />
<If expr="bbcg_OnOff(3)==1|bbcg_OnOff(3)==3" >
<Create block="BBCA"/>
<Placement block="BBCA" in="BBCM"
x="hexg_xoffset"
y="hexg_yoffset"
z="hexg_zoffset"/>
</If>
</Volume>

<!-- === V o l u m e B B C A === -->
<Volume name="BBCA" comment="is one BBC Annulus module" >
<Material name="Air" />
<Medium name="standard" />
<Attribute for="BBCA" seen="0" colo="3" />
<Shape type="tube" dz="hexg_thick/2" rmin="hexg_irad" rmax="hexg_irad*6.0" />

x0=hexg_irad*tan(pi/6.0)
y0=hexg_irad*3.0
rtrip = sqrt(x0*x0+y0*y0)
theta0 = atan(y0/x0)

<Do var="I_trip" from="0" to="5" >
phi0 = I_trip*60
thetrip = theta0+I_trip*pi/3.0
xtrip = rtrip*cos(thetrip)
ytrip = rtrip*sin(thetrip)
<Create block="THXM" />
<Placement in="BBCA" y="ytrip" x="xtrip" z="0" konly="'MANY'" block="THXM" >
<Rotation thetaz="0" thetax="90" phiz="0" phiy="90+phi0" phix="phi0" />
</Placement>
</Do>
</Volume>

<!-- === V o l u m e T H X M === -->
<Volume name="THXM" comment="is one Triple HeXagonal Module" >
<Material name="Air" />
<Medium name="standard" />
<Attribute for="THXM" seen="0" colo="2" />
<Shape type="tube" dz="hexg_thick/2" rmin="0" rmax="hexg_irad*2.0/sin(pi/3.0)" />

<Do var="J_sing" from="0" to="2" >
rsing=hexg_irad/sin(pi/3.0)
thesing=J_sing*pi*2.0/3.0
<Create block="SHXT" />
<Placement y="rsing*sin(thesing)" x="rsing*cos(thesing)" z="0" block="SHXT" in="THXM" >
</Placement>
</Do>
</Volume>

<!-- === V o l u m e S H X T === -->
<Volume name="SHXT" comment="is one Single HeXagonal Tile" >
<Material name="Air" />
<Medium name="standard" />
<Attribute for="SHXT" seen="1" colo="6" />
<Shape type="PGON" phi1="0" rmn="{0,0}" rmx="{hexg_irad,hexg_irad}" nz="2" npdiv="6" dphi="360" zi="{-hexg_thick/2,hexg_thick/2}" />

actr = hexg_irad-hexg_clad

<Create block="CLAD" />
<Placement y="0" x="0" z="0" block="CLAD" in="SHXT" >
</Placement>

<Create block="BPOL" />
<Placement y="0" x="0" z="0" block="BPOL" in="SHXT" >
</Placement>
</Volume>

<!-- === V o l u m e C L A D === -->
<Volume name="CLAD" comment="is one CLADding of BPOL active region" >
<Material name="ALKAP" />
<Attribute for="CLAD" seen="1" colo="3" />
<Shape type="PGON" phi1="0" rmn="{actr,actr}" rmx="{hexg_irad,hexg_irad}" nz="2" npdiv="6" dphi="360" zi="{-hexg_thick/2,hexg_thick/2}" />
</Volume>

<!-- === V o l u m e B P O L === -->
<Volume name="BPOL" comment="is one Bbc POLystyren active scintillator layer" >
<Material name="POLYSTYREN" />
<!-- Reference the predefined material polystyrene -->
<Material name="Cpolystyren" isvol="1" />
<!-- By specifying isvol="1", polystyrene is copied into a new material
named Cpolystyren. A new material is introduced here in order to
force the creation of a new medium, whose parameters we change
below. -->
<Attribute for="BPOL" seen="1" colo="4" />
<Shape type="PGON" phi1="0" rmn="{0,0}" rmx="{actr,actr}" nz="2" npdiv="6" dphi="360" zi="{-hexg_thick/2,hexg_thick/2}" />

<Par name="CUTGAM" value="0.00008" />
<Par name="CUTELE" value="0.001" />
<Par name="BCUTE" value="0.0001" />
<Par name="CUTNEU" value="0.001" />
<Par name="CUTHAD" value="0.001" />
<Par name="CUTMUO" value="0.001" />
<Par name="BIRK1" value="1.000" />
<Par name="BIRK2" value="0.013" />
<Par name="BIRK3" value="9.6E-6" />
<!--
Parameters are the Geant3 parameters which may be set via a call to
GSTPar.
-->

<Instrument block="BPOL">
<Hit meas="tof" nbits="16" opts="C" min="0" max="1.0E-6" />
<Hit meas="birk" nbits="0" opts="C" min="0" max="10" />
</Instrument>
<!-- The instrument block indicates what information should be saved
for this volume, and how the information should be packed. -->
</Volume>
</Module>

</Document>
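The Structure, Fill and Use semantics described in the comments of this example (one live instance per structure, several stored Fill blocks, Use loading either the first block or the block whose selected member matches a value, and case-insensitive member names) can be modelled in plain C++ for intuition. This is a hypothetical sketch, not how AgML is implemented: the AgStructure class is invented, only scalar members are modelled, and picking the first matching block when several match is an assumption.

```cpp
#include <cassert>
#include <cctype>
#include <map>
#include <string>
#include <vector>

// Hypothetical model of one AgML structure (scalar members only).
class AgStructure {
public:
    using Record = std::map<std::string, double>;  // member name -> value

    // <Fill>: store one more version of the structure.
    void fill(const Record& record) {
        Record lowered;
        for (const auto& member : record) lowered[lower(member.first)] = member.second;
        versions_.push_back(lowered);
    }

    // <Use struct="..."/>: load the first Fill block.
    void use() {
        assert(!versions_.empty());
        current_ = versions_.front();
        loaded_ = true;
    }

    // <Use struct="..." select="member" value="v"/>: load the first Fill block
    // whose member equals v (first match wins: an assumption).
    bool use(const std::string& member, double value) {
        const std::string key = lower(member);
        for (const Record& version : versions_) {
            auto it = version.find(key);
            if (it != version.end() && it->second == value) {
                current_ = version;
                loaded_ = true;
                return true;
            }
        }
        return false;
    }

    // Equivalent of reading hexg_irad: member names are case-insensitive.
    double get(const std::string& member) const {
        assert(loaded_);  // behavior before Use is not well defined in AgML
        auto it = current_.find(lower(member));
        assert(it != current_.end());
        return it->second;
    }

private:
    static std::string lower(std::string s) {
        for (char& c : s) c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
        return s;
    }

    std::vector<Record> versions_;
    Record current_;
    bool loaded_ = false;
};
```

In this model a failed select leaves the previously loaded version in place; what AgML itself does in that case is not stated above.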

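The geometry arithmetic in the BBC module is easy to misread, so the sketch below re-evaluates it in standalone C++ using the values from the two HEXG Fill blocks (lengths in cm). It is an illustration only; HexTile, Point2 and the function names are hypothetical, and the rotation applied when each THXM is placed is not modelled. It reproduces ztotal (so the BBCM tube has dz = 4), places the six THXM triplets on a circle of radius irad times sqrt(28/3), 60 degrees apart, and places the three SHXT tiles of a triplet 2*irad apart, which is the flat-to-flat width of a hexagon with inscribed radius irad.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical container for the HEXG members used below (values in cm).
struct HexTile {
    double irad;     // inscribed-circle radius of one hexagonal tile
    double thick;    // tile thickness
    double zoffset;  // z-offset of the tile layer inside BBCM
};

struct Point2 {
    double x, y;
};

inline double pi() { return std::acos(-1.0); }

// Mirrors the two ztotal lines; the BBCM tube has dz = ztotal/2.
double bbcmHalfLength(const HexTile& small, const HexTile& large) {
    double ztotal = small.thick + 2 * std::fabs(small.zoffset);
    ztotal = ztotal + large.thick + 2 * std::fabs(large.zoffset);
    return ztotal / 2;
}

// Mirrors the x0, y0, rtrip, theta0 lines and the I_trip loop in BBCA.
std::vector<Point2> tripletCentres(double irad) {
    assert(irad > 0.0);
    const double x0 = irad * std::tan(pi() / 6.0);
    const double y0 = irad * 3.0;
    const double rtrip = std::sqrt(x0 * x0 + y0 * y0);
    const double theta0 = std::atan(y0 / x0);
    std::vector<Point2> centres;
    for (int iTrip = 0; iTrip <= 5; ++iTrip) {
        const double thetrip = theta0 + iTrip * pi() / 3.0;
        centres.push_back({rtrip * std::cos(thetrip), rtrip * std::sin(thetrip)});
    }
    return centres;
}

// Mirrors the J_sing loop in THXM: tile centres in the THXM local frame.
std::vector<Point2> tileCentres(double irad) {
    const double rsing = irad / std::sin(pi() / 3.0);
    std::vector<Point2> centres;
    for (int jSing = 0; jSing <= 2; ++jSing) {
        const double thesing = jSing * pi() * 2.0 / 3.0;
        centres.push_back({rsing * std::cos(thesing), rsing * std::sin(thesing)});
    }
    return centres;
}
```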

AgML Tutorials

Getting started developing geometries for the STAR experiment with AgML.

Setting up your local environment

You need to check out several directories and compile them in this order:

$ cvs co StarVMC/Geometry

$ cvs co StarVMC/StarGeometry

$ cvs co StarVMC/xgeometry

$ cvs co pams/geometry

$ cons +StarVMC/Geometry

$ cons

This will take a while to compile, during which time you can get a cup of coffee, or do your laundry, etc...

If you only want to visualize the STAR detector, you can check out:

$ cvs co StarVMC/Geometry/macros

Once this is done, you can visualize STAR geometries using the viewStarGeometry.C macro in AgML 1, and the loadAgML.C macro in AgML 2.0. With AgML 1:

$ root.exe

root [0] .L StarVMC/Geometry/macros/viewStarGeometry.C

root [1] nocache=true

root [2] viewall=true

root [3] viewStarGeometry("y2012")

With AgML 2.0:

root [0] .L StarVMC/Geometry/macros/loadAgML.C

root [1] loadAgML("y2016")

root [2] TGeoVolume *cave = gGeoManager->FindVolumeFast("CAVE");

root [3] cave->Draw("ogl"); // "ogl" uses the OpenGL viewer

Tutorial #1 -- Creating and Placing Volumes

Start by firing up your favorite text editor... preferably something which does syntax highlighting and checking on XML documents. Edit the first tutorial geometries located in StarVMC/Geometry/TutrGeo ...

$ emacs StarVMC/Geometry/TutrGeo/TutrGeo1.xml

This module illustrates how to create a new detector module, how to create and place a simple volume, and how to create and place multiple copies of that volume. Next, we need to attach this module to a geometry model in order to visualize it. Geometry models (or "tags") are defined in the StarGeo.xml file.

$ emacs StarVMC/Geometry/StarGeo.xml

There is a simple geometry, which only defines the CAVE. It's the first geometry tag called "black hole". You can add your detector here...


$ root.exe

root [0] .L StarVMC/Geometry/macros/viewStarGeometry.C

root [1] nocache=true

root [2] viewStarGeometry("test","TutrGeo1");

The "test" geometry tag is a very simple geometry, implementing only the wide-angle hall and the cave. All detectors, beam pipes, magnets, etc. have been removed. The second argument to viewStarGeometry specifies which geometry module(s) are to be built and added to the test geometry. In this case we add only TutrGeo1. (A comma-separated list of geometry modules can be provided if more than one geometry module is to be built.)

Now you can try modifying TutrGeo1. Feel free to add as many boxes in as many positions as you would like. Once you have done this, recompile in two steps:

$ cons +StarVMC/Geometry

$ cons

Tutorial #2 -- A few simple shapes, rotations and reflections

The second tutorial geometry is in StarVMC/Geometry/TutrGeo/TutrGeo2.xml. Again, view it using viewStarGeometry.C

$ root.exe

root [0] .L viewStarGeometry.C

root [1] nocache=true

root [2] viewStarGeometry("test","TutrGeo2")

What does the nocache=true statement do? It instructs viewStarGeometry.C to recreate the geometry, rather than load it from a ROOT file created the last time you ran the geometry. By default, if the macro finds a file named "test.root", it will load the geometry from that file to save time. You don't want this, since you know that you've changed the geometry.

The second tutorial illustrates a couple more simple shapes: cones and tubes. It also illustrates how to create reflections. Play around with the code a bit, recompile in the normal manner, then try viewing the geometry again.

Tutorial #3 -- Variables and Structures


AgML provides variables and structures. The third tutorial is in StarVMC/Geometry/TutrGeo/TutrGeo3.xml. Open it in a text editor and let's look at it. We define three variables, boxDX, boxDY and boxDZ, to hold the dimensions of the box we want to create. AgML is case-insensitive, so you can write these as boxdx, BoxDY and BOXDZ if you so choose. In general, choose whatever looks best and helps you keep track of the code you're writing. Next, check out the volume "ABOX". Note how the shape's dx, dy and dz arguments now reference the variables boxDX, boxDY and boxDZ. This allows us to create multiple versions of the volume ABOX. Let's view the geometry and see.

$ root.exe

root [0] .L StarVMC/Geometry/macros/viewStarGeometry.C

root [1] nocache=true

root [2] viewStarGeometry("test","TutrGeo3")

Launch a new TBrowser and open the "test" geometry. Double-click test --> Master Volume --> CAVE --> TUTR. You now see all of the concrete volumes which have been created by ROOT. It should look like what you see at the right. We have "ABOX", but we also have ABO1 and ABO2. This demonstrates an important concept in AgML. Each <Volume ...> block actually defines a volume "factory". It allows you to create multiple versions of a volume, each differing by the shape of the volume. When the shape is changed, a new volume is created with a nickname, where the last letter in the volume name is replaced by [1 2 3 ... 0 a b c ... z] (then the second-to-last letter, then the third...).

Structures provide an alternate means to define variables. In order to populate the members of a structure with values, you use the Fill statement. Multiple Fill statements for a given structure may be defined, providing multiple sets of values. In order to select a given set of values, the <Use ...> operator is invoked. In TutrGeo3, we create and place 5 different tubes, using the data stored in the Fill statements. However, you might notice in the browser that only two concrete instances of the tube are created. What is going on here? This is another feature of AgML. When the shape is changed, AgML will look for another concrete volume with exactly the same shape. If it finds one, it will use that volume. If it doesn't, a new volume is created. There's a lot going on in this tutorial, so play around with it a bit.
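The volume-factory behavior described in this tutorial (reuse a concrete volume whose shape matches exactly, otherwise create one with a nickname) can be sketched as follows. This is a simplified, hypothetical model for intuition, not AgML's implementation: ShapeKey and VolumeFactory are invented names, a shape is reduced to a type string plus a parameter list compared exactly, and only the first stage of the nickname scheme (replacing the last character with 1 ... 9, 0, a ... z) is modelled, because the tutorial does not spell out exactly how later characters are substituted.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical shape description: a shape type plus its parameters,
// compared exactly ("exactly the same shape").
struct ShapeKey {
    std::string type;            // e.g. "box" or "tube"
    std::vector<double> params;  // e.g. {dx, dy, dz}

    bool operator==(const ShapeKey& other) const {
        return type == other.type && params == other.params;
    }
};

// Hypothetical stand-in for one <Volume ...> block acting as a factory.
class VolumeFactory {
public:
    explicit VolumeFactory(std::string name) : name_(std::move(name)) {
        assert(name_.size() == 4);  // four-character volume names, as in the examples
    }

    // Returns the concrete volume for this shape: an existing one if a volume
    // with an identical shape was already made, otherwise a new one.
    std::string concreteVolume(const ShapeKey& shape) {
        for (const auto& made : made_) {
            if (made.first == shape) return made.second;
        }
        const std::string nick = nickname(made_.size());
        made_.emplace_back(shape, nick);
        return nick;
    }

    std::size_t count() const { return made_.size(); }

private:
    // The first concrete volume keeps the original name; later ones replace
    // the last character with 1..9, 0, a..z. Later stages are not modelled.
    std::string nickname(std::size_t index) const {
        static const std::string suffixes = "1234567890abcdefghijklmnopqrstuvwxyz";
        if (index == 0) return name_;
        assert(index <= suffixes.size());
        std::string nick = name_;
        nick.back() = suffixes[index - 1];
        return nick;
    }

    std::string name_;
    std::vector<std::pair<ShapeKey, std::string>> made_;
};
```

Placing five tubes that use only two distinct sets of dimensions through such a factory yields two concrete volumes, matching what the browser shows for TutrGeo3.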


Tutorial #4 -- Some more shapes

AgML vs AgSTAR Comparison

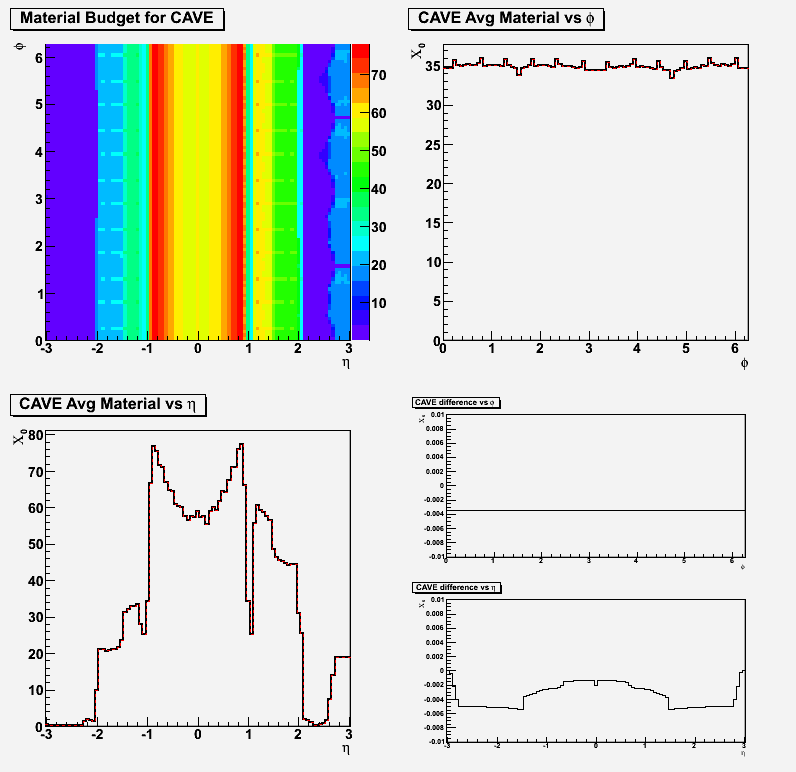

Abstract: We compare the AgML and AgSTAR descriptions of recent revisions of the STAR Y2005 through Y2011 geometry models. We are specifically interested in the suitability of the AgML model for tracking. We therefore plot the material budget vs. pseudorapidity for (a) all detectors, (b) the time projection chamber (TPC), and (c) the sensitive volumes of the TPC. We also plot (d) the material found in front of the TPC active volumes.

Description of the Plots

Below you will find four columns of plots, for the highest revision of each geometry from y2005 to the present. From left to right, the columns compare the material budget for STAR and its daughter volumes, the material budget for the TPC and its immediate daughter volumes, the material budget for the active volumes in the TPC, and the material in front of the active volume of the TPC. In the context of tracking, the right-most column is the most important. Each column contains three plots. The top plot shows the material budget in the AgML model, the middle plot the material budget in the AgSTAR model, and the bottom plot the difference divided by the AgSTAR model. The y-axis on the difference plot extends from -2.5% to +2.5%.
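A minimal sketch of the bottom-panel quantity may help when reproducing these plots. It assumes the two material budgets are available as equal-length arrays of per-bin values (for example, one value per pseudorapidity bin) and that the difference is taken as AgML minus AgSTAR; the sign convention, the function names and reporting zero-material AgSTAR bins as 0 are all assumptions. The second function lists the bins that fall outside a tolerance such as the ±2.5% range shown on the plots.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// (AgML - AgSTAR) / AgSTAR for each bin; bins with zero AgSTAR material are set to 0.
std::vector<double> relativeDifference(const std::vector<double>& agml,
                                       const std::vector<double>& agstar) {
    assert(agml.size() == agstar.size());
    std::vector<double> diff(agml.size(), 0.0);
    for (std::size_t i = 0; i < agml.size(); ++i) {
        if (agstar[i] != 0.0) diff[i] = (agml[i] - agstar[i]) / agstar[i];
    }
    return diff;
}

// Indices of bins whose relative difference exceeds the tolerance in magnitude
// (0.025 corresponds to the +/-2.5% range of the difference plots).
std::vector<std::size_t> binsOutside(const std::vector<double>& diff, double tolerance) {
    std::vector<std::size_t> outside;
    for (std::size_t i = 0; i < diff.size(); ++i) {
        if (std::fabs(diff[i]) > tolerance) outside.push_back(i);
    }
    return outside;
}
```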

STAR Y2011 Geometry Tag

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2010c Geometry Tag

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2009c Geometry Tag

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2008e Geometry Tag

Global Issues

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2007h Geometry Tag

Global Issues

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Issues with SVT.

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2006g Geometry Tag

Global Issues

Note: TpceGeo2.xml does not suffer from the overlap issue in TpceGeo3a.xml.

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

STAR Y2005i Geometry Tag

Global Issues

Issues with TpceGeo3a.xml

Issues with PhmdGeo.xml

Plots: (a) Material in STAR Detector and daughters; (b) Material in TPC and daughters; (c) Material in TPC active volumes; (d) Material in front of TPC active volumes.

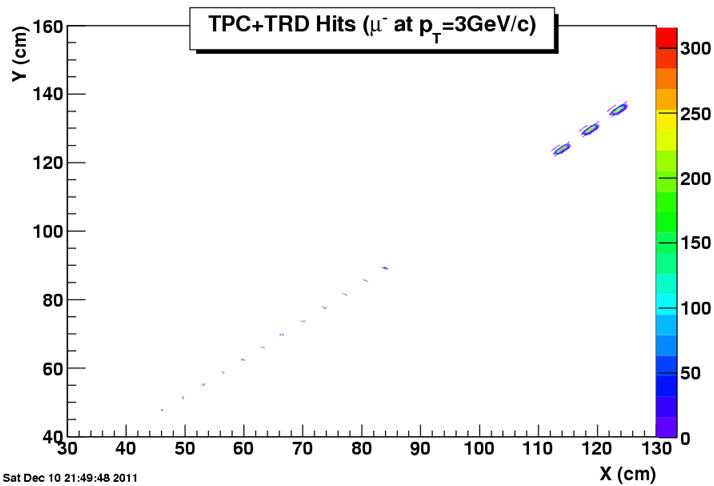

AgML vs AgSTAR tracking comparison

Attached is a comparison of track reconstruction using the Sti tracker, with AgI and AgML geometries as input.

Interfacing the New Detector with the STAR Big Full Chain

As STAR gradually comes to the end of its AA heavy-ion program and more focus is put on polarized pp/pA physics and on a future ep/eA program in the eRHIC era, many upgrades are foreseen to strengthen the detector's capability in the forward region. These include both near-term upgrades for the polarized pp program, e.g. the FMS/FSC/FHC calorimeters and FGT/VFGT tracking, and upgrades for eSTAR in about 5 to 10 years. Different detector concepts exist, and optimization is needed to fit them into the STAR physics program and into the current STAR detector system. Reaching a proper solution will require a great deal of Monte Carlo (MC) work, carried out largely in the STAR simulation framework because of its flexibility, robustness and proven performance over the last decade.

> cons +pams

> cons +StarVMC/Geometry

> cons

List of Default AgML Materials

List of default AgML materials and mixtures. To get a complete list of all materials defined in a geometry, execute AgMaterial::List() in ROOT, once the geometry has been created.

[-] Hydrogen: a= 1.01 z= 1 dens= 0.071 radl= 865 absl= 790 isvol= <unset> nelem= 1

[-] Deuterium: a= 2.01 z= 1 dens= 0.162 radl= 757 absl= 342 isvol= <unset> nelem= 1

[-] Helium: a= 4 z= 2 dens= 0.125 radl= 755 absl= 478 isvol= <unset> nelem= 1

[-] Lithium: a= 6.94 z= 3 dens= 0.534 radl= 155 absl= 121 isvol= <unset> nelem= 1

[-] Berillium: a= 9.01 z= 4 dens= 1.848 radl= 35.3 absl= 36.7 isvol= <unset> nelem= 1

[-] Carbon: a= 12.01 z= 6 dens= 2.265 radl= 18.8 absl= 49.9 isvol= <unset> nelem= 1

[-] Nitrogen: a= 14.01 z= 7 dens= 0.808 radl= 44.5 absl= 99.4 isvol= <unset> nelem= 1

[-] Neon: a= 20.18 z= 10 dens= 1.207 radl= 24 absl= 74.9 isvol= <unset> nelem= 1

[-] Aluminium: a= 26.98 z= 13 dens= 2.7 radl= 8.9 absl= 37.2 isvol= <unset> nelem= 1

[-] Iron: a= 55.85 z= 26 dens= 7.87 radl= 1.76 absl= 17.1 isvol= <unset> nelem= 1

[-] Copper: a= 63.54 z= 29 dens= 8.96 radl= 1.43 absl= 14.8 isvol= <unset> nelem= 1

[-] Tungsten: a= 183.85 z= 74 dens= 19.3 radl= 0.35 absl= 10.3 isvol= <unset> nelem= 1

[-] Lead: a= 207.19 z= 82 dens= 11.35 radl= 0.56 absl= 18.5 isvol= <unset> nelem= 1

[-] Uranium: a= 238.03 z= 92 dens= 18.95 radl= 0.32 absl= 12 isvol= <unset> nelem= 1

[-] Air: a= 14.61 z= 7.3 dens= 0.001205 radl= 30400 absl= 67500 isvol= <unset> nelem= 1

[-] Vacuum: a= 14.61 z= 7.3 dens= 1e-06 radl= 3.04e+07 absl= 6.75e+07 isvol= <unset> nelem= 1

[-] Silicon: a= 28.09 z= 14 dens= 2.33 radl= 9.36 absl= 45.5 isvol= <unset> nelem= 1

[-] Argon_gas: a= 39.95 z= 18 dens= 0.002 radl= 11800 absl= 70700 isvol= <unset> nelem= 1

[-] Nitrogen_gas: a= 14.01 z= 7 dens= 0.001 radl= 32600 absl= 75400 isvol= <unset> nelem= 1

[-] Oxygen_gas: a= 16 z= 8 dens= 0.001 radl= 23900 absl= 67500 isvol= <unset> nelem= 1

[-] Polystyren: a= 11.153 z= 5.615 dens= 1.032 radl= <unset> absl= <unset> isvol= <unset> nelem= 2

| Element | A | Z | W |
|---|---|---|---|
| C | 12.000 | 6.000 | 0.923 |
| H | 1.000 | 1.000 | 0.077 |

[-] Polyethylene: a= 10.427 z= 5.285 dens= 0.93 radl= <unset> absl= <unset> isvol= <unset> nelem= 2

| Element | A | Z | W |
|---|---|---|---|
| C | 12.000 | 6.000 | 0.857 |
| H | 1.000 | 1.000 | 0.143 |

[-] Mylar: a= 12.87 z= 6.456 dens= 1.39 radl= <unset> absl= <unset> isvol= <unset> nelem= 3

| Element | A | Z | W |
|---|---|---|---|
| C | 12.000 | 6.000 | 0.625 |
| H | 1.000 | 1.000 | 0.042 |
| O | 16.000 | 8.000 | 0.333 |
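The a and z values listed for the three mixtures above are consistent with W-weighted averages of the component A and Z values (for Mylar: 12*0.625 + 1*0.042 + 16*0.333 = 12.87). The sketch below reproduces that arithmetic as a stand-alone consistency check. The struct and function names are hypothetical, and this is not the AgML implementation itself.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One component of a mixture, as listed in the tables above:
// atomic mass A, atomic number Z and relative weight W.
struct Component {
    double a;
    double z;
    double w;
};

// Effective (A, Z) of the mixture.
struct EffectiveAZ {
    double a;
    double z;
};

// W-weighted averages of the component A and Z values. The weights are
// normalised by their sum, so they need not add up to exactly one.
EffectiveAZ mixtureAZ(const std::vector<Component>& parts) {
    double sumW = 0.0, sumA = 0.0, sumZ = 0.0;
    for (const Component& c : parts) {
        sumW += c.w;
        sumA += c.w * c.a;
        sumZ += c.w * c.z;
    }
    assert(sumW > 0.0 && "mixture needs a positive total weight");
    return {sumA / sumW, sumZ / sumW};
}
```

For example, mixtureAZ({{12.0, 6.0, 0.857}, {1.0, 1.0, 0.143}}) gives a = 10.427 and z = 5.285, matching the Polyethylene entry.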

Production Geometry Tags

This page was merged with the STAR Geometry in simulation & reconstruction page and is maintained by STAR's librarian.

Attic

Retired Simulation Pages kept here.

Action Items

Immediate action items:

- Y2008 tag
  - find out about the status of the FTPC (can't locate the relevant e-mail now)
  - find out about the status of the PMD in 2008 (open/closed)
  - ask Akio about possible updates of the FMS code and get the final version
  - based on Dave's records, add a small amount of material to the beampipe
  - review the technical drawings from Bill, Will and others and start coding the support structure
  - extract information from the TOF people about the likely configuration
  - when ready, produce the material profile plots for Y2008 in slices in Z
- TUP tags
  - work with Jim Thomas, Gerrit and primarily Spiros on the definition of the geometry for the next TUP wave
  - coordinate with Spiros, Jim and Yuri a possible repass of the recent TUP MC data without the IST
- Older tags
  - check the more recent correction to the SVT code (carbon instead of Be used in the water channels)
  - provide code for the correction for 3 layers of mylar on the beampipe, as referred to above for Y2008
  - check with Dave about the dimensions of the water channels (likely incorrect in GEANT)
  - determine which years we will choose to retrofit with the improved SVT (ask STAR members)
- MTD
  - establish a new UPGRXX tag for the MTD simulation
  - supervise and help Lijuan in extending the field map
  - provide a facility for reading a separate map in starsim and root4star (with Yuri)
- Misc
  - collect feedback on possible simulation plans for fall 2007
  - revisit the codes for event pre-selection ("hooks")
  - revisit the event mixing scripts
- Development
  - create a schema to store MC run catalog data, with a view to automating job definition (Michael has promised help)

Beampipe support geometry and other news

Documentation for the beampipe support geometry description development

After the completion of the 2007 run, the SVT and the SSD were removed from the STAR detector along with their utility lines. The support structure for the beampipe, however, remained in place.

The following drawings describe the structure of the beampipe support as it existed in late 2007 and, probably, throughout 2008.

Datasets

Here we present information about our datasets.

2005

| Description | Dataset name | Statistics, thousands | Status | Moved to HPSS | Comment |
|---|---|---|---|---|---|
| Herwig 6.507, Y2004Y | rcf1259 | 225 | Finished | Yes | 7 GeV < Pt < 9 GeV |
| Herwig 6.507, Y2004Y | rcf1258 | 248 | Finished | Yes | 5 GeV < Pt < 7 GeV |
| Herwig 6.507, Y2004Y | rcf1257 | 367 | Finished | Yes | 4 GeV < Pt < 5 GeV |
| Herwig 6.507, Y2004Y | rcf1256 | 424 | Finished | Yes | 3 GeV < Pt < 4 GeV |
| Herwig 6.507, Y2004Y | rcf1255 | 407 | Finished | Yes | 2 GeV < Pt < 3 GeV |
| Herwig 6.507, Y2004Y | rcf1254 | 225 | Finished | Yes | 35 GeV < Pt < 100 GeV |
| Herwig 6.507, Y2004Y | rcf1253 | 263 | Finished | Yes | 25 GeV < Pt < 35 GeV |
| Herwig 6.507, Y2004Y | rcf1252 | 263 | Finished | Yes | 15 GeV < Pt < 25 GeV |
| Herwig 6.507, Y2004Y | rcf1251 | 225 | Finished | Yes | 11 GeV < Pt < 15 GeV |
| Herwig 6.507, Y2004Y | rcf1250 | 300 | Finished | Yes | 9 GeV < Pt < 11 GeV |
| Hijing 1.382 AuAu 200 GeV minbias, 0 < b < 20 fm | rcf1249 | 24 | Finished | Yes | Tracking, new SVT geo, diamond: 60, +-30cm, Y2005D |
| Herwig 6.507, Y2004Y | rcf1248 | 15 | Finished | Yes | 35 GeV < Pt < 45 GeV |
| Herwig 6.507, Y2004Y | rcf1247 | 25 | Finished | Yes | 25 GeV < Pt < 35 GeV |
| Herwig 6.507, Y2004Y | rcf1246 | 50 | Finished | Yes | 15 GeV < Pt < 25 GeV |
| Herwig 6.507, Y2004Y | rcf1245 | 100 | Finished | Yes | 11 GeV < Pt < 15 GeV |
| Herwig 6.507, Y2004Y | rcf1244 | 200 | Finished | Yes | 9 GeV < Pt < 11 GeV |
| CuCu 62.4 GeV, Y2005C | rcf1243 | 5 | Finished | No | Same as rcf1242 + keep low-energy tracks |
| CuCu 62.4 GeV, Y2005C | rcf1242 | 5 | Finished | No | SVT tracking test, 10 keV e/m process cut (cf. rcf1237) |
| 10 J/Psi, Y2005X, SVT out | rcf1241 | 30 | Finished | No | Study of the SVT material effect |
| 10 J/Psi, Y2005X, SVT in | rcf1240 | 30 | Finished | No | Study of the SVT material effect |
| 100 pi0, Y2005X, SVT out | rcf1239 | 18 | Finished | No | Study of the SVT material effect |
| 100 pi0, Y2005X, SVT in | rcf1238 | 20 | Finished | No | Study of the SVT material effect |
| CuCu 62.4 GeV, Y2005C | rcf1237 | 5 | Finished | No | SVT tracking test, pilot run |
| Herwig 6.507, Y2004Y | rcf1236 | 8 | Finished | No | Test run for initial comparison with Pythia, 5 GeV < Pt < 7 GeV |
| Pythia, Y2004Y | rcf1235 | 100 | Finished | No | MSEL=2, min bias |
| Pythia, Y2004Y | rcf1234 | 90 | Finished | No | MSEL=0, CKIN(3)=0, MSUB=91,92,93,94,95 |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1233 | 308 | Finished | Yes | 4<Pt<5, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1232 | 400 | Finished | Yes | 3<Pt<4, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1231 | 504 | Finished | Yes | 2<Pt<3, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1230 | 104 | Finished | Yes | 35<Pt, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1229 | 208 | Finished | Yes | 25<Pt<35, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1228 | 216 | Finished | Yes | 15<Pt<25, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1227 | 216 | Finished | Yes | 11<Pt<15, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1226 | 216 | Finished | Yes | 9<Pt<11, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1225 | 216 | Finished | Yes | 7<Pt<9, MSEL=1, GHEISHA |
| Pythia, Y2004Y, sp.2 (CDF tune A) | rcf1224 | 216 | Finished | Yes | 5<Pt<7, MSEL=1, GHEISHA |
| Pythia special tune2 Y2004Y, GCALOR | rcf1223 | 100 | Finished | Yes | 4<Pt<5, GCALOR |
| Pythia special tune2 Y2004Y, GHEISHA | rcf1222 | 100 | Finished | Yes | 4<Pt<5, GHEISHA |
| Pythia special run 3 Y2004C | rcf1221 | 100 | Finished | Yes | ENER 200.0, MSEL 2, MSTP (51)=7, MSTP (81)=1, MSTP (82)=1, PARP (82)=1.9, PARP (83)=0.5, PARP (84)=0.2, PARP (85)=0.33, PARP (86)=0.66, PARP (89)=1000, PARP (90)=0.16, PARP (91)=1.0, PARP (67)=1.0 |
| Pythia special run 2 Y2004C (CDF tune A) | rcf1220 | 100 | Finished | Yes | ENER 200.0, MSEL 2, MSTP (51)=7, |
| Pythia special run 1 Y2004C | rcf1219 | 100 | Finished | Yes | ENER 200.0, MSEL 2, MSTP (51)=7, MSTP (81)=1, MSTP (82)=1, PARP (82)=1.9, PARP (83)=0.5, PARP (84)=0.2, PARP (85)=0.33, PARP (86)=0.66, PARP (89)=1000, PARP (90)=0.16, PARP (91)=1.5, PARP (67)=1.0 |
| Hijing 1.382 AuAu 200 GeV central 0 < b < 3 fm | rcf1218 | 50 | Finished | Yes | Statistics enhancement of rcf1209 with a smaller diamond: 60, +-30cm, Y2004a |
| Hijing 1.382 CuCu 200 GeV minbias 0 < b < 14 fm | rcf1216 | 52 | Finished | Yes | Geometry: Y2005x |
| Hijing 1.382 AuAu 200 GeV minbias 0 < b < 20 fm | rcf1215 | 100 | Finished | Yes | Geometry: Y2004a, Special D decays |

2006

| Description | Dataset name | Statistics, thousands | Status | Moved to HPSS | Comment |

|---|---|---|---|---|---|

| AuAu 200 GeV central | rcf1289 | 1 | Finished | No | upgr06: Hijing, D0 and superposition |

| AuAu 200 GeV central | rcf1288 | 0.8 | Finished | No | upgr11: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1287 | 5 | Finished | No | upgr11: Hijing, D0 and superposition |

| AuAu 200 GeV central | rcf1286 | 1 | Finished | No | upgr10: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1285 | 6 | Finished | No | upgr10: Hijing, D0 and superposition |

| AuAu 200 GeV central | rcf1284 | 1 | Finished | No | upgr09: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1283 | 6 | Finished | No | upgr09: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1282 | 38 | Finished | No | upgr06: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1281 | 38 | Finished | Yes | upgr08: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1280 | 38 | Finished | Yes | upgr01: Hijing, D0 and superposition |

| AuAu 200 GeV min bias | rcf1279 | 38 | Finished | Yes | upgr07: Hijing, D0 and superposition |

| Extension of 1276: D0 superposition | rcf1278 | 5 | Finished | No | upgr07: Z cut=+-300cm |

| AuAu 200 GeV min bias | rcf1277 | 5 | Finished | No | upgr05: Z cut=+-300cm |

| AuAu 200 GeV min bias | rcf1276 | 35 | Finished | No | upgr05: Hijing, D0 and superposition |

| Pythia 200 GeV + HF | rcf1275 | 23*4 | Finished | No | J/Psi and Upsilon(1S,2S,3S) mix for embedding |

| AuAu 200 GeV min bias | rcf1274 | 10 | Finished | No | upgr02 geo tag, |eta|<1.5 (tracking upgrade request) |

| Pythia 200 GeV | rcf1273 | 600 | Finished | Yes | Pt <2 (Completing the rcf1224-1233 series) |

| CuCu 200 GeV min bias+D0 mix | rcf1272 | 50+2*50*8 | Finished | Yes | Combinatorial boost of rcf1261, sigma: 60, +-30 |

| Pythia 200 GeV | rcf1233 | 300 | Finished | Yes | 4< Pt <5 (rcf1233 extension) |

| Pythia 200 GeV | pds1232 | 200 | Finished | Yes | 3< Pt <4 (rcf1232 clone) |

| Pythia 200 GeV | pds1231 | 240 | Finished | Yes | 2< Pt <3 (rcf1231 clone) |

| Pythia 200 GeV | rcf1229 | 200 | Finished | Yes | 25< Pt <35 (rcf1229 extension) |

| Pythia 200 GeV | rcf1228 | 200 | Finished | Yes | 15< Pt <25 (rcf1228 extension) |

| Pythia 200 GeV | rcf1227 | 208 | Finished | Yes | 11< Pt <15 (rcf1227 extension) |

| Pythia 200 GeV | rcf1226 | 200 | Finished | Yes | 9< Pt <11 (rcf1226 extension) |

| Pythia 200 GeV | rcf1225 | 200 | Finished | Yes | 7< Pt <9 (rcf1225 extension) |

| Pythia 200 GeV | rcf1224 | 212 | Finished | Yes | 5< Pt <7 (rcf1224 extension) |

| Pythia 200 GeV Y2004Y CDF_A | rcf1271 | 120 | Finished | Yes | 55< Pt <65 |

| Pythia 200 GeV Y2004A CDF_A | rcf1270 | 120 | Finished | Yes | 45< Pt <55 |

| CuCu 200 GeV min bias | rcf1266 | 10 | Finished | Yes | SVT study: clams and two ladders |

| CuCu 200 GeV min bias | rcf1265 | 10 | Finished | Yes | SVT study: clams displaced |

| CuCu 200 GeV min bias | rcf1264 | 10 | Finished | Yes | SVT study: rotation of the barrel |

| CuCu 62.4 GeV min bias+D0 mix | rcf1262 | 50*3 | Finished | Yes | 3 subsets: Hijing, single D0, and the mix |

| CuCu 200 GeV min bias+D0 mix | rcf1261 | 50*3 | Finished | No | 3 subsets: Hijing, single D0, and the mix |

| 1 J/Psi over 200 GeV minbias AuAu | rcf1260 | 10 | Finished | No | J/Psi mixed with 200 GeV AuAu Hijing Y2004Y 60/35 vertex |

2007

Unless stated otherwise, all pp collisions are modeled with Pythia and all AA collisions with Hijing. Statistics are listed in thousands of events. A multiplication factor in some of the records reflects the fact that event mixing was done for a few types of particles on the same base of original event files.

| Name | System/Energy | Statistics | Status | HPSS | Comment | Site |
|---|---|---|---|---|---|---|
| rcf1290 | AuAu200 0<b<3fm, Zcut=5cm | 32*5 | Done | Yes | Hijing+D0+Lac2+D0_mix+Lac2_mix | rcas |
| rcf1291 | pp200/UPGR07/Zcut=10cm | 10 | Done | Yes | ISUB = 11, 12, 13, 28, 53, 68 | rcas |
| rcf1292 | pp500/UPGR07/Zcut=10cm | 10 | Done | Yes | ISUB = 11, 12, 13, 28, 53, 68 | rcas |
| rcf1293 | pp200/UPGR07/Zcut=30cm | 205 | Done | Yes | ISUB = 11, 12, 13, 28, 53, 68 | rcas |
| rcf1294 | pp500/UPGR07/Zcut=30cm | 10 | Done | Yes | ISUB = 11, 12, 13, 28, 53, 68 | rcas |
| rcf1295 | AuAu200 0<b<20fm, Zcut=30cm | 20 | Done | Yes | QA run for the Y2007 tag | rcas |
| rcf1296 | AuAu200 0<b<3fm, Zcut=10cm | 100*5 | Done | Yes | Hijing,B0,B+,B0_mix,B+_mix, Y2007 | rcas |
| rcf1297 | AuAu200 0<b<20fm, Zcut=300cm | 40 | Done | Yes | Pile-up simulation in the TUP studies, UPGR13 | rcas |
| rcf1298 | AuAu200 0<b<3fm, Zcut=15cm | 100*5 | Done | Part | Hijing,D0,Lac2,D0_mix,Lac2_mix, UPGR13 | rcas |
| rcf1299 | pp200/Y2005/Zcut=50cm | 800 | Done | Yes | Pythia, photon mix, pi0 mix | rcas |
| rcf1300 | pp200/UPGR13/Zcut=15cm | 100 | Done | No | Pythia, MSEL=4 (charm) | rcas |
| rcf1301 | pp200/UPGR13/Zcut=300cm | 84 | Done | No | Pythia, MSEL=1, wide vertex | rcas |
| rcf1302 | pp200 Y2006C | 120 | Done | No | Pythia for Spin PWG, Pt(45,55)GeV | rcas |
| rcf1303 | pp200 Y2006C | 120 | Done | No | Pythia for Spin PWG, Pt(35,45)GeV | rcas |
| rcf1304 | pp200 Y2006C | 120 | Done | No | Pythia for Spin PWG, Pt(55,65)GeV | rcas |
| rcf1296 | Upsilon S1,S2,S3 + Hijing | 15*3 | Done | No | Muon Telescope Detector, ext. of 1296 | rcas |
| rcf1306 | pp200 Y2006C | 400 | Done | Yes | Pythia for Spin PWG, Pt(25,35)GeV | rcas |
| rcf1307 | pp200 Y2006C | 400 | Done | Yes | Pythia for Spin PWG, Pt(15,25)GeV | rcas |
| rcf1308 | pp200 Y2006C | 420 | Done | Yes | Pythia for Spin PWG, Pt(11,15)GeV | rcas |
| rcf1309 | pp200 Y2006C | 420 | Done | Yes | Pythia for Spin PWG, Pt(9,11)GeV | rcas |
| rcf1310 | pp200 Y2006C | 420 | Done | Yes | Pythia for Spin PWG, Pt(7,9)GeV | rcas |
| rcf1311 | pp200 Y2006C | 400 | Done | Yes | Pythia for Spin PWG, Pt(5,7)GeV | rcas |
| rcf1312 | pp200 Y2004Y | 544 | Done | No | Di-jet CKIN(3,4,7,8,27,28)=7,9,0.0,1.0,-0.4,0.4 | rcas |
| rcf1313 | pp200 Y2004Y | 760 | Done | No | Di-jet CKIN(3,4,7,8,27,28)=9,11,-0.4,1.4,-0.5,0.6 | rcas |
| rcf1314 | pp200 Y2004Y | 112 | Done | No | Di-jet CKIN(3,4,7,8,27,28)=11,15,-0.2,1.2,-0.6,-0.3 | Grid |
| rcf1315 | pp200 Y2004Y | 396 | Done | No | Di-jet CKIN(3,4,7,8,27,28)=11,15,-0.5,1.5,-0.3,0.4 | Grid |
| rcf1316 | pp200 Y2004Y | 132 | Done | No | Di-jet CKIN(3,4,7,8,27,28)=11,15,0.0,1.0,0.4,0.7 | Grid |
| rcf1317 | pp200 Y2006C | 600 | Done | Yes | Pythia for Spin PWG, Pt(4,5)GeV | Grid |
| rcf1318 | pp200 Y2006C | 690 | Done | Yes | Pythia for Spin PWG, Pt(3,4)GeV | Grid |
| rcf1319 | pp200 Y2006C | 690 | Done | Yes | Pythia for Spin PWG, Minbias | Grid |
| rcf1320 | pp62.4 Y2006C | 400 | Done | No | Pythia for Spin PWG, Pt(4,5)GeV | Grid |
| rcf1321 | pp62.4 Y2006C | 250 | Done | No | Pythia for Spin PWG, Pt(3,4)GeV | Grid |
| rcf1322 | pp62.4 Y2006C | 220 | Done | No | Pythia for Spin PWG, Pt(5,7)GeV | Grid |
| rcf1323 | pp62.4 Y2006C | 220 | Done | No | Pythia for Spin PWG, Pt(7,9)GeV | Grid |
| rcf1324 | pp62.4 Y2006C | 220 | Done | No | Pythia for Spin PWG, Pt(9,11)GeV | Grid |
| rcf1325 | pp62.4 Y2006C | 220 | Done | No | Pythia for Spin PWG, Pt(11,15)GeV | Grid |
| rcf1326 | pp62.4 Y2006C | 200 | Running | No | Pythia for Spin PWG, Pt(15,25)GeV | Grid |
| rcf1327 | pp62.4 Y2006C | 200 | Running | No | Pythia for Spin PWG, Pt(25,35)GeV | Grid |
| rcf1328 | pp62.4 Y2006C | 50 | Running | No | Pythia for Spin PWG, Pt(35,45)GeV | Grid |

2009

| Name | System/Energy | Range | Statistics | Comment |
|---|---|---|---|---|
| rcf9001 | pp200, y2007g | 03_04gev | 690k | Jet Study AuAu200(PP200) JLC PWG |
| rcf9002 | | 04_05gev | 686k | |
| rcf9003 | | 05_07gev | 398k | |
| rcf9004 | | 07_09gev | 420k | |
| rcf9005 | | 09_11gev | 412k | |
| rcf9006 | | 11_15gev | 420k | |
| rcf9007 | | 15_25gev | 397k | |
| rcf9008 | | 25_35gev | 400k | |
| rcf9009 | | 35_45gev | 120k | |
| rcf9010 | | 45_55gev | 118k | |
| rcf9011 | | 55_65gev | 120k | |

| Name | System/Energy | Range | Statistics | Comment |
|---|---|---|---|---|
| rcf9021 | pp200, y2008 | 03_04 GeV | 690k | Jet Study AuD200(PP200) JLC PWG |
| rcf9022 | | 04_05 GeV | 686k | |
| rcf9023 | | 05_07 GeV | 398k | |
| rcf9024 | | 07_09 GeV | 420k | |
| rcf9025 | | 09_11 GeV | 412k | |
| rcf9026 | | 11_15 GeV | 420k | |
| rcf9027 | | 15_25 GeV | 397k | |
| rcf9028 | | 25_35 GeV | 400k | |
| rcf9029 | | 35_45 GeV | 120k | |
| rcf9030 | | 45_55 GeV | 118k | |
| rcf9031 | | 55_99 GeV | 120k | |

| Name | System/Energy | Range | Statistics | Comment |
|---|---|---|---|---|
| rcf9041 | PP500, Y2009 | 03_04gev | 500k | Spin Study PP500 Spin group (Matt, Jim, Jan) 2.3M evts |
| rcf9042 | | 04_05gev | 500k | |
| rcf9043 | | 05_07gev | 300k | |
| rcf9044 | | 07_09gev | 250k | |
| rcf9045 | | 09_11gev | 200k | |
| rcf9046 | | 11_15gev | 100k | |
| rcf9047 | | 15_25gev | 100k | |
| rcf9048 | | 25_35gev | 100k | |
| rcf9049 | | 35_45gev | 100k | |
| rcf9050 | | 45_55gev | 25k | |
| rcf9051 | | 55_99gev | 25k | |
| rcf9061 | CuCu200, y2005h | B0_14 | 200k | CuCu200 radiation length budget, Y.Fisyak, KyungEon Choi |
| rcf9062 | AuAu200, y2007h | B0_14 | 150k | AuAu200 radiation length budget, Y.Fisyak, KyungEon Choi |

2010

Information on Monte Carlo Data Samples

| Parameter | Value |
|---|---|
| Geometry | y2009a |
| Library | SL09g |
| Generator | Pythia 6.4.22 |
| Tune | 320 |
| Field | -5.0 |
| ETA | -10 < η < +10 |
| PHI | -π < φ < +π |
| vertex | 0, 0, -2 |
| width | 0.015, 0.015, 42.0 |

| Sample | Channel | Events |
|---|---|---|
| rcf10000 | W+ → e+ nu | 10k |
| rcf10001 | W- → e- nu | 6k |
| rcf10002 | W+ → tau+ nu, W- → tau- nu | 10k |
| rcf10003 | pp → W+/- + jet | 10k |
| rcf10004 | Z → e+e-, no Z/gamma interference | 4k |
| rcf10005 | Z → all but e+e- | 10k |
| rcf10006 | QCD w/ partonic pT > 35 GeV | 100k |

Geometry Tag Options

This page documents the options in geometry.g which define each of the production tags.


Geometry Tag Options II

The attached spreadsheets document the production tags in STARSIM as of 11/30/2009. At that time, the y2006h and y2010 tags were still in development and not ready for production.

Material Balance Histograms

Y2008a

y2008a full and TPC-only material histograms

[Figures: y2008aStar]

[Figures: y2008aTpce]

y2005g

[Figures: y2005gStar]

[Figures: y2005gTpce]

y2008yf

[Figures: y2008yfStar]

[Figures: y2008yfTpce]

y2009

[Figures: y2009Star]

[Figures: y2009Tpce]

STAR AgML Geometry Comparison with STARSIM/AgSTAR

STAR Geometry Comparison: AgML vs AgSTAR

At the left is a general status for each geometry tag that compiles in AgML. All volumes are tested recursively except for the "IBEM" and similar support structures for the VPD, and the Endcap SMD strips. (The ESMD planes are tested as a unit, rather than testing all 2*12*288 SMD strips.)

Color codes:

- Green: no differences larger than 1%.
- Yellow: the volume did not appear in the AgSTAR geometry.
- Orange: the difference was larger than 1%, but the absolute difference is negligible.
- Red: a difference larger than 1% was detected for a significant amount of material, or a negligible but widespread difference was detected.

At the right is a PDF file for each geometry tag. For each volume we show two plots. The top plot shows the absolute number of radiation lengths that a geantino encounters while traversing the geometry along a straight line at the given pseudorapidity, averaged over phi. The left (right) hashes show the AgML (AgSTAR) geometry. The lower plot shows the difference between the two histograms, expressed as a fractional value. Frequently the differences are small, e.g. 10^-6, and ROOT rescales the plots accordingly. Since it is difficult to read the scales of so many plots at once, we have color coded the plots (the color coding appears to fail for some histograms). The meaning of the color coding is summarized above.
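The color-coding rules can be read as a simple decision procedure on the two radiation-length profiles of a volume. The sketch below is one possible encoding, not the script used to produce the PDFs: the 1% threshold comes from the text, while the thresholds for a "negligible" absolute difference (in radiation lengths) and for a "widespread" difference (fraction of eta bins) are hypothetical placeholders.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Colour codes used in the AgML vs AgSTAR comparison.
enum class Status { Green, Yellow, Orange, Red };

// Classify one volume from its radiation-length profiles (one value per eta
// bin, averaged over phi). The 1% threshold comes from the text; the
// "negligible" and "widespread" thresholds are hypothetical placeholders.
Status classifyVolume(const std::vector<double>& agml,
                      const std::vector<double>& agstar,
                      bool presentInAgstar,
                      double negligibleRadl = 1e-4,    // hypothetical
                      double widespreadFraction = 0.5) // hypothetical
{
    if (!presentInAgstar) return Status::Yellow;
    assert(!agml.empty() && agml.size() == agstar.size());

    std::size_t binsOver = 0;   // bins whose fractional difference exceeds 1%
    double maxAbsDiff = 0.0;    // largest absolute difference among those bins
    for (std::size_t i = 0; i < agml.size(); ++i) {
        const double absDiff = std::fabs(agml[i] - agstar[i]);
        const double ref = std::fabs(agstar[i]);
        const bool over = (ref > 0.0) ? (absDiff / ref > 0.01) : (absDiff > 0.0);
        if (over) {
            ++binsOver;
            if (absDiff > maxAbsDiff) maxAbsDiff = absDiff;
        }
    }
    if (binsOver == 0) return Status::Green;

    const bool negligible = maxAbsDiff < negligibleRadl;
    const bool widespread =
        static_cast<double>(binsOver) / static_cast<double>(agml.size()) > widespreadFraction;
    if (negligible && !widespread) return Status::Orange;
    return Status::Red;   // significant material, or negligible but widespread
}
```

Keeping the two thresholds as parameters makes it easy to see how sensitive the Orange/Red split is to what one calls "negligible".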

<?php
/* ====================================================== START OF PHP */

/* Helper function to show the status_<tag>.png summary image */
function showImage( $tag, $dir ) {
    echo "<img src=\"{$dir}status_{$tag}.png\" alt=\"Status of geometry tag {$tag}\" />";
}

/* Helper function to embed the per-tag PDF via the Google Docs viewer */
function showGoogle( $tag, $dir ) {
    echo "<iframe frameborder=\"0\" style=\"width: 562px; height: 705px;\" "
       . "src=\"http://docs.google.com/gview?url={$dir}{$tag}.pdf&embedded=true\"></iframe>";
}

/* Date of the comparison. $DIR ends with "/", so file names are appended directly. */
$YEAR = "2011";
$DATE = "06-15-2011";
$DIR  = "http://www.star.bnl.gov/~jwebb/".$YEAR."/".$DATE."/AgML-Comparison/";
$TAGS = $DIR."TAGS";

/* Output header for this page */
echo "<h3>STAR AgML vs AgSTAR Comparison on ".$DATE."</h3>";

/* Read the TAGS file, one geometry tag per line */
$handle = @fopen($TAGS, "r");
if ($handle) {
    while (($buffer = fgets($handle, 4096)) !== false) {
        $buffer = trim($buffer);      /* strip whitespace and the newline */
        if ($buffer === "") {
            continue;                 /* skip blank lines */
        }
        /* Horizontal rule and the name of the geometry tag */
        echo "<hr><p>STAR Geometry Tag $buffer</p>";
        /* Status PNG first, then the summary PDF via Google Docs */
        showImage( $buffer, $DIR );
        showGoogle( $buffer, $DIR );
    }
    if (!feof($handle)) {
        echo "Error: unexpected fgets() fail\n";
    }
    fclose($handle);
}

/* ======================================================== END OF PHP */
?>

STAR AgML Language Reference

STAR Geometry Page

R&D Tags

The R&D for the inner tracking upgrade required the creation of a few specialized geometry tags. For a complete set of geometry tags, please visit the STAR Geometry in simulation & reconstruction page. The information below provides additional documentation and details.

Taxonomy:

- SSD: Silicon Strip Detector
- IST: Inner Silicon Tracker
- HFT: Heavy Flavor Tracker
- IGT: Inner GEM Tracker
- HPD: Hybrid Pixel Detector

The TPC is present in all configurations listed below, and the SVT in none.

|

Tag |

SSD | IST | HFT | IGT | HPD | Contact Person | Comment | |

|---|---|---|---|---|---|---|---|---|

|

UPGR01 |

+ |

|

+ |

|

||||

|

UPGR02 |

|

+ |

+ |

|

||||

|

UPGR03 |

|

+ |

+ |

+ |

|

|||

|

|

+ |

|

|

+ |

Sevil |

retired | ||

|

|

+ |

+ |

+ |

+ |

+ |

Everybody |

retired | |

|

|

+ |

+ |

+ |

Sevil |

retired | |||

|

UPGR07 |

+ |

+ |

+ |

+ |

|

Maxim |

||

|

|

+ |

+ |

+ |

+ |

Maxim |

|||

|

|

+ |

+ |

+ |

Gerrit |

retired Outer IST layer only | |||

|

UPGR10 |

+ |

+ |

+ |

Gerrit |

Inner IST@9.5cm | |||

|

UPGR11 |

+ |

+ |

+ |

|

Gerrit |

IST @9.5&@17.0 | ||

|

|

+ |

+ |

+ |

+ |

+ |

Ross Corliss |

retired UPGR05*diff.igt.radii | |

|

UPGR13 |

+ |

+ |

+ |

+ |

Gerrit |

UPGR07*(new 6 disk FGT)*corrected SSD*(no West Cone) | ||

| UPGR14 | + | + | + | Gerrit | UPGR13 - IST | |||

| UPGR15 | + | + | + | Gerrit | Simple Geometry for testing, Single IST@14cm, hermetic/polygon Pixel/IST geometry. Only inner beam pipe 0.5mm Be. Pixel 300um Si, IST 1236umSi | |||

| UPGR20 | + | Lijuan | Y2007 + one TOF | |||||

| UPGR21 | + | Lijuan | UPGR20 + full TOF |

Eta coverage of the SSD and HFT at different vertex spreads:

| Z cut, cm | eta SSD | eta HFT |
|---|---|---|
| 5 | 1.63 | 2.00 |
| 10 | 1.72 | 2.10 |
| 20 | 1.87 | 2.30 |
| 30 | 2.00 | 2.55 |
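The growth of eta coverage with the Z cut follows from simple geometry. For an idealized barrel layer of radius R and half-length L centred at z = 0, a straight track from a vertex at z_v that just reaches the +z end of the layer has cot(theta) = (L - z_v)/R, and since sinh(eta) = cot(theta), eta = asinh((L - z_v)/R). A vertex at z_v = -Zcut therefore reaches the largest eta. The sketch below assumes this single-cylinder model and reads "coverage" as the largest eta reachable. It is an illustration, not the calculation behind the table, and any R and L you plug in are your own assumptions.

```cpp
#include <cassert>
#include <cmath>

// Pseudorapidity of a straight track from a vertex at z = zVertex that hits
// the +z end of an idealised barrel layer (radius r, half-length halfLength,
// centred at z = 0). Uses sinh(eta) = cot(theta) = (halfLength - zVertex) / r.
double etaAtLayerEdge(double r, double halfLength, double zVertex) {
    assert(r > 0.0);
    return std::asinh((halfLength - zVertex) / r);
}

// Largest eta reachable when vertices are accepted within |z| < zCut: the
// vertex at z = -zCut sees the +z end of the layer at the smallest angle.
double maxEtaCoverage(double r, double halfLength, double zCut) {
    return etaAtLayerEdge(r, halfLength, -zCut);
}
```

Because asinh is monotonic, maxEtaCoverage grows with zCut for any fixed layer, which is the trend seen in the table.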

Material balance studies for the upgrade: presented below are the usual radiation length plots (as a function of rapidity).

Full UPGR05:

Forward region: the FST and the IGT ONLY:

Below, we plot the material for each individual detector, excluding the forward region to reduce ambiguity.

SSD:

IST:

HPD:

HFT:

Event Filtering

The attached PDF describes event filtering in the STAR framework.

Event Generators

Event Generator Framework

- StarPrimaryMaker -- Main steering class for event generation

- StarGenerator -- Base class (abstract) for interface to event generators

- StarGenEvent -- Base class for event records

- StarGenPPEvent -- Implementation of a generic PP event record

- StarGenEPEvent -- Implementation of a generic EP event record

- StarGenAAEvent -- Implementation of a generic AA event record

- StarGenEAEvent -- Future implementation of a generic EA event record

- StarGenParticle -- Representation of a particle in the STAR event record

- StarParticleData -- "Database" class holding particle data used in simulation

$ cvs co StRoot/StarGenerator/macros

- starsim.pythia6.C

- starsim.pythia8.C

- starsim.hijing.C

- starsim.herwig.C

- starsim.pepsi.C

- starsim.starlight.C

- starsim.kinematics.C

$ ln -s StRoot/StarGenerator/macros/starsim.pythia8.C starsim.C

$ root4star -q -b starsim.C\(100\)

This will generate two files: a standard "fzd" file, which can be reconstructed using the big "full" chain (bfc.C), and a ROOT file containing a TTree that holds the event record for the generated events.

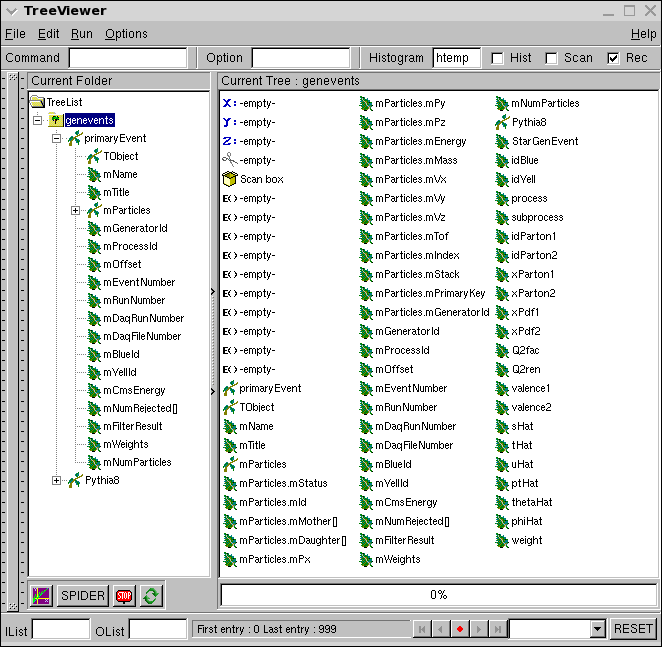

The new Event Record

The event-wise and particle-wise information from the event generators is saved in a ROOT TTree. The TTree can be read in sync with the MuDst when you perform your analysis. The ID truth values of the reconstructed tracks in the MuDst can be compared to the primary keys of the tracks in the event record to identify generator tracks that were reconstructed by the tracker.
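The matching described above amounts to a lookup from each track's ID truth value to the event-record entry with the same primary key. The sketch below uses hypothetical, simplified structs in place of StarGenParticle and the MuDst track classes, and treats idTruth = 0 as "no unambiguous match", following the ID truth conventions described earlier on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical, simplified stand-ins for an event-record particle and a
// reconstructed track; the real classes carry much more information.
struct GenParticle {
    int primaryKey;  // key of the particle in the generator event record
    int pdgId;
};

struct RecoTrack {
    int idTruth;     // key of the dominant simulated particle (0 = none/ambiguous)
};

// For each reconstructed track, return the index of the matching generator
// particle in `particles`, or -1 if there is no match.
std::vector<long> matchTracks(const std::vector<GenParticle>& particles,
                              const std::vector<RecoTrack>& tracks) {
    std::unordered_map<int, long> byKey;
    for (std::size_t i = 0; i < particles.size(); ++i) {
        const bool inserted =
            byKey.emplace(particles[i].primaryKey, static_cast<long>(i)).second;
        assert(inserted && "primary keys must be unique");
        (void)inserted;
    }

    std::vector<long> result;
    result.reserve(tracks.size());
    for (const RecoTrack& t : tracks) {
        auto it = (t.idTruth != 0) ? byKey.find(t.idTruth) : byKey.end();
        result.push_back(it != byKey.end() ? it->second : -1);
    }
    return result;
}
```

A hash map keeps the lookup constant-time per track, which matters when matching every track in large heavy-ion events.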

The event record can be browsed using the standard ROOT TTree viewer. Example for Pythia 8:

root [0] TFile::Open("pythia8.starsim.root")

root [1] genevents->StartViewer()

root [2] genevents->Draw("mMass","mStatus>0")

The event record contains both particle-wise and event-wise information. For the definitions of different quantities, see the documentation provided in the StarGenEvent links above.

Adding a new Generator

Event generators are responsible for creating the final-state particles which are fed to GEANT for simulation. They can be as simple as particle guns, shooting individual particles along well-defined trajectories, or as complex as hydrodynamical models of heavy-ion collisions. Regardless of the complexity of the underlying physical model, the job of an event generator in the STAR framework is to add particles to an event record. In this document we describe the steps needed to add a new event generator to the STAR framework. The document is divided into three sections: (1) an overview, presenting the general steps which are required; (2) a FORtran-specific HOWTO, providing guidance specific to the problem of interfacing FORtran with a C++ application; and (3) a description of the STAR event record.

Contents:

1.0 Integrating Event Generators

2.0 Integrating FORtran Event Generators

3.0 The STAR Event Record

1.0 Integrating Event Generators

The STAR Event Generator Framework implements several C++ classes which facilitate the integration of FORtran and C++ event generators with the STAR simulation code. The code is available in the CVS repository and can be checked out as

$ cvs co StRoot/StarGenerator

After checking out the generator area, you will note that the code is organized into several directories, containing both CORE packages and concrete event generators. Specifically:

StarGenerator/BASE -- contains the classes implementing the STAR interface to event generators

StarGenerator/EVENT -- contains the classes implementing the STAR event record

StarGenerator/UTIL -- contains random number generator base class and particle data

StarGenerator/TEST -- contains test makers used for validating the event generators

The concrete event generators (at the time this document was assembled) include

StarGenerator/Hijing1_383

StarGenerator/Pepsi

StarGenerator/Pythia6_4_23

StarGenerator/Pythia8_1_62

1.1 Compiling your Generator

Your first task in integrating a new event generator is to create a directory for it under StarGenerator and get your code to compile. Select a directory name which includes the name and version of your event generator. It should also be CamelCased, e.g. MyGenerator1_2_3. (Do not select a name which is ALL CAPS, as this has a special meaning for compilation.) Once you have your directory, you can begin moving your source files into the build area. In general, we would like to minimize the number of edits needed to make the source code compile, but you may find that you need to reorganize the directory structure of your code to get it to compile under cons. (In particular, if your FORtran source files end in ".f" you will need to rename them to ".F", and if your C++ files end in ".cpp" or ".cc", you may need to rename them to ".cxx".)
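The naming and renaming rules above are mechanical enough to write down. The hypothetical helpers below only illustrate those rules; they are not part of cons or of the STAR build system.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// True if the name contains at least one letter and no lowercase letters,
// i.e. the "ALL CAPS" style to avoid for generator directory names.
bool isAllCaps(const std::string& name) {
    bool hasLetter = false;
    for (unsigned char c : name) {
        if (std::islower(c)) return false;
        if (std::isalpha(c)) hasLetter = true;
    }
    return hasLetter;
}

// Suggested file name under the renaming rules above:
// ".f" -> ".F" (FORtran), ".cpp" or ".cc" -> ".cxx" (C++); others unchanged.
std::string suggestedSourceName(const std::string& file) {
    auto endsWith = [&file](const std::string& suffix) {
        return file.size() >= suffix.size() &&
               file.compare(file.size() - suffix.size(), suffix.size(), suffix) == 0;
    };
    if (endsWith(".f"))   return file.substr(0, file.size() - 2) + ".F";
    if (endsWith(".cpp")) return file.substr(0, file.size() - 4) + ".cxx";
    if (endsWith(".cc"))  return file.substr(0, file.size() - 3) + ".cxx";
    return file;
}
```

For example, suggestedSourceName("pythia.f") returns "pythia.F", and isAllCaps("MyGenerator1_2_3") is false.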

1.2 Creating your Interface

Ok. So the code compiles. Now we need to interface the event generation machinery with the STAR framework. This entails several things. First, we need to expose the configuration of the event generator so that the end user can generate the desired event sample. We must then initialize the concrete event generator at the start of the run, and then exercise the event generation machinery on each and every event. Finally, we need to loop over all of the particles which were created by the event generator and push them onto the event record so that they are persistent (i.e. the full event can be analyzed at a later date) and so that the particles are made available to the Monte Carlo application for simulation.

The base class for all event generator interfaces is StarGenerator.

Taking a quick look at the code, we see that there are several "standard" methods defined for configuring an event generator:

- SetBlue( ... ) -- Sets the blue beam particle, e.g. proton

- SetYell( ... ) -- Sets the yellow beam particle, e.g. Au

- SetFrame( ... ) -- Sets the reference frame, e.g. CMS

If your event generator needs additional configuration, you should implement additional methods to expose it.

The two methods which StarGenerator requires you to implement are Init() and Generate(). They are called at the start of each run and on each event, respectively.

Init() is responsible for initializing the event generator. In this method, you should pass any of the configuration information on to your concrete event generator. This may be through calls to subroutines in your event generator, or by setting values in common blocks. However this is done, this is the place to do it.

Generate() will be called on every single event during the run. This is where you should exercise the generation machinery of your event generator. Every event generator handles this differently, so you will need to consult your manual to figure out the details.

Once Generate() has been called, you are ready to fill the event record. The event record consists of two parts: (1) the particle record, and (2) the event-wise information describing the physical interaction being simulated. At a minimum, you will need to fill the particle-wise information. For more details, see The STAR Event Record below.
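The contract described above (configure, call Init() once per run, call Generate() once per event, then fill the particle record) can be sketched with stand-alone classes. GeneratorSketch, ToyGun and Particle below are hypothetical simplifications, not the real StarGenerator or StarGenEvent classes, and the return-code convention (0 = success) is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <string>
#include <vector>

// Hypothetical particle entry: PDG code and four-momentum (GeV).
struct Particle {
    int id;
    double px, py, pz, e;
};

// Hypothetical base class mirroring the Init()/Generate() contract.
class GeneratorSketch {
public:
    virtual ~GeneratorSketch() = default;
    void SetBlue(const std::string& p) { blue_ = p; }
    void SetYell(const std::string& p) { yell_ = p; }
    void SetFrame(const std::string& f, double energy) { frame_ = f; energy_ = energy; }
    virtual int Init() = 0;       // called at the start of each run
    virtual int Generate() = 0;   // called once per event
    const std::vector<Particle>& Record() const { return record_; }
protected:
    std::string blue_, yell_, frame_;
    double energy_ = 0.0;
    std::vector<Particle> record_;   // particle-wise part of the event record
};

// A toy "particle gun": fires one pi+ along +x with 1 GeV momentum.
class ToyGun : public GeneratorSketch {
public:
    int Init() override {
        // A real interface would pass blue_/yell_/frame_/energy_ on to the
        // concrete generator here (subroutine calls or common blocks).
        initialised_ = true;
        return 0;
    }
    int Generate() override {
        if (!initialised_) return 1;   // refuse to run before Init()
        record_.clear();               // new record for every event
        const double p = 1.0, m = 0.13957;   // GeV; charged pion mass
        record_.push_back({211, p, 0.0, 0.0, std::sqrt(p * p + m * m)});
        return 0;
    }
private:
    bool initialised_ = false;
};
```

A real interface would replace the body of Generate() with calls into the concrete event generator, followed by a loop that copies each of its particles into the event record.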

2.0 Integrating FORtran Event Generators

Interfacing a FORtran event generator with ROOT involves three steps:

1. Interface the event generator's common blocks (at least the ones which we need to use) to C++

2. Map those common blocks onto C++ structures

3. Expose the C++ structures representing the common blocks to ROOT so that users may modify / access their contents

Let's look at the pythia event generator for a concrete example.

If you examine the code in StRoot/StarGenerator/Pythia6_4_23/ there is a FORtran file named address.F. Open that up in your favorite editor and have a look... You'll see several functions defined. The first one is address_of_pyjets. In it we declare the PYJETS common block, essentially just cutting and pasting the declaration from the pythia source code in the same directory.

Next we need to describe the memory layout to C++. This is done in the file Pythia6.h. Each common block set up in address.F has a corresponding structure defined in this header file. So, let's take a look at the setup for the PyJets common block:

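First, notice the first line, which calls the C-preprocessor macro "F77_NAME". This macro handles, in a portable way, the different conventions FORtran and C++ compilers use for naming external symbols (for example, whether a trailing underscore is appended), so that the object code produced by the two compilers links together.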
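To illustrate what such a macro does, here is a hypothetical F77_NAME for the convention common on Linux FORtran compilers (g77, gfortran), which lowercase the name and append a single trailing underscore. STAR's actual definition is not reproduced here and may handle other conventions. To keep the sketch self-contained, the FORtran side (address.F) is replaced by a C++ stand-in function with the same external symbol; DemoCommon_t, address_of_demo and the toy common block are hypothetical, and plain int/float stand in for ROOT's Int_t/Float_t.

```cpp
#include <cassert>

// Hypothetical F77_NAME: gfortran-style mangling, lowercase name + "_".
// A portable definition would choose case and suffix per compiler.
#define F77_NAME(lower, UPPER) lower##_

// C layout of a toy common block:  INTEGER N / REAL X(3) / COMMON/DEMO/N,X
struct DemoCommon_t {
  int n;
  float x[3];
};

// Stand-in for the FORtran routine returning the common block's address.
// In a real build this body lives in the FORtran file, and only the
// declaration appears on the C++ side.
static DemoCommon_t demoStorage = {0, {0.f, 0.f, 0.f}};
extern "C" DemoCommon_t *F77_NAME(address_of_demo, ADDRESS_OF_DEMO)() {
  return &demoStorage;
}

// C++ convenience accessor; the call expands to address_of_demo_().
inline DemoCommon_t &DemoCommon() {
  DemoCommon_t *p = F77_NAME(address_of_demo, ADDRESS_OF_DEMO)();
  assert(p != nullptr);
  return *p;
}
```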

Next, let's discuss "memory layout". In this section of the code we map the FORtran memory onto a C-structure. Every variable in the common block should be declared in the same order in the C struct as it was declared in FORtran, and with the corresponding C data type. These are:

- INTEGER --> Int_t
- REAL --> Float_t
- REAL*4 --> Float_t
- REAL*8 --> Double_t
- DOUBLE PRECISION --> Double_t

You probably noticed two differences in the way we have declared the arrays. First, every array name begins with an underscore ("_"). This was a choice on my part, which I will explain in a moment. The important thing to notice right now is that the indices of the arrays are reversed compared to their declaration in FORtran: "INTEGER K(4000,5)" in FORtran becomes "Int_t _k[5][4000]" in C++. The reason is that the two languages lay arrays out differently in memory: FORtran stores them in column-major order (the first index varies fastest), while C++ stores them in row-major order (the last index varies fastest). It is important to keep these differences in mind when mapping the memory of a FORtran common block:

1) The indices in the arrays will always be reversed between FORtran and C -- A(10,20,30) in FORtran becomes A[30][20][10] in C.

2) FORtran indices (by default) start from 1, C++ (always) from 0 -- i.e. B(1) in FORtran would be B[0] in C.

3) FORtran indices may start from any value. An array declared as D(-10:10) would be declared in C as D[21], and D(-10) in FORtran is D[0] in C.
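The three rules can be checked numerically. The sketch below computes where FORtran places K(i,j) in memory (column-major, 1-based) and compares it with the position of the reversed, 0-based C element; it also shows the offset needed for a non-default lower bound such as D(-10:10). The struct and function names (KView, DView, FortranOffset) are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Element offset of K(i,j) in a FORtran array declared K(N1,N2):
// 1-based and column-major, so the FIRST index varies fastest.
std::size_t FortranOffset(int i, int j, int n1) {
  return static_cast<std::size_t>((i - 1) + (j - 1) * n1);
}

// C view of "INTEGER K(4000,5)": dimensions reversed, indices 0-based.
struct KView {
  int _k[5][4000];
  // Element offset of _k[r][c] from the start of the array.
  std::size_t Offset(int r, int c) const {
    const char *base = reinterpret_cast<const char *>(&_k[0][0]);
    const char *elem = reinterpret_cast<const char *>(&_k[r][c]);
    return static_cast<std::size_t>(elem - base) / sizeof(int);
  }
};

// "DOUBLE PRECISION D(-10:10)" has 21 elements; D(i) lives at _d[i + 10].
struct DView {
  double _d[21];
  double &D(int i) {
    assert(i >= -10 && i <= 10);
    return _d[i + 10];
  }
};
```

For example, K(1,2) and _k[1][0] both sit 4000 elements from the start of the array, and the last element, K(4000,5) or _k[4][3999], sits at offset 19999.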

What about the underscore?

We need to make some design choices at this point. Specifically, how do we expose the common blocks to the end user? Do we want the end user to deal with the differences between C++ and FORtran, or do we want to provide a mechanism by which the FORtran behavior (i.e. count from 1, preserve the order of indices) can be emulated?

My preference is to do the latter: provide the end user with functions which emulate the behavior of the FORtran arrays, because these arrays are what is documented in the event generator's manual. This minimizes the likelihood that the end user will make mistakes in configuring the event generator.
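One way to provide such functions is to give the C struct accessor methods that take 1-based indices in FORtran order and do the translation internally. The sketch assumes Pythia 6.4's declaration COMMON/PYJETS/N,NPAD,K(4000,5),P(4000,5),V(4000,5), with P and V in double precision; the struct name PyJetsSketch_t and its accessor names are hypothetical and are not a copy of STAR's Pythia6.h.

```cpp
#include <cassert>

// Assumed C layout of Pythia 6.4's PYJETS common block.
// Array dimensions are reversed relative to the FORtran declaration.
struct PyJetsSketch_t {
  int n;               // number of entries in the event record
  int npad;            // dummy, keeps the double arrays 8-byte aligned
  int _k[5][4000];     // K(I,1..5): status, KF code, parent, daughters
  double _p[5][4000];  // P(I,1..5): px, py, pz, E, mass
  double _v[5][4000];  // V(I,1..5): production vertex x, y, z, t, lifetime

  // FORtran-style accessors: 1-based indices, FORtran index order.
  int &K(int i, int j) {
    assert(i >= 1 && i <= 4000 && j >= 1 && j <= 5);
    return _k[j - 1][i - 1];
  }
  double &P(int i, int j) {
    assert(i >= 1 && i <= 4000 && j >= 1 && j <= 5);
    return _p[j - 1][i - 1];
  }
  double &V(int i, int j) {
    assert(i >= 1 && i <= 4000 && j >= 1 && j <= 5);
    return _v[j - 1][i - 1];
  }
};
```

With this approach a user writes K(1,2) exactly as in the Pythia manual, while the underscored arrays remain available for code that wants raw C indexing.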