HPSS MDC, Run 17

Updated on Fri, 2016-12-16 15:06. Originally created by jeromel on 2016-12-15 15:34.

Following a reshape of networking in summer 2016 (new switches, dual ath using LACP at the end of July) and the introduction of LTO7 technlogy, yet a new Mock Data Sync is needed. This blog documents the test we do everytime we change configuration. The previous one is available at HPSS MDC, Run 16.

The exact file size 5000086528 (example below)

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00259

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00169

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00039

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00194

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00037

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00172

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00032

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00204

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00046

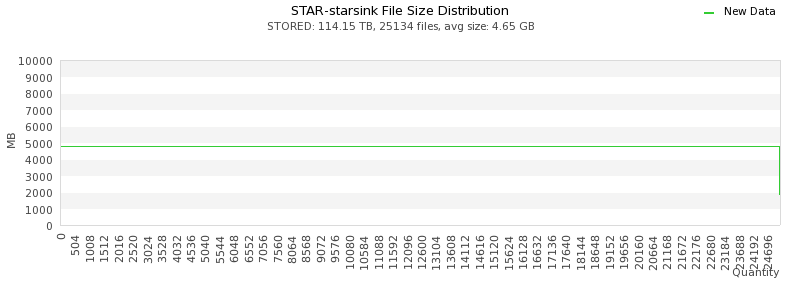

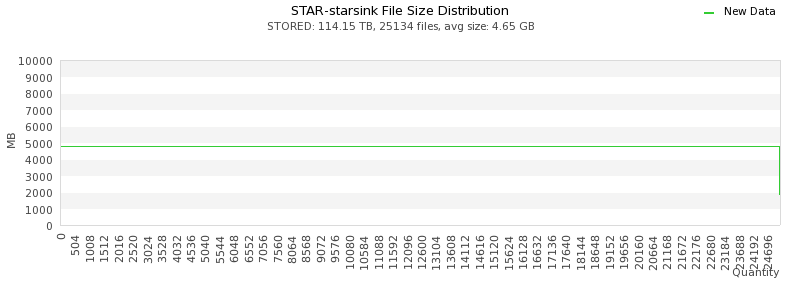

leading to a monitored average file size as follows

Lucklily, 5000086528 == 4.66 GB (or 4.65 if truncated to 2 digits).

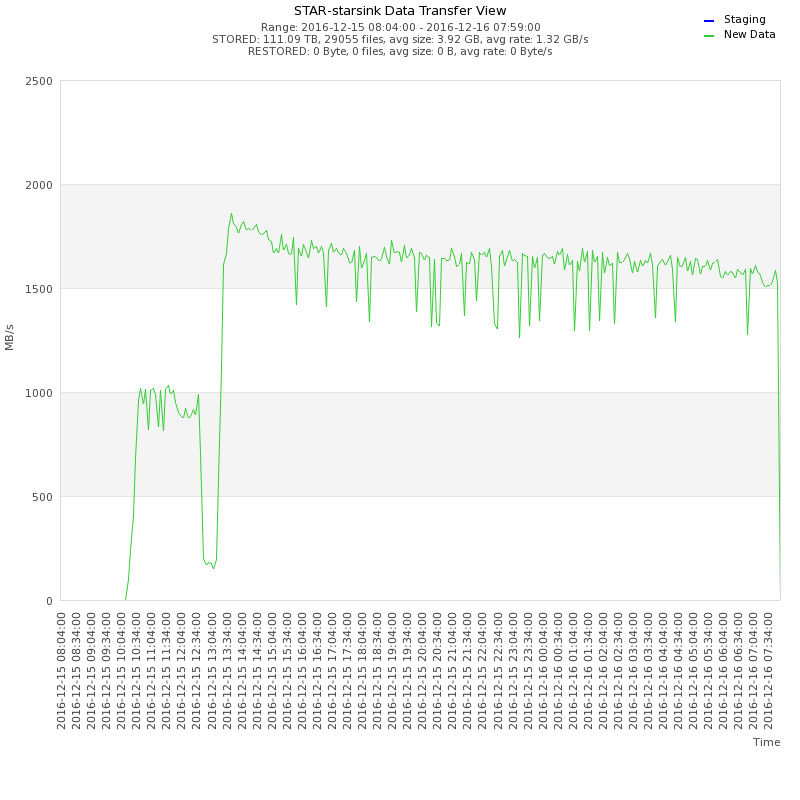

Summing the averages, we get 1741.6 M ... to relate to the data sync speed frm the other side (HPSS) showing consistency with a speed of 1.7-ish GB/sec.

A note that the first portion of the above graph (a lower data transfer rate) was caused by the fact that we found that the LACP hashing leaded to an assymetrical data transfer balance between links. This was fixed and the test restarted.

The network topology was modified to a Layer3/4 structure (connection to BNL's "HPC Core" infrastructure).

Clockwise, 3.51+3.46+3.47+3.52 = 13.96 Gb/sec == 1.75 GB/sec. Consistent again and in addition, we are balanced acrossed links (in average).

More interresting is this graph

We can achieve ~ 203 MB/sec / drive write speed.

For the reads, I got a list of files created during the write, submitted all to the DataCarousel and let it restore on a single partition.

An initial measurement showed 307 MB/sec read speed which may be over-optimistic as a grand average. More results will be provided later as the test ends and more data over a wider time range is gathered.

Thoughts: This speed raises concerns - the impedence missmatch is on the user-end file system that is, we can restore from HPSS tape to HPSS cache fasterthan we can take th files out of HPSS cache to live storage. This may create a situation where the HPSS cache gets full (because the "consumption" of files from cache to live drive missmatch by a large amount), then flush and each subsequent files restored by the Carousel call-back forces second tape mount. The conclusion is that LTO7 would be practical nadbeneficial for distributed data production (CRS) and distributed data restore in Xrootd (SE cardinality is high) but not suitable for single-user restores - improvement and more intelligence to the Carousel to detect this condition will be needed.

Following a reshape of the networking in summer 2016 (new switches, dual path using LACP at the end of July) and the introduction of LTO7 technology, yet another Mock Data Sync is needed. This blog documents the test we run every time we change the configuration. The previous one is available at HPSS MDC, Run 16.

Data sync to HPSS

Basics

The test was started around noon on Dec 15th and continued until mid-morning on the 16th. All 10 EVB transferred data. The exact file size was 5000086528 bytes (example below)

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00259

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00169

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00039

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00194

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00037

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00172

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00032

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00204

-rw-r--r-- 1 starreco rhstar 5000086528 Dec 16 13:49 test.daq_00046

leading to the monitored average file size shown below.

Luckily, 5000086528 bytes == 4.66 GB (or 4.65 if truncated to 2 digits), taking 1 GB as 2^30 bytes.
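The conversion above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the function name is made up for illustration, and it assumes the monitoring reports binary units (2^30 bytes per GB), which is the only reading under which 4.66 comes out.

```python
# Exact file size in bytes, taken from the listing above.
FILE_SIZE_BYTES = 5000086528


def bytes_to_gib(n_bytes: int) -> float:
    """Convert bytes to binary gigabytes (2**30 bytes each)."""
    return n_bytes / 2**30


size_gib = bytes_to_gib(FILE_SIZE_BYTES)
rounded = round(size_gib, 2)          # rounded to 2 digits
truncated = int(size_gib * 100) / 100  # truncated to 2 digits

print(f"rounded={rounded} truncated={truncated}")
```

In decimal units (10^9 bytes per GB) the same file would read as 5.00 GB, so the unit convention matters when comparing against a monitoring plot.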

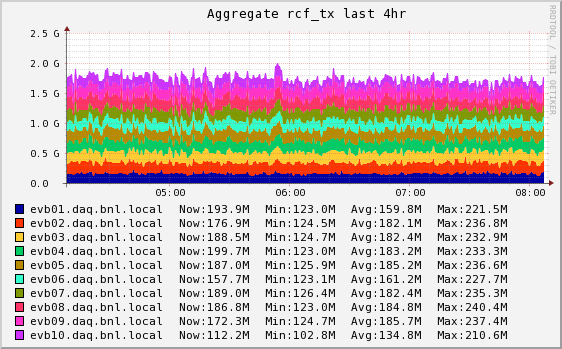

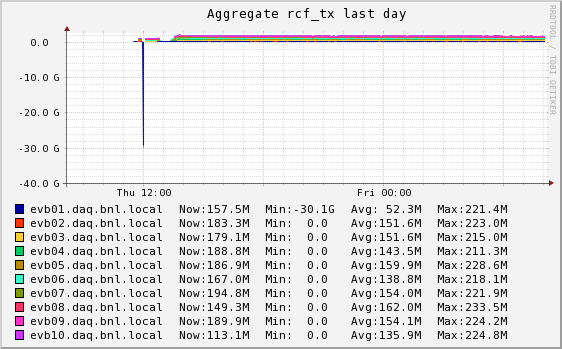

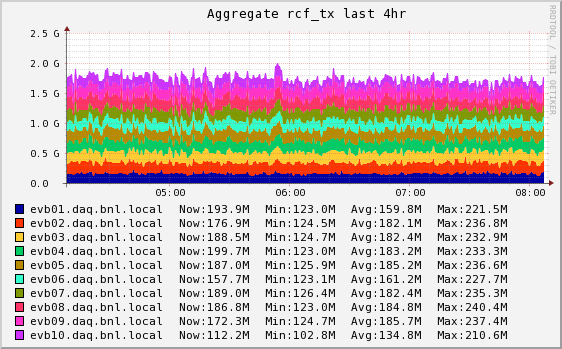

EVB to HPSS

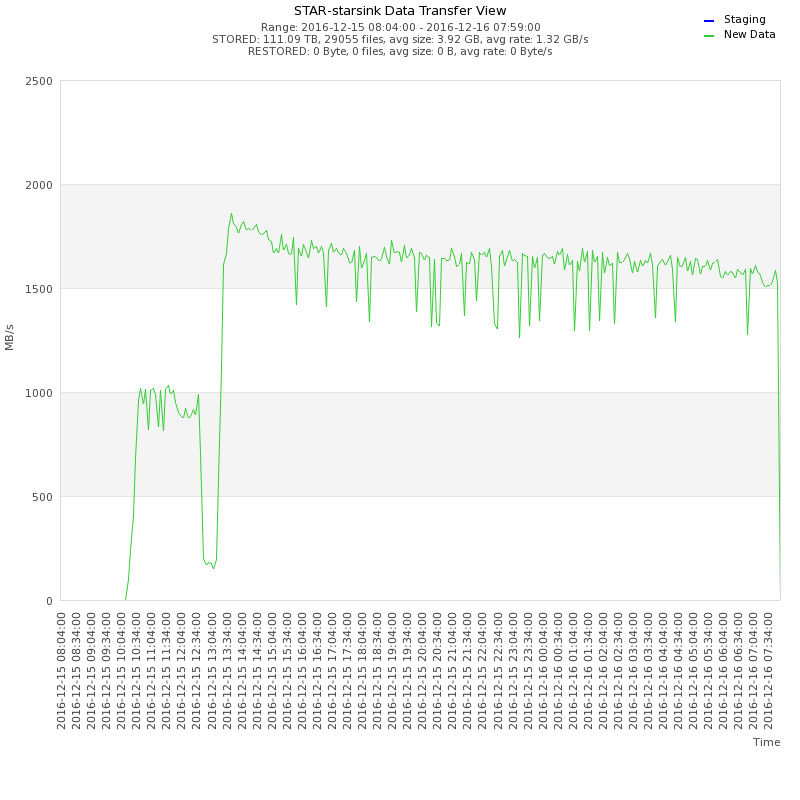

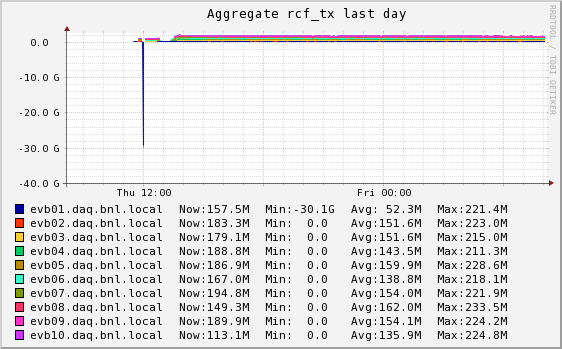

An aggregate Ganglia metric was created to measure the transfer speed from the CH to HPSS. The metric is a custom one, targeted at the interface connected to the switch on the way to HPSS. Below is the aggregate plot.

Summing the averages, we get 1741.6 M ... to be compared with the data sync speed seen from the other side (HPSS), which is consistent at a speed of about 1.7 GB/sec.

Note that the first portion of the above graph (a lower data transfer rate) was caused by the LACP hashing, which we found led to an asymmetrical data transfer balance between links. This was fixed and the test restarted.
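To illustrate why hashing can unbalance aggregated links: LACP-style link aggregation keeps every packet of a given flow on one link, chosen by hashing some header fields. With only a handful of flows, nothing guarantees an even spread. The sketch below is purely illustrative: the CRC32 hash, the host names and the choice of four links are assumptions made for the example, not the actual switch configuration or algorithm.

```python
import zlib
from collections import Counter


def pick_link(src: str, dst: str, n_links: int) -> int:
    """Map a flow to a link index by hashing its endpoints.

    CRC32 stands in for whatever hash the real switch uses (assumption).
    """
    key = f"{src}->{dst}".encode()
    return zlib.crc32(key) % n_links


def flows_per_link(flows, n_links: int):
    """Count how many flows land on each link."""
    counts = Counter(pick_link(src, dst, n_links) for src, dst in flows)
    return [counts.get(i, 0) for i in range(n_links)]


# Hypothetical flows: 10 senders (one per EVB) to a single made-up destination.
flows = [(f"evb{i:02d}", "hpss-mover") for i in range(1, 11)]
counts = flows_per_link(flows, n_links=4)
print(counts)  # the spread depends entirely on the hash and the flow names
```

Changing which fields enter the hash (for example adding port numbers) changes the distribution, which is one common way such an imbalance gets addressed.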

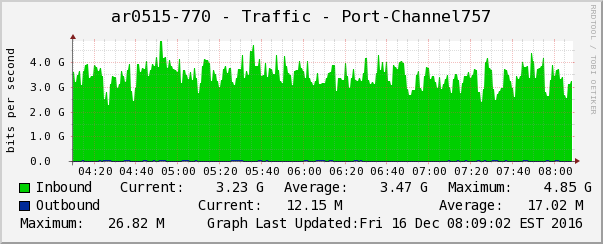

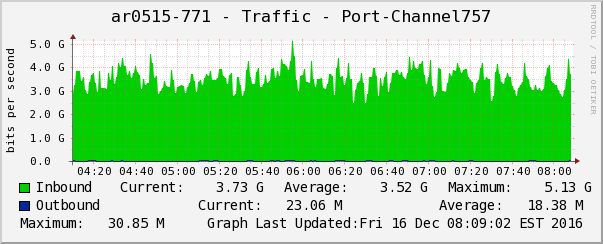

Network speed consistency - link check

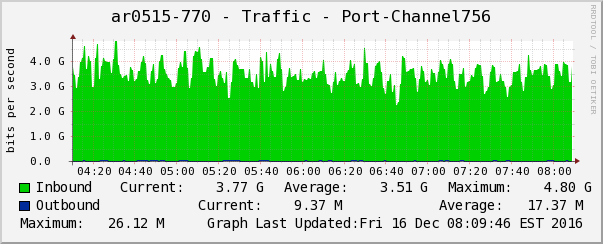

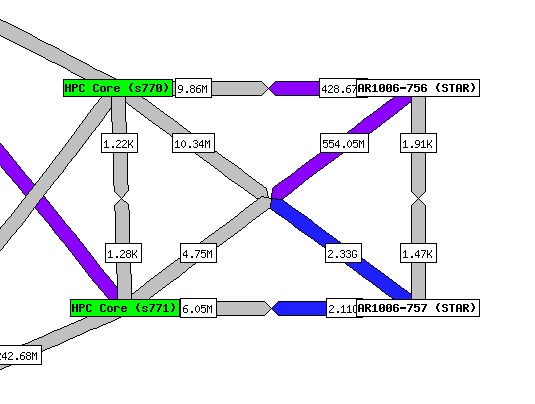

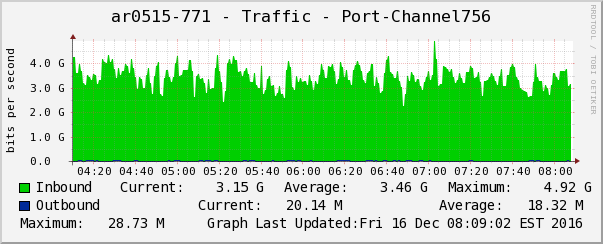

The network topology was modified to a Layer3/4 structure (connection to BNL's "HPC Core" infrastructure).


Clockwise, 3.51+3.46+3.47+3.52 = 13.96 Gb/sec == 1.75 GB/sec. This is consistent again and, in addition, we are balanced across links (on average).

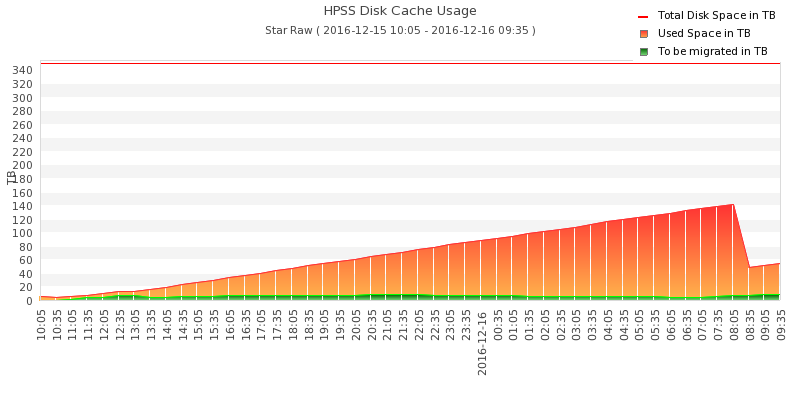

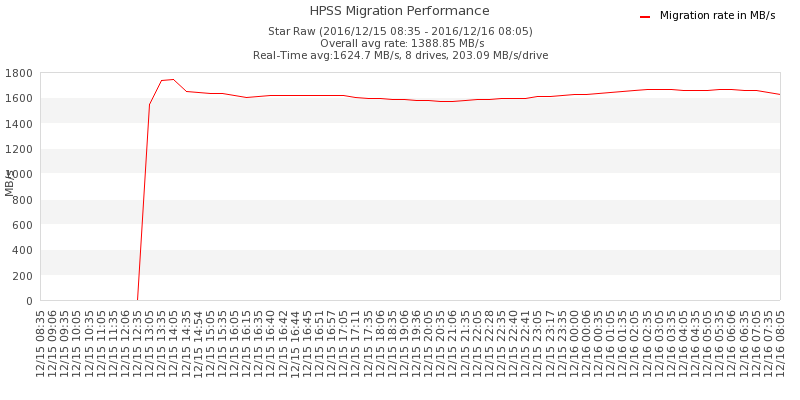

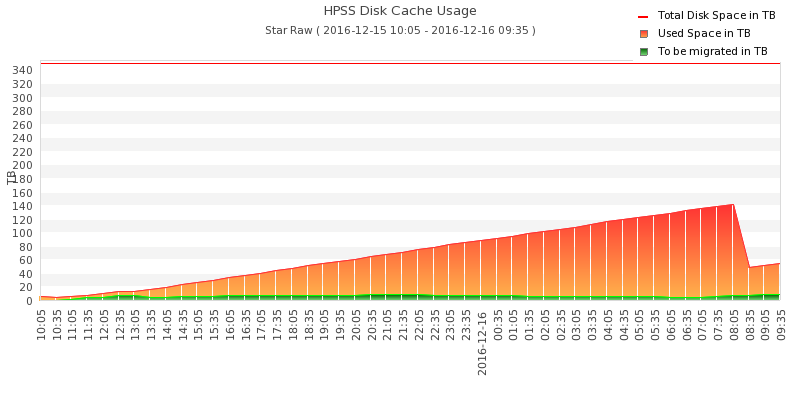

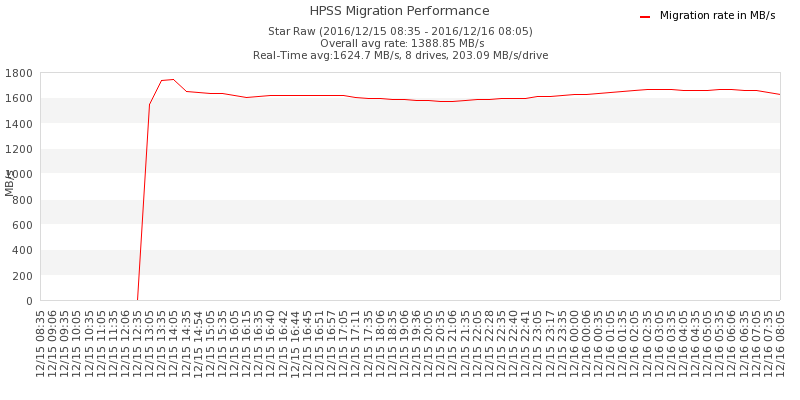
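The per-link sum and the balance claim can be reproduced as follows. This is a small sketch using the four link rates quoted above; the variable names are for illustration only, and 8 bits per byte is the only conversion assumed.

```python
# Per-link average rates in Gb/sec, read clockwise from the plots.
link_rates_gbps = [3.51, 3.46, 3.47, 3.52]

total_gbps = sum(link_rates_gbps)        # aggregate in gigabits per second
total_gbytes_per_s = total_gbps / 8      # 8 bits per byte

# Balance check: largest deviation of any link from the mean, in percent.
mean_gbps = total_gbps / len(link_rates_gbps)
max_dev_pct = max(abs(r - mean_gbps) for r in link_rates_gbps) / mean_gbps * 100

print(f"total: {total_gbps:.2f} Gb/sec = {total_gbytes_per_s:.3f} GB/sec")
print(f"max deviation from mean: {max_dev_pct:.2f}%")
```

With these numbers every link stays within 1% of the mean, which is what "balanced on average" amounts to here.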

Cache to tape migration speed

The HPSS cache was flushed so that the migration speed could be measured cleanly. The flush is visible as the usual dent shape in the cache size.

More interesting is this graph

We can achieve a write speed of ~203 MB/sec per drive.
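As a rough back-of-the-envelope check, one can ask how many drives writing at this speed would be needed to keep up with the network rate measured earlier, and how long one test file takes to write. The sketch below assumes decimal units (1 MB = 10^6 bytes) and ignores mount, seek and other overheads, so it is a lower bound rather than a sizing recommendation.

```python
import math

INGEST_MB_PER_S = 13.96 / 8 * 1000   # ~1745 MB/sec, from the link sum above
DRIVE_WRITE_MB_PER_S = 203           # measured per-drive write speed
FILE_SIZE_BYTES = 5000086528         # exact test file size


def drives_needed(ingest_mb_s: float, per_drive_mb_s: float) -> int:
    """Smallest number of drives whose combined rate covers the ingest rate."""
    return math.ceil(ingest_mb_s / per_drive_mb_s)


def seconds_per_file(size_bytes: int, per_drive_mb_s: float) -> float:
    """Time to stream one file to a single drive, assuming 1 MB = 10**6 bytes."""
    return size_bytes / (per_drive_mb_s * 1e6)


n_drives = drives_needed(INGEST_MB_PER_S, DRIVE_WRITE_MB_PER_S)
file_time = seconds_per_file(FILE_SIZE_BYTES, DRIVE_WRITE_MB_PER_S)
print(f"drives needed: {n_drives}, seconds per file: {file_time:.1f}")
```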

Read speed test

For the reads, I got a list of the files created during the write, submitted them all to the DataCarousel and let it restore them to a single partition.

An initial measurement showed a 307 MB/sec read speed, which may be over-optimistic as a grand average. More results will be provided later, as the test ends and more data over a wider time range are gathered.

Thoughts: This speed raises concerns. The impedance mismatch is on the user-end file system; that is, we can restore from HPSS tape to HPSS cache faster than we can take the files out of HPSS cache to live storage. This may create a situation where the HPSS cache fills up (because the "consumption" of files from cache to live storage lags by a large amount) and is then flushed, so that each subsequent file restored by the Carousel call-back forces a second tape mount. The conclusion is that LTO7 would be practical and beneficial for distributed data production (CRS) and distributed data restore in Xrootd (where SE cardinality is high) but is not suitable for single-user restores. Improvements and more intelligence in the Carousel to detect this condition will be needed.
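The concern can be put in numbers with a very simple model: the cache fills at the tape read rate and drains at the rate files are copied out to live storage. The sketch below uses the measured 307 MB/sec as the fill rate; the drain rate and the free cache space are hypothetical placeholders, since neither is given here.

```python
def seconds_until_cache_full(free_bytes: float,
                             fill_bytes_per_s: float,
                             drain_bytes_per_s: float):
    """Time until the cache fills, or None if the drain keeps up with the fill."""
    net_rate = fill_bytes_per_s - drain_bytes_per_s
    if net_rate <= 0:
        return None  # consumer is at least as fast as the restore: no pile-up
    return free_bytes / net_rate


FILL_RATE = 307e6     # bytes/sec, measured tape-to-cache read speed
DRAIN_RATE = 100e6    # bytes/sec, HYPOTHETICAL cache-to-live-storage speed
FREE_CACHE = 10e12    # bytes, HYPOTHETICAL free cache space

t = seconds_until_cache_full(FREE_CACHE, FILL_RATE, DRAIN_RATE)
print(f"cache full after about {t / 3600:.1f} hours")
```

A check of this kind is one way the Carousel could decide to throttle submissions before the cache overflows; with many consumers (CRS, Xrootd) the effective drain rate is higher and the function returns None.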

Oddity

The rcf_tx Ganglia metric may go to negative values - the calculation and script would need to be checked.
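One possible cause, offered only as a guess since the actual script is not shown here: if the metric is derived from the difference between two readings of an interface byte counter, a counter wrap between readings makes the naive difference negative. A minimal sketch of a wrap-tolerant rate calculation follows; the function name and the counter width are assumptions.

```python
def counter_rate(prev: int, cur: int, dt_s: float, bits: int = 64) -> float:
    """Bytes/sec between two counter readings, tolerating a single wrap.

    Taking the difference modulo 2**bits keeps the result non-negative when
    the counter rolled over once between the two readings.
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    delta = (cur - prev) % (2 ** bits)
    return delta / dt_s


# Example with a 32-bit counter that wrapped between two readings 10 s apart.
rate = counter_rate(prev=2**32 - 100, cur=50, dt_s=10, bits=32)
print(rate)
```

A counter reset (for example after an interface restart) is not a wrap and would still need separate handling, such as discarding that sample.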
