- seelej's home page

Relative Luminosity Studies - II

(First, I apologize for the deluge of data.)

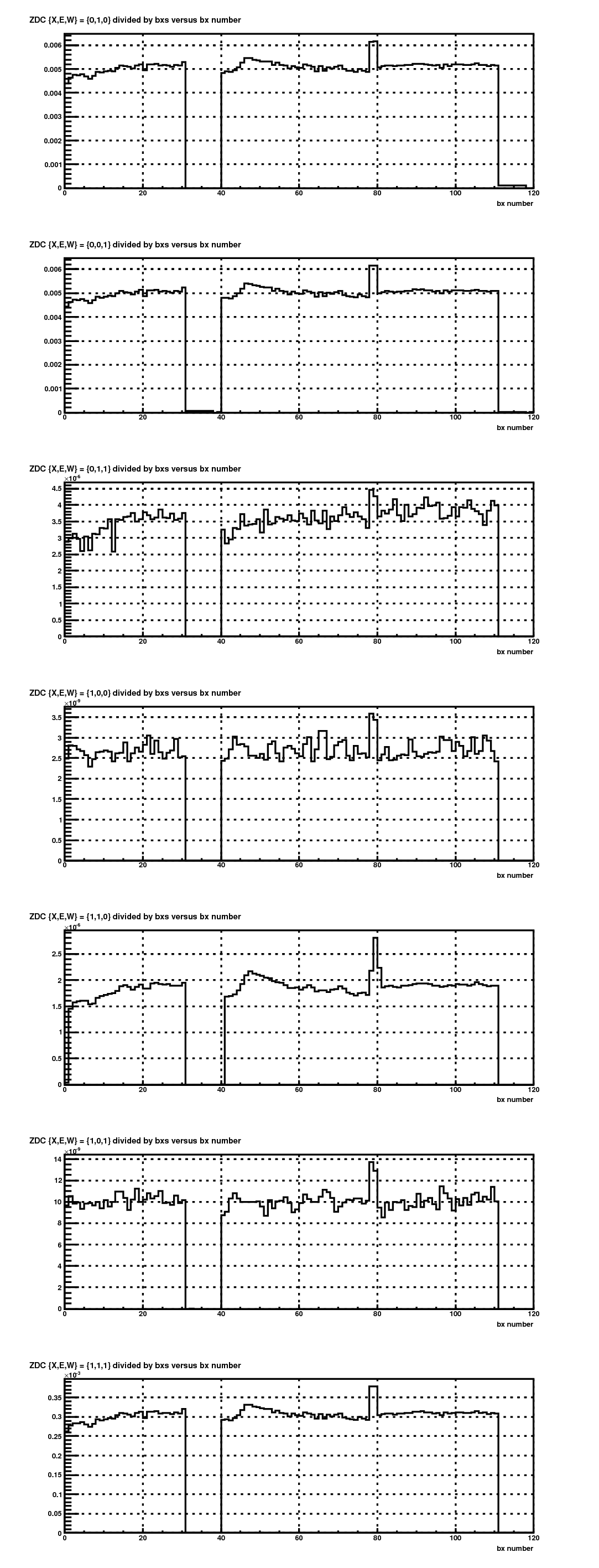

Continuing my studies of the relative luminosity, and in particular of the difference between the bbcx and zdcx relative luminosities, I got the raw (uncorrected) scaler data from Jim and began to look at it. In their current configurations, both the bbc and the zdc have an east detector (E), a west detector (W), and their coincidence (X), so there are seven relevant triggering patterns for an event: {X,E,W} = {0,1,0}, {0,0,1}, {0,1,1}, {1,0,0}, {1,1,0}, {1,0,1}, {1,1,1}. If the detectors were perfect, a few of these seven patterns would be logically impossible (for example {1,0,0}, a coincidence with neither an east nor a west hit). But the detectors are unlikely to be perfect (the timing may be off, and so on), so we expect to see some logically disallowed events. Plotting these seven scaler quantities (normalized by the number of bunch crossings) for the bbc and the zdc, we have the following, starting with the bbc.


Next are the zdc plots.

There are several things you can conclude from these plots.

1) The shapes of the distributions differ by more than any of the accidentals corrections, especially around the abort gaps. So there are likely issues with the bunch shapes/crossings that cause the two detectors to sample these bunches differently.

2) There is a sizable increase in the trigger rate for bunches around 80 in both the bbc and the zdc. I am not sure what is causing this.

3) The two detectors behave differently in the abort gaps: the bbcs see more of the noise in the abort gaps than the zdcs do.

4) There is a "fin" shape after the first abort gap. I am not sure what is causing this fin, but it appears in both the bbcs and the zdcs with a similar magnitude, so it is unlikely to bias the rellumi.

5) There are a couple of issues with the bbc in general. There are many cases where logically disallowed triggers occur. There are also fewer raw singles triggers than raw coincidence triggers, which doesn't make any sense to me.

6) There don't appear to be any effects from the "kicked" bunches (20 and 60). If I look at just the first few fills in the data I do see a decrease in those bunches, but not in the rest of the data.

These bunches should be cut until they are understood, but for now we will keep them and come back to them at the end of the rellumi analysis.
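The bookkeeping behind the seven patterns and point 5) can be sketched as follows. This is a minimal illustration, assuming the raw scaler counts for one bunch crossing index are available as a dictionary keyed by the (X, E, W) pattern; the function names and the toy counts are hypothetical. Which patterns count as "disallowed" is also an assumption: only a coincidence without both singles is flagged, since whether {0,1,1} is impossible depends on whether X carries an extra timing requirement.

```python
from itertools import product

# Scaler patterns are (X, E, W) = (coincidence, east, west) bits for one crossing.
# Dropping the empty pattern (0, 0, 0) leaves the seven patterns listed above.
PATTERNS = [p for p in product((0, 1), repeat=3) if p != (0, 0, 0)]


def is_disallowed(pattern):
    """Assumes X requires both E and W, so X=1 without E=W=1 is impossible.

    Whether (0, 1, 1) is also impossible depends on whether X carries an
    extra timing requirement; it is treated as allowed here.
    """
    x, e, w = pattern
    return x == 1 and not (e == 1 and w == 1)


def rates_per_crossing(counts, n_crossings):
    """Normalize raw scaler counts {pattern: count} by the number of bunch crossings."""
    return {p: counts.get(p, 0) / n_crossings for p in PATTERNS}


def consistency_report(counts, n_crossings):
    """Summarize the disallowed fraction and inclusive singles vs. coincidence rates."""
    rates = rates_per_crossing(counts, n_crossings)
    total = sum(rates.values())
    disallowed = sum(r for p, r in rates.items() if is_disallowed(p))
    east = sum(r for (x, e, w), r in rates.items() if e)
    west = sum(r for (x, e, w), r in rates.items() if w)
    coinc = sum(r for (x, e, w), r in rates.items() if x)
    return {
        "disallowed_fraction": disallowed / total if total else 0.0,
        # With no disallowed patterns, inclusive singles can never fall below X.
        "singles_below_coincidence": east < coinc or west < coinc,
    }


# Hypothetical counts for one bunch crossing index; not real data.
toy = {(0, 1, 0): 500, (0, 0, 1): 480, (0, 1, 1): 40, (1, 1, 1): 900, (1, 0, 0): 60}
print(consistency_report(toy, n_crossings=100_000))
```

Note that the check uses inclusive singles (every pattern with E=1 or W=1); the "raw singles" in point 5) may instead refer to the exclusive patterns {0,1,0} and {0,0,1}, for which no such inequality is guaranteed.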

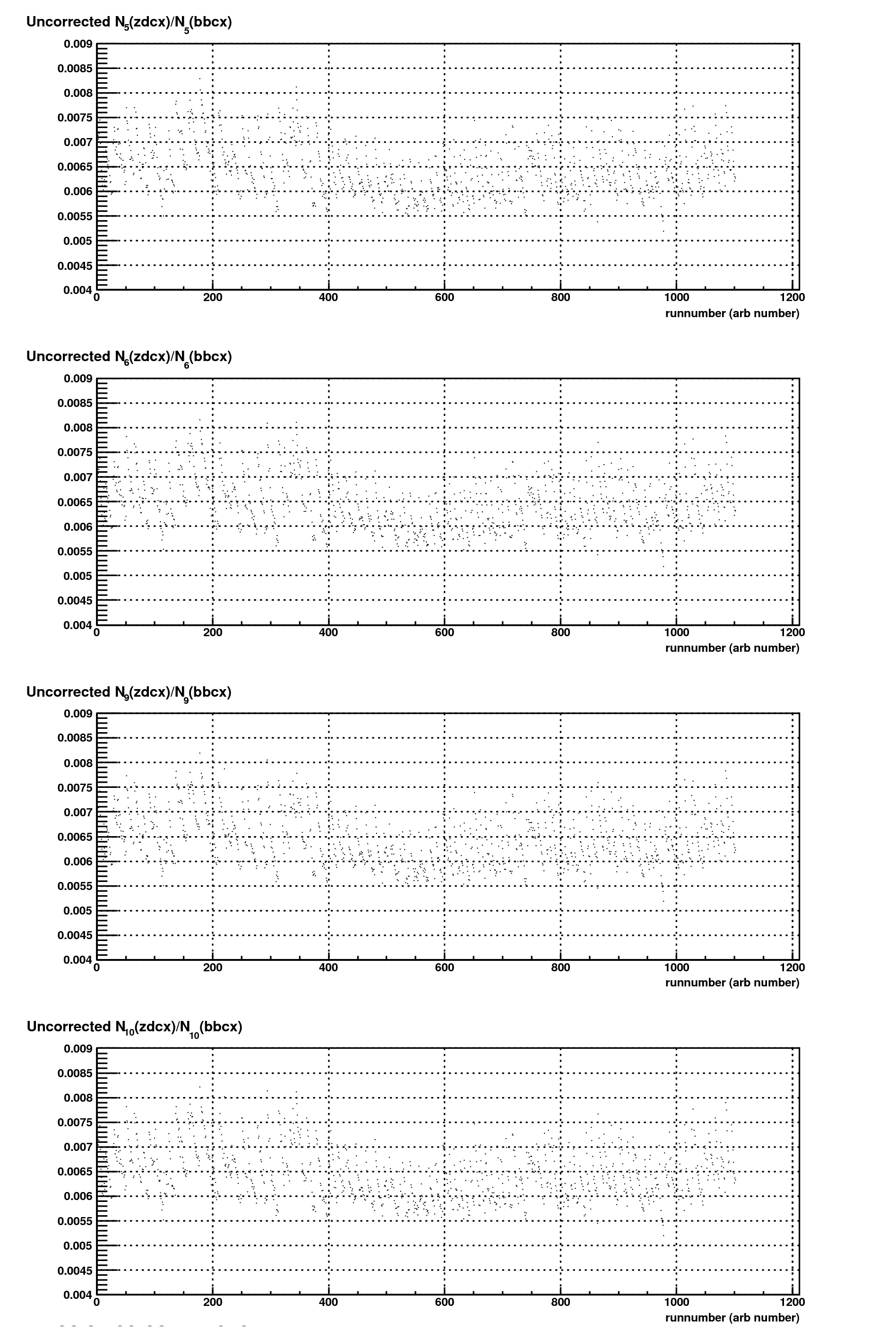

One of the issues we identified in Jim's calculation is that the accidentals corrections did not agree with our expectations, so I did the calculation myself to see whether the problem was in his calculation or in our understanding. Let's start with the uncorrected ratio of zdcx/bbcx versus run number, separated into the four spin configurations of the bunch crossing.
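A spin-sorted ratio like this one can be built along the following lines. This is a sketch under stated assumptions: per-bunch zdcx and bbcx counts for one run, plus a hypothetical map from bunch number to spin configuration, with unfilled and abort-gap bunches simply left out of the map. Counts are summed per configuration before dividing, which weights each bunch by its rate; that is not necessarily how the plotted ratio was built.

```python
from collections import defaultdict


def ratio_by_spin(zdcx, bbcx, spin_of_bunch, exclude=frozenset()):
    """Return {spin configuration: summed zdcx / summed bbcx} for one run.

    zdcx, bbcx: per-bunch coincidence counts, indexed by bunch number.
    spin_of_bunch: hypothetical map from bunch number to "++", "+-", "-+" or "--";
    bunches missing from the map (e.g. abort gaps) are ignored.
    exclude: bunch numbers to drop, e.g. the kicked bunches 20 and 60.
    """
    z_sum = defaultdict(float)
    b_sum = defaultdict(float)
    for bunch, spin in spin_of_bunch.items():
        if bunch in exclude:
            continue
        z_sum[spin] += zdcx[bunch]
        b_sum[spin] += bbcx[bunch]
    return {spin: z_sum[spin] / b_sum[spin] for spin in z_sum if b_sum[spin] > 0}


# Toy run with five filled bunches; the numbers are made up.
zdcx = [10, 12, 11, 9, 10]
bbcx = [100, 100, 100, 100, 100]
spins = {0: "++", 1: "+-", 2: "-+", 3: "--", 4: "++"}
print(ratio_by_spin(zdcx, bbcx, spins))
print(ratio_by_spin(zdcx, bbcx, spins, exclude={4}))
```

The `exclude` argument makes it easy to compare results with and without the kicked bunches once they are cut.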

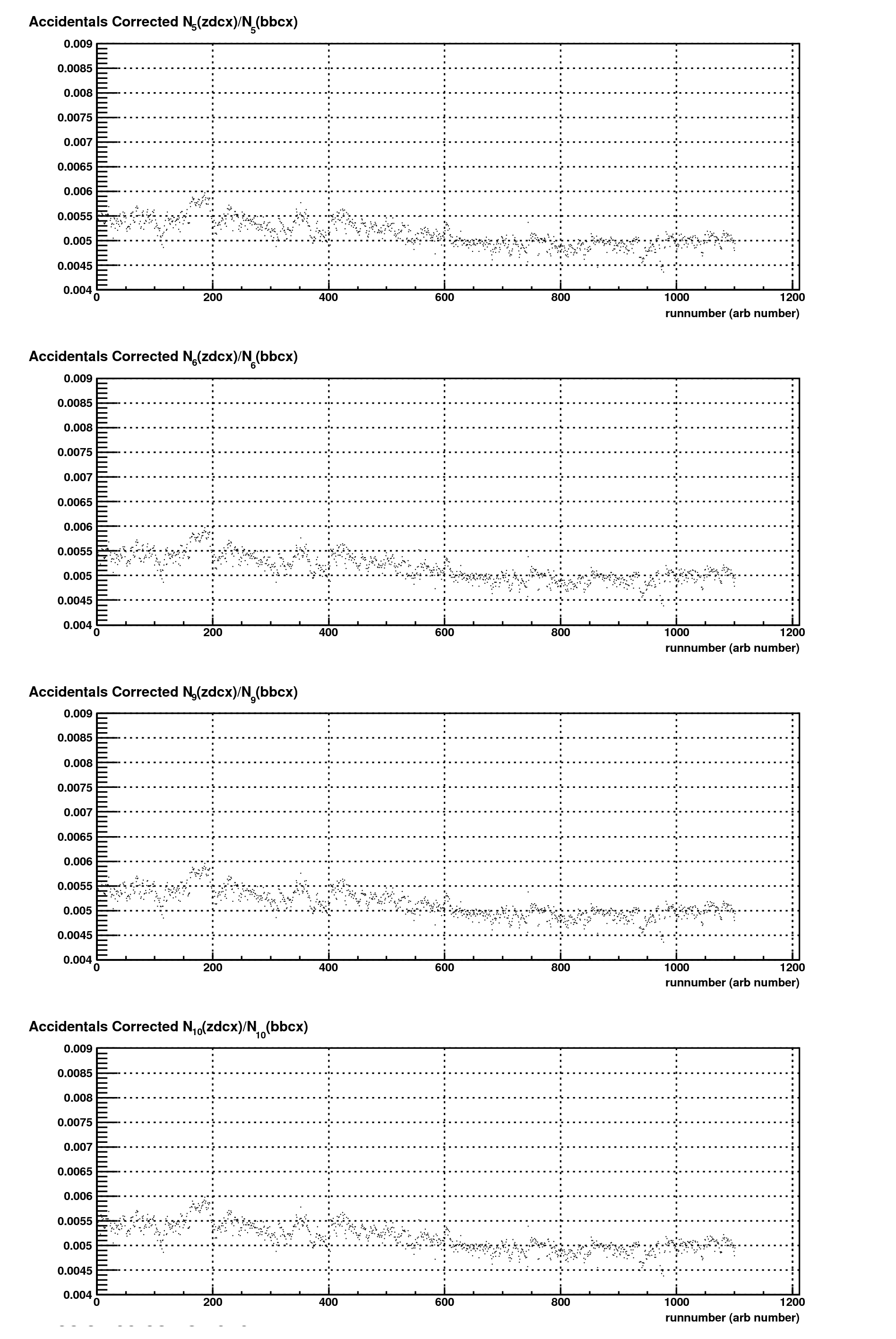

In this data the "striations" are apparent, and they decrease as the luminosity decreases. Applying the accidentals corrections, we get the following.

As we can see, the striations shrink and the ratio also decreases in overall magnitude. Some residual striations remain. I attribute these either to the lack of a deadtime correction for the bbc or, more likely, to the raw singles rates, which don't make much sense because they are lower than the raw coincidence rate.
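The accidentals correction itself is not spelled out here. As a hedged sketch, one common Poisson-based approach (an assumption, not necessarily the formula used in Jim's calculation or in mine) takes p_E and p_W as the inclusive fractions of crossings in which the east and west sides fired and p_X as the observed coincidence fraction, and estimates the mean number of true coincidences per crossing as μ_X = ln[(1 − p_E − p_W + p_X) / ((1 − p_E)(1 − p_W))]. When there are no true coincidences, uncorrelated singles alone give p_X = p_E·p_W and the formula returns zero. Because accidentals grow with the singles rates, they are luminosity dependent, and the correction depends directly on the singles, so problematic singles rates feed straight into it.

```python
import math


def true_coincidence_rate(p_east, p_west, p_coinc):
    """Poisson estimate of the mean number of true coincidences per crossing.

    p_east, p_west: fraction of crossings in which the east (west) side fired,
    including crossings with a coincidence. p_coinc: fraction of crossings with
    an observed coincidence. Assumes independent Poisson processes for
    east-only, west-only and true-coincidence interactions, and that an
    observed coincidence means both sides fired in that crossing.
    """
    p_none = 1.0 - p_east - p_west + p_coinc  # fraction with neither side firing
    if p_none <= 0.0 or p_east >= 1.0 or p_west >= 1.0:
        raise ValueError("rates are inconsistent with the Poisson model")
    return math.log(p_none / ((1.0 - p_east) * (1.0 - p_west)))


# Purely accidental coincidences (p_coinc = p_east * p_west) correct to about zero.
print(true_coincidence_rate(0.2, 0.3, 0.2 * 0.3))
```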

The question is whether this has an impact on our relative luminosities. Let's look at the delta-R's for the uncorrected and corrected data versus run number.



These corrections are clearly important for the bbcx as a measure of the relative luminosity. There are still evident luminosity-dependent effects in the corrected data (again attributed to the bad singles), but regardless of the corrections, the shifts from 0 persist, and persist strongly, throughout the run. It is unlikely that any further corrections to the numbers will remove this shift of the mean away from zero.
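The R's and the sign convention of delta-R are not defined explicitly here. As a hedged sketch, assume R is the double-spin ratio R = (N++ + N--)/(N+- + N-+) built from spin-sorted monitor counts in each run, and delta-R is the difference between the values obtained with bbcx and zdcx (the single-spin ratios would follow the same pattern). Because R is a ratio of sums, any overall normalization difference between the two monitors cancels, so a nonzero mean in delta-R reflects a genuine disagreement in the spin-dependent sampling.

```python
def relative_luminosity(n):
    """Double-spin relative luminosity R = (N++ + N--) / (N+- + N-+).

    n: hypothetical map from spin configuration to luminosity-monitor counts
    (e.g. corrected bbcx or zdcx summed over the bunches of one run).
    """
    return (n["++"] + n["--"]) / (n["+-"] + n["-+"])


def delta_r(n_bbc, n_zdc):
    """Assumed convention: delta-R = R(bbcx) - R(zdcx)."""
    return relative_luminosity(n_bbc) - relative_luminosity(n_zdc)


# Toy counts for two runs; the numbers are made up.
runs_bbc = [{"++": 1000, "+-": 1000, "-+": 1000, "--": 1000},
            {"++": 2000, "+-": 1990, "-+": 2010, "--": 2000}]
runs_zdc = [{"++": 100, "+-": 100, "-+": 100, "--": 100},
            {"++": 201, "+-": 199, "-+": 201, "--": 200}]
print([delta_r(b, z) for b, z in zip(runs_bbc, runs_zdc)])
```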

To tie it all together, here are the histograms of the corrected R's, which show the mean shifted from zero (by ~1 sigma, or ~1e-3).


To conclude: the accidentals corrections do not solve the problem of the overall shift in the data, but they significantly shrink the width of the distribution, and they are necessary for the bbcx to be a viable estimate of the relative luminosity. They are currently good to a certain level, but more time and effort should go into reducing the spread caused by the possibly bad singles rates.
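Both conclusions (the mean shift survives the correction while the width shrinks) can be checked numerically from the per-run delta-R values. A minimal sketch with made-up numbers; since "~1 sigma" could refer either to the width of the distribution or to the uncertainty on its mean, both are reported.

```python
import math
import statistics


def summarize(delta_rs):
    """Mean, width, and standard error of the mean of per-run delta-R values."""
    mean = statistics.fmean(delta_rs)
    width = statistics.stdev(delta_rs)  # sample standard deviation; needs >= 2 runs
    return {
        "mean": mean,
        "width": width,
        "mean_in_widths": mean / width,
        "stderr_of_mean": width / math.sqrt(len(delta_rs)),
    }


# Hypothetical per-run delta-R values before and after the correction.
uncorrected = [0.004, -0.002, 0.006, 0.000, 0.003]
corrected = [0.0015, 0.0005, 0.0020, 0.0008, 0.0012]
for label, values in (("uncorrected", uncorrected), ("corrected", corrected)):
    print(label, summarize(values))
```

Comparing `width` between the two inputs quantifies how much the correction narrows the distribution, while `mean` shows whether the shift from zero survives it.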
