file systems with on-the-fly compression, part 2

Some performance results...

A sampling of trigger files was copied from the trgscratch machine to sl7beta: 17GB of gzipped files (1703 files) and 41GB of non-gzipped files (1454 files), using:

`scp /archive5/trgdata/run1[67]*.2.* root@sl7beta.star.bnl.gov:/home/wbetts/trgdata_sample`

(That destination is in an ext4 filesystem on a different disk from those used for testing below.)

These files were then copied to the ZFS (with lz4 compression), BTRFS (with zlib compression), EXT4, and XFS filesystems dedicated to testing (each empty at the start of every copy pass). That required reading the files from the source EXT4 filesystem, which is on the system disk. The read I/O adds time and load that are not relevant to the comparison and somewhat muddy the results. The test was done once by hand and later scripted to run four passes. A read-only test (`cat * > /dev/null`) was also run for comparison. The initial one-time results differ notably from those of the multi-pass runs, especially for BTRFS-zlib (I have no idea why).
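The post does not include the script used for the multi-pass test or say which copy command it ran. As a rough illustration only, here is a minimal Python 3 sketch (Linux/Unix, since it calls `os.sync()`) of one timed copy pass that reports the same quantities as the table below: wall time, system CPU time and post-copy sync time. The function name `timed_copy_pass` is hypothetical. One caveat: ZFS and BTRFS may do part of their compression in kernel worker threads, in which case that CPU time is not charged to the copying process, so per-process system time can understate the real cost. System-wide graphs such as Ganglia's help cover that gap.

```python
import os
import shutil
import time
from pathlib import Path


def timed_copy_pass(src_dir, dest_dir):
    """Copy every regular file in src_dir into dest_dir and time the pass.

    Returns (wall_s, sys_s, sync_s):
      wall_s: wall-clock time of the copy loop itself
      sys_s:  system CPU time charged to this process during the copy
      sync_s: wall time of the sync issued right after the copy
    """
    os.sync()  # flush unrelated dirty data so it is not charged to this pass
    cpu0 = os.times()
    t0 = time.perf_counter()
    for entry in sorted(Path(src_dir).iterdir()):
        if entry.is_file():
            shutil.copy2(entry, Path(dest_dir) / entry.name)
    t1 = time.perf_counter()
    cpu1 = os.times()
    os.sync()  # data the copy left in the page cache is written out here
    t2 = time.perf_counter()
    return t1 - t0, cpu1.system - cpu0.system, t2 - t1
```

For example, `timed_copy_pass("/home/wbetts/trgdata_sample", "/zfs/trgdata_sample")` would time one pass into the ZFS test filesystem, assuming the destination directory already exists.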

Here are the copying times for the various filesystems tested:

| Destination filesystem (# of trials) | Avg wall time (s) | Std. dev. (s) | Avg system time (s) | Post-copy sync time (s) | Initial one-time test (s) |
|---|---|---|---|---|---|
| ZFS-lz4 (4) | 828.07 | 9.96 | 208.37 | 1.23 | 874 |
| ZFS-lzjb (4) | 829.53 | 5.66 | 173.59 | 1.51 | n/a |
| BTRFS-zlib (4) | 1373.84 | 7.49 | 248.13 | 20.81 | 1105 |
| BTRFS-lzo (4) | 819.99 | 11.55 | 237.19 | 4.01 | n/a |
| EXT4 (8) | 866.55 | 5.48 | 371.72 | 8.32 | 920 |
| XFS (4) | 866.71 | 5.30 | 299.5 | 7.90 | 870 |
| none (read-only from source) (8) | 796.20 | 6.71 | 124.97 | n/a | n/a |
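Only the per-filesystem averages are given above, not the individual pass times, and the post does not say whether the standard deviation is the sample or population form. The hypothetical helpers below assume the sample form (`statistics.stdev`) and also compute a crude "write cost" by subtracting the read-only average from each copy average. That subtraction assumes read and write times simply add, which is not guaranteed when source reads and target writes overlap, so treat it as a rough indicator only.

```python
import statistics

# Average copy wall times (seconds), taken from the table above.
AVG_COPY_WALL_S = {
    "ZFS-lz4": 828.07,
    "ZFS-lzjb": 829.53,
    "BTRFS-zlib": 1373.84,
    "BTRFS-lzo": 819.99,
    "EXT4": 866.55,
    "XFS": 866.71,
}
READ_ONLY_BASELINE_S = 796.20  # the "none (read-only from source)" row


def summarize_passes(wall_times):
    """Mean and sample standard deviation of per-pass wall times (assumption: sample form)."""
    return statistics.mean(wall_times), statistics.stdev(wall_times)


def excess_over_read_baseline(avgs, baseline):
    """Average copy time minus the read-only time, per filesystem.

    Crude: assumes read and write costs add linearly, which they need not.
    """
    return {fs: round(t - baseline, 2) for fs, t in avgs.items()}


if __name__ == "__main__":
    extra = excess_over_read_baseline(AVG_COPY_WALL_S, READ_ONLY_BASELINE_S)
    for fs, seconds in extra.items():
        print(f"{fs:12s} {seconds:8.2f} s beyond the read-only pass")
```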

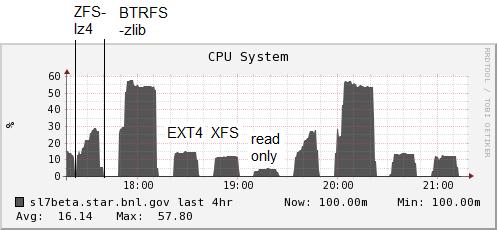

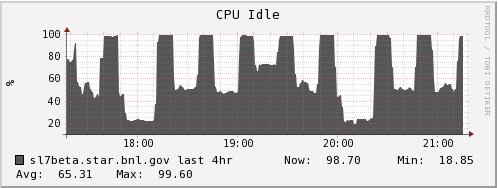

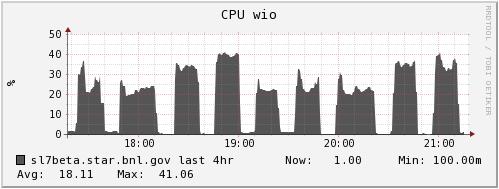

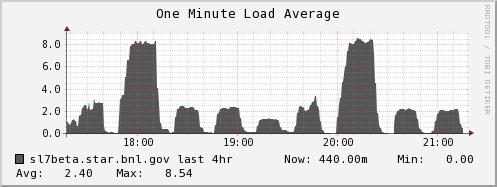

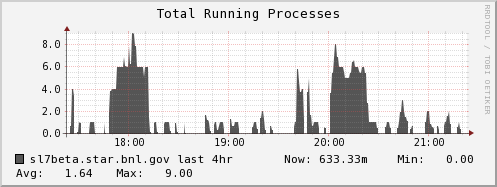

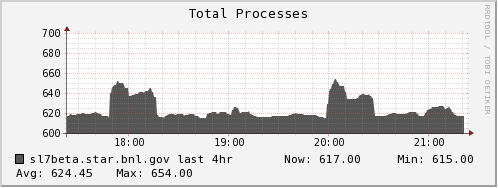

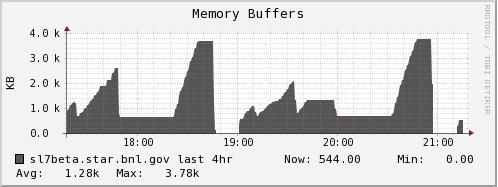

Here is the system monitoring from Ganglia, covering a couple of test cycles (with a 10-minute sleep between filesystem tests). BTRFS-zlib is a bit of a CPU hog.


(Note that the vertical scales of the two memory graphs differ by a factor of 1000.)

Compression ratios

Since the compression algorithms used with ZFS and BTRFS above are not the same, comparisons between the two should be taken with the proverbial grain of salt. Perhaps of more interest are the compression ratios achieved. According to df, the 57GB of copied files took 33GB (34485376KB) on ZFS-lz4, 34GB (34964348KB) on BTRFS-zlib and 57GB (of course!) on EXT4.
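The usage figures above come from df. As a minimal sketch, assuming Python 3 on Linux, the same "Used" number can be read with `os.statvfs` (df computes it as total blocks minus free blocks, times the fragment size). The second helper, which measures the change in used space around an action such as a copy, is hypothetical and assumes nothing else writes to that filesystem during the measurement.

```python
import os


def fs_used_kib(path):
    """Used space (KiB) on the filesystem holding `path`, as df's "Used" column."""
    st = os.statvfs(path)
    return (st.f_blocks - st.f_bfree) * st.f_frsize // 1024


def usage_delta_kib(path, action):
    """Run `action()` (e.g. a copy into `path`) and return the change in
    used space on that filesystem, in KiB. Concurrent writers skew this."""
    os.sync()  # precaution: flush pending writes before the first reading
    before = fs_used_kib(path)
    action()
    os.sync()  # and again so the copy's data is counted
    return fs_used_kib(path) - before
```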

| Original files | ZFS-lz4 usage (KB) | ZFS-lzjb usage (KB) | BTRFS-zlib usage (KB) | BTRFS-lzo usage (KB) |
|---|---|---|---|---|
| gzipped files (17146908KB) | 15751552 (91.9%) | 17173722 (100.2%) | 17048960 (99.4%) | 17197148 (100.3%) |
| raw trigger files (42467036KB) | 17326336 (40.8%) | 23347698 (55.0%) | 17756768 (41.8%) | 22785784 (53.7%) |
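The percentages in the table are simply stored size divided by original size. The short sketch below reproduces them from the KB values above; the dictionary layout and function names are illustrative, not taken from the original test scripts. It also makes the pattern easy to see: the already-gzipped files gain little or nothing from a second layer of compression (at best about 8% with ZFS-lz4).

```python
# Sizes in KB, taken from the table above.
ORIGINAL_KB = {"gzipped": 17146908, "raw": 42467036}
STORED_KB = {
    "ZFS-lz4": {"gzipped": 15751552, "raw": 17326336},
    "ZFS-lzjb": {"gzipped": 17173722, "raw": 23347698},
    "BTRFS-zlib": {"gzipped": 17048960, "raw": 17756768},
    "BTRFS-lzo": {"gzipped": 17197148, "raw": 22785784},
}


def percent_of_original(stored_kb, original_kb):
    """Stored size as a percentage of the original, to one decimal place."""
    return round(100.0 * stored_kb / original_kb, 1)


def ratio_table(stored, original):
    """Percent-of-original for every filesystem and file type."""
    return {
        fs: {kind: percent_of_original(kb, original[kind]) for kind, kb in sizes.items()}
        for fs, sizes in stored.items()
    }


if __name__ == "__main__":
    for fs, row in ratio_table(STORED_KB, ORIGINAL_KB).items():
        print(f"{fs:11s} gzipped {row['gzipped']:5.1f}%   raw {row['raw']:5.1f}%")
```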

A noteworthy point here: for ZFS, lz4 achieves significantly better compression than lzjb while using less CPU (see the System CPU graphs above). For BTRFS, zlib gets better compression than lzo, but uses more CPU to do so.

File Deletion

Since the files needed to be deleted anyway, I decided to time it. The first table below shows the time to delete the 1703 gzipped files using, for example, `time rm -f /zfs/trgdata_sample/*.gz`. This is not a rigorous test. At a minimum, it should have run sync before starting and included a sync after the rm in the measured time. It is also so short that it could easily be perturbed by any other processes that happened to be active.

| Filesystem | real (s) | user (s) | sys (s) |
|---|---|---|---|
| ZFS-lz4 | 8.216 | 0.101 | 0.879 |
| BTRFS-zlib | 2.382 | 0.065 | 0.425 |
| EXT4 | 0.368 | 0.088 | 0.186 |
| XFS | 0.215 | 0.058 | 0.146 |

And again, this time deleting the 1454 raw, uncompressed files (done in reverse order, EXT4 first):

| Filesystem | real (s) | user (s) | sys (s) |
|---|---|---|---|
| ZFS-lz4 | 21.584 | 0.086 | 1.27 |
| BTRFS-zlib | 11.179 | 0.048 | 0.824 |
| EXT4 | 1.356 | 0.077 | 1.165 |
| XFS | 1.015 | 0.050 | 0.964 |

NOTE, HOWEVER, that space was still being freed (according to df) after the rm had returned. I noticed this on the ZFS filesystem; it could have happened on the others too without my noticing. So, all in all, this is definitely NOT a good test from which to draw conclusions, but it does indicate to me that deletion performance should be looked into further.
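Because space was still being freed after rm returned, the rm times understate the real cost of deletion. One way to measure that tail, sketched here under the assumption that nothing else is writing to the filesystem, is to poll the free space (df's "Avail", via `os.statvfs`) after the rm until it stops changing. The function names and the default polling interval, stability count and timeout are hypothetical choices, not values from the original tests.

```python
import os
import time


def free_kib(path):
    """Free space (KiB) available to unprivileged users, like df's "Avail"."""
    st = os.statvfs(path)
    return st.f_bavail * st.f_frsize // 1024


def wait_for_space_to_settle(path, interval=1.0, stable_checks=5,
                             tolerance_kib=0, timeout=600.0):
    """Poll free space until it stops changing.

    Returns (settled, elapsed_s). `settled` becomes True once
    `stable_checks` consecutive samples differ from the previous sample by
    at most `tolerance_kib`; it is False if `timeout` seconds pass first.
    """
    start = time.monotonic()
    last = free_kib(path)
    stable = 0
    while time.monotonic() - start < timeout:
        time.sleep(interval)
        now = free_kib(path)
        if abs(now - last) <= tolerance_kib:
            stable += 1
            if stable >= stable_checks:
                return True, time.monotonic() - start
        else:
            stable = 0  # free space still changing, e.g. deferred freeing
        last = now
    return False, time.monotonic() - start
```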

As part of the multi-pass copy test mentioned above, timed rm and sync commands were run after the copies (note that this test ran a sync immediately before the timed rm command):

| Filesystem | Avg wall time to delete the files (s) | Std. dev. (s) | Final sync real time (s) |
|---|---|---|---|
| ZFS-lz4 | 45.98 | 0.93 | 0.04 |
| ZFS-lzjb | 46.30 | 1.75 | 0.03 |
| BTRFS-zlib | 12.72 | 0.37 | 0.22 |
| BTRFS-lzo | 13.67 | 0.41 | 0.22 |
| EXT4 | 1.58 | 0.17 | 0.11 |
| XFS | 1.72 | 0.36 | 0.13 |
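The multi-pass deletion procedure described above (sync, timed rm, then a separately timed final sync) can be sketched in Python as follows. This is an illustration rather than the script actually used; `timed_delete` is a hypothetical name. Here the glob is expanded before the clock starts, whereas with `time rm -f ...*.gz` the shell's expansion may fall inside the timed interval.

```python
import glob
import os
import time


def timed_delete(pattern):
    """Delete files matching `pattern`: sync first, time the removal,
    then time a final sync separately.

    Returns (n_deleted, delete_s, final_sync_s).
    """
    os.sync()  # start with no pending writes, as the scripted test did
    paths = glob.glob(pattern)
    t0 = time.perf_counter()
    for p in paths:
        os.remove(p)
    t1 = time.perf_counter()
    os.sync()  # flush the metadata updates left by the removals
    t2 = time.perf_counter()
    return len(paths), t1 - t0, t2 - t1
```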

Read performance:

Sample test command: `time -p cat /btrfs/trgdata_sample/* > /dev/null`

| Filesystem | real (s) | user (s) | system (s) |
|---|---|---|---|
| EXT4 | 774.10 | 0.72 | 126.90 |
| XFS | 704.69 | 0.63 | 132.58 |
| ZFS (no comp.) | | | |
| ZFS-lz4 | | | |
| ZFS-lzjb | 626.57 | 0.68 | 99.63 (sensible?) |
| BTRFS (no comp.) | | | |
| BTRFS-zlib | | | |
| BTRFS-lzo | 517.23 | 0.59 | 145.56 |
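For the read test, a Python equivalent of `cat files > /dev/null` is sketched below (the name `timed_read_all` and the 1 MiB read size are arbitrary, hypothetical choices). The post does not say whether caches were dropped between runs; if the files were read recently, some of the data may be served from RAM rather than disk. On Linux, root can drop the clean page cache with `sync; echo 3 > /proc/sys/vm/drop_caches`, although ZFS keeps its own cache (the ARC), so the effect may differ there.

```python
import os
import time

CHUNK = 1 << 20  # 1 MiB per read; an arbitrary choice, cat's buffer size may differ


def timed_read_all(paths):
    """Read each file to the end and discard the data, roughly what
    `cat files... > /dev/null` does.

    Returns (bytes_read, wall_s, sys_s), where sys_s is the system CPU
    time charged to this process during the reads.
    """
    buf = bytearray(CHUNK)  # reused buffer, so no allocation per read
    total = 0
    cpu0 = os.times()
    t0 = time.perf_counter()
    for p in paths:
        with open(p, "rb", buffering=0) as f:  # unbuffered raw file object
            while True:
                n = f.readinto(buf)
                if not n:  # 0 bytes means end of file
                    break
                total += n
    t1 = time.perf_counter()
    cpu1 = os.times()
    return total, t1 - t0, cpu1.system - cpu0.system
```

For example, `timed_read_all(sorted(glob.glob("/btrfs/trgdata_sample/*")))` (with `import glob`) would mirror the sample command above.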

Miscellaneous notes:

During an early trial copying the sample trigger files to BTRFS-zlib, the machine spontaneously rebooted. Nothing was logged in /var/log/messages. I have no clue what happened or whether the use of BTRFS-zlib had anything to do with it, but it is definitely something to keep in mind. Five other runs of the same copy to BTRFS-zlib were successful. Several instances of a spontaneous reboot have occurred, all while copying files to BTRFS-zlib, but reproducibility is not clear: the same copy often works. I have set up another computer (entirely different hardware) to see whether this can be reproduced there. I will also move the disks from sl7beta to another identical unit.

Ganglia's "disk space available" metric does not seem to include space in the ZFS pool.

Groups:

- wbetts's blog
