Determining eta weights for EPD event plane analysis

Determining eta weights for EPD event plane analysis

Background:

For many collision energies the beam rapidity sweeps through the acceptance of the EPD. Since the directed flow of the spectators is in the opposite direction as the produced particles weighting all rings of the EPD equally may lead to very low Psi1 resolution when these contributions explicitly cancel. For this reason it's often good to weight the response of the EPD tiles with the v1 of the ring in which they belong (thus negatively weighting some tiles). In principle it might be good to optimize weight the higher orders, but, since higher order flow does not change sign with rapidity, this is not generally as important. This has already been looking at by Mike for 27GeV pre-production data:

Second order EP: https://drupal.star.bnl.gov/STAR/blog/lisa/optimizing-ring-weights-find-2nd-order-event-plane

First order EP: https://drupal.star.bnl.gov/STAR/blog/lisa/ep-resolution-and-eta-dependence-directed-flow-auau-collisions

First oder EP II: https://drupal.star.bnl.gov/STAR/blog/lisa/phi-weighting-and-optimizing-ring-weights-auau-27-gev

v1 in EPD: https://drupal.star.bnl.gov/STAR/blog/lisa/presentation-bulkcorr-6-june-2018-v1-27-gev-auau-collisions-2018-run

Motivation for this page:

I want to give a detailed procedural way to get from nothing to eta weights. Mike's documents explain the basic motivation and go into some possible choices, but I think people may still have some trouble knowing where to start and what routes actually matter. This should be understandable to someone who has never used the EPD before at all. The basic tool for such analysis is the StEpdEpFinder. You may find that your analysis requires significant editing of that class, but I do not believe significant editing is necessary to find the weights. If you want more discussion about what the corrections are doing and resolution etc., check out this paper https://arxiv.org/abs/0809.2949.

Procedure:

The EPD weights must be determined iteratively. At each step in the iteration process the v1 vs eta for each centrality bin is fit with an explicitly odd third-order polynomial (ax + bx^3). The parameters of that fit can be fed to the StEpdEpFinder. Personal experience tells me that getting a rough idea of the flow magnitude is very important, but getting a very precise v1 has quickly diminishing returns. This does not need a great deal of optimization.

I've attached a zipped directory of code disguised as a .txt file (Drupal will not allow .zip uploades) "EpdEtaWeight.zip.txt". Rename it to EpdEtaWeight.zip and unzip the file. The important code is StRoot/MyAnalysis/MyAnalysisMaker.cxx. I have only slightly edited StRoot/StEpdUtil/StEpdEpFinder.cxx to change the name of the EP corrections output file and to stop the code from writing the resolution file altogether. This code should also be on RCF at /star/u/iupsal/EpdEtaWeight/.

For the attached code I ran over the 2018 27GeV dataset, so that I can compare to Mike. At the moment my code gets the a resolution identical to Mike's in all centrality bins except the most peripheral bin. Even stranger, though the code starts with poor resolution in that bin it only gets worse with iteration, ending with 0 resolution. I don't understand why this is right now, but I do know that our centrality definitions are different. I believe his is based on a percentage binning of uncorrected RefMult in the isobar data which I performed. At this moment we still do not have an official StRefMultCorr for 2018 27GeV data, so I'm using the 2011 27GeV centrality determination. I chose a run number from that dataset at random for the initialization of StRefMultCorr (mRefmultCorrUtil->init(12175005);). Details of how to initialize and use the StEpdEpFinder should be contextually clear from MyAnalysisMaker.cxx. I'll provide line numbers for some explanation, but they might shift a bit over time

In standard usage the StEpdEpFinder can be run with or without a file input. Each time the code is run it outputs a file called XXX.EpCorrections.root (where XXX is some string provided by Analysis.C, also note that this convention is not exactly the same for the checked out version of StEpdEpFinder). If you started with no file (or a dummy file which isn't relevant to your actual analysis) it fills PhiWeightEW0 and PhiWeightEW1, which are histograms which compare how large the average signal of a tile is relative to it's neighbors (technically I think it's just the number of hits, the comparison is done in the code). All of the tiles in a ring should have the same average response. This output file should be hadd'ed and/or moved to a good location (like ./CorrectionFiles/Corrections19140002.root) so that it will be read in the next iteration. The next time the code is run the Phi weight correction is performed and histograms are filled for the Psi-shift (e.g. EpdShiftEW0Psi1_sin, the EP maker finds planes up to order 3 automatically). The bins of these histograms are the harmonic decompositions of the overall Psi distributions up to order 6 WRT the centrality bin. The third iteration performs the Psi-shift method, after which your Psi distribution should be statistically flat, and allows you to fill <cos(PsiE-PsiW)> histograms to estimate the EP resolution in the future. If you find that your distributions are not flat make sure you have already performed enough iterations. If you have a particularly tough dataset you may want to increase the shift correction harmonic order beyond 6 in StEpdEpFinder.cxx.

To summarize: the first time you run over some dataset you need to run over the data 3 times in order to get totally-corrected flat EP distributions. If you change the rapidity/ring weight in the EP maker (more about this later) you can skip one of the iterations because the rapidity/ring weight does not effect the first correction (phi weight). In that case additional changes require 2 iterations. Because the resolution is not very dependent on totally-precise ring weights, and because the method requires ~>7 iterations over the data (quite a lot!) I have found that it is best to use ~ a single run number. In my example I used 19140002. For a lower-luminosity dataset a few run numbers could be more appropriate. This is also good because the EP corrections should be done as a function of time, but the v1 itself should be constant. This way you do not have to worry about making sure the corrections are time-dependant. It's also important to make sure you are using the same dataset with each iteration.

The first time the code is run I use only the 4 innermost rings of the EPD. This is somewhat arbitrary, but you have to start somewhere and it's generally a good idea to limit the rapidity. To do this I make sure mEpFinder->SetEtaWeights(1,wt); is commented out (191) and mEpFinder->SetRingWeights(1, PtrRingWeight); is not. Ring weights are generally more sloppy because the pseudorapidity of the tile is depends on the z vertex, but we can be sloppy for the first round of iterations. I ran over the grid ($ star-submit Analysis.xml) for the single run number mentioned above. I hadd'ed the EpCorrection output files, replaced ./CorrectionFiles/Corrections19140002.root with the new correction, and did $ star-submit Analysis.xml again (3 times) until the EP distributions (from hadd'ing the *.histograms.root) are flat. Then I ran QuoteV1.C and SqrtResolution.C over the hadd'ed .histograms.root file (I called it total.root, which those macros expect). QuoteV1.C fits the TProfile histograms Epdv1C# (# is the 9-bin CentralityID, which is from 0-80%, so each bin should represent 1/8th [for bin < 7] or 1/16th [for bins 7 and 8] of the events which pass the cuts) and prints out arrays of the linear and cubic components of the odd cubic polynomial fits. SqrtResolution.C prints out an array of sub-event-plane resolutions, which are just sqrt(<cos(PsiE - PsiW)>). Since the v1 is found by comparing the distribution of hits in one side of the EPD to the EP found in the other it is the sub-event-plane resolution which is relevant. Note that the EpFinder code automatically rotates the East EP by pi so that the EPs are expected to point in the same direction if you have good resolution. I've also included FullResolution.C which finds the full event plane resolution (the resolution of the combined plane) by solving modified Bessel functions. This procedure is described in the EP paper referenced above and should be well approximated by sqrt(2*<cos(PsiE - PsiW)>). These can be copied into MyAnalysisMaker::Init() in the appropriate places and the old arrays of resolution, lin, and cub can be commented out. At this point you have finished the first iteration and you must remember to go back to eta weights by uncommenting the SetEtaWeights line and commenting out the SetRingWeights.

Next you run the code 2 more times to get flat EP distributions with these new eta weights and once again extract the v1 fit parameters and the sub-event-plane resolution. Repeat this procedure until the improvement in the resolution is negligible. Once you run over all of the data it might be nice to see if you can improve the resolution slightly with an additional two iterations. I suspect the improvement will not be worth much, but, regardless, I'm sure that restricting the initial iterations to a small subset saves a lot of time.

I want to list some obvious steps to avoid any confusion. In case it isn't clear, the MyAnalysisMaker.cxx code can be tested locally with

$ root -l Analysis.C\(2000,\"27adams.list\",\"./\",\"LocalOut\"\)

where 27adams.list is a list of absolute-path strings which are locations of 27GeV picoDst files. Currently Joey had several in his home directory, so I just referenced them instead of restoring these files myself. You should only run locally to test the code, unless you plan on optimizing over ~< 10 files (this may be possible). Otherwise you should run the code over the grid:

$ star-submit Analysis.xml

Remember to edit Analysis.xml so that it points to your output directory and if you change the correction histogram edit the sandbox copy in the xml. Remember to compile your code after every change to MyAnalysisMaker.cxx:

$ cons

FYI I used SL19b, so

$ starver SL19b

when you start a session of working on the code is generally a good idea.

I didn't think very seriously about the event cuts. Those can be refined, but considering how not-sensitive the resolution is to minor v1 changes, I do not expect this to be important.

Additional details:

I think the part of this code which will be most unfamiliar to the reader is the EPD parts in MyAnalysisMaker::Init() and in MyAnalysisMaker::Make(). The latter includes a loop over the EPD hits. In this case you can't rely on the EpFinder to do all of the work for you. This looks like the following

for(unsigned int iEpd = 0; iEpd < pico->numberOfEpdHits(); iEpd++)

{

StPicoEpdHit *EpdHit = pico->epdHit(iEpd);

if (! EpdHit) continue;

TVector3 EpdPoint = mEpdGeom->RandomPointOnTile(EpdHit->id());

TVector3 StraightLine = EpdPoint-PrimaryVertex;

double nMip = EpdHit->nMIP();

double nMipEff = nMip; //put a max on nMip in calc.

if(nMipEff > 3.) nMipEff = 3.;

else if (nMipEff < 0.3) continue;

double RandomEta = StraightLine.Eta();

double RandomPhi = StraightLine.Phi(); while(RandomPhi < 0.) RandomPhi += 2.*pi;

int ew = (EpdHit->id()<0)?0:1; //is EPD east or west

//mNmipDists[ew][EpdHit->position()-1][EpdHit->tile()-1]->Fill(nMip);

if (!(fabs(RandomEta)>0)||(fabs(RandomEta)>1000)) continue;

TVector3 cent = mEpdGeom->TileCenter(EpdHit->id()); //tile center

float CenterRadius = cent.Perp();

float CenterPhi = cent.Phi(); if(CenterPhi < 0.) CenterPhi += 2.*pi;

int RingGroup = FindRing(CenterRadius);

int PhiBin = -1;

if(1 == RingGroup) PhiBin = FindPhiBin12(CenterPhi);

else PhiBin = FindPhiBin24(CenterPhi);

// x axis is going to cycle through all of the tiles in one ring group and then start the next ring

int TileBinId = -1;

if(1 == RingGroup) TileBinId = PhiBin;

else TileBinId = 24*(RingGroup-1) + PhiBin;

if(!ew) TileBinId += 384;

histogram2D[4]->Fill(nMip, TileBinId);

histogram2D[5]->Fill(EpdHit->adc(), TileBinId);

//cout << "TileBinId " << TileBinId << endl;

if (1 == ew){ //west

//double v1 = nMipEff*cos(RandomPhi - Psi1E)/mResCorr[CentralityID9]; //Reference EP from opposite side

double v1 = nMipEff*cos(RandomPhi - Psi1E);

histogramTProfile[0]->Fill(RandomEta, v1);

histogramTProfile[2]->Fill(RandomEta, v1);

histogramTProfile[3+CentralityID9]->Fill(RandomEta, v1);

histogramTProfile2D[0]->Fill(PhiBin, RingGroup, nMipEff);

if(1 == RingGroup) histogramTProfile2D[0]->Fill(PhiBin+12, RingGroup, nMipEff);

}

else if(0 == ew){ //east

//double v1 = nMipEff*cos(RandomPhi - Psi1W)/mResCorr[CentralityID9];

double v1 = nMipEff*cos(RandomPhi - Psi1W);

histogramTProfile[1]->Fill(-RandomEta, -v1);

histogramTProfile[2]->Fill(-RandomEta, -v1);

histogramTProfile[3+CentralityID9]->Fill(-RandomEta, -v1);

histogramTProfile2D[0]->Fill(PhiBin, RingGroup, nMipEff);

if(1 == RingGroup) histogramTProfile2D[0]->Fill(PhiBin+12, RingGroup, nMipEff);

}

} //end EPD hit loop

Note a few things:

1) nMipEff and nMip: nMip, or the number of miniminally ionizing particles, comes from a calibrated EPD. This number statistically tells you how many particles passed through a tile. Since it's statistical it isn't an integer. If the dataset isn't well calibrated this might have problems, but even so it probably works well within a small time window (relative hits are all that matter). If the MIP signal is extremely small it's probably not real, so it's good to put a minimum cut somewhere around 0.3. This protects against bad pedestals as well. The MIP distribution is a Landau, which has no average. If a very slow particle passes (that is, not a MIP) through the detector it may give an anomalously large nMIP (like 50) which could obviously bias the result. Use a maximum nMip, so that if nMIP > #, nMIP = #. I used 3 here and called this quantity nMipEff. nMipEff ends up being the weight of the v1.

2) A tile has some finite size and a not-very-easy to describe shape (https://drupal.star.bnl.gov/STAR/subsys/epd/mapping/geometry-information-use-codes). The size of the tile limits the spatial resolution of the hit. One can find a geometric center to a tile, but that isn't the center of the probability distribution. The StEpdGeom class allows you to either a) randomly sample the tile (StEpdGeom::RandomPointOnTile), or b) get the center of the tile (StEpdGeom::TileCenter). These arrive as TVector3s. To get the phi should be clear. To get the pseudorapidity make sure you subtract off the primary vertex of the collision. a) is generally preferred. This may seem odd, but if you use b) you get a saw-shaped dN/deta distribution. For event planes this distinction barely matters (things quickly average out), but I wanted to mention it in case it is a surprise.

3) I have my own functions defined for determining the ring goup and the phi bin. This was just to make unique tile IDs. Note that the very first ring only has 12 tiles, whereas all other rings have 24.

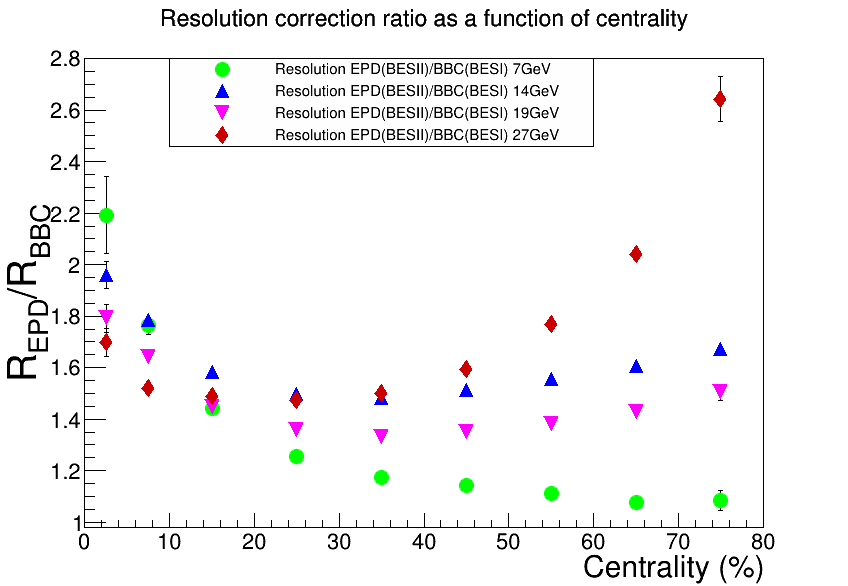

Results:

It is important to note that these depend on the specific (arbitrary) centrality determination and were found over relatively small subsets of the data. They are not optimal for the full datasets they represent. The resolution of the most central and most peripheral bins might not be accurate.

I would suggest starting with these, running over all of the data once (with the proper number of EP correction iterations and making your EP corrections dependent on time) and calculating a new weights, then comparing the resolution with the new weights to those quoted below. If the variation is small (which I expect) then this single iteration is sufficient. If not you may need to consider running over the data a few more times. Not doing this refinement is okay, it just may not be optimal. I have done no testing, so it is possible that it is not necessary to do any such refinement at all.

2019 7GeV (pre-production data):

double lin[9] = {-0.00286554, -0.00568233, -0.00605378, -0.00629579, -0.00504539, -0.00241481, 9.71163e-06, 0.0018728, 0.00121921};

double cub[9] = {0.000813921, 0.00147716, 0.0020958, 0.00261875, 0.00269278, 0.00238397, 0.00168866, 0.000855601, 0.00026617};

float SubEventPlaneResolution[9] = {0.202267, 0.275439, 0.354029, 0.41841, 0.451356, 0.455185, 0.407701, 0.31789, 0.194232};

float FullEventPlaneResolution[9] = {0.333761, 0.484598, 0.639614, 0.738251, 0.7649, 0.749084, 0.67515, 0.544924, 0.338061};

2019 14.5GeV (pre-production data):

double lin[9] = {-0.00615184, -0.00871193, -0.0113521, -0.0131902, -0.0134869, -0.0117586, -0.00823323, -0.00425391, -0.00133439};

double cub[9] = {0.000703957, 0.00106953, 0.00150214, 0.00187265, 0.00203782, 0.00191302, 0.00143622, 0.000819686, 0.00029455};

float SubEventPlaneResolution[9] = {0.22549, 0.312506, 0.404023, 0.476615, 0.513339, 0.512029, 0.451022, 0.348419, 0.213035};

float FullEventPlaneResolution[9] = {0.31373, 0.428215, 0.541702, 0.625349, 0.665167, 0.663778, 0.596575, 0.473704, 0.296925};

2019 19GeV (pre-production data):

double lin[9] = {-0.00492359, -0.00758976, -0.00977947, -0.0117094, -0.012088, -0.0111248, -0.00819093, -0.00480956, -0.0017971};

double cub[9] = {0.000513127, 0.000819023, 0.00113311, 0.00141833, 0.00154068, 0.00148565, 0.00114832, 0.000692142, 0.000262};

float SubEventPlaneResolution[9] = {0.202267, 0.275439, 0.354029, 0.41841, 0.451356, 0.455185, 0.407701, 0.31789, 0.194232};

float FullEventPlaneResolution[9] = {0.282324, 0.380124, 0.480704, 0.558767, 0.596956, 0.601311, 0.546086, 0.435106, 0.271387};

27GeV:

Mike:

double lin[9] = {-1.950, -1.900, -1.850, -1.706, -1.438, -1.340, -1.045, -0.717, -0.700};

double cub[9] = {0.1608, 0.1600, 0.1600, 0.1595, 0.1457, 0.1369, 0.1092, 0.0772, 0.0700};

float FullEventPlaneResolution[9] = {0.351, 0.418, 0.464, 0.501, 0.506, 0.496, 0.428, 0.327, 0.209};

Isaac (worse for my most peripheral bin only):

double lin[9] = {-0.0146441, -0.0645661, -0.0531274, -0.0435543, -0.0386959, -0.03328, -0.0258429, -0.0171934, 0.011403};

double cub[9] = {0.00409298, 0.00529479, 0.00460861, 0.00407853, 0.00383382, 0.00344899, 0.00281276, 0.00189258, -0.0011986};

The above analysis happened before the official centrality definition for 27GeV. I reanalyzed the data using:

<input URL="catalog:star.bnl.gov?production=P19ib,filetype=daq_reco_PicoDst,trgsetupname=27GeV_production_2018,tpx=1,filename~st_physics,sanity=1,storage!=HPSS,runnumber[]19131037-19133000" preferStorage="local" singleCopy="true" nFiles="all" />

Mike's weights with updated centrality definition (analyzed by Isaac):

double lin[9] = {-1.950, -1.900, -1.850, -1.706, -1.438, -1.340, -1.045, -0.717, -0.700};

double cub[9] = {0.1608, 0.1600, 0.1600, 0.1595, 0.1457, 0.1369, 0.1092, 0.0772, 0.0700};

float FullEventPlaneResolution[9] = {0.241866, 0.324851, 0.40741, 0.468789, 0.50725, 0.511219, 0.455619, 0.342736, 0.200827};

float FullEventPlaneResolution_error[9] = {0.00179166, 0.00121374, 0.000906259, 0.000745863, 0.000650167, 0.000652164, 0.000769749, 0.00163387, 0.00289291};

float SubEventPlaneResolution[9] = {0.172663, 0.23377, 0.296367, 0.344494, 0.375531, 0.378778, 0.334032, 0.247157, 0.142936};

Isaac w/ updated centrality definition:

double lin[9] = {-0.0063546, -0.00824664, -0.010106, -0.011256, -0.0113069, -0.0100722, -0.00714236, -0.00369874, -0.00131937};

double cub[9] = {0.000465516, 0.000662303, 0.000877767, 0.00104741, 0.00112554, 0.00105999, 0.000785157, 0.000415431, 0.000144557};

float FullEventPlaneResolution[9] = {0.253307, 0.327272, 0.407403, 0.468775, 0.507336, 0.511691, 0.45662, 0.34295, 0.201776};

float FullEventPlaneResolution_error[9] = {0.0017051, 0.00120404, 0.000906795, 0.00074631, 0.000650376, 0.000651628, 0.000767916, 0.00163347, 0.00288008};

float SubEventPlaneResolution[9] = {0.181003, 0.235577, 0.296362, 0.344483, 0.375601, 0.379164, 0.334825, 0.247317, 0.14362};

.png)

- iupsal's blog

- Login or register to post comments