- jeromel's home page

Run 10 MDC, testing data rate to HPSS

General

At every HPSS software revision (API changes), the experiments re-test the data bandwidth to ensure that the rates and functionality can be sustained over a minimum test period of 24 hours without the components exhibiting problems. All experiments pushed data at the same time, also to make sure that network connectivity does not play a fundamental role in the performance.

Current capacities

As it stands, the STAR RAW Class Of Service (aka COS) is set as follows (limitations are explained):

- 12 drives are available. For a 10 GB file, the drive speed was measured to be 65 MB/sec/drive. Since not all drives can be allocated to data migration (at least a few need to be left for data retrieval from HPSS), we assume 10 migrating drives, i.e. a maximum capacity of 65x10 = 650 MB/sec IO rate for migrating data from cache to tape

- The RAW COS has 8 LUNs (or arrays), each able to sustain 200 MB/sec. A total of 1.6 GB/sec is then possible, BUT only half of it can be counted for incoming data (streaming from the CH to the HPSS cache), since the same data also needs to be taken off the cache (cache to tape). Hence, a cache write rate greater than 800 MB/sec would cause the cache to fill up.

- Note that each additional 200 MB/sec (one more LUN) costs 30 K$

- Each additional drive costs 8 K$

- => for +200 MB/sec, one needs at least a 46 K$ investment

- The network connectivity from the CH to HPSS is a redundant, fail-over 10 Gb/s line. Hence, the maximum network data rate is 1280 MB/sec. This should only be considered as a peak rate (count only 1 GB/sec of network transfer from the CH to HPSS, to allow for overhead)

- Note 1: after investigation with ITD networking, the following possible plans were defined:

  - The fail-over line could be used as a secondary 10 Gb/s line if need be, increasing the network bandwidth capacity to 2 GB/sec

  - We requested an investigation into whether an additional 10 Gb line exists; if it does, we would then have an asymmetric fail-over network (2x10 Gb active, 1x10 Gb in fail-over mode)

- In theory, each STAR mover has 2x1 Gb network connectivity through channel bonding. In practice, the channel bonding fails to balance the rate between the two lines, and one is always under-utilized. STAR has 5 movers, hence, counting only one functional line per mover, only 5 Gb/sec is achievable, i.e. a 640 MB/sec LAN speed for the movers

- Note: the plan is to replace all network cards with 10 Gb/sec capable cards. A late purchase (i.e. late supplemental funds) caused a slip in planning, so higher rates will not be possible for the start of Run 10. We may consider leveraging an access period to upgrade the movers in a rolling fashion.
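The limits listed above can be combined into a simple bottleneck estimate: the rate that can be sustained into HPSS is capped by the slowest stage. The sketch below is a hypothetical back-of-the-envelope model, not an HPSS tool, and its function names are illustrative only. It assumes the post's convention of 1 Gb/sec = 128 MB/sec (so 10 Gb/s = 1280 MB/sec), 10 of the 12 drives allocated to migration, one usable 1 Gb line per mover, and the derated ~1 GB/sec CH to HPSS network taken as 1000 MB/sec.

```python
"""Back-of-the-envelope capacity model for the STAR RAW COS (illustrative sketch)."""

MB_PER_GB_LINK = 128  # post's convention: 1 Gb/sec ~ 128 MB/sec (10 Gb/s -> 1280 MB/sec)


def gbps_to_mbps(gbps: float) -> float:
    """Convert a link speed in Gb/sec to MB/sec using the post's convention."""
    return gbps * MB_PER_GB_LINK


def stage_limits() -> dict[str, float]:
    """Return the ceiling (MB/sec) of each stage between the CH and tape."""
    return {
        # 10 of the 12 drives migrate data, 65 MB/sec each (measured on 10 GB files)
        "tape migration": 10 * 65.0,
        # 8 LUNs x 200 MB/sec, halved because every byte is written in and read out
        "disk cache": 8 * 200.0 / 2,
        # 10 Gb/s line (1280 MB/sec raw) derated to ~1 GB/sec; 1000 is an assumption
        "CH to HPSS network": 1000.0,
        # 5 movers, only one of the two bonded 1 Gb lines effectively used
        "mover LAN": 5 * gbps_to_mbps(1),
    }


def bottleneck(limits: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its rate; it caps the sustainable throughput."""
    name = min(limits, key=limits.get)
    return name, limits[name]


def upgrade_cost_kusd(extra_luns: int, extra_drives: int) -> int:
    """Cost in K$ of adding LUNs (30 K$ each) and drives (8 K$ each)."""
    return 30 * extra_luns + 8 * extra_drives


if __name__ == "__main__":
    limits = stage_limits()
    for stage, rate in limits.items():
        print(f"{stage:>20}: {rate:7.1f} MB/sec")
    stage, rate = bottleneck(limits)
    print(f"bottleneck: {stage} at {rate:.0f} MB/sec")
    print(f"1 LUN + 2 drives: {upgrade_cost_kusd(1, 2)} K$")
```

With these inputs the mover LAN (640 MB/sec) is the tightest stage, just below the 650 MB/sec tape migration ceiling, in line with the ~640 MB/sec maximum quoted later in the post. One LUN plus two drives adds up to 46 K$ (30 + 2x8); the counts can be varied to explore other upgrade mixes.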

Planning

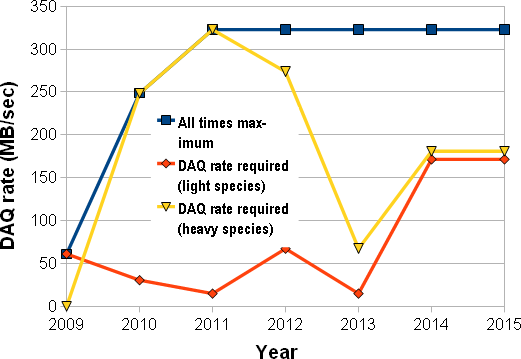

The outer years were planned according to CSN0474: The STAR Computing Resource Plan, 2009 projections (based on an initial run plan made in 2008 and extending up to 2015). The planned rates are shown in the figure below:

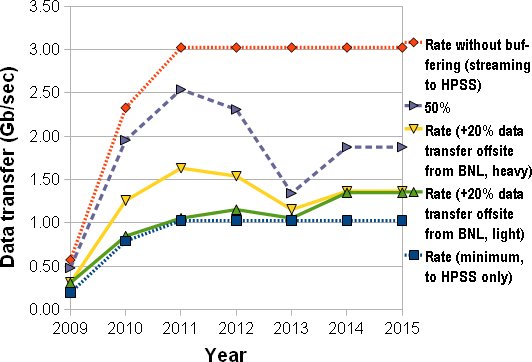

The planned sustained rate was projected to be 322 MB/sec, and the HPSS specification was set at twice the sustained rate to accommodate peak rates (hence the current 640 MB/sec). The LAN/WAN capacities were sized so that, in addition to the transfer to HPSS, offsite transfers (KISTI, etc.) in near real time would be possible. In Gb/sec, this translates to the figure below:

At least 3 Gb/sec of LAN and WAN connectivity was requested (this includes WAN traffic going OUT of BNL). This was tested in February 2009 with a BNL to KISTI data transfer, which showed that a sustained rate of 1 Gb/sec across continents was feasible without impact on data acquisition.
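The planning figures can be checked with a few lines of arithmetic. This minimal sketch assumes the same 1 Gb/sec = 128 MB/sec convention the post uses elsewhere (e.g. 5 Gb/sec for 640 MB/sec); it converts the 322 MB/sec sustained projection and its x2 peak specification to Gb/sec.

```python
MB_PER_GB_LINK = 128  # 1 Gb/sec ~ 128 MB/sec, as in the post (5 Gb/sec -> 640 MB/sec)

SUSTAINED_MBPS = 322.0  # projected sustained rate from the 2009 resource plan
PEAK_FACTOR = 2         # HPSS spec sized at twice the sustained rate


def mbps_to_gbps(mbps: float) -> float:
    """Convert MB/sec to Gb/sec using the post's convention."""
    return mbps / MB_PER_GB_LINK


peak_mbps = SUSTAINED_MBPS * PEAK_FACTOR
print(f"sustained: {SUSTAINED_MBPS:.0f} MB/sec = {mbps_to_gbps(SUSTAINED_MBPS):.2f} Gb/sec")
print(f"peak spec: {peak_mbps:.0f} MB/sec = {mbps_to_gbps(peak_mbps):.2f} Gb/sec")
```

The peak specification of 644 MB/sec (about 5.03 Gb/sec) rounds to the 640 MB/sec quoted above, and the ~2.5 Gb/sec sustained rate sits below the 3 Gb/sec of connectivity that was requested.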

For the future, we planned for:

- The current maximum achievable rate is around 640 MB/sec.

- RAW has 12 drives in 2010 (only 10 could be allocated to data migration) and +4 in 2011, when the data rates are expected to plateau
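As a rough projection of the tape-side ceiling, the sketch below assumes that the 65 MB/sec per-drive rate measured above still holds and that all 4 drives added in 2011 go to migration. That split is hypothetical; the post only gives the +4 total.

```python
PER_DRIVE_MBPS = 65  # measured drive speed for 10 GB files

# Drives allocated to migration per year. The 2011 entry assumes all 4 new drives
# migrate data (hypothetical split; the post only gives the +4 total).
MIGRATION_DRIVES = {2010: 10, 2011: 10 + 4}

for year, drives in MIGRATION_DRIVES.items():
    rate = drives * PER_DRIVE_MBPS
    print(f"{year}: {drives} drives x {PER_DRIVE_MBPS} MB/sec = {rate} MB/sec")
```

Under these assumptions, tape migration would rise from 650 to 910 MB/sec, so other stages (such as the 640 MB/sec mover LAN) would then set the limit.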

Results

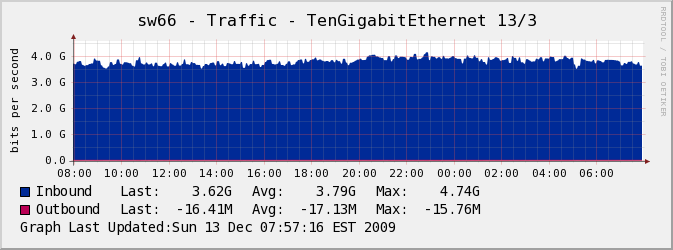

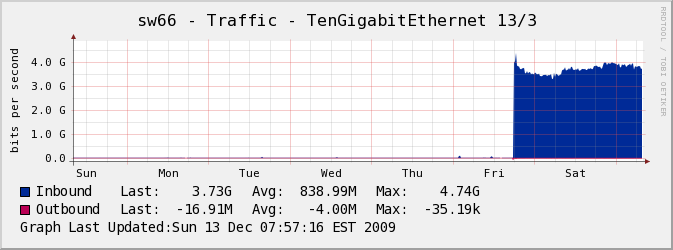

The test ran from Friday, December 11th to Sunday, December 13th.

For FY10, a sustained rate of ~3.73 Gb/sec (~480 MB/sec) was achieved during this period.

However, we allocated 10 drives to this exercise. Post-analysis showed that, if STAR intends to sustain a data rate of around 4 Gb/sec, we would benefit from:

- The addition of one more LTO4 drive to sustain a higher IO rate

- At least +3 TB of HPSS disk cache to be on the safe side, as inferred for the additional 40 MB/sec (missing to reach 4 Gb/sec) over a 24-hour write/read period
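The cache estimate above follows from simple rate x time arithmetic. This sketch, again assuming 1 Gb/sec = 128 MB/sec, shows the measured shortfall against 4 Gb/sec and the disk needed to absorb the 40 MB/sec excess used in the post for 24 hours. It treats 1 TB as 10^6 MB, which is an assumption; with 1 TB = 1024^2 MB the result is ~3.3 TB, still above 3 TB.

```python
MB_PER_GB_LINK = 128   # 1 Gb/sec ~ 128 MB/sec, as elsewhere in the post
MEASURED_GBPS = 3.73   # sustained rate achieved during the test
TARGET_GBPS = 4.0      # rate STAR intends to sustain
EXCESS_MBPS = 40       # additional rate used in the post's cache estimate
WINDOW_S = 24 * 3600   # 24-hour write/read period, in seconds

measured_mbps = MEASURED_GBPS * MB_PER_GB_LINK  # ~477 MB/sec
target_mbps = TARGET_GBPS * MB_PER_GB_LINK      # 512 MB/sec
shortfall_mbps = target_mbps - measured_mbps    # ~35 MB/sec; the post works with 40

# Data piling up in the disk cache if it arrives 40 MB/sec faster than it migrates
cache_mb = EXCESS_MBPS * WINDOW_S
print(f"measured: {measured_mbps:.0f} MB/sec, target: {target_mbps:.0f} MB/sec")
print(f"shortfall: {shortfall_mbps:.1f} MB/sec")
print(f"cache for 24 h at {EXCESS_MBPS} MB/sec: {cache_mb / 1e6:.2f} TB (decimal)")
```

About 3.46 TB of backlog accumulates in a day at 40 MB/sec, consistent with the "at least +3 TB" figure.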
