Offline QA

Offline QA Shift Resources

STAR Offline QA Documentation (start here!)Quick Links: Shift Requirements , Automated Browser Instructions , Shift Report Instructions , Online RunLog Browser

|

|

Automated Offline QA BrowserQuick Links: Reference Histograms |

QA Shift Report FormsQuick Links: Issue Browser/Editor, Dashboard, Report Archive |

QA Technical, Reference, and Historical Information

QA Shift Report Instructions

STAR QA Shift Report

- If you know that the experiment is taking data, but no fast offline data is appearing in the QA Browser, please make an effort to understand why there is no data (look at the production hypernews, for example) and post this issue to the Electronic Shift Log (under the QA subdetector) if it has not already been posted. Please also remember to close any such opened issues if the problem is resolved.

- The report is best filled out DURING your shift, not after. This is simply because it is easiest to fill out the form while looking at the data.

- The plan is: one report per shift, many data entries per report.

- You should never select the Back button of your web browser during the report process (and you should never need to do so).

- Do not be afraid to try things out. Until you submit the full report, everything can be modified and reviewed if it has been saved. Also, you can try things out by using a "play" session (see below).

- If you are experiencing problems, try closing the Shift Report window, clearing your browser's cache and restarting the Shift Report forms - it should not lose your data. Additionally, be sure that cookies and javascript are enabled in your browser. If problems persist, please contact Gene Van Buren at gene@bnl.gov or (631-344-7953).

- Choose a session name

- You can also continue a session which was started earlier. This name has no meaning other than to identify one QA Shift Report from another in case more than one person is working on a report simultaneously, and to allow one to restore a report that they have already started if their browser or computer crashes. Your chosen session name should appear next to Session in the Menu. You can also make a copy of another session.

- If you do not see a session name in the Menu, or the name Unknown appears, please try selecting a session again. Problems will arise otherwise.

If you are learning how to use the forms, you may wish to create a "play" session , where a toy report can be generated, but nothing is permanently recorded (the report is not archived, and issues are not attached to runs nor updated for the "last used" lists). Once a session is created, it cannot be changed to or from being a play session, but such sessions can be copied into new play or non-play sessions. - Fill out the Shift Info form.

- Select the Save/Continue button at the bottom of the form when finished. If you are unsure about an item, it can be edited later. If you leave this form (i.e. select any options under Manage Contents) before selecting Save/Continue, you will lose the information you have entered.

- Add data entries

- Do so for each QA job (histogram set) examined by making the appropriate selection from the Add A Data Entry For... submenu. When combining files for QA, use the pull down menu of trigger types instead of typing in a file sequence. Again, select the Save/Continue button when finished with each data entry. And again, these items can be edited later if necessary, and leaving this form (i.e. selecting anything under Manage Contents) before selecting Save/Continue will result in loss of information on the form.

- Focus on issues

- Issues are maintained with the Issue Browser/Editor, which can be reached via Open Issue Browser/Editor in the Menu, or from the data entry forms (where only issues for that data type are listed). Issues can then be made active for a given data entry to indicate that the issue was seen there. The intent here is that opened/created issues may be used repeatedly and can be modified or appended later (if more detail is learned, for example) until they are eventually closed/resolved. A name serves as a brief description of each issue, and a detailed description is available via the Browser/Editor, along with any image attachments for the issue. Each issue may also be given tags (by subsystem/category [required], or keywords [optional]) to aid in finding issues or correlating them later. To be listed in the data entry form of a given data type, an issue must be allowed for that type.

- You may need to Refresh Issues when working on an entry for any newly created issues to appear. Please make an effort to search through the existing issues before creating a new issue. A filter tool appears to make searching for a related issue easier.

- Manage the contents

- After entering each portion of the report, a current contents listing is shown. Each portion may be viewed (by clicking on the item), deleted (by marking the checkbox beside the item and clicking on the Delete button), edited, or copied.

- Submit the QA Shift Report

- When ready to submit, select Finish & Submit Report. This takes you to the final information to be entered for the report. You can save this information and submit later if you decide you are not yet ready to submit the full report. You can also choose not to delete the session after submitting, so that it can be reused and modified another time.

At any time, you may (using the Menu):

- Browse or edit issues by selecting Open Issue Browser/Editor in the Menu.

- View the currently entered portions of the report by selecting View Current Contents.

You may subsequently edit these files or copy their contents into a new data report. - Re-enter or edit the Shift Info by selecting (Re)Enter Shift Info.

- Erase the entire report and start over by selecting Erase Sesson & Start Over.

- Stop the current session and choose to start a new one, or continue a different one, by selecting Session.

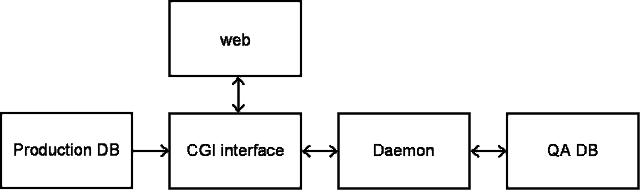

OfflineQA DB documentation

QA Browser

To be written.

QA Shift Reports

Three tables in the OfflineQA database are currently used: QAIssues, QARunFileSeqIndex, and QAShiftReports. Their structure and indexing are defined by these create statements:CREATE TABLE QAIssues (ID int not null primary key, Name tinytext,

Description text, closed bool default 0,

timeFirst timestamp default CURRENT_TIMESTAMP,

timeLast int default 0, timeFRP int default 0, timeRDP int default 0,

timeRNT int default 0, timeMDP int default 0, timeMNT int default 0,

flagsFRP bool default 0, flagsRDP bool default 0,

flagsRNT bool default 0, flagsMDP bool default 0, flagsMNT bool default 0);

ALTER TABLE QAIssues ADD INDEX(timeFRP,ID);

ALTER TABLE QAIssues ADD INDEX(timeRDP,ID);

ALTER TABLE QAIssues ADD INDEX(timeRNT,ID);

ALTER TABLE QAIssues ADD INDEX(timeMDP,ID);

ALTER TABLE QAIssues ADD INDEX(timeMNT,ID);CREATE TABLE QArunFileSeqIndex (runYear smallint not null,runDay smallint not null,

run int not null default 0, seq varchar(16) not null, idx tinyint(1) not null,

RepType tinyint(1) not null, RepNum varchar(16) not null, link text,

primary key (runYear,runDay,run,seq,RepType,idx));

ALTER TABLE QArunFileSeqIndex ADD INDEX(RepType,RepNum);CREATE TABLE QAShiftReports (RepType tinyint(1) not null, RepYear int not null,

RepMonth int not null, RepText mediumtext, RepNum varchar(16) not null,

primary key (RepType,RepNum));

ALTER TABLE QAShiftReports ADD INDEX(RepType, RepYear, RepMonth);It would still be good to add a way to quickly identify in which runs a specific issue was seen.

STAR QA Documentation

Lanny Ray, University of Texas at Austin

June 11, 2002

Last Update, Feb. 18, 2009

Index

-

Information for the Fast Offline QA Shift Crew for Run 9

- Information for QA Shifts

- Summary of Fast Offline QA Shift Duties - Run 9

- QuickStart Instructions for the Auto QA Browser - Run 9

- Fast Offline QA Histogram References and Descriptions

- Fast Offline QA Shift Report Preparation and Instructions for Run 9

- Offline QA Shift Report Web Based Form

- Manual generation of QA histograms

-

General References

-

List of Contacts

-

QA Documents for Previous Runs

- Link for Runs 2 - 7

- See child pages below for other Runs (after 7, but prior to current Run)

-

Technical Documentation

webmaster

Last modified: Tue Feb 13 10:42:23 EST 2007

Configuring AutoQA for subsystems

Configuring AutoQA for subsystems

This document is intended for subsystem expert who configure the AutoQA reference histogram analysis in Offline QA for their subsystem. Please note that there are three parts to this process:

- Configuring histogram descriptions

- Configuring the data to use as a histogram reference

- Configuring the analysis parameters.

- All configurations

- Every configuration begins by using the QA browser. Please enter your RCF username and then select "2.1 shift work". The next step you take depends on whether you wish to view data and reference, or just the reference alone. When using the AutoQA system, please take note of the "Help" buttons which are situated at the top right corner of any panel for which contextual help exists and feel free to click these at any time.

- I. Configuring histogram descriptions

- To aid the people who review the QA histograms, each histogram's title and an informative description are to be maintained. Note that descriptions span any trigger types (i.e. a separate description is not needed for minbias histograms vs. high tower histograms).

- If this is all you want to do, you may simply view the references alone for this: use for "View reference histograms" from page 22 of the QA Browser (page 22 is titled "Fast offline, select cuts"), then click "OK on the next page to continue.

- It is also fine to opt for a comparison with real data as it does not affect this step; i.e. this can be done concerrently with configuring reference histograms and/or configuring analysis parameters.

- Use the menus to select the latest references (you may need to click the little arrows on some browsers), and then click on the "Analyze" button which appears.

- After a few moments spent generating graphics of the histograms, the full list of histograms will appear. Scroll to the histograms for your subsystem, and click "Examine" next to any one of them. It is also worth being aware that double clicking on any histogram name will reveal its title, and clicking on "(more)" will also reveal its description (without having to click "Examine").

- After a few moments spent retrieving the individual histogram, a page will display showing details of the histogram. Click on the "Edit (experts only!)" button. Once in Edit mode, you will stay there until you explicitly leave it through a similar action, and you will see a red-dashed border while in this mode.

- The histogram title and description will appear in the middle of the page. Edit these as appropriate, and click the "Update description" button.

- Use the left panel to navigate to the next histogram on which you wish to work (you can use "Prev"/"Next", the table of pages, or select "All" to return to the full list). Then repeat steps 4-5 above as appropriate.

- If this is all you want to do, you may simply view the references alone for this: use for "View reference histograms" from page 22 of the QA Browser (page 22 is titled "Fast offline, select cuts"), then click "OK on the next page to continue.

- II. Configuring reference histograms

- Each histogram's analysis consists of a comparison against a reference histogram.

- Locate a run, or set of runs, where the subsystem is believed to be in proper working order to define a reference.

- If using a single run, opt for "Select auto-combined jobs and compare to a reference histogram."

- Another way to get there is to arrive via the "Check Offline QA" link for any given run from the RunLog, then checking the box so that analysis options are shown, and then selecting plots for the file stream of choice

- If using a set of runs, opt for "Combine several jobs and compare to a reference histogram."

- If unsure of a good run, you will need to hunt through available runs for one which you believe looks good.

- If using a single run, opt for "Select auto-combined jobs and compare to a reference histogram."

- Find the run(s) of interest among those listed and click the OK button.

- Use the provided menu to select the appropriate reference. By default, what the system believes is the appropriate reference (same Run Year, Trigger Setup, and lated Version) is automatically selected. The "Analyze" button will appear when a reference is selected, and you should then click it.

- Repeat steps 3-4 from "Configuring histogram descriptions" listed above. The data histogram and any previous reference will appear near the top of the panel.

- It is important to remember that reference histograms may be general, or specific to a trigger type. If a reference histogram does not exist for a specific trigger type, the analysis will be made comparing to the general one (if it exists). Please consider which purpose you want this histogram to serve and select the trigger type for which it applies appropriately.

- Click the "Mark for Updating Reference" button.

- Repeat the selection of histograms you wish to choose as reference and steps 5-6 until you are ready to submit the entire set of new histograms for reference.

- Click on the "Marked for update" button near the upper left.

- The list of marked histograms will appear, along with a options for the new reference set. By default, the same Run Year and Trigger Setup will be selected, and any other Trigger Setups from the current operations will be listed as alternatives. Whichever is chosen, a new version number will be given automatically. Once the selections are made, a "Submit new reference" button will appear.

- Before submitting, please input comments, such as "BSMDe histograms updated for new dead regions".

- Click the "Submit new reference" button.

- Locate a run, or set of runs, where the subsystem is believed to be in proper working order to define a reference.

- III. Configuring analysis parameters

- Each analysis involves a comparison method ("mode"), possible options for that mode, and a value uses as a cut on whether the analysis passes or fails.

- Repeat steps 1-4 from "Configuring histogram descriptions" listed above. The histogram analysis parameters will appear near the bottom of the panel.

- It is important to remember that analysis parameters may apply to a histogram of any trigger type ("general"), or to a specific trigger type. If analysis parameters do not exist for a specific trigger type, the general analysis parameters for this histogram will be used (if they exist). Please consider which purpose you want these parameters to serve and select the trigger type for which they apply appropriately.

- Select an analysis mode and enter any options. The default of Kolmogorov Maximum Distance and no options works pretty well. More details about the possible modes are listed below these instructions.

- Select a numerical value between 0 and 1 to use as a pass/fail cut.

- For guidance, something near 0.8 will likely catch most discrepancies, but it may be wise to choose a tighter value (e.g. 0.9 or 0.95) initially if no trend records for this analysis are available. The "View Trends" button exists to help in making this selection, presenting the records from any previously set analysis used when the QA shift examined data.

- Click the "Update Cut" button to store the new parameters for use the next time an analysis is run (it is important to be aware that the currently shown analysis and parameter that were used will not update to reflect the new parameters - it must be run again to do so).

- If an analysis is no longer desired to be used, clicking the "Delete Cut" button will exclude it from future analyses. This may be desirable in a case such as where a specific trigger typed analysis may be supplanted by a general analysis for the histogram.

- Use the left panel to navigate to the next histogram on which you wish to work. Then repeat steps 2-6 above as appropriate.

- To assess the performance of the new analysis parameters, click on "Go back ... Back to data selections" near the very top and compare data and reference to see the scores.

___________

Analysis Modes

Currently, three modes are available:

- χ2 Probability: using ROOT's TH1::Chi2Test(). This test can be very sensitive, giving results near 0 for anything which is not a very good match.

- Kolmogorov Probability: using ROOT's TH1::KolmogorovTest(). This test is also quite sensitive, but a bit more forgiving that the χ2.

- Kolmogorov Maximum Distance: using ROOT's TH1::KolmogorovTest() with the "M" option, and taking 1.0 minus the maximum distance result (so that a small maximum distance gives a good score). This test tends to give much better scores to histograms which are less perfect matches, and seems to provide reasonable room for discrepancies, so it is the most commonly used test.

If you are interested in adding another analysis mode, please bring the topic to the starqa-hn hypernews forum. Such a mode can be a custom function written as a plug-in, or an existing root function.

___________

Last updated Feb. 24, 2016 (G. Van Buren)

Fast Offline QA Histogram References and Descriptions

Current (Run 10) descriptions for:

Old links:

QA Reference for General Histograms - Run 9

QA Reference for Trigger Specific Histograms - Run 9

Fast Offline QA and DST Production QA Reference Histograms - Run 8

CL Histograms for Run 10

STAR Offline QA Shift Histogram Description - Run 10

Note: Not all reference plots may be ideal, be sure to carefully read the descriptions.

This page contains the reference histograms and descriptions for the Offline QA system for Run 10. These pages should correspond exactly to the jobs viewed in the Offline QA Browser. However, STAR is a dynamic experiment and the plots generated for QA will change several times throughout the run. If this reference page seems out-of-date, or if you find a job that would make a better reference, please email the QA hypernews list here.

Other links:

Elizabeth W. Oldag for the QA Team.

January 25, 2010.

Page Index for QA Shift Plots

- Page 1

- Page 2

- Page 3

- Page 4

- Page 5

- Page 6

- Page 7

- Page 8

- Page 9

- Page 10

- Page 11

- Page 12

- Page 13

1. StECL event primary vertex check: Primary vertex finding status for run; events with (1) and without (-1) final vertex. The relative fraction of events with primary vertex depends on trigger, beam diamond width and position.

2. StECL point: # hits tpc : Distribution of number of reconstructed space points in TPC per event. Should scale with centrality, depends on multiplicity cut and trigger. For central triggers this distribution peaks at large hit multiplicity.

3. StECL point: # hits ftpc: Not filled in this run. Distribution of number of reconstructed space points in FTPC East (solid line) and West (dashed line) per event. Scales with centrality, depends on multiplicity cut and trigger.

4. StECL point: x-y distribution of hits, tpcE: Scatter plot of the azimuthal distribution of reconstructed space points in the East TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

5. StECL point: x-y distribution of hits, tpcW: Scatter plot of the azimuthal distribution of reconstructed space points in the West TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

6. StECL point: r-phi distribution of hits, tpcE: Same as the x-y scatter plot except in polar coordinates.

1. StECL point: r-phi distribution of hits, tpcW: Same as the x-y scatter plot except in polar coordinates.

2. StECL point: z distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to z-coordinate. There is a broad peak above the nominally flat background at z=0. This is intentional and is done in order to allow identification of post-trigger pileup events. Watch out for any other anamolous steps or unusual spikes or dips. The peaks just outside |z| = 200 cm are new in Run 10.

3. StECL point: #phi distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to azimuthal coordinate (phi) for east (solid line) and west (dashed line) halves. Should be flat except for the 12-fold sector structure or masked RDOs (as shown). Dead RDO's will produce dips and gaps; hot pads or FEE cards will produce spikes.

4. StECL point: padrow distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to padrow number 1-45. Should display gradual fall-off; watch for anamolous spikes and dips. Missing RDO's cause drops in the distribution. Padrow 13 was restored in Run 9 and continues to be active in Run 10.

5. StECL point: x-y distribution of hits, ftpcE: Not filled in this run. Radial distribution of reconstructed space points in FTPC East. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

6. StECL point: x-y distribution of hits, ftpcW: Not filled in this run. Radial distribution of reconstructed space points in FTPC West. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

1. StECL point: plane distribution of hits, ftpc: Not filled in this run. Number of reconstructed space points assigned to tracks in FTPC East (solid line) and West (dashed line) in each padrow. The horizontal axis shows padrow numbers where FTPC-West is 1-10 and FTPC-East is 11-20. Padrows #1 and #11 are closest to the center of STAR. East and West should be similar in shape but West will have less magnitude than east for d+Au. Spikes indicate noisy electronics; dips indicate dead electronics.

2. StECL point: #pads vs #timebins of hits, ftpcE: Not filled in this run. Monitors the cluster quality in FTPC East. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

3. StECL point: #pads vs #timebins of hits, ftpcW: Not filled in this run. Monitors the cluster quality in FTPC West. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

4. StECL Number hits in cluster for bemc: Distribution of number of BEMC towers contributing to energy clusters.

5. StECL Energy of cluster for bemc: Distribution of energy in reconstructed clusters in EMC barrel. Should be peaked with a smooth fall off for minbias data but may display other peaks or structures for other triggers.

6. StECL Eta of clusters for bemc: Azimuthally integrated pseudorapidity distribution of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. For Run 10 the full barrel is instrumented. The distribution should nominally be flat.

1. StECL Phi of clusters for bemc: Pseudorapidity integrated azimuthal distribution (radians) of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps, unusual spikes, or sections of significantly elevated yields.

2. StECL Number hits in cluster for bsmde: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along pseudorapidity.

3. StECL Eta of clusters for bsmde: Pseudorapidity distribution of reconstructed energy clusters in the BSMD anode grid along pseudorapidity. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. Distribution should nominally be flat.

4. StECL Number hits in cluster for bsmdp: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along azimuth.

5. StECL Phi of clusters for bsmdp: Azimuthal distribution of reconstructed energy clusters in the BSMD anode grid along azimuth angle (phi in radians). Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes.

6. StECL EmcCat4 Point Energy: Energy distribution for Barrel EMC-SMD Category 4 clusters. Cat4 clusters correspond to TPC track matched clusters in the EMC barrel, BSMD-eta and BSMD-phi detectors.

1. StECL EmcCat4 Point Eta: Pseudorapidity distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

2. StECL EmcCat4 Point Phi: Azimuthal distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

3. StECL EmcCat4 Sigma Eta: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to pseudorapidity.

4. StECL EmcCat4 Sigma Phi: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to azimuthal angle.

5. StECL EmcCat4 Delta Eta: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to pseudorapidity. Should be peaked at ~0. Not filled in Run 10.

6. StECL EmcCat4 Delta Phi: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to azimuthal angle. Should be peaked at ~0. Not filled in Run 10.

1. StECL EmcCat4 Points Multiplicity: Frequency distribution of number of Category 4 clusters per event. For central triggers this distribution is peaked at large multiplicity.

2. StECL EmcCat4 Track Momenta : Distribution of TPC global track momentum for Barrel EMC-SMD Category 4 clusters. Not filled in Run 10.

3. StECL Point Flag: Distribution of Barrel EMC and SMD cluster types by Category number. There should be a reasonable fraction of Cat4 clusters as in this example histogram; report if this fraction drops significantly. For central triggers the relative fraction of Cat4 clusters should be larger than for MinBias.

4. StECL globtrk: iflag - all : Quality flag values for all global tracks. Some with large, negative values may not appear on plot; check stat. box for underflows. Majority of tracks should have iflag>0, corresponding to good, usable tracks. Refer to: dst_track_flags.html and kalerr.html for description of flag values. Note that in Runs 7-10 about half the tracks have iflag < 0.

5. StECL globtrk: tot good tracks - all: Distribution of the number of good global tracks in the TPC per trigger; including pileup. For the central trigger this distribution peaks at large multiplicity.

6. StECL globtrk: tot good tracks - ftpc: Not filled in this run. Scatter plot of good global track multiplicities in FTPC West versus FTPC East.

1. StECL globtrk: Detector ID good tracks - all: Global track detector IDs for good tracks. Refer to: /afs/rhic/star/packages/DEV00/pams/global/inc/StDetectorDefinitions.h for Detector ID codes.

2. StECL globtrk: z-DCA to Beam Axis, coarse scale: Coarse scale distribution along the z-axis (from -200 to +200 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices however indentification of individual collision vertices is unlikely.

3. StECL globtrk: z-DCA to Beam Axis: Fine scale distribution along the z-axis (from -50 to +50 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices.

4. StECL globtrk: z-DCA to Beam Axis vs tanl, tpc: Scatter plot of the tangent of the dip angle (tanl) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at tanl=0 indicate probable TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events. Cross reference for calibration errors with z-DCA to beam axis versus z-coord. of first hit on track.

5. StECL globtrk: z-DCA to Beam Axis vs z-first: Scatter plot of the z-coordinate of the first fitted hit in the TPC versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC-only global in which the first point used in the fit lies in the TPC. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at z=0 indicate TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events.

6. StECL globtrk: z-DCA to Beam Axis vs psi: Scatter plot of the azimuthal direction angle (psi) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth, straight and continuous indicating azimuthal symmetry in the tracking. Bends or offsets could indicate problems in individual TPC sectors such as voltage sags or drifts. These are best studied with high multiplicity events.

1. StECL globtrk: padrow of first hit on trk, tpc: Distribution of first fitted space point with respect to pad row number for TPC-only global tracks. Should peak at 1 with a minor peak at padrow 14 (beginning of outer sector); the latter should not be too big relative to that at row 1. Other peaks may indicate large, missing sections of the TPC read-out causing breaks, and consequently additional, false new starting points for tracks. The excess data for TPC west beyond padrow 35 is due to EEMC tracking needs.

2. StECL globtrk: z of first hit on trk, tpc: Distribution of first fitted space point with respect to z for TPC-only global tracks. Should be approx. symmetric.

3. StECL globtrk: first point: hit - helix, tpc: Residuals at FIRST point on track for TPC-only global tracks. The quantities plotted are the longitudinal (along z-axis, dashed line) and transverse (in x-y plane, solid line) differences between the coordinates of the first hit and the DCA point on the helix fit to the first point. For the transverse residual, positive (negative) values correspond to hits inside (outside) the circular projection of the helix onto the bend plane. FWHM should be less than ~ 1cm.

4. StECL globtrk: phi of first point on trk, tpc: Distribution of first fitted space point with respect to azimuthal angle (phi) for TPC-only global tracks. The solid (dashed) line is for the east (west) half of the TPC. These should be approximately equal and flat within statistics, except for the 12-sector structure.

5. StECL globtrk: chisq0, tpc: Chi-square per degree of freedom for TPC global tracks. Should peak just below 1.

6. StECL globtrk: signed impact param from prim vtx, tpc: Two-dimensional (2D) (in the transverse plane) signed impact parameter (in cm) from primary vertex for East (solid line), West (dashed line) and All (dotted line) TPC-only global tracks. Should be centered at zero depending on the TPC calibration status used in fast-offline QA production.

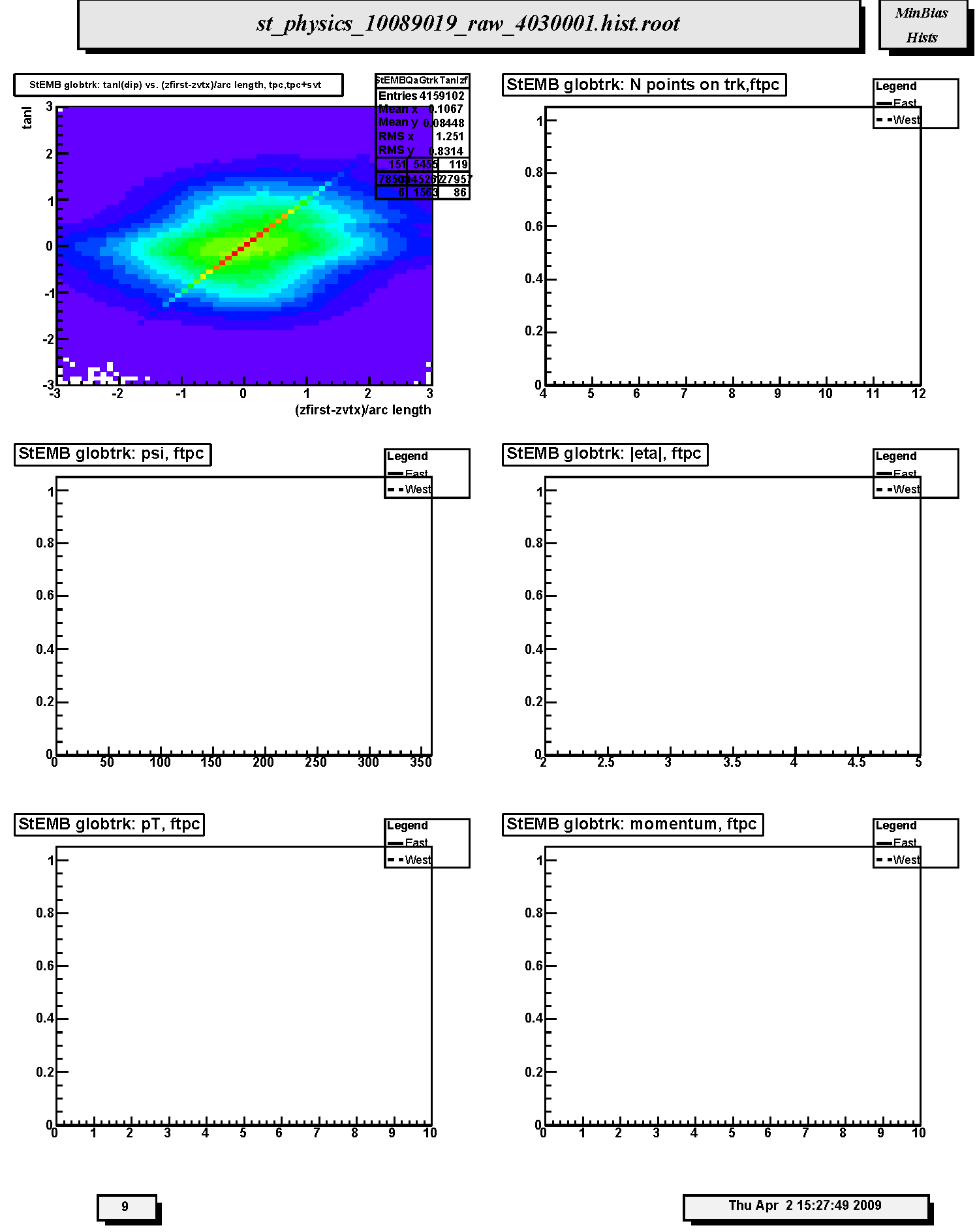

1. StECL globtrk: tanl(dip) vs. (zfirst-zvtx)/arc length, tpc,tpc+svt: Scatter plot of tangent of dip angle (tanl) versus (z_first - z_primvrtx)/arc-length for TPC-only global tracks whose first fitted point is in the TPC. Variable 'z_first' is the z coordinate of the first fitted point in the TPC. Variable 'z_primvrtx' is the z-coordinate of the primary vertex for the event. Variable 'arc-length' is 2R*arcsin(delta_r/2R) where R = track radius of curvature and delta_r is the transverse distance between the primary vertex and the first hit on track. Primary tracks lie along the 45 deg diagonal. Secondary tracks and strays lie scattered to either side. The diagonal band should appear clearly and be straight and smooth without kinks, breaks or bends.

2. StECL globtrk: N points on trk,ftpc: Not filled in Run 10. Distribution of the number of fitted points on track for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. Distributions should be similar except fewer tracks for west than east. Also depends on the relative fraction of active readout in each detector.

3. StECL globtrk: psi, ftpc: Not filled in Run 10. Azimuthal distributions for track direction angle (psi) for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be flat within statistics except for the hexagonal FTPC sector structure. There will be fewer west tracks than east for d+Au.

4. StECL globtrk: |eta|, ftpc: Not filled in Run 10. Distributions of absolute value of pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo for d+Au) and range from about 2.5 to 4.5.

5. StECL globtrk: pT, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo d+Au)within statistics.

6. StECL globtrk: momentum, ftpc: Not filled in Run 10. Total momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo d+Au) within statistics.

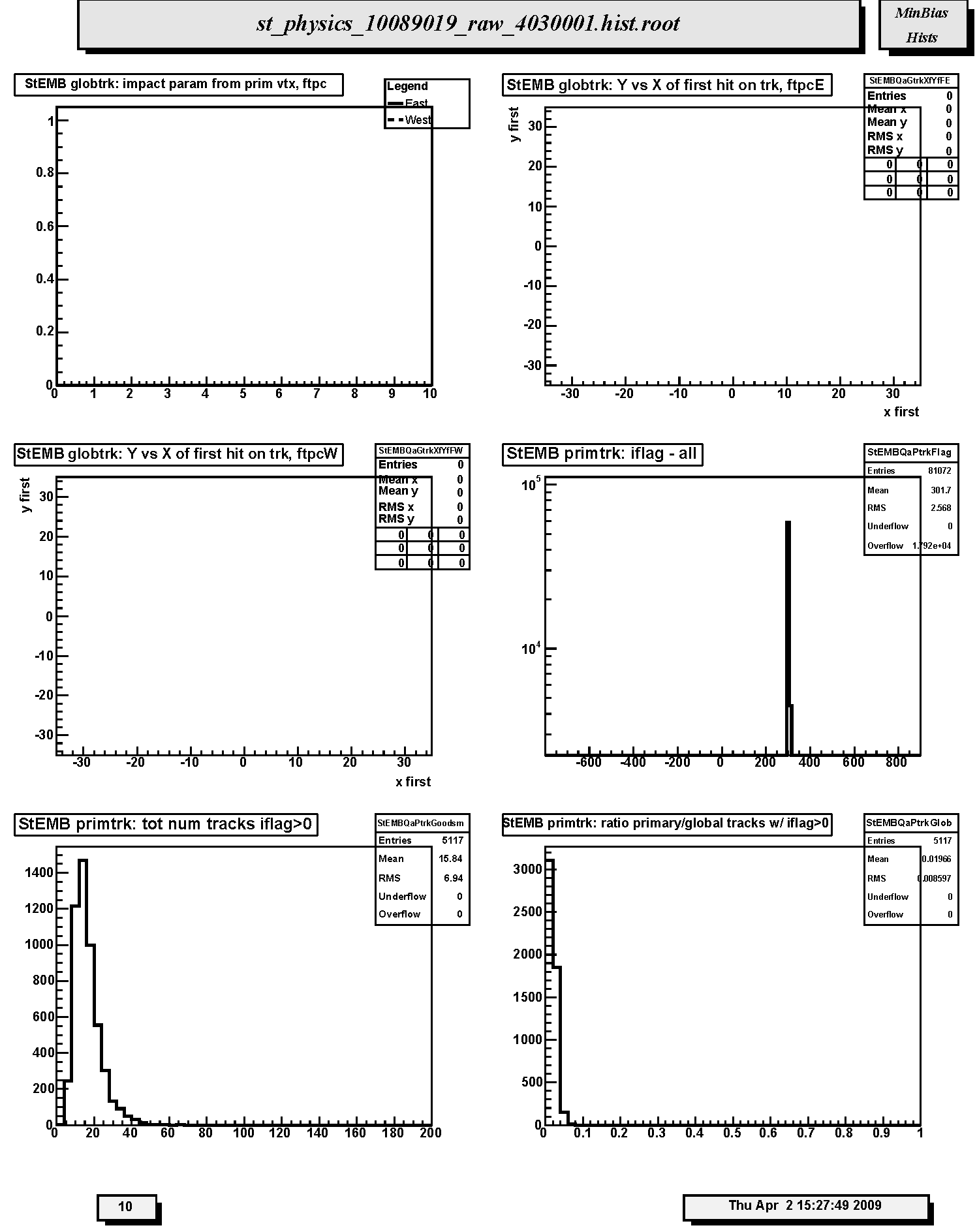

1. StECL globtrk: impact param from prim vtx, ftpc: Not filled in Run 10. Distribution of impact parameter values for global FTPC East tracks (solid curve) and West tracks (dashed curve) with respect to the main TPC global track determined primary vertex.

2. StECL globtrk: Y vs X of first hit on trk, ftpcE: Not filled in Run 10. Scatter plot of (x,y) coordinates for the first fitted space points in FTPC-East global tracks. These should be uniformly populated with hits; FTPC hexagonal structure is apparent.

3. StECL globtrk: Y vs X of first hit on trk, ftpcW: Not filled in Run 10. Scatter plot of (x,y) coordinates for the first fitted space points in FTPC-West global tracks. These should be uniformly populated with hits; FTPC hexagonal structure is apparent.

4. StECL primtrk: iflag - all: Quality flag values for all primary tracks. Some with large, negative values may not appear on plot; check stat. box for underflows. Majority of tracks should have iflag>0, corresponding to good, usable tracks. Refer to: dst_track_flags.html and kalerr.html for description of flag values.

5. StECL primtrk: tot num tracks iflag>0: Distribution of total number of primary tracks per triggered event. For central trigger data this distribution peaks at large multiplicity.

6. StECL primtrk: ratio primary/global tracks w/ iflag>0: Ratio of good primary to good global tracks for all detectors. For Au-Au this ratio usually peaks at about 0.5.

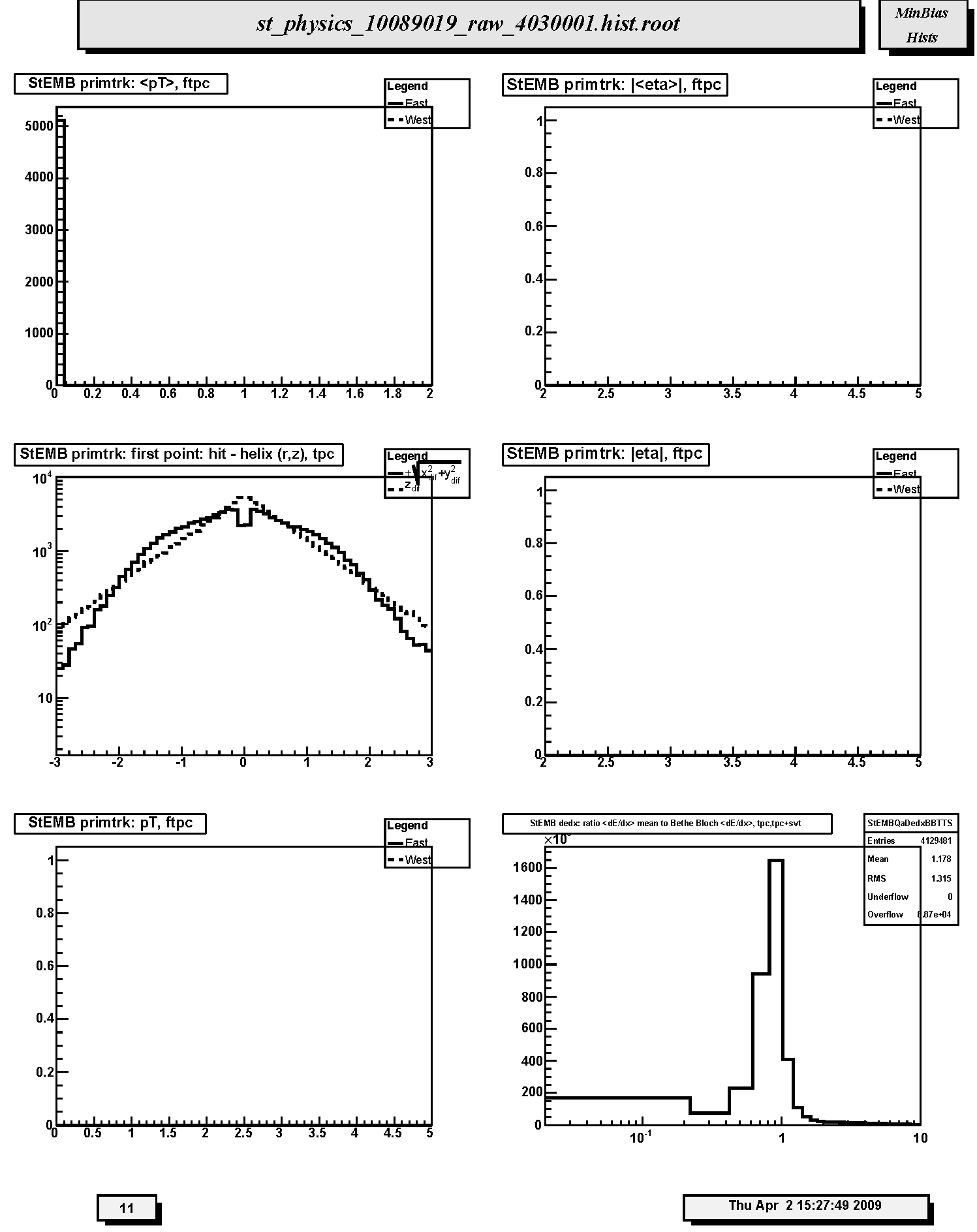

1. StECL primtrk:, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics.

2. StECL primtrk: ||, ftpc: Not filled in Run 10. Distributions of absolute value of mean pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics and range from about 2.5 to 3.5.

3. StECL primtrk: first point: hit - helix (r,z), tpc: Residuals at first point on track for TPC-only primary tracks. The quantities plotted are the longitudinal (along z-axis, dashed line) and transverse (in x-y plane, solid line) differences between the coordinates of the first hit and the DCA point on the helix fit to the first point. For the transverse residual, positive (negative) values correspond to hits inside (outside) the circular projection of the helix onto the bend plane. FWHM should be less than ~ 1cm.

4. StECL primtrk: |eta|, ftpc: Not filled in Run 10. Distributions of absolute value of mean pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics and range from about 2.5 to 3.5.

5. StECL primtrk: pT, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics.

6. StECL dedx: ratiomean to Bethe Bloch Distribution of ratio of mean dE/dx to Bethe-Bloch dE/dx for pions at the same momentum for TPC-only global tracks. Should peak at ~1. Tests calibration of charge deposition in TPC gas., tpc,tpc+svt:

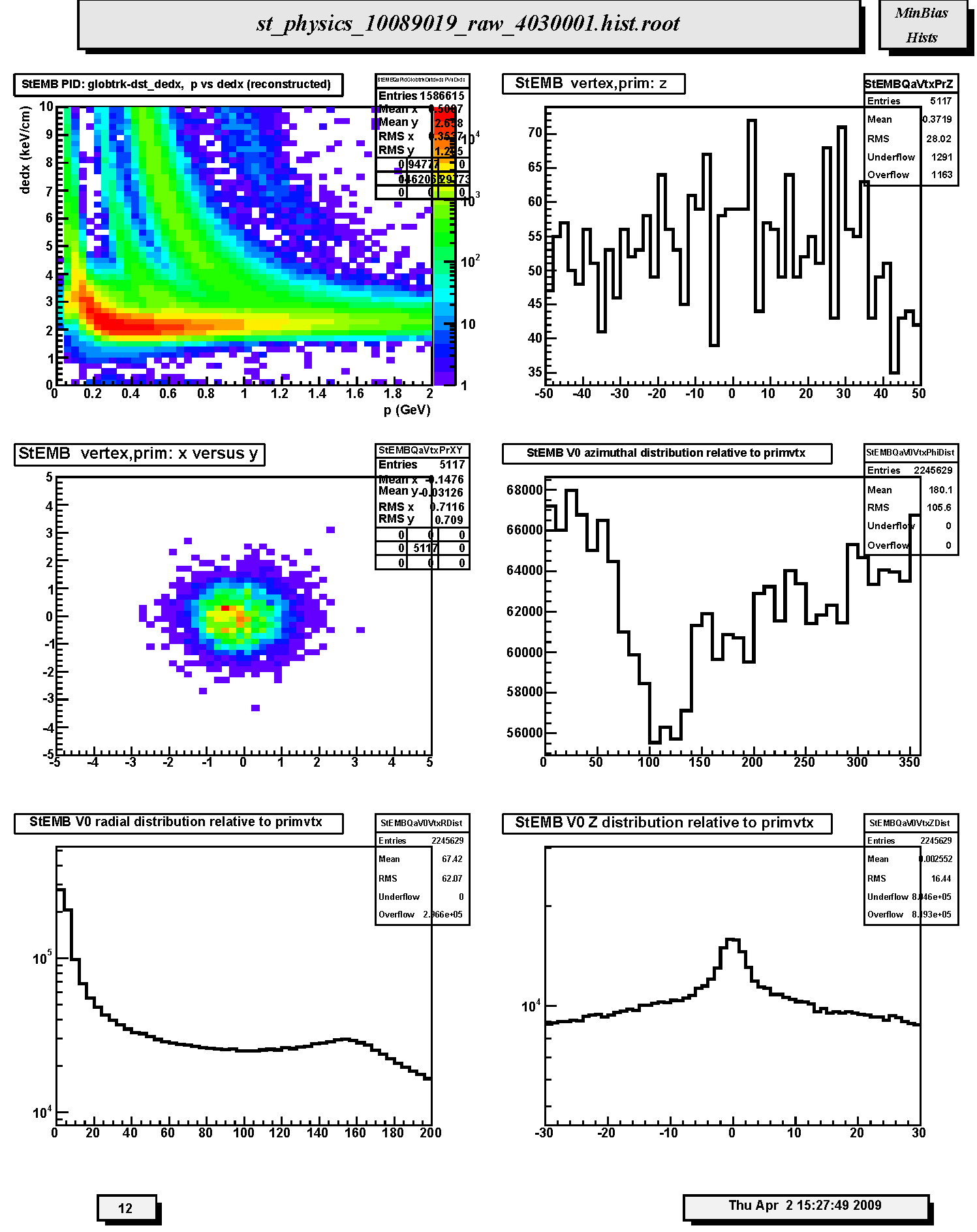

1. StECL PID: globtrk-dst_dedx, p vs dedx (reconstructed): Scatter plot of truncated mean dE/dx versus total momentum (GeV/c) for TPC-only global tracks. Should be able to see Bethe-Bloch bands for pions, kaons and protons if statistics are sufficient.

2. StECL vertex,prim: z : Distribution of primary vertex position along the z-axis out to abs(z) < 50 cm.

3. StECL vertex,prim: x versus y: Scatter plot of the (x,y) coordinates of the primary vertex position. This should correspond to the determined beam transverse position. The amount of dispersion will depend on the trigger condition and multiplicity cuts.

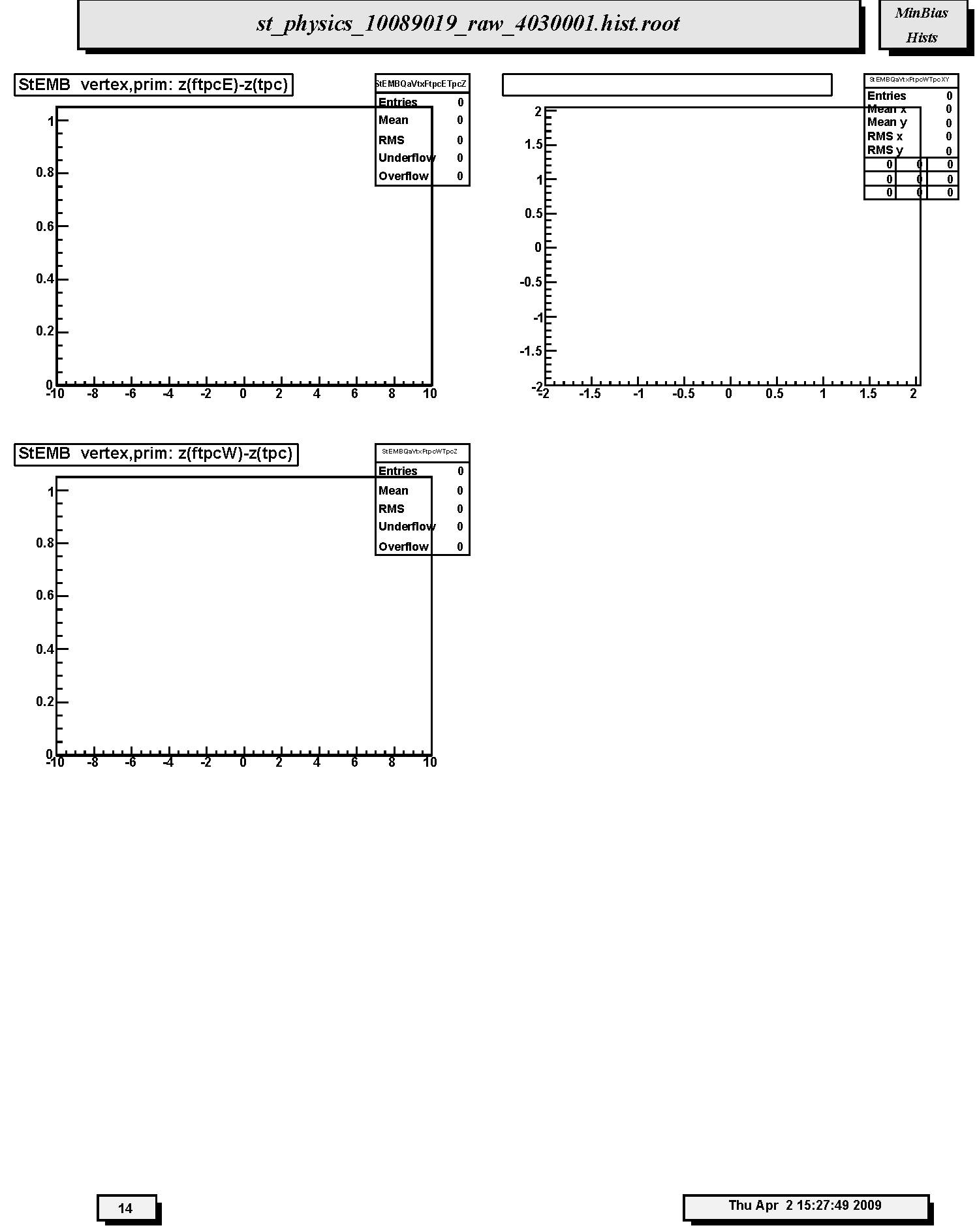

4. StECL vertex,prim: x(ftpcE)-x(tpc) vs y(ftpcE)-y(tpc): Not filled in Run 10. Scatter plot of the differences in x-y position (cm) for primary vertex positions determined by FTPC East global tracks and main TPC glbtrks. Should scatter about (0,0).

5. StECL vertex,prim: z(ftpcE)-z(tpc): Not filled in Run 10. Distribution of the differences along the z-axis, i.e. beam direction, in cm for primary vertex positions determined by FTPC East global tracks and main TPC glbtrks. The mean should be near 0.

6. StECL vertex,prim: x(ftpcW)-x(tpc) vs y(ftpcW)-y(tpc): Not filled in Run 10. Scatter plot of the differences in x-y position (cm) for primary vertex positions determined by FTPC West global tracks and main TPC glbtrks. Should scatter about (0,0).

1. StECL vertex,prim: z(ftpcW)-z(tpc): Not filled in Run 10. Distribution of the differences along the z-axis, i.e. beam direction, in cm for primary vertex positions determined by FTPC West global tracks and main TPC glbtrks. The mean should be near 0.

Fast Offline QA and DST Production QA Reference Histograms - Run 8

Run 8: d + Au

General Histograms -- Now Available!

Minbias/High-tower Histograms -- Now Available!

Detailed Sub-system References

General Histograms for Run 10

STAR Offline QA Shift Histogram Description - Run 10

Note: Not all reference plots may be ideal, be sure to carefully read the descriptions.

This page contains the reference histograms and descriptions for the Offline QA system for Run 10. These pages should correspond exactly to the jobs viewed in the Offline QA Browser. However, STAR is a dynamic experiment and the plots generated for QA will change several times throughout the run. If this reference page seems out-of-date, or if you find a job that would make a better reference, please email the QA hypernews list here.

Other links:

Elizabeth W. Oldag for the QA Team.

January 25, 2010.

Page Index for QA Shift Plots

- Page 1

1. StE trigger word: Distribution of trigger word groups: 1 = minimum bias, 2 = central, 3 = high pt, 4 = Jet patch, 5 = high BEMC tower, 6 = other.

2. StE trigger bits: Frequency of usages of 32 trigger bits.

3. FTPC West chargestep: The real chargestep corresponds to the maximum drift time in FTPC West (clusters from inner radius electrode) and is located near 170 timebins. This position will change slightly with atmospheric pressure. The hits beyond the step at timebin 170 are due to electronic noise and pileup. This step should always be visible even if it is only a "blip". Not filled in this run.

4. FTPC East chargestep: The real chargestep corresponds to the maximum drift time in FTPC East (clusters from inner radius electrode) and is located near 170 timebins. This position will change slightly with atmospheric pressure. The hits beyond the step at timebin 170 are due to electronic noise and pileup. This step should always be visible even if it is only a "blip". Not filled in this run.

5. FTPCW cluster radial position: Radial positions of clusters. Not filled in this run.

6. FTPCE cluster radial position: Radial positions of clusters. Not filled in this run.

HT Histograms for Run 10

STAR Offline QA Shift Histogram Description - Run 10

Note: Not all reference plots may be ideal, be sure to carefully read the descriptions.

This page contains the reference histograms and descriptions for the Offline QA system for Run 10. These pages should correspond exactly to the jobs viewed in the Offline QA Browser. However, STAR is a dynamic experiment and the plots generated for QA will change several times throughout the run. If this reference page seems out-of-date, or if you find a job that would make a better reference, please email the QA hypernews list here.

Other links:

Elizabeth W. Oldag for the QA Team.

January 25, 2010.

Page Index for QA Shift Plots

- Page 1

- Page 2

- Page 3

- Page 4

- Page 5

- Page 5

- Page 6

- Page 7

- Page 8

- Page 9

- Page 10

- Page 11

- Page 12

- Page 13

1. StEHT event primary vertex check: Primary vertex finding status for run; events with (1) and without (-1) final vertex. The relative fraction of events with primary vertex depends on trigger, beam diamond width and position.

2. StEHT point: # hits tpc : Distribution of number of reconstructed space points in TPC per event. Should scale with centrality, depends on multiplicity cut and trigger. For high tower triggers this distribution extends to larger hit multiplicities.

3. StEHT point: # hits ftpc: Not filled in this run. Distribution of number of reconstructed space points in FTPC East (solid line) and West (dashed line) per event. Scales with centrality, depends on multiplicity cut and trigger.

4. StEHT point: x-y distribution of hits, tpcE: Scatter plot of the azimuthal distribution of reconstructed space points in the East TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

5. StEHT point: x-y distribution of hits, tpcW: Scatter plot of the azimuthal distribution of reconstructed space points in the West TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

6. StEHT point: r-phi distribution of hits, tpcE: Same as the x-y scatter plot except in polar coordinates.

1. StEHT point: r-phi distribution of hits, tpcW: Same as the x-y scatter plot except in polar coordinates.

2. StEHT point: z distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to z-coordinate. There is a broad peak above the nominally flat background at z=0. This is intentional and is done in order to allow identification of post-trigger pileup events. Watch out for any other anamolous steps or unusual spikes or dips. The peaks just outside |z| = 200 cm are new in Run 10.

3. StEHT point: #phi distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to azimuthal coordinate (phi) for east (solid line) and west (dashed line) halves. Should be flat except for the 12-fold sector structure or masked RDOs (as shown). Dead RDO's will produce dips and gaps; hot pads or FEE cards will produce spikes.

4. StEHT point: padrow distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to padrow number 1-45. Should display gradual fall-off; watch for anamolous spikes and dips. Missing RDO's cause drops in the distribution. Padrow 13 was restored in Run 9 and continues to be active in Run 10.

5. StEHT point: x-y distribution of hits, ftpcE: Not filled in this run. Radial distribution of reconstructed space points in FTPC East. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

6. StEHT point: x-y distribution of hits, ftpcW: Not filled in this run. Radial distribution of reconstructed space points in FTPC West. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

1. StEHT point: plane distribution of hits, ftpc: Not filled in this run. Number of reconstructed space points assigned to tracks in FTPC East (solid line) and West (dashed line) in each padrow. The horizontal axis shows padrow numbers where FTPC-West is 1-10 and FTPC-East is 11-20. Padrows #1 and #11 are closest to the center of STAR. East and West should be similar in shape but West will have less magnitude than east for d+Au. Spikes indicate noisy electronics; dips indicate dead electronics.

2. StEHT point: #pads vs #timebins of hits, ftpcE: Not filled in this run. Monitors the cluster quality in FTPC East. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

3. StEHT point: #pads vs #timebins of hits, ftpcW: Not filled in this run. Monitors the cluster quality in FTPC West. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

4. StEHT Number hits in cluster for bemc: Distribution of number of BEMC towers contributing to energy clusters.

5. StEHT Energy of cluster for bemc: Distribution of energy in reconstructed clusters in EMC barrel. Should be peaked with a smooth fall off for minbias data but may display other peaks or structures for other triggers. For high tower triggers there may be excess counts in the large energy tails.

6. StEHT Eta of clusters for bemc: Azimuthally integrated pseudorapidity distribution of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. For Run 10 the full barrel is instrumented. The distribution should nominally be flat.

1. StEHT Phi of clusters for bemc: Pseudorapidity integrated azimuthal distribution (radians) of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps, unusual spikes, or sections of significantly elevated yields.

2. StEHT Number hits in cluster for bsmde: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along pseudorapidity.

3. StEHT Eta of clusters for bsmde: Pseudorapidity distribution of reconstructed energy clusters in the BSMD anode grid along pseudorapidity. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. Distribution should nominally be flat.

4. StEHT Number hits in cluster for bsmdp: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along azimuth.

5. StEHT Phi of clusters for bsmdp: Azimuthal distribution of reconstructed energy clusters in the BSMD anode grid along azimuth angle (phi in radians). Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes.

6. StEHT EmcCat4 Point Energy: Energy distribution for Barrel EMC-SMD Category 4 clusters. Cat4 clusters correspond to TPC track matched clusters in the EMC barrel, BSMD-eta and BSMD-phi detectors.

1. StEHT EmcCat4 Point Eta: Pseudorapidity distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

2. StEHT EmcCat4 Point Phi: Azimuthal distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

3. StEHT EmcCat4 Sigma Eta: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to pseudorapidity.

4. StEHT EmcCat4 Sigma Phi: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to azimuthal angle.

5. StEHT EmcCat4 Delta Eta: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to pseudorapidity. Should be peaked at ~0. Not filled in Run 10.

6. StEHT EmcCat4 Delta Phi: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to azimuthal angle. Should be peaked at ~0. Not filled in Run 10.

1. StEHT EmcCat4 Points Multiplicity: Frequency distribution of number of Category 4 clusters per event. For high tower triggers this distribution extends to larger multiplicities.

2. StEHT EmcCat4 Track Momenta : Distribution of TPC global track momentum for Barrel EMC-SMD Category 4 clusters. Not filled in Run 10.

3. StEHT Point Flag: Distribution of Barrel EMC and SMD cluster types by Category number. There should be a reasonable fraction of Cat4 clusters as in this example histogram; report if this fraction drops significantly. For high tower triggers the relative fraction of Cat4 clusters should be larger than in MinBias.

4. StEHT globtrk: iflag - all : Quality flag values for all global tracks. Some with large, negative values may not appear on plot; check stat. box for underflows. Majority of tracks should have iflag>0, corresponding to good, usable tracks. Refer to: dst_track_flags.html and kalerr.html for description of flag values. Note that in Runs 7-10 about half the tracks have iflag < 0.

5. StEHT globtrk: tot good tracks - all: Distribution of the number of good global tracks in the TPC per trigger; including pileup. For high tower triggers this distribution extends to larger track multiplicities.

6. StEHT globtrk: tot good tracks - ftpc: Not filled in this run. Scatter plot of good global track multiplicities in FTPC West versus FTPC East.

1. StEHT globtrk: Detector ID good tracks - all: Global track detector IDs for good tracks. Refer to: /afs/rhic/star/packages/DEV00/pams/global/inc/StDetectorDefinitions.h for Detector ID codes.

2. StEHT globtrk: z-DCA to Beam Axis, coarse scale: Coarse scale distribution along the z-axis (from -200 to +200 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices however indentification of individual collision vertices is unlikely.

3. StEHT globtrk: z-DCA to Beam Axis: Fine scale distribution along the z-axis (from -50 to +50 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices.

4. StEHT globtrk: z-DCA to Beam Axis vs tanl, tpc: Scatter plot of the tangent of the dip angle (tanl) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at tanl=0 indicate probable TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events. Cross reference for calibration errors with z-DCA to beam axis versus z-coord. of first hit on track.

5. StEHT globtrk: z-DCA to Beam Axis vs z-first: Scatter plot of the z-coordinate of the first fitted hit in the TPC versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC-only global in which the first point used in the fit lies in the TPC. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at z=0 indicate TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events.

6. StEHT globtrk: z-DCA to Beam Axis vs psi: Scatter plot of the azimuthal direction angle (psi) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth, straight and continuous indicating azimuthal symmetry in the tracking. Bends or offsets could indicate problems in individual TPC sectors such as voltage sags or drifts. These are best studied with high multiplicity events.

1. StEHT globtrk: padrow of first hit on trk, tpc: Distribution of first fitted space point with respect to pad row number for TPC-only global tracks. Should peak at 1 with a minor peak at padrow 14 (beginning of outer sector); the latter should not be too big relative to that at row 1. Other peaks may indicate large, missing sections of the TPC read-out causing breaks, and consequently additional, false new starting points for tracks. The excess data for TPC west beyond padrow 35 is due to EEMC tracking needs.

2. StEHT globtrk: z of first hit on trk, tpc: Distribution of first fitted space point with respect to z for TPC-only global tracks. Should be approx. symmetric.

3. StEHT globtrk: first point: hit - helix, tpc: Residuals at FIRST point on track for TPC-only global tracks. The quantities plotted are the longitudinal (along z-axis, dashed line) and transverse (in x-y plane, solid line) differences between the coordinates of the first hit and the DCA point on the helix fit to the first point. For the transverse residual, positive (negative) values correspond to hits inside (outside) the circular projection of the helix onto the bend plane. FWHM should be less than ~ 1cm.

4. StEHT globtrk: phi of first point on trk, tpc: Distribution of first fitted space point with respect to azimuthal angle (phi) for TPC-only global tracks. The solid (dashed) line is for the east (west) half of the TPC. These should be approximately equal and flat within statistics, except for the 12-sector structure.

5. StEHT globtrk: chisq0, tpc: Chi-square per degree of freedom for TPC global tracks. Should peak just below 1.

6. StEHT globtrk: signed impact param from prim vtx, tpc: Two-dimensional (2D) (in the transverse plane) signed impact parameter (in cm) from primary vertex for East (solid line), West (dashed line) and All (dotted line) TPC-only global tracks. Should be centered at zero depending on the TPC calibration status used in fast-offline QA production.

1. StEHT globtrk: tanl(dip) vs. (zfirst-zvtx)/arc length, tpc,tpc+svt: Scatter plot of tangent of dip angle (tanl) versus (z_first - z_primvrtx)/arc-length for TPC-only global tracks whose first fitted point is in the TPC. Variable 'z_first' is the z coordinate of the first fitted point in the TPC. Variable 'z_primvrtx' is the z-coordinate of the primary vertex for the event. Variable 'arc-length' is 2R*arcsin(delta_r/2R) where R = track radius of curvature and delta_r is the transverse distance between the primary vertex and the first hit on track. Primary tracks lie along the 45 deg diagonal. Secondary tracks and strays lie scattered to either side. The diagonal band should appear clearly and be straight and smooth without kinks, breaks or bends.

2. StEHT globtrk: N points on trk,ftpc: Not filled in Run 10. Distribution of the number of fitted points on track for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. Distributions should be similar except fewer tracks for west than east. Also depends on the relative fraction of active readout in each detector.

3. StEHT globtrk: psi, ftpc: Not filled in Run 10. Azimuthal distributions for track direction angle (psi) for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be flat within statistics except for the hexagonal FTPC sector structure. There will be fewer west tracks than east for d+Au.

4. StEHT globtrk: |eta|, ftpc: Not filled in Run 10. Distributions of absolute value of pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo for d+Au) and range from about 2.5 to 4.5.

5. StEHT globtrk: pT, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo d+Au)within statistics.

6. StEHT globtrk: momentum, ftpc: Not filled in Run 10. Total momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) global tracks. These should be similar (modulo d+Au) within statistics.

1. StEHT globtrk: impact param from prim vtx, ftpc: Not filled in Run 10. Distribution of impact parameter values for global FTPC East tracks (solid curve) and West tracks (dashed curve) with respect to the main TPC global track determined primary vertex.

2. StEHT globtrk: Y vs X of first hit on trk, ftpcE: Not filled in Run 10. Scatter plot of (x,y) coordinates for the first fitted space points in FTPC-East global tracks. These should be uniformly populated with hits; FTPC hexagonal structure is apparent.

3. StEHT globtrk: Y vs X of first hit on trk, ftpcW: Not filled in Run 10. Scatter plot of (x,y) coordinates for the first fitted space points in FTPC-West global tracks. These should be uniformly populated with hits; FTPC hexagonal structure is apparent.

4. StEHT primtrk: iflag - all: Quality flag values for all primary tracks. Some with large, negative values may not appear on plot; check stat. box for underflows. Majority of tracks should have iflag>0, corresponding to good, usable tracks. Refer to: dst_track_flags.html and kalerr.html for description of flag values.

5. StEHT primtrk: tot num tracks iflag>0: Distribution of total number of primary tracks per triggered event. For high tower triggers this distribution extends to larger track multiplicities.

6. StEHT primtrk: ratio primary/global tracks w/ iflag>0: Ratio of good primary to good global tracks for all detectors. For Au-Au this ratio usually peaks at about 0.5.

1. StEHT primtrk:, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics.

2. StEHT primtrk: ||, ftpc: Not filled in Run 10. Distributions of absolute value of mean pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics and range from about 2.5 to 3.5.

3. StEHT primtrk: first point: hit - helix (r,z), tpc: Residuals at first point on track for TPC-only primary tracks. The quantities plotted are the longitudinal (along z-axis, dashed line) and transverse (in x-y plane, solid line) differences between the coordinates of the first hit and the DCA point on the helix fit to the first point. For the transverse residual, positive (negative) values correspond to hits inside (outside) the circular projection of the helix onto the bend plane. FWHM should be less than ~ 1cm.

4. StEHT primtrk: |eta|, ftpc: Not filled in Run 10. Distributions of absolute value of mean pseudorapidity for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics and range from about 2.5 to 3.5.

5. StEHT primtrk: pT, ftpc: Not filled in Run 10. Transverse momentum (GeV/c) distributions for FTPC-East (solid line) and FTPC-West (dashed line) primary tracks. These should be similar within statistics.

6. StEHT dedx: ratiomean to Bethe Bloch Distribution of ratio of mean dE/dx to Bethe-Bloch dE/dx for pions at the same momentum for TPC-only global tracks. Should peak at ~1. Tests calibration of charge deposition in TPC gas., tpc,tpc+svt:

1. StEHT PID: globtrk-dst_dedx, p vs dedx (reconstructed): Scatter plot of truncated mean dE/dx versus total momentum (GeV/c) for TPC-only global tracks. Should be able to see Bethe-Bloch bands for pions, kaons and protons if statistics are sufficient.

2. StEHT vertex,prim: z : Distribution of primary vertex position along the z-axis out to abs(z) < 50 cm.

3. StEHT vertex,prim: x versus y: Scatter plot of the (x,y) coordinates of the primary vertex position. This should correspond to the determined beam transverse position. The amount of dispersion will depend on the trigger condition and multiplicity cuts.

4. StEHT vertex,prim: x(ftpcE)-x(tpc) vs y(ftpcE)-y(tpc): Not filled in Run 10. Scatter plot of the differences in x-y position (cm) for primary vertex positions determined by FTPC East global tracks and main TPC glbtrks. Should scatter about (0,0).

5. StEHT vertex,prim: z(ftpcE)-z(tpc): Not filled in Run 10. Distribution of the differences along the z-axis, i.e. beam direction, in cm for primary vertex positions determined by FTPC East global tracks and main TPC glbtrks. The mean should be near 0.

6. StEHT vertex,prim: x(ftpcW)-x(tpc) vs y(ftpcW)-y(tpc): Not filled in Run 10. Scatter plot of the differences in x-y position (cm) for primary vertex positions determined by FTPC West global tracks and main TPC glbtrks. Should scatter about (0,0).

1. StEHT vertex,prim: z(ftpcW)-z(tpc): Not filled in Run 10. Distribution of the differences along the z-axis, i.e. beam direction, in cm for primary vertex positions determined by FTPC West global tracks and main TPC glbtrks. The mean should be near 0.

MB Histograms for Run 10

STAR Offline QA Shift Histogram Description - Run 10

Note: Not all reference plots may be ideal, be sure to carefully read the descriptions.

This page contains the reference histograms and descriptions for the Offline QA system for Run 10. These pages should correspond exactly to the jobs viewed in the Offline QA Browser. However, STAR is a dynamic experiment and the plots generated for QA will change several times throughout the run. If this reference page seems out-of-date, or if you find a job that would make a better reference, please email the QA hypernews list here.

Other links:

Elizabeth W. Oldag for the QA Team.

January 25, 2010.

Page Index for QA Shift Plots

- Page 1

- Page 2

- Page 3

- Page 4

- Page 5

- Page 6

- Page 7

- Page 8

- Page 9

- Page 10

- Page 11

- Page 12

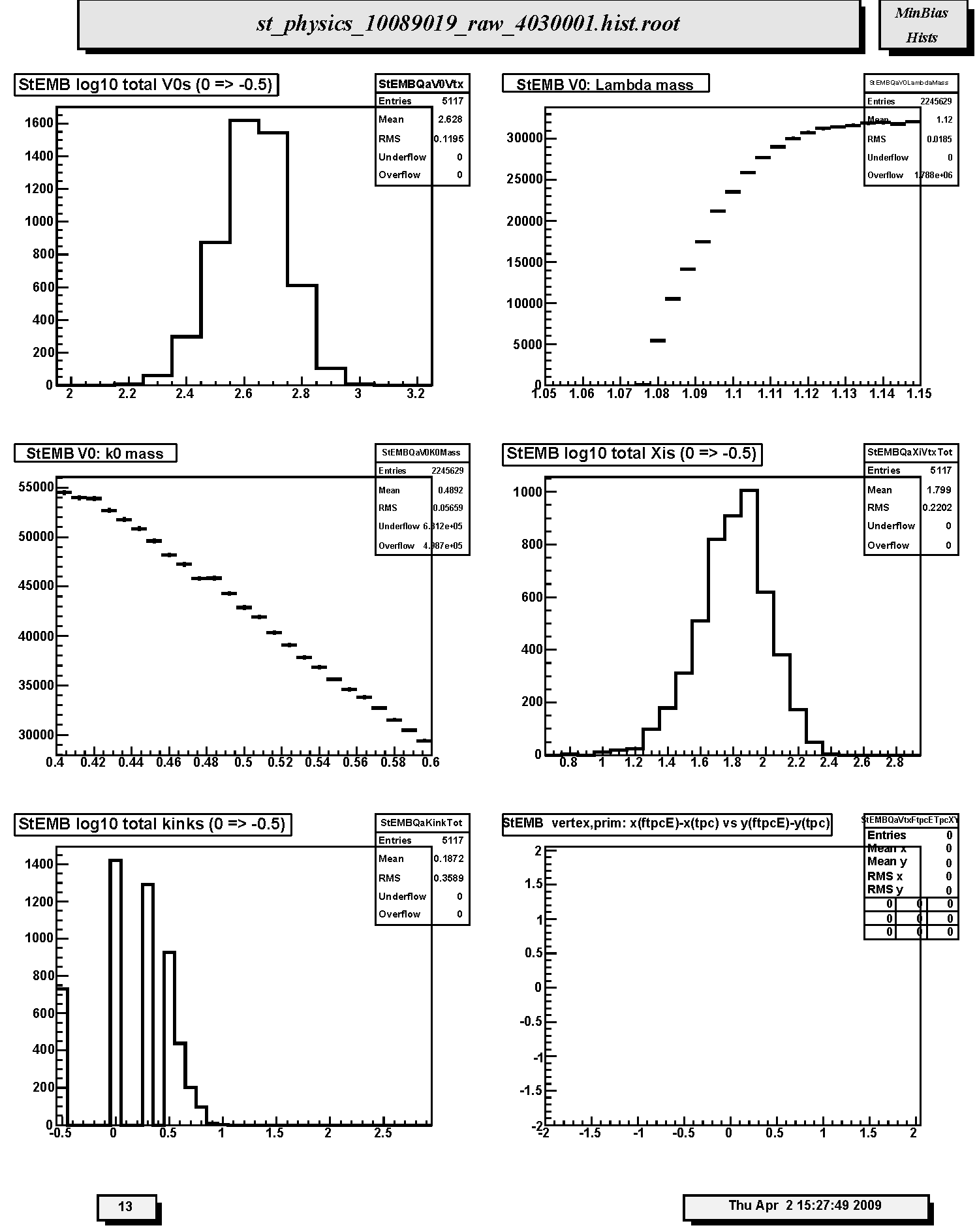

- Page 13

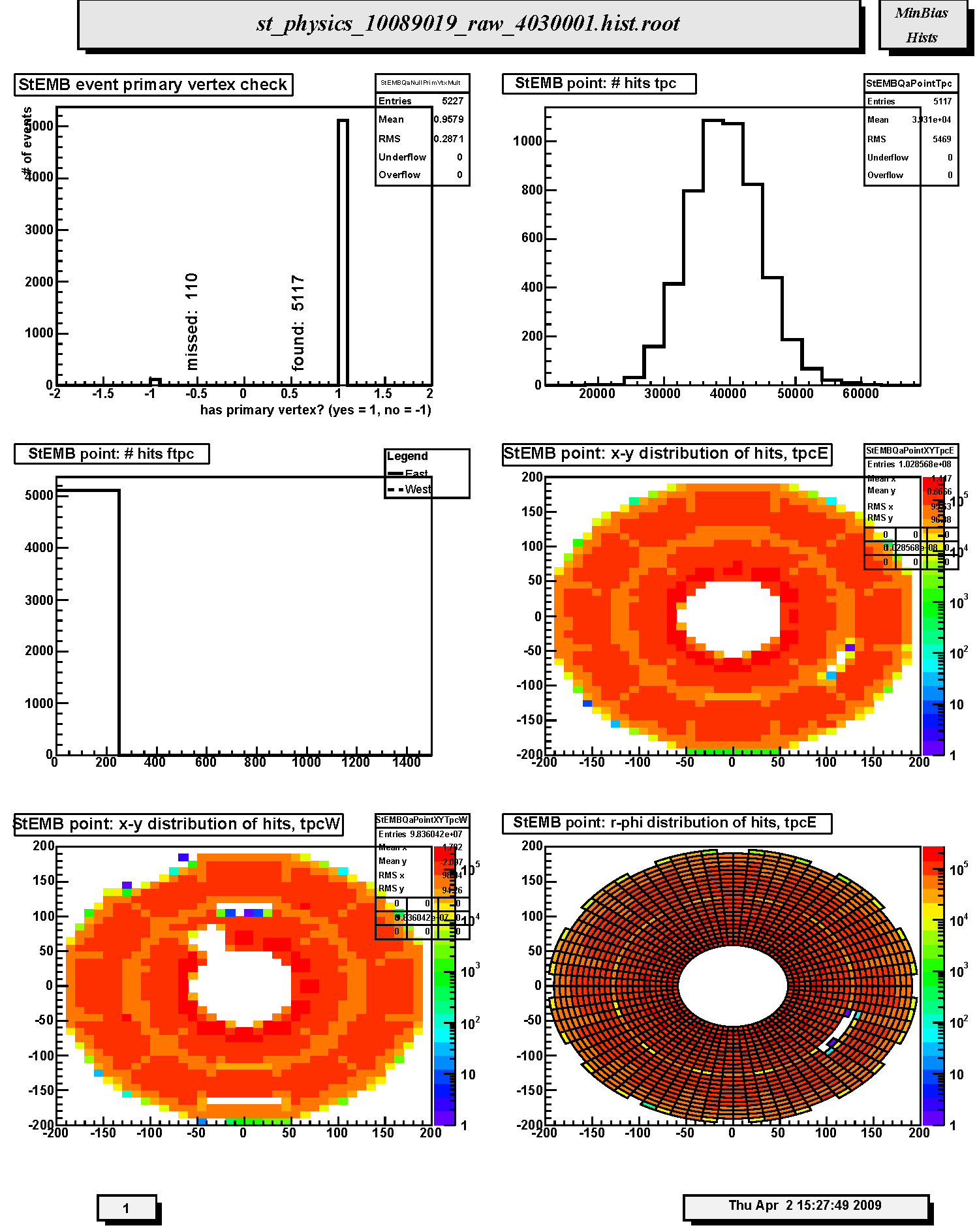

1. StEMB event primary vertex check: Primary vertex finding status for run; events with (1) and without (-1) final vertex. The relative fraction of events with primary vertex depends on trigger, beam diamond width and position.

2. StEMB point: # hits tpc : Distribution of number of reconstructed space points in TPC per event. Should scale with centrality, depends on multiplicity cut and trigger.

3. StEMB point: # hits ftpc: Not filled in this run. Distribution of number of reconstructed space points in FTPC East (solid line) and West (dashed line) per event. Scales with centrality, depends on multiplicity cut and trigger.

4. StEMB point: x-y distribution of hits, tpcE: Scatter plot of the azimuthal distribution of reconstructed space points in the East TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

5. StEMB point: x-y distribution of hits, tpcW: Scatter plot of the azimuthal distribution of reconstructed space points in the West TPC. The distribution should be azimuthally uniform except for the 12 sector structure of the TPC (lighter areas indicate sector boundaries). Density should decrease radially but inner and outer sector structure should be present (darker areas in the innermost pads of the inner and outer sectors). Watch for empty areas (typically masked RDOs) and hot spots. Notify TPC experts if new empty area or hot spot appears.

6. StEMB point: r-phi distribution of hits, tpcE: Same as the x-y scatter plot except in polar coordinates.

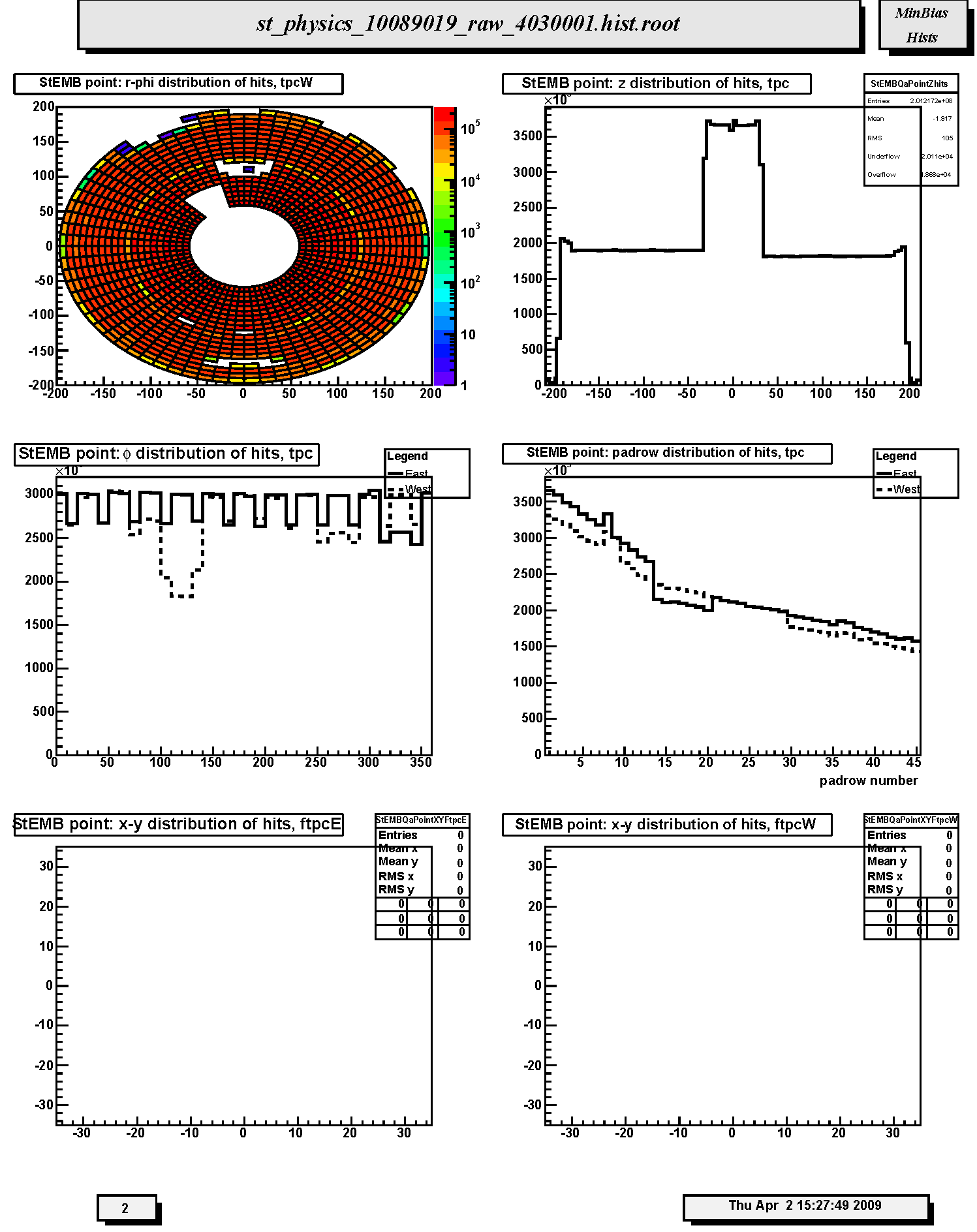

1. StEMB point: r-phi distribution of hits, tpcW: Same as the x-y scatter plot except in polar coordinates.

2. StEMB point: z distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to z-coordinate. There is a broad peak above the nominally flat background at z=0. This is intentional and is done in order to allow identification of post-trigger pileup events. Watch out for any other anamolous steps or unusual spikes or dips. The peaks just outside |z| = 200 cm are new in Run 10.

3. StEMB point: #phi distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to azimuthal coordinate (phi) for east (solid line) and west (dashed line) halves. Should be flat except for the 12-fold sector structure or masked RDOs (as shown). Dead RDO's will produce dips and gaps; hot pads or FEE cards will produce spikes.

4. StEMB point: padrow distribution of hits, tpc: Distribution of reconstructed space points in TPC with respect to padrow number 1-45. Should display gradual fall-off; watch for anamolous spikes and dips. Missing RDO's cause drops in the distribution. Padrow 13 was restored in Run 9 and continues to be active in Run 10.

5. StEMB point: x-y distribution of hits, ftpcE: Not filled in this run. Radial distribution of reconstructed space points in FTPC East. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

6. StEMB point: x-y distribution of hits, ftpcW: Not filled in this run. Radial distribution of reconstructed space points in FTPC West. The distribution should be uniform except for the insensitive gaps between the six readout sectors. Localized denser areas are due to noise. Ideally noisy pads are flagged in the FTPC gain tables so that they are not used for cluster finding. Less densely populated areas not on the sector boundaries are due to dead electronics. Empty areas not on the sector boundaries indicates a serious hardware problem. An FTPC expert should be contacted immediately if this is seen in fast offline-QA during data taking.

1. StEMB point: plane distribution of hits, ftpc: Not filled in this run. Number of reconstructed space points assigned to tracks in FTPC East (solid line) and West (dashed line) in each padrow. The horizontal axis shows padrow numbers where FTPC-West is 1-10 and FTPC-East is 11-20. Padrows #1 and #11 are closest to the center of STAR. East and West should be similar in shape but West will have less magnitude than east for d+Au. Spikes indicate noisy electronics; dips indicate dead electronics.

2. StEMB point: #pads vs #timebins of hits, ftpcE: Not filled in this run. Monitors the cluster quality in FTPC East. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

3. StEMB point: #pads vs #timebins of hits, ftpcW: Not filled in this run. Monitors the cluster quality in FTPC West. Ideally the clusters should have a pad length of 3-5 and a timebin length of 4-6. A prominent peak located at (2,2) indicates bad gas or loss of gain or excessive background.

4. StEMB Number hits in cluster for bemc: Distribution of number of BEMC towers contributing to energy clusters.

5. StEMB Energy of cluster for bemc: Distribution of energy in reconstructed clusters in EMC barrel. Should be peaked with a smooth fall off for minbias data but may display other peaks or structures for other triggers.

6. StEMB Eta of clusters for bemc: Azimuthally integrated pseudorapidity distribution of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. For Run 10 the full barrel is instrumented. The distribution should nominally be flat.

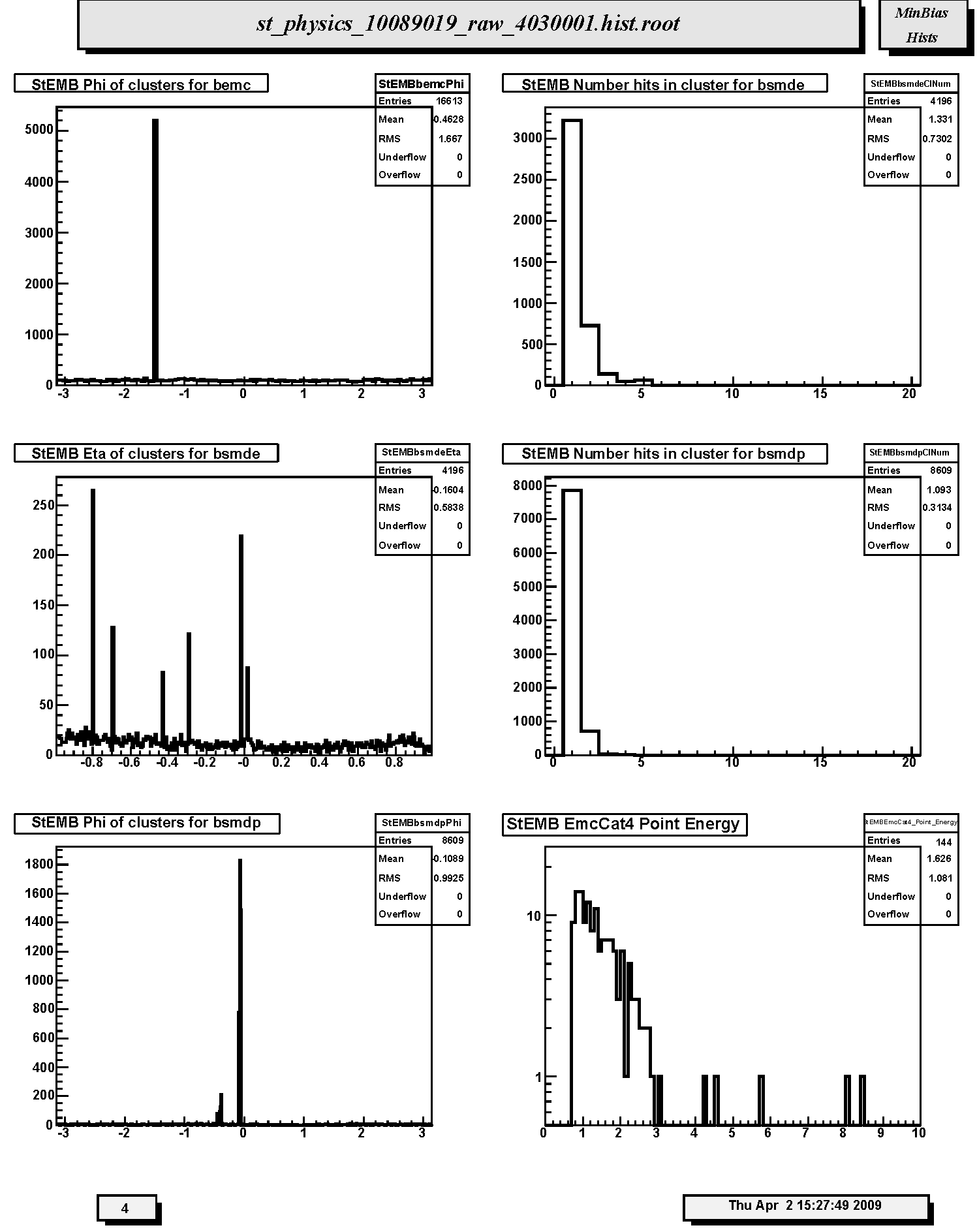

1. StEMB Phi of clusters for bemc: Pseudorapidity integrated azimuthal distribution (radians) of reconstructed energy clusters in the EMC-barrel. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps, unusual spikes, or sections of significantly elevated yields.

2. StEMB Number hits in cluster for bsmde: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along pseudorapidity.

3. StEMB Eta of clusters for bsmde: Pseudorapidity distribution of reconstructed energy clusters in the BSMD anode grid along pseudorapidity. Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes. Distribution should nominally be flat.

4. StEMB Number hits in cluster for bsmdp: Distribution of numbers of hits (anodes) contributing to Barrel SMD clusters along azimuth.

5. StEMB Phi of clusters for bsmdp: Azimuthal distribution of reconstructed energy clusters in the BSMD anode grid along azimuth angle (phi in radians). Note there will be gaps due to missing and/or uninstrumented sectors. Report any unexpected gaps or unusual spikes.

6. StEMB EmcCat4 Point Energy: Energy distribution for Barrel EMC-SMD Category 4 clusters. Cat4 clusters correspond to TPC track matched clusters in the EMC barrel, BSMD-eta and BSMD-phi detectors.

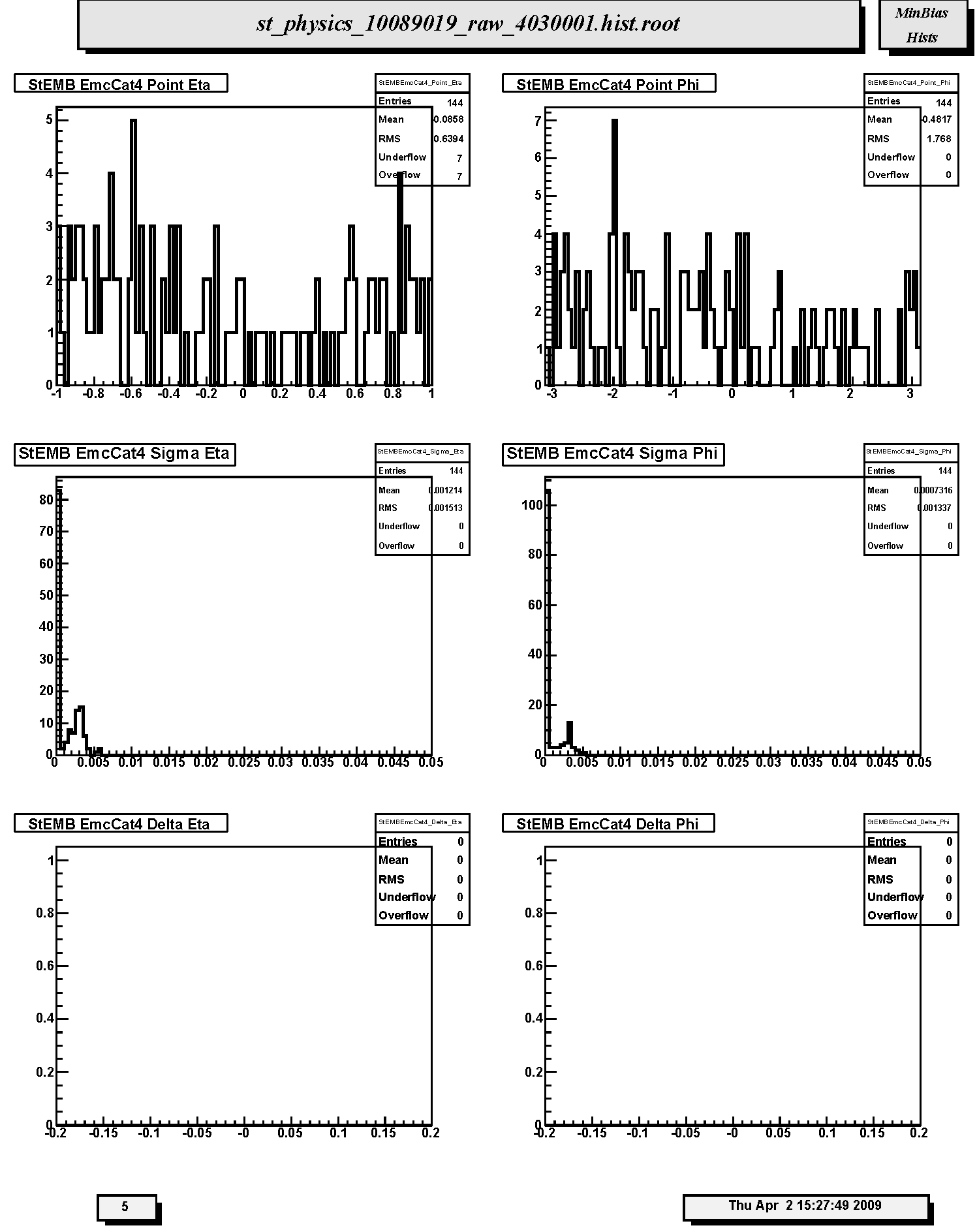

1. StEMB EmcCat4 Point Eta: Pseudorapidity distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

2. StEMB EmcCat4 Point Phi: Azimuthal distribution for EMC-SMD Category 4 clusters. Distribution should nominally be flat.

3. StEMB EmcCat4 Sigma Eta: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to pseudorapidity.

4. StEMB EmcCat4 Sigma Phi: Distribution of widths (sigma) of Category 4 Barrel EMC-SMD clusters with respect to azimuthal angle.

5. StEMB EmcCat4 Delta Eta: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to pseudorapidity. Should be peaked at ~0. Not filled in Run 10.

6. StEMB EmcCat4 Delta Phi: Differences between centroids of Category 4 Barrel EMC-SMD clusters and projected positions of TPC tracks at BEMC with respect to azimuthal angle. Should be peaked at ~0. Not filled in Run 10.

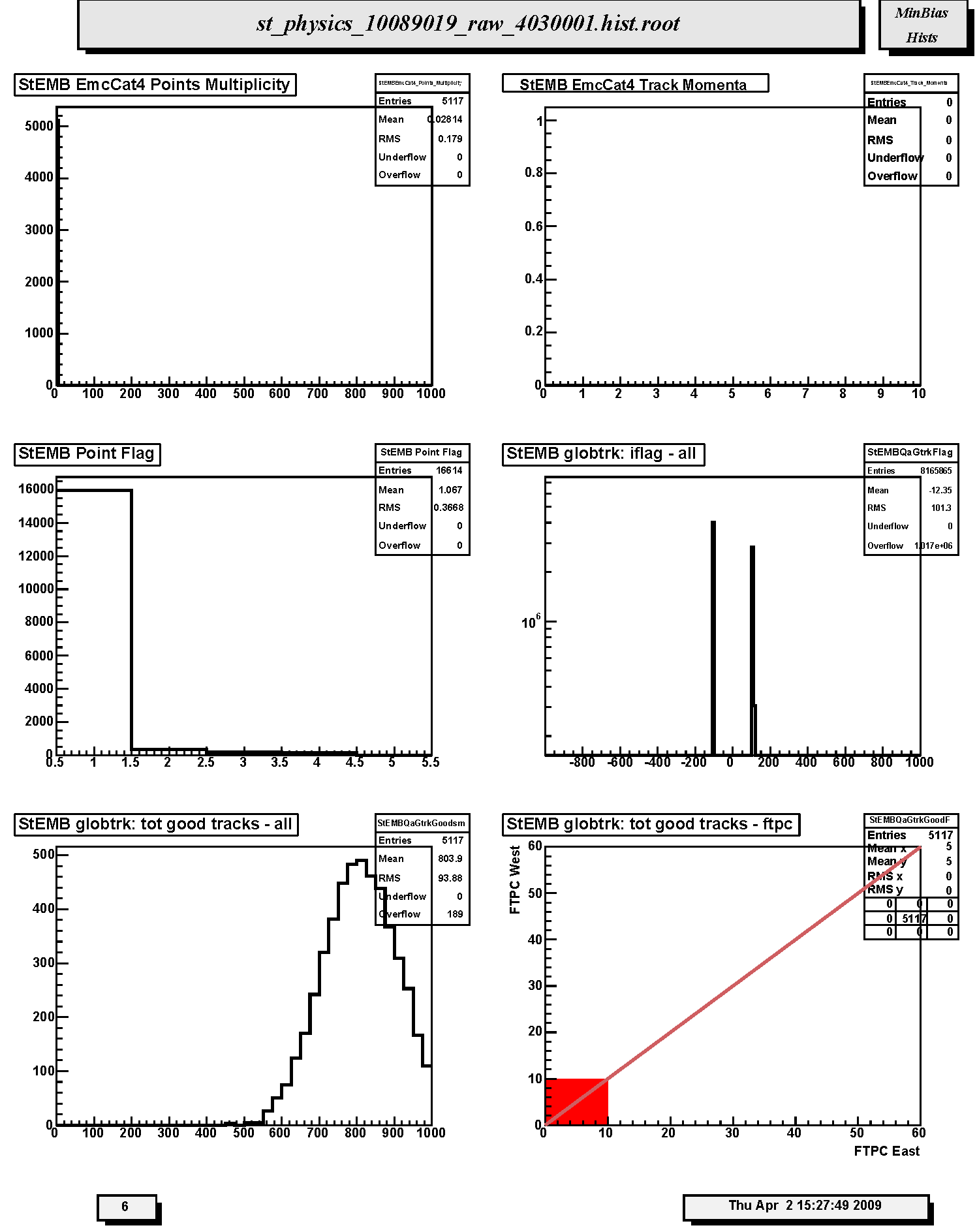

1. StEMB EmcCat4 Points Multiplicity: Frequency distribution of number of Category 4 clusters per event.

2. StEMB EmcCat4 Track Momenta : Distribution of TPC global track momentum for Barrel EMC-SMD Category 4 clusters. Not filled in Run 10.

3. StEMB Point Flag: Distribution of Barrel EMC and SMD cluster types by Category number. There should be a reasonable fraction of Cat4 clusters as in this example histogram; report if this fraction drops significantly.

4. StEMB globtrk: iflag - all : Quality flag values for all global tracks. Some with large, negative values may not appear on plot; check stat. box for underflows. Majority of tracks should have iflag>0, corresponding to good, usable tracks. Refer to: dst_track_flags.html and kalerr.html for description of flag values. Note that in Runs 7-10 about half the tracks have iflag < 0.

5. StEMB globtrk: tot good tracks - all: Distribution of the number of good global tracks in the TPC per trigger; including pileup.

6. StEMB globtrk: tot good tracks - ftpc: Not filled in this run. Scatter plot of good global track multiplicities in FTPC West versus FTPC East.

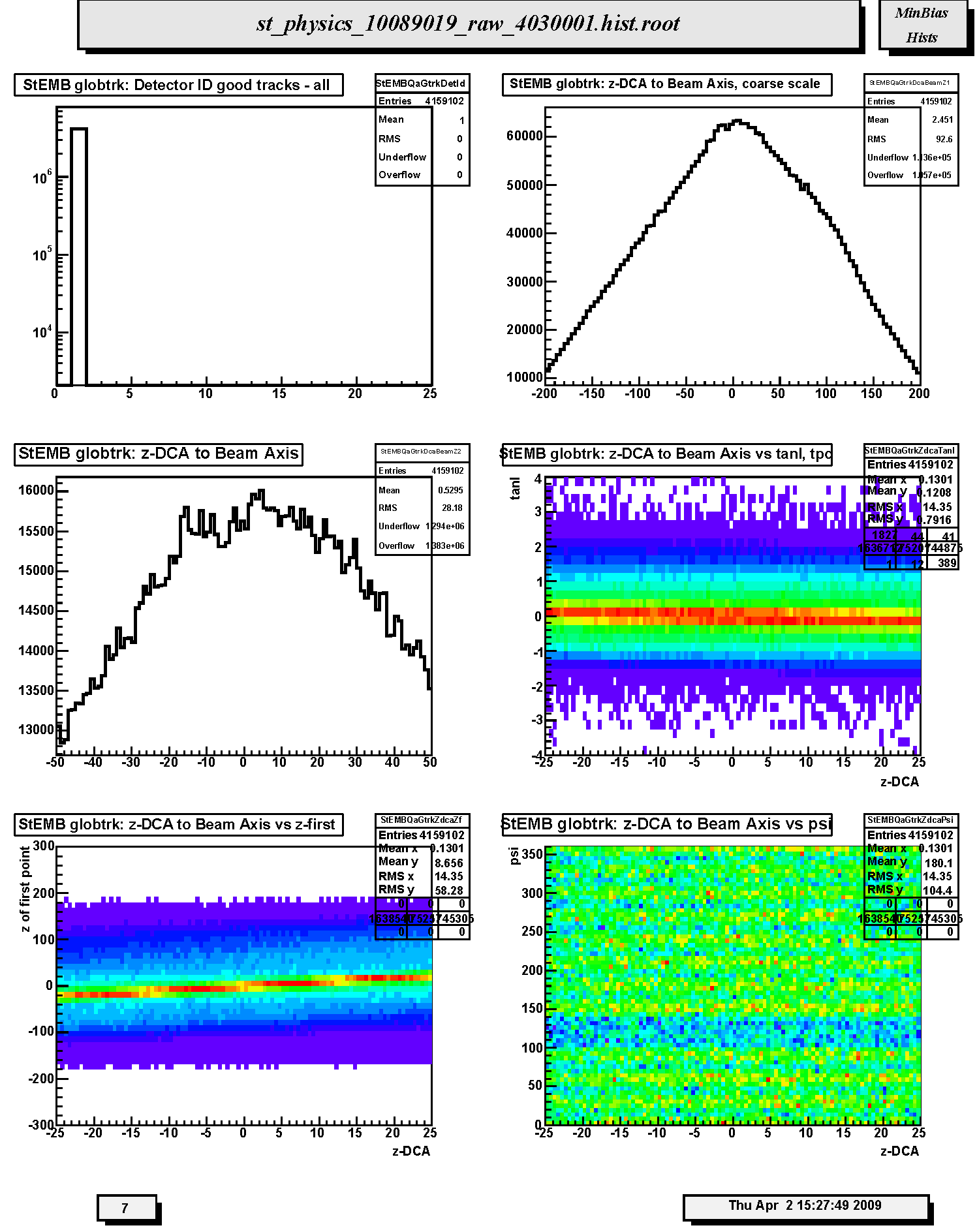

1. StEMB globtrk: Detector ID good tracks - all: Global track detector IDs for good tracks. Refer to: /afs/rhic/star/packages/DEV00/pams/global/inc/StDetectorDefinitions.h for Detector ID codes.

2. StEMB globtrk: z-DCA to Beam Axis, coarse scale: Coarse scale distribution along the z-axis (from -200 to +200 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices however indentification of individual collision vertices is unlikely.

3. StEMB globtrk: z-DCA to Beam Axis: Fine scale distribution along the z-axis (from -50 to +50 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Peaks indicate probable locations of individual collision vertices.

4. StEMB globtrk: z-DCA to Beam Axis vs tanl, tpc: Scatter plot of the tangent of the dip angle (tanl) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at tanl=0 indicate probable TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events. Cross reference for calibration errors with z-DCA to beam axis versus z-coord. of first hit on track.

5. StEMB globtrk: z-DCA to Beam Axis vs z-first: Scatter plot of the z-coordinate of the first fitted hit in the TPC versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC-only global in which the first point used in the fit lies in the TPC. Vertical bands should be seen corresponding to individual events. The bands should be smooth and continuous; breaks at z=0 indicate TPC calibration errors in either the t0 offset or the drift speed. This is best seen for high multiplicity events.

6. StEMB globtrk: z-DCA to Beam Axis vs psi: Scatter plot of the azimuthal direction angle (psi) versus the z-coordinate (from -25 to 25 cm) of the DCA points to the nominal beam line (z-axis, x=y=0) for all TPC global tracks. Vertical bands should be seen corresponding to individual events. The bands should be smooth, straight and continuous indicating azimuthal symmetry in the tracking. Bends or offsets could indicate problems in individual TPC sectors such as voltage sags or drifts. These are best studied with high multiplicity events.

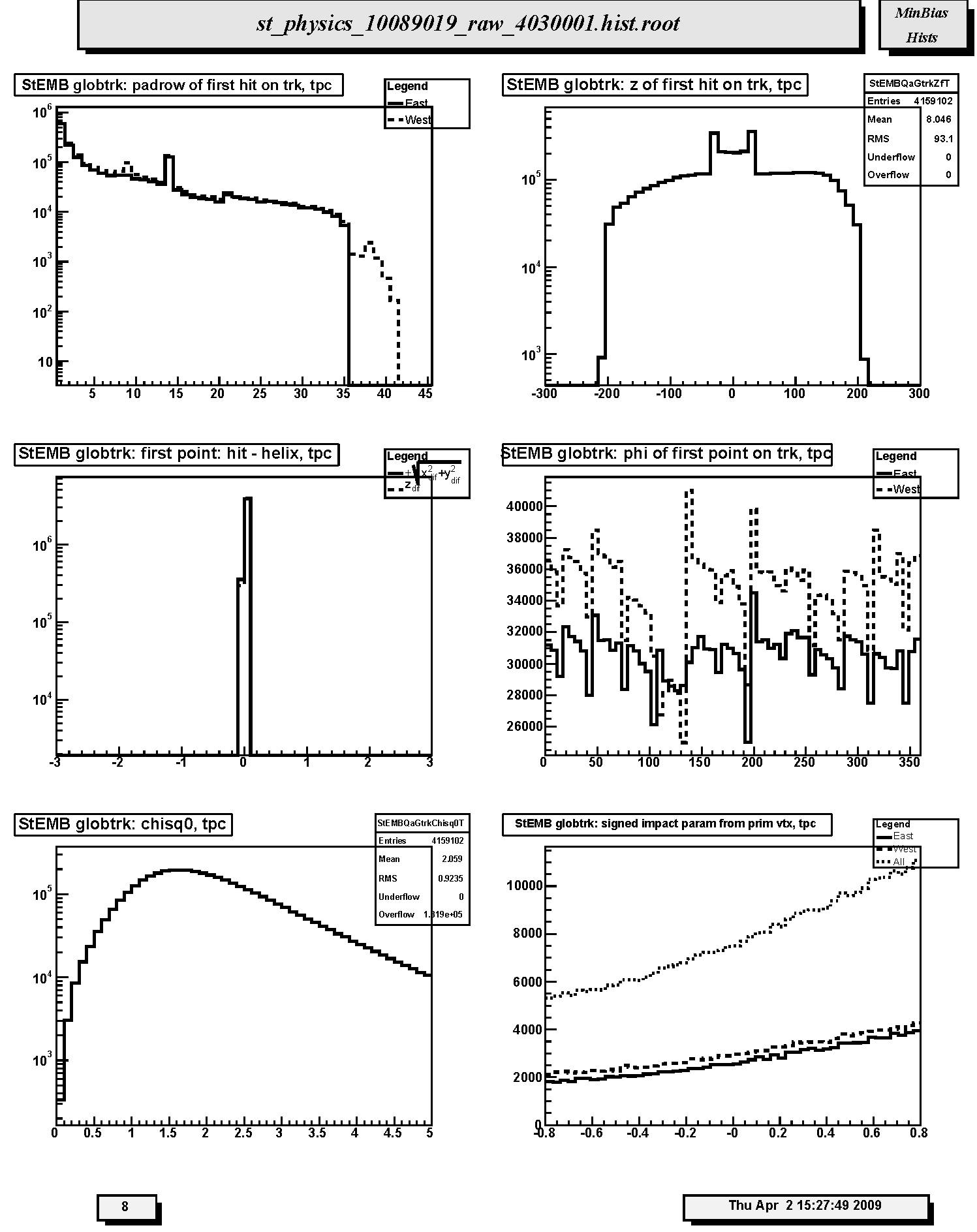

1. StEMB globtrk: padrow of first hit on trk, tpc: Distribution of first fitted space point with respect to pad row number for TPC-only global tracks. Should peak at 1 with a minor peak at padrow 14 (beginning of outer sector); the latter should not be too big relative to that at row 1. Other peaks may indicate large, missing sections of the TPC read-out causing breaks, and consequently additional, false new starting points for tracks. The excess data for TPC west beyond padrow 35 is due to EEMC tracking needs.

2. StEMB globtrk: z of first hit on trk, tpc: Distribution of first fitted space point with respect to z for TPC-only global tracks. Should be approx. symmetric.