Run 12 CuAu

Updated on Wed, 2015-04-01 14:00. Originally created by genevb on 2015-04-01 10:45.

The TPC drift velocities were re-calibrated using the most recent TPC alignment. The dataset included five different trigger setups: cuAu_production_2012, AuAu5Gev_test, CosmicLocalClock, laser_localclock, laser_rhicclock. The AuAu5Gev_test data consisted of two runs taken on day 179 (the last two points in my plots below) with ReversedHalfField.

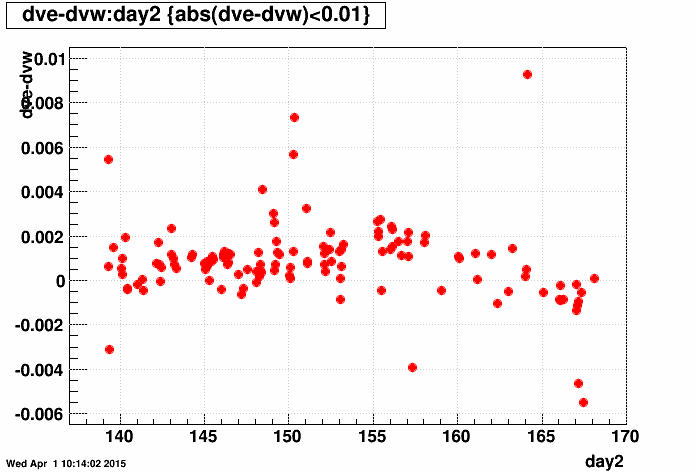

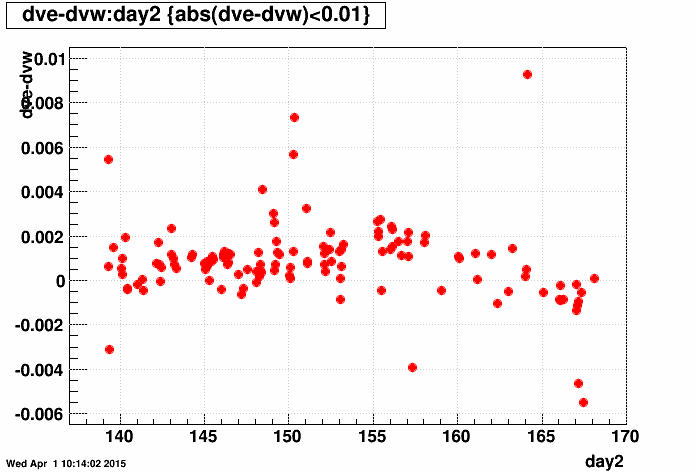

The first question is whether to use separate east and west drift velocities. Here are plots of the DVeast-DVwest difference vs. day for when both drift velocities could be measured, and then a simple histogram of the difference. The difference seems to be at the level of ~0.001 +/- 0.001 cm/μs, with very little time dependence. So this effect is ~0.2 parts per mil (similar to what was seen in a linked page that is not accessible here), or a difference of ~400 μm at the central membrane (full drift).
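As a rough check of the scale quoted here, the arithmetic can be sketched as follows. The nominal drift velocity (~5.5 cm/μs) and the full drift length (~210 cm) are assumed round numbers for illustration and are not taken from this calibration; the function name is hypothetical.

```python
# Rough scale of an east-west drift velocity difference.
# NOMINAL_DV and FULL_DRIFT_CM are assumed round values, not calibration results.
NOMINAL_DV = 5.5        # cm/us, assumed typical TPC drift velocity
FULL_DRIFT_CM = 210.0   # cm, assumed drift length from central membrane to endcap


def east_west_effect(dv_diff, nominal_dv=NOMINAL_DV, drift_cm=FULL_DRIFT_CM):
    """Return (relative difference in per mil, offset in um after a full drift)."""
    rel = dv_diff / nominal_dv          # fractional drift velocity difference
    per_mil = rel * 1e3                 # parts per thousand
    offset_um = rel * drift_cm * 1e4    # cm -> um, accumulated over the full drift
    return per_mil, offset_um


per_mil, offset_um = east_west_effect(0.001)  # 0.001 cm/us difference
print(f"{per_mil:.2f} per mil, {offset_um:.0f} um at the central membrane")
```

Under these assumed values the result comes out a little under 0.2 per mil and a little under 400 μm, consistent with the estimates in the text.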

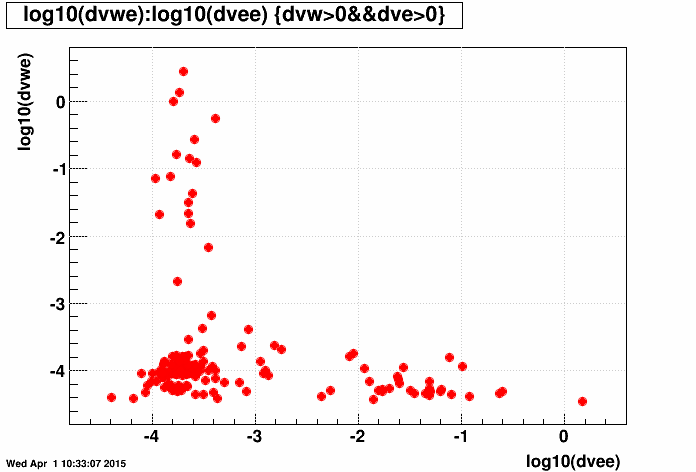

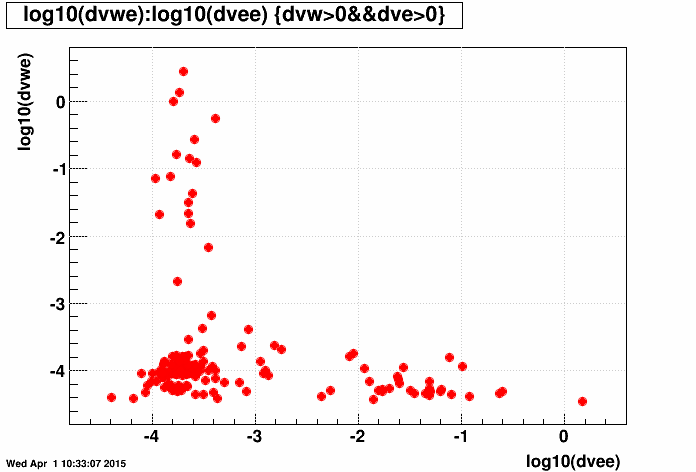

As further information on the separated east and west measurements, here are the errors on west (log10) vs. errors on east, errors on using the whole TPC vs. east, and whole vs. west. There are classes of runs where (1) the east was bad, (2) the west was bad, and (3) both east and west were reasonable. In class 3, the west error was typically less than the east error, and the result was that the error on using the whole TPC was dominated by the west error for those events.

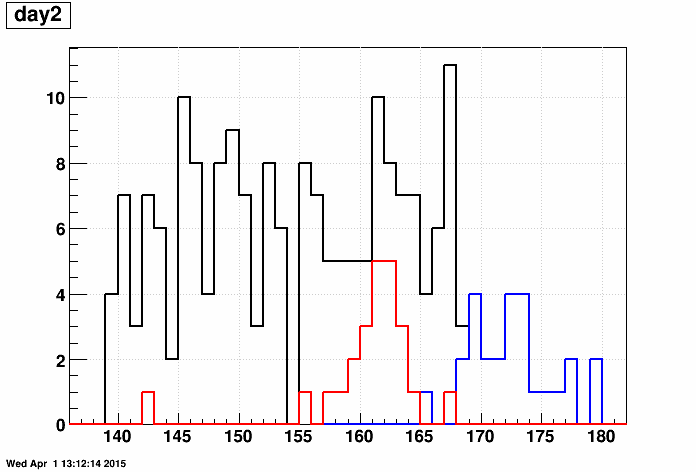

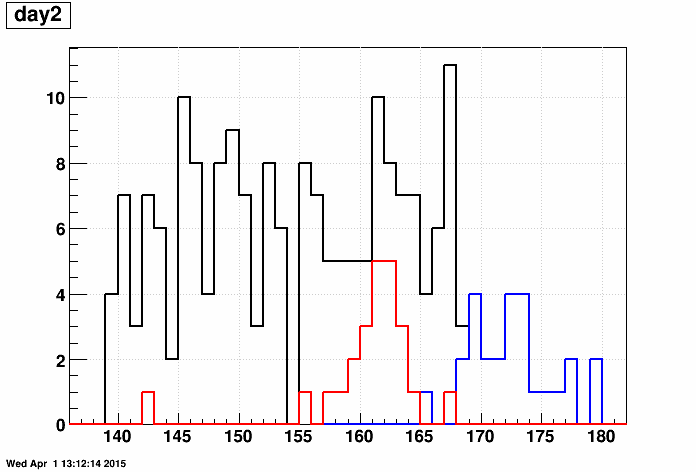

One more piece of interesting information is the distribution of days when we failed to get the separated east and west drift velocities. The following plot is a histogram of the days associated with all drift velocity measurements that came out of the calibration (black), along with the days when the east failed to get a value (red) and days the west failed (blue). The east laser was clearly bad for a large fraction of runs in the day 157-164 range, while the west laser was bad for all runs from day ~168 on!

The missing separability of east and west for large portions of the CuAu data, combined with the small magnitude of the east-west difference for a dataset which is TPC-only tracking, pushes us toward using the whole TPC for the calibration (symmetric drift velocities).

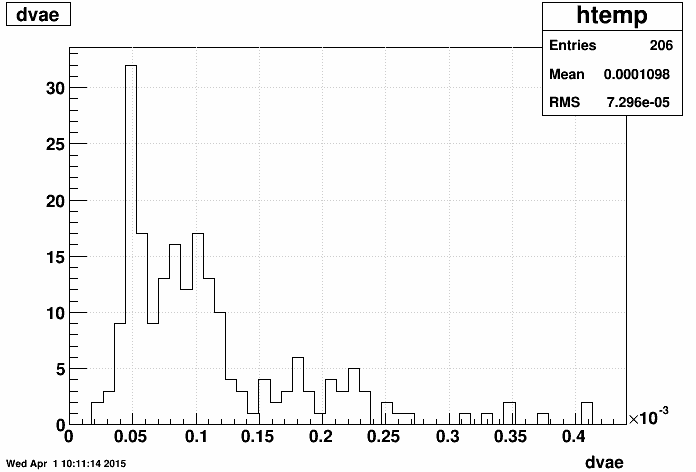

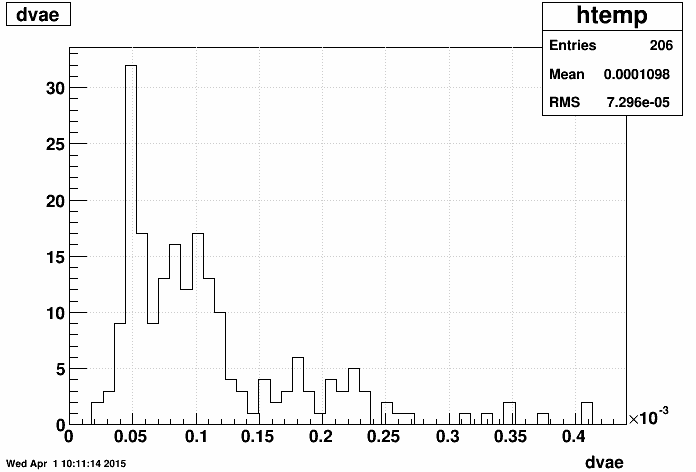

The next question is whether to cut on some maximum measurement error more tightly than what the calibration macro already does in order to remove outliers. Here is the distribution of errors:

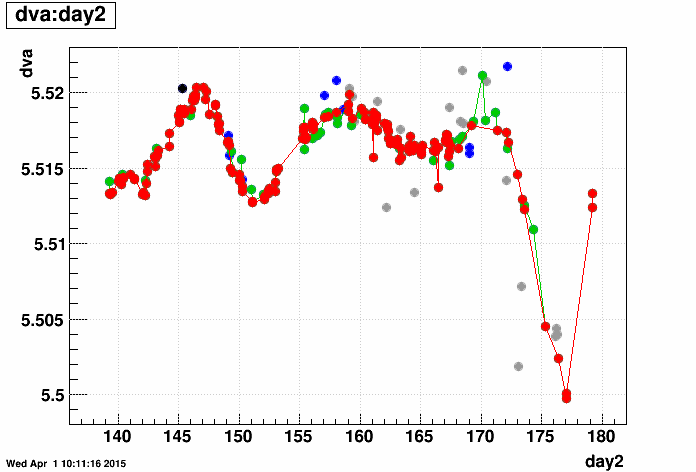

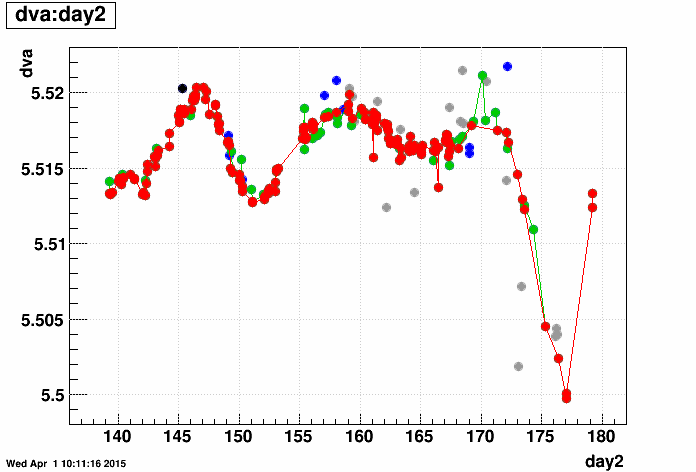

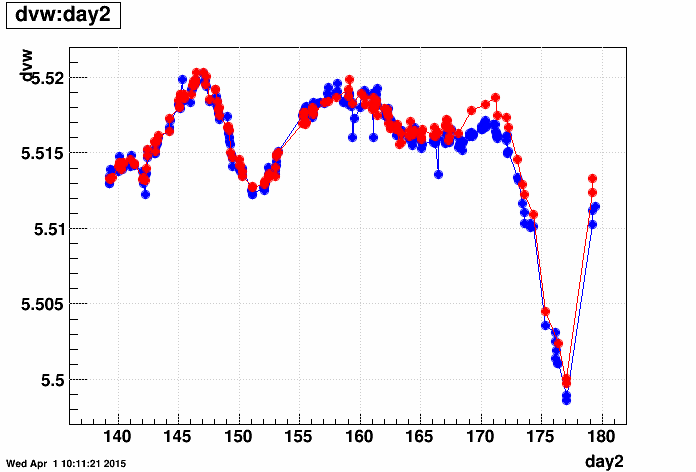

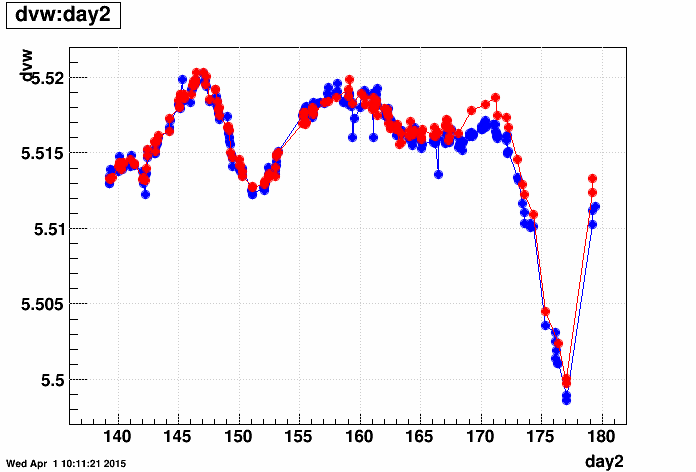

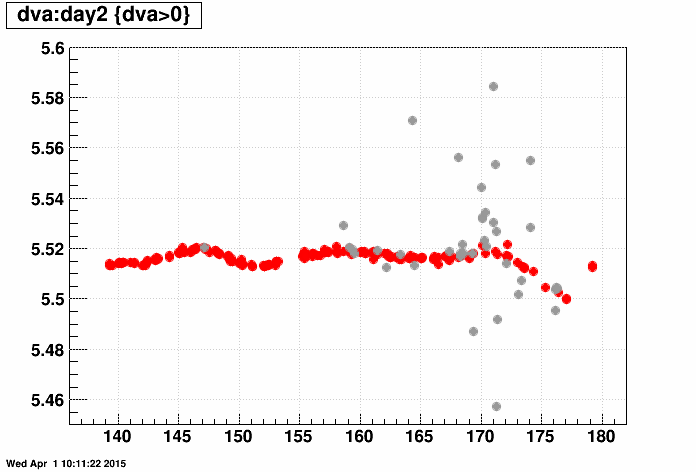

It looks like potential tighter cuts may be at either ~0.15e-3 cm/μs or ~0.3e-3 cm/μs. In the plot below, I show the following whole TPC drift velocity measurements vs. day:

- black: a single laser_rhicclock measurement that appears to be an outlier (will be discarded)

- grey: values as reported by the LanaTrees.C macro which failed the requirements to output a database entry (e.g. errors are much too large)

- red: values with errors less than 0.15e-3 cm/μs

- green: values with errors between 0.15e-3 and 0.2e-3 cm/μs

- blue: values with errors between 0.2e-3 and 0.3e-3 cm/μs
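The error-based color classes in the list above can be sketched as a small helper. This is only an illustration: the function name is hypothetical, and the handling of values exactly at a boundary is an assumption. The black and grey classes are not included because they are not defined by an error threshold (black is a hand-identified outlier, grey failed the macro's own requirements).

```python
# Assumed thresholds taken from the list above, in cm/us.
RED_MAX = 0.15e-3
GREEN_MAX = 0.2e-3
BLUE_MAX = 0.3e-3


def error_class(err):
    """Map a drift velocity error (cm/us) to the plot color class, or None."""
    if err < RED_MAX:
        return "red"
    if err < GREEN_MAX:     # boundary handling here is an assumption
        return "green"
    if err < BLUE_MAX:
        return "blue"
    return None             # larger errors are not part of the colored classes


for e in (0.1e-3, 0.17e-3, 0.25e-3, 0.5e-3):
    print(e, error_class(e))
```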

It appears that there are some outliers even in the red data to be removed, while we might actually want to keep a couple of the green ones near days 170-171. Doing so results in the dataset shown in red below, which will be uploaded to the database. As a comparison, the plot below also includes in blue the data which was already in the database from the calibration of drift velocities done during Run 12 (using the old alignment, old raft positions, and the old LoopOverLaserTrees.C macro):

Some things to see in the comparison with the old calibration:

- The old calibration had some outliers (a couple were identical to outliers in the new calibration) which hadn't been removed.

- Before day 168, the two calibrations seem very similar; after day 168 the new calibration is systematically higher than the old by ~0.002 cm/μs. This seems to coincide with when the west laser became bad. We've seen that the east drift velocity measurements tend to be a little higher than the west by a comparable amount, but the lack of a good west laser applies to both the old and new calibrations, so it does not by itself explain the difference between them.

- It is not obvious from the data shown here whether the old calibration was more trustworthy with poor west laser, or the new calibration, but the naive assumption would be to trust the new calibration more.

- And is it worth trying to correct the values for the absence of west to pull them down? Given that this is a sub-millimeter effect in a TPC-only tracking dataset, probably not.

- The old calibration included several more measurements between days 168-170 than the new calibration. I found that several of those runs had failed the requirement from LanaTrees.C to generate a database entry. Below is a plot of all drift velocities which came out of the new calibration with database entries (red, no other cuts applied) along with the drift velocities reported by the macro that failed (grey). This is similar to the earlier plot, but without restricting the drift velocity range of the scatter plot. From this, one can clearly see that there was a surge in poor laser data in the latter half of the CuAu data operations that LanaTrees.C was perhaps more sensitive to than the old LoopOverLaserTrees.C.

A final comment is that the last drift velocity taken during CuAu was measured using the last production run (13177009), not in a dedicated laser run afterwards. And the next drift velocity value measured two days later was significantly different (by value and slope, or trend). As we record the drift velocity for the timestamp at the beginning of the run, is this a problem for the interpolated drift velocity by the end of run 13177009? As the run is only ~half an hour long, the answer is no: half an hour is ~1% of the two days to the next measurement, so the interpolated value at the end of run 13177009 will move by ~1% of the difference to the next value, or ~1% × 0.01 cm/μs = ~0.0001 cm/μs. That appears to be below our sensitivity for physics and roughly at the level of our measurement error anyhow. So we can ignore this.
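The size of this shift can be sketched with a simple linear interpolation in time. Linear interpolation between neighboring entries is an assumption here, the function name is hypothetical, and the starting drift velocity is a placeholder; only the ~0.5 hour run length, the ~2 day gap, and the ~0.01 cm/μs difference come from the text.

```python
def interpolate_dv(t, t0, dv0, t1, dv1):
    """Linearly interpolate the drift velocity at time t between two entries."""
    return dv0 + (dv1 - dv0) * (t - t0) / (t1 - t0)


RUN_HOURS = 0.5          # approximate length of run 13177009
GAP_HOURS = 48.0         # approximate time to the next measurement
DV_START = 5.5           # cm/us, placeholder value for illustration only
DV_NEXT = DV_START + 0.01  # next entry differs by ~0.01 cm/us

# Shift of the interpolated value between the start and the end of the run.
shift = interpolate_dv(RUN_HOURS, 0.0, DV_START, GAP_HOURS, DV_NEXT) - DV_START
print(f"shift over the run: {shift:.5f} cm/us")
```

The shift is the fraction of the gap covered by the run (about 1%) times the difference between the two entries, which gives roughly 0.0001 cm/μs.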

Therefore, the current proposal is to deactivate the old database entries, and upload the set used in making the red data points in the new vs. old calibration plot shown above.

-Gene
