Data readiness

The pages here relate to the data readiness sub-group of the S&C team. This area comprises calibration, database, and quality assurance.

Please consult the responsibility chart (access-restricted page).

Calibration

STAR Calibrations

In addition to the child pages listed below:

Calibration Run preparation

This page is meant to organize information about the calibration preparations and steps required for each subdetector for upcoming runs.

- Run preparation Run VI (2006)

- Run preparation Run VII

- preparation Run VIII (2008)

Run 18 Calibration Datasets

Below are the calibration dataset considerations for isobar needs in Run 18:

- EMC

- emc-check runs once per fill (at the start)

- For the purpose of EMC pedestals and status tables

- 50k events

- TOF

- VPD

- Resolution needs to be calibrated (for trigger) and confirmed to be similar for both species

- TPC

- Laser runs

- For the purpose of calibrating TPC drift velocities

- Every few hours (as usual)

- Either dedicated laser runs, or included in physics runs

- ~5000 laser events

- GMT inclusion in a fraction of events

- For the purpose of understanding fluctuations in TPC SpaceCharge

- Trigger choice is not very important, as long as it includes TPX and is active during most physics running

- Something around 100-200 Hz is sufficient (too much will cause dead time issues for the trigger)

- Vernier scans

- For the purpose of understanding backgrounds in the TPC that may be different between species

- Once for each species under typical operating conditions

- 4 incremental steps of collision luminosity, each step ~1 minute long and on the order of ~50k events (total = ~4 minutes)

- TPX, BEMC, BTOF must be included

- Minimum bias trigger with no (or wide) vertex z cut

- Low luminosity fill IF a TPC shorted field cage ring occurs

- Only one species needed (unimportant which)

- Minimum bias trigger with no (or wide) vertex z cut

- ~1M events

- ZDC coincidence rates below 3 kHz

- Old ALTRO thresholds run

- For the purpose of TPC dE/dx understanding

- Only one species needed (unimportant which)

- ~2M events

- Could be at the end of a fill, or any other convenient time during typical operations

- Magnetic field flipped to A ("forward") polarity before AuAu27 data

- For the purpose of acquiring sufficient cosmic ray data with both magnetic fields to understand alignment of new iTPC sector

-Gene

Run preparation Run VI (2006)

This page is meant to organize information about the calibration preparations and steps required for each subdetector for upcoming runs. Items with an asterisk (*) need to be completed in advance of data.

For the run in winter 2006, the plan is to take primarily pp data. This may lead to different requirements than in the past.

- TPC

- * Code development for 1 Hz scalers.

- * Testing of T0/Twist code with pp data.

- * Survey alignment information into DB.

- Drift velocity from lasers into DB (automated).

- T0 calibration as soon as data starts.

- Twist calibration as soon as data starts (for each field).

- SpaceCharge and GridLeak (SpaceCharge and GridLeak Calibration How-To Guide) as soon as T0/Twist calibrated (for each field).

- Would like a field flip to identify origins of offsets in SpaceCharge vs. scalers.

- dEdx: final calibration after run ends by sampling over the whole run period, but initial calibration can be done once TPC momentum is well-calibrated (after distortion corrections).

- FTPC

- HV calibration performed at start of data-taking.

- Temperatures into DB (automated)

- Rotation alignment of each FTPC done for each field (needs calibrated vertex from TPC[+SVT][+SSD]). There was concern about doing this calibration with the pp data - status???

- SVT

- Steps for pp should be the same as heavy ion runs, but more data necessary.

- Self-alignment (to be completed January 2006)

- Requires well-calibrated TPC.

- Requires a few million events (a week into the run?)

- Would like field flip to discriminate between SVT/SSD alignment vs. TPC distortions.

- SSD

- * Code development in progress (status???)

- Requires well-calibrated TPC.

- EEMC

- EEMC initial setting & monitoring during data taking relies on prompt and fully automatic muDst EzTree production for all minbias-only fast-only runs. Assume fast offline muDst exist on disk for 1 week.

- Initial settings for HV: 500k minbias fast-triggered events will give slopes necessary to adjust relative gains. Same 60 GeV E_T maximum scale as in previous years.

- Pedestals from 5k minbias events, once per fill.

- Stability of towers from one 200k minbias run per fill.

- Highly prescaled minbias and zerobias events in every run for "general monitoring" (e.g. correlated pedestal shift)

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Basic" offline calibration from MIP response in 5M minbias fast events (taken a few weeks into the run)

- "Final" offline calibration from pi0 (or other TBD) signal requires "huge" statistics of EHT and EJP triggers to do tower-by-tower (will need the full final dataset).

- * Calibration codes exist, but are scattered. Need to be put in CVS with consistent paths and filenames.

- BEMC

- * LED runs to set HV for 60 GeV E_T maximum, changed from previous years (status???).

- Online HV calibration from 300k minbias events (eta ring by eta ring) - requires "full TPC reconstruction".

- MuDsts from calibration runs feedback to run settings (status???).

- Pedestals from low multiplicity events from the event pool every 24 hours.

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Final" offline tower-by-tower calibration from MIPs and electrons using several million events.

- TOF

- upVPD upgrade (coming in March 2006) for better efficiency and start resolution

- Need TPC momentum calibrated first.

- Requires several million events, less with upVPD (wait for upVPD???)

Run preparation Run VII

There is some question as to whether certain tasks need to be done this year because the detector was not moved during the shutdown period. Any such omission should be justified before the task is skipped!

- TPC

- Survey alignment information into DB (appears to be no survey data for TPC this year)

- High stats check of laser drift velocity calibration once there's gas in the TPC: 30k laser events with and without B field.

- Check of reversed GG cable on sector 8 (lasers) once there's gas in the TPC: same laser run as above

- Drift velocity from laser runs (laser runs taken once every 3-4 hours, ~2-3k events, entire run) into DB (automated); check that it's working

- T0 calibration, new method from laser runs (same laser runs as above).

- Twist calibration as soon as data starts: ~100k events, preferably central/high multiplicity, near start of running for each field

- SpaceCharge and GridLeak (SpaceCharge and GridLeak Calibration How-To Guide) as soon as T0/Twist calibrated: ~100k events from various luminosities, for each field.

- BBC scaler study for correlation with azimuthally asymmetric backgrounds in TPC: needs several days of generic data.

- Zerobias run with fast detectors to study short time scale fluctuations in luminosity (relevant for ionization distortions): needs a couple minutes of sequential, high rate events taken at any time.

- Need a field flip to identify origins of offsets in SpaceCharge vs. scalers as well as disentangling TPC distortions from silicon alignment.

- dEdx: final calibration after run ends by sampling over the whole run period, but initial calibration can be done once TPC momentum is well-calibrated (after distortion corrections).

- FTPC

- HV calibration performed at start of data-taking (special HV runs).

- Temperatures into DB (automated)

- Rotation alignment of each FTPC done for each field (needs calibrated vertex from TPC[+SVT][+SSD]): generic collision data

- SSD

- Pulser runs (for initial gains and alive status?)

- Initial alignment can be done using roughly-calibrated TPC: ~100k minbias events.

- P/N Gain-matching (any special run requirements?)

- Alignment, needs fully-calibrated TPC: 250k minbias events from one low luminosity (low TPC distortions/background/occupancy/pile-up) fill, for each field, +/-30 cm vertex constraint; collision rate preferably below 1 kHz.

- SVT

- Temp oscillation check with lasers: generic data once triggers are set.

- Initial alignment can be done using roughly-calibrated TPC+SSD: ~100k minbias events.

- Alignment, needs fully-calibrated TPC: 250k minbias events from one low luminosity fill (see SSD).

- End-point T0 + drift velocity, needs fully-calibrated SSD+TPC: same low luminosity runs for initial values, watched during rest of run.

- Gains: same low luminosity runs.

- EEMC

- Timing scan of all crates: few hours of beam time, ~6 minbias-fast runs (5 minutes each) for TCD phase of all tower crates, another 6 minbias runs for the timing of the MAPMT crates, 2 days analysis

- EEMC initial setting & monitoring during data taking: requests to process specific data will be made as needed during the run.

- Initial settings for HV: 200k minbias fast-triggered events will give slopes necessary to adjust relative gains, 2 days analysis

- Pedestals (for offline DB) from 5k minbias events, once per 5 hours

- Stability of towers from one 200k minbias-fast run per fill

- "General monitoring" (e.g. correlated pedestal shift) from highly prescaled minbias and zerobias events in every run.

- Beam background monitoring from highly prescaled EEMC-triggered events with TPC at the beginning of each fill.

- Expect commissioning of triggers using EMC after one week of collisions

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Basic" offline calibration from MIP response in 5M minbias fast events taken a few weeks into the run

- "Final" offline calibration from pi0 (or other TBD) signal requires "huge" statistics of EHT and EJP triggers to do tower-by-tower (still undone for previous runs).

- Calibration codes exist, but are scattered. Need to be put in CVS with consistent paths and filenames (status?)

- BEMC

- Timing scan of all crates

- Online HV calibration of towers - do for outliers and new PMTs/crates; needed for EMC triggering. Needs ~5 minutes of minbias fast-triggered events (eta ring by eta ring) at beginning of running (once a day for a few days) - same runs as for EEMC.

- Online HV calibration of preshower - matching slopes. Not done before, will piggyback off other datasets.

- Pedestals from low multiplicity events from the event pool every 24 hours.

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Final" offline tower-by-tower calibration from MIPs and electrons using several million events

- upVPD/TOF

- upVPD (calibration?)

- No TOF this year.

preparation Run VIII (2008)

This page is meant to organize information about the calibration preparations and steps required for each subdetector for upcoming runs.

Previous runs:

Red means that nothing has been done for this (yet), or that this needs to continue through the run.

Blue means that the data has been taken, but the calibration task is not completed yet.

Black indicates nothing (more) remaining to be done for this task.

- TPC

- Survey alignment information into DB (appears to be no survey data for TPC this year)

- Drift velocity from laser runs (laser runs taken once every 3-4 hours, ~2-3k events, entire run) into DB (automated); check that it's working

- T0 calibration, using vertex-matching (~500k events, preferably high multiplicity, once triggers are in place).

- Twist calibration as soon as data starts: same data

- SpaceCharge and GridLeak (SpaceCharge and GridLeak Calibration How-To Guide) as soon as T0/Twist calibrated: ~500k events from various luminosities, for each field.

- BBC scaler study for correlation with azimuthally asymmetric backgrounds in TPC: needs several days of generic data.

- Zerobias run with fast detectors to study short time scale fluctuations in luminosity (relevant for ionization distortions): needs a couple minutes of sequential, high rate events taken at any time.

- dEdx: final calibration after run ends by sampling over the whole run period, but initial calibration can be done once TPC momentum is well-calibrated (after distortion corrections).

- TPX

- ???

- FTPC

- HV calibration performed at start of data-taking (special HV runs).

- Temperatures into DB (automated)

- Rotation alignment of each FTPC done for each field (needs calibrated vertex from TPC[+SVT][+SSD]): generic collision data

- EEMC

- Timing scan of all crates: few hours of beam time, ~6 fast runs (5 minutes each) for TCD phase of all tower crates

- EEMC initial setting & monitoring during data taking: requests to process specific data will be made as needed during the run.

- Initial settings for HV: 500k minbias fast-triggered events will give slopes necessary to adjust relative gains, 2 days analysis

- Pedestals (for offline DB) from 5k minbias events, once per fill

- "General monitoring" (e.g. correlated pedestal shift) from highly prescaled minbias and zerobias events in every run.

- Beam background monitoring from highly prescaled EEMC-triggered events with TPC at the beginning of each fill.

- Expect commissioning of triggers using EMC after one week of collisions

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Basic" offline calibration from MIP response in 5M minbias fast events taken a few weeks into the run

- "Final" offline calibration from pi0 (or other TBD) signal requires "huge" statistics of EHT and EJP triggers to do tower-by-tower.

- Calibration codes exist, but are scattered. Need to be put in CVS with consistent paths and filenames (status?)

- BEMC

- Timing scan of all crates

- Online HV calibration of towers - do for outliers and new PMTs/crates; needed for EMC triggering. Needs ~5 minutes of minbias fast-triggered events (eta ring by eta ring) at beginning of running (once a day for a few days) - same runs as for EEMC.

- Online HV calibration of preshower - matching slopes. (same data).

- Pedestals from low multiplicity events from the event pool every 24 hours.

- Offline calibrations unnecessary for production (can be done on the MuDst level).

- "Final" offline tower-by-tower calibration from MIPs and electrons using several million events

- PMD

- Hot Cells (~100k generic events from every few days?)

- Cell-by-cell gains (same data)

- SM-by-SM gains (same data)

- VPD/TOF

- T-TOT (~3M TOF-triggered events)

- T-Z (same data)

Calibration Schedules

The listed dates should be considered deadlines for production readiness. Known issues with any of the calibrations by the deadline should be well-documented by the subsystems.

- Run 15: projected STAR physics operations end date of 2015-06-19 (CAD) 2015-06-22

- pp200

- tracking: 2015-07-22

- all: 2015-08-22

- pAu200

- tracking: 2015-08-22

- all: 2015-09-05

- pAl200

- tracking: 2015-09-22

- all: 2015-10-06

Calibration topics by dataset

Focus here will be on topics of note by dataset

Run 12 CuAu200

Regarding the P14ia Preview Production of Run 12 CuAu200 from early 2014:

A check-list of observables and points to consider to help understand analyses' sensitivity to non-final calibrations

To unambiguously see issues due to mis-calibration of the TPC, stringent determination of triggered-event tracks is necessary. Pile-up tracks are expected to be incorrect in many ways, and they constitute a larger and larger fraction of TPC tracks as luminosity grows, so their inclusion can lead to luminosity-dependencies of what appear to be mis-calibrations but are not.

- TPC dE/dx PID (not calibrated)

- differences between real data dE/dx peaks' means and width vs. nsigma provided in the MuDst

- variability of these differences with time

- TPC alignment (old one used)

- sector-by-sector variations in charge-separated signed DCAs and momentum spectra (e.g h-/h+ pT spectra) that are time- and luminosity-independent

- differences in charge-separated invariant masses from expectations that are time- and luminosity-independent

- any momentum effects (including invariant masses) grow with momentum: delta(pT) is proportional to q*pT^2

- alternatively, and perhaps more directly, delta(q/pT) effects are constant, and one could look at charge-separated 1/pT from sector-to-sector

- TPC SpaceCharge & GridLeak (preliminary calibration)

- sector-by-sector variations in charge-separated DCAs and momentum spectra that are luminosity-dependent

- possible track splitting between TPC pad rows 13 and 14 + possible track splitting at z=0, in the radial and/or azimuthal direction

- differences in charge-separated invariant masses from expectations that are luminosity-dependent

- any momentum effects (including invariant masses) grow with momentum: delta(pT) is proportional to q*pT^2

- alternatively, and perhaps more directly, delta(q/pT) effects are constant, and one could look at charge-separated 1/pT from sector-to-sector

- TPC T0 & drift velocities (preliminary calibration)

- track splitting at z=0, in the z direction

- splitting of primary vertices into two close vertices (and subsequent irregularities in primary track event-wise distributions)

- TOF PID (VPD not calibrated, BTOF calibration from Run 12 UU used) [particularly difficult to disentangle from TPC calibration issues]

- not expected to be much of an issue, as the "startless" mode of using BTOF was forced (no VPD) and the calibration used for BTOF is expected to be reasonable

- broadening of, and differences in, mass^2 peak positions from expectations are more likely due to TPC issues (particularly if charge-dependent, as BTOF mis-calibrations should see no charge sign dependence)

- while TOF results may not be the best place to identify TPC issues, it is worth noting that BTOF-matching is beneficial to removing pile-up tracks from studies
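The two ways of stating the momentum effect in the TPC alignment and SpaceCharge & GridLeak items above (delta(pT) proportional to q*pT^2, versus a constant delta(q/pT)) are the same statement. A short worked step, assuming singly charged tracks (q = ±1) and a small shift ε in q/pT that is the same for all tracks crossing a given sector (ε is a symbol introduced here for illustration):

```latex
\delta\!\left(\frac{q}{p_T}\right) = -\frac{q}{p_T^{2}}\,\delta p_T = \epsilon
\quad\Longrightarrow\quad
\delta p_T = -\frac{\epsilon\,p_T^{2}}{q} = -\epsilon\,q\,p_T^{2}
\qquad (q = \pm 1 \;\Rightarrow\; 1/q = q)
```

The shift in pT therefore has opposite sign for the two charges and its relative size, delta(pT)/pT, grows linearly with pT, which is why charge-separated 1/pT distributions compared sector-to-sector are a more direct probe than pT spectra.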

Docs

Miscellaneous calibration-related documents

Intrinsic resolution in a tracking element

Foreword: This has probably been worked out in a textbook somewhere, but I wanted to write it down for my own sake. This is a re-write (hopefully more clear, with slightly better notation) of Appendix A of my PhD thesis (I don't think it was well-written there)...

-Gene

_______________________

Let's establish a few quantities:

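- $E_{\mathrm{intr}}$ : error on the measurement by the element in question

- $\sigma_{\mathrm{intr}}^2 = \langle E_{\mathrm{intr}}^2 \rangle$ : intrinsic resolution of the element, and its relation to an ensemble of errors in measurement

- $E_{\mathrm{proj}}$ : error on the track projection to that element (excluding the element from the track fit)

- $\sigma_{\mathrm{proj}}^2 = \langle E_{\mathrm{proj}}^2 \rangle$ : resolution of the track projection to an element, and its relation to an ensemble of errors in track projections

- $E_{\mathrm{track}}$ : error on track fit at an element including the element in the fit

- $R_{\mathrm{incl}} = E_{\mathrm{intr}} - E_{\mathrm{track}}$ : residual difference between the measurement and the inclusive track fit

- $\sigma_{\mathrm{incl}}^2 = \langle (E_{\mathrm{intr}} - E_{\mathrm{track}})^2 \rangle$ : resolution from the inclusive residuals

- $R_{\mathrm{excl}} = E_{\mathrm{intr}} - E_{\mathrm{proj}}$ : residual difference between the measurement and the exclusive track fit

- $\sigma_{\mathrm{excl}}^2 = \langle (E_{\mathrm{intr}} - E_{\mathrm{proj}})^2 \rangle$ : resolution from the exclusive residuals

Our goal is to determine $\sigma_{\mathrm{intr}}$ given that we can only observe $\sigma_{\mathrm{incl}}$ and $\sigma_{\mathrm{excl}}$.

To that end, we utilize a guess, $\sigma'_{\mathrm{intr}}$, and write down a reasonable estimation of $E_{\mathrm{track}}$ using a weighted average of $E_{\mathrm{intr}}$ and $E_{\mathrm{proj}}$, where the weights are $w_{\mathrm{proj}} = 1/\sigma_{\mathrm{proj}}^2$ and $w_{\mathrm{intr}} = 1/\sigma'^{2}_{\mathrm{intr}}$:

$$E_{\mathrm{track}} = \frac{(E_{\mathrm{intr}} / \sigma'^{2}_{\mathrm{intr}}) + (E_{\mathrm{proj}} / \sigma_{\mathrm{proj}}^2)}{(1/\sigma'^{2}_{\mathrm{intr}}) + (1/\sigma_{\mathrm{proj}}^2)}$$

$$= \frac{\sigma_{\mathrm{proj}}^2 E_{\mathrm{intr}} + \sigma'^{2}_{\mathrm{intr}} E_{\mathrm{proj}}}{\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2}$$

Substituting this, we find...

$$\sigma_{\mathrm{incl}}^2 = \langle E_{\mathrm{intr}}^2 \rangle - 2 \langle E_{\mathrm{intr}} E_{\mathrm{track}} \rangle + \langle E_{\mathrm{track}}^2 \rangle$$

$$= \sigma_{\mathrm{intr}}^2 - 2 \left\langle E_{\mathrm{intr}} \, \frac{\sigma_{\mathrm{proj}}^2 E_{\mathrm{intr}} + \sigma'^{2}_{\mathrm{intr}} E_{\mathrm{proj}}}{\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2} \right\rangle + \left\langle \left( \frac{\sigma_{\mathrm{proj}}^2 E_{\mathrm{intr}} + \sigma'^{2}_{\mathrm{intr}} E_{\mathrm{proj}}}{\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2} \right)^2 \right\rangle$$

Dropping terms of $\langle E_{\mathrm{intr}} E_{\mathrm{proj}} \rangle$ (i.e. taking the measurement and projection errors to be uncorrelated), replacing terms of $\langle E_{\mathrm{proj}}^2 \rangle$ and $\langle E_{\mathrm{intr}}^2 \rangle$ with $\sigma_{\mathrm{proj}}^2$ and $\sigma_{\mathrm{intr}}^2$ respectively, and multiplying through such that all terms on the right-hand side of the equation have the denominator $(\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2)^2$, we find

$$\sigma_{\mathrm{incl}}^2 = \frac{\sigma_{\mathrm{intr}}^2 \sigma'^{4}_{\mathrm{intr}} + \sigma'^{4}_{\mathrm{intr}} \sigma_{\mathrm{proj}}^2}{(\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2)^2}$$

$$= \frac{\sigma'^{4}_{\mathrm{intr}} \, (\sigma_{\mathrm{intr}}^2 + \sigma_{\mathrm{proj}}^2)}{(\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{proj}}^2)^2}$$

We can substitute for $\sigma_{\mathrm{proj}}^2$ using $\sigma_{\mathrm{excl}}^2 = \sigma_{\mathrm{intr}}^2 + \sigma_{\mathrm{proj}}^2$ and take the square root:

$$\sigma_{\mathrm{incl}} = \frac{\sigma'^{2}_{\mathrm{intr}} \, \sigma_{\mathrm{excl}}}{\sigma'^{2}_{\mathrm{intr}} + \sigma_{\mathrm{excl}}^2 - \sigma_{\mathrm{intr}}^2}$$

And solving for $\sigma_{\mathrm{intr}}$ we find:

$$\sigma_{\mathrm{intr}} = \sqrt{ \sigma_{\mathrm{excl}}^2 - \sigma'^{2}_{\mathrm{intr}} \left[ \frac{\sigma_{\mathrm{excl}}}{\sigma_{\mathrm{incl}}} - 1 \right] }$$

This is an estimator of $\sigma_{\mathrm{intr}}$. Ideally, $\sigma_{\mathrm{intr}}$ and $\sigma'_{\mathrm{intr}}$ should be the same. One can iterate a few times, starting with a good guess for $\sigma'_{\mathrm{intr}}$ and then replacing it in later iterations with the $\sigma_{\mathrm{intr}}$ found from the previous iteration, until the two are approximately equal.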
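The iteration described above can be sketched in a few lines. This is a minimal illustration, not code from the STAR libraries: the function name `intrinsic_resolution`, the tolerance, and the input widths `sigma_incl` and `sigma_excl` are hypothetical. Setting the guess equal to the result in the estimator gives `sigma_intr**2 == sigma_incl * sigma_excl`, so the loop should settle at the geometric mean of the two observed widths. Note that the update only contracts when `sigma_excl / sigma_incl < 2`.

```python
import math


def intrinsic_resolution(sigma_incl, sigma_excl, guess, tol=1e-9, max_iter=200):
    """Iterate sigma_intr = sqrt(sigma_excl^2 - guess^2 * (sigma_excl/sigma_incl - 1))."""
    ratio = sigma_excl / sigma_incl
    current = guess
    for _ in range(max_iter):
        variance = sigma_excl**2 - current**2 * (ratio - 1.0)
        if variance < 0.0:
            raise ValueError("guess too far from the solution: negative variance")
        updated = math.sqrt(variance)
        if abs(updated - current) < tol:
            return updated
        current = updated  # feed the new estimate back in as the next guess
    raise RuntimeError("iteration did not converge")


# Hypothetical observed residual widths (arbitrary units).
sigma_incl, sigma_excl = 0.8, 1.25
result = intrinsic_resolution(sigma_incl, sigma_excl, guess=0.9)
print(result, math.sqrt(sigma_incl * sigma_excl))
```

Comparing the printed pair is a quick sanity check that the iteration has reached the self-consistent point of the estimator.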

_______

-Gene

SVT Calibrations

SVT Self-Alignment

Using tracks fit with SVT points plus a primary vertex alone, we can self-align the SVT using residuals to the fits. This document explains how this can be done, but only works for the situation in which the SVT is already rather well aligned, and only small scale alignment calibration remains. The technique explained herein also allows for calibration of the hybrid drift velocities.

TPC Calibrations

TPC Calibration & Data-Readiness Tasks:

Notes:

* "Run", with a capital 'R', refers to a year's Run period (e.g. Run 10)

* Not all people who have worked on various tasks are listed as they were recalled only from (faulty) memory and only primary persons are shown. Corrections and additions are welcome.

- You do not have access to view this node

- Should be done each time the TPC may be moved (e.g. STAR is rolled out and back into the WAH) (not done in years)

- Must be done before magnet endcaps are moved in

- Past workers: J. Castillo (Runs 3,4), E. Hjort (Runs 5,6), Y. Fisyak (Run 14)

- TPC Pad Pedestals

- Necessary for online cluster finding and zero suppression

- Uses turned down anode HV

- Performed by DAQ group frequently during Run

- Past workers: A. Ljubicic

- TPC Pad Relative Gains & Relative T0s

- Necessary for online cluster finding and zero suppression

- Uses pulsers

- Performed by DAQ group occasionally during Run

- Past workers: A. Ljubicic

- TPC Dead RDOs

- Influences track reconstruction

- Monitored by DAQ

- Past workers: A. Ljubicic

- You do not have access to view this node

- Monitor continually during Run

- Currently calibrated from laser runs and uploaded to the DB automatically

- Past workers: J. Castillo (Runs 3,4), E. Hjort (Runs 5,6), A. Rose (Run 7), V. Dzhordzhadze (Run 8), S. Shi (Run 9), M. Naglis (Run 10), G. Van Buren (Run 12)

- TPC Anode HV

- Trips should be recorded so that the affected data can be avoided during reconstruction

- Dead regions may influence track reconstruction

- Reduced voltage will influence dE/dx

- Dead/reduced voltage near inner/outer boundary will affect GridLeak

- Past workers: G. Van Buren (Run 9)

- TPC Floating Gated Grid Wires

- Wires no longer connected to voltage cause minor GridLeak-like distortions at one GG polarity

- Currently known to be two wires in Sector 3 (seen in reversed polarity), and two wires in sector 8 (corrected with reversed polarity)

- Past workers: G. Van Buren (Run 5)

- TPC T0s

- Potentially: global, sector, padrow

- Could be different for different triggers

- Once per Run

- Past workers: J. Castillo (Runs 3,4), E. Hjort (Runs 5,6), G. Webb (Run 9), M. Naglis (Runs 10,11,12), Y. Fisyak (Run 14)

- You do not have access to view this node

- Dependence of reconstructed time on pulse height seen only in Run 9 so far (un-shaped pulses)

- Past workers: G. Van Buren (Run 9)

- You do not have access to view this node

- Known shorts can be automatically monitored and uploaded to the DB during Run

- New shorts need determination of location and magnitude, and may require low luminosity data

- Past workers: G. Van Buren (Runs 4,5,6,7,8,9,10)

- You do not have access to view this node

- Two parts: Inner/Outer Alignment, and Super-Sector Alignment

- Requires low luminosity data

- In recent years, done at least once per Run, and per magnetic field setting (perhaps not necessary)

- Past workers: B. Choi (Run 1), H. Qiu (Run 7), G. Van Buren (Run 8), G. Webb (Run 9), L. Na (Run 10), Y. Fisyak (Run 14)

- TPC Clocking (Rotation of east half with respect to west)

- Best done with low luminosity data

- Calibration believed to be infrequently needed (not done in years)

- Past workers: J. Castillo (Runs 3,4), Y. Fisyak (Run 14)

- TPC IFC Shift

- TPC Twist (ExB) (Fine Global Alignment)

- Best done with low luminosity data

- At least once per Run, and per magnetic field setting

- Past workers: J. Castillo (Runs 3,4), E. Hjort (Runs 5,6), A. Rose (Runs 7,8,9), Z. Ahammed (Run 10), R. Negrao (Run 11), M. Naglis (Run 12), J. Campbell (Runs 13,14), Y. Fisyak (Run 14)

- You do not have access to view this node

- Done with low (no) luminosity data from known distortions

- Done once (but could benefit from checking again)

- Past workers: G. Van Buren (Run 4), M. Mustafa (Run 4 repeated)

- You do not have access to view this node

- At least once per Run, and per beam energy & species, and per magnetic field setting

- Past workers: J. Dunlop (Runs 1,2), G. Van Buren (Runs 4,5,6,7,8-dAu,12-pp500), H. Qiu (Run 8-pp), J. Seele (Run 9-pp500), G. Webb (Run 9-pp200), J. Zhao (Run 10), A. Davila (Runs 11,12-UU192,pp200,pp500), D. Garand (Run 12-CuAu200), M Vanderbroucke (Run 13), M. Posik (Run 12-UU192 with new alignment), P. Federic (Run 12-CuAu200 R&D)

- You do not have access to view this node

- Once per Run, and per beam energy & species, and per magnetic field setting

- Past workers: Y. Fisyak (Runs 1,2,3,4,5,6,7,9), P. Fachini (Run 8), L. Xue (Run 10), Y. Guo (Run 11), M. Skoby (Runs 9-pp200,12-pp200,pp500), R. Haque (Run 12-UU192,CuAu200)

- TPC Hit Errors

- Once per Run, and per beam energy & species, and per magnetic field setting

- Past workers: V. Perev (Runs 5,7,9), M. Naglis (Runs 9-pp200,10,11,12-UU193), R. Witt (Run 12-pp200,pp500, Run13)

- TPC Padrow 13 and Padrow 40 static distortions

- Once ever

- Past workers: B. Choi (Run 1), J. Thomas (Runs 1,18,19), G. Van Buren (Runs 18,19), I. Chakaberia (Runs 18,19)

To better understand the effect of distortions on momentum measurements in the TPC, the attached sagitta.pdf file shows the relationship between a track's sagitta and its transverse momentum.
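As a rough numerical companion (not taken from the attached file), the standard relation for a charged track in a uniform solenoidal field can be evaluated directly: the radius of curvature is R[m] = pT[GeV/c] / (0.2998 B[T]), and the sagitta over a chord of length L is R - sqrt(R^2 - (L/2)^2), approximately L^2/(8R). The function name `sagitta_cm`, the 0.5 T field value, and the 150 cm chord below are assumptions chosen for illustration only.

```python
import math


def sagitta_cm(pt_gev, b_tesla, chord_cm):
    """Sagitta (cm) of a circular track arc spanning a chord of the given length."""
    radius_cm = 100.0 * pt_gev / (0.2998 * b_tesla)  # R[m] = pT / (0.2998 * B)
    half_chord = chord_cm / 2.0
    if half_chord > radius_cm:
        raise ValueError("track curls up before spanning the chord")
    return radius_cm - math.sqrt(radius_cm**2 - half_chord**2)


# Assumed field and lever arm; pT values are arbitrary sample points.
for pt in (0.5, 1.0, 5.0, 10.0):
    print(f"pT = {pt:5.1f} GeV/c -> sagitta = {sagitta_cm(pt, 0.5, 150.0):.3f} cm")
```

Because the sagitta falls as 1/pT, a fixed distortion-induced sagitta error corresponds to a relative momentum error that grows linearly with pT.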

Miscellaneous TPC calibration notes

The log file for automated drift velocity calculations is at ~starreco/AutoCalib.log.

Log files for fast offline production are at /star/rcf/prodlog/dev/log/daq.

The CVS area for TPC calibration related scripts, macros, etc., is StRoot/macros/calib.

Padrow 13 and Padrow 40 static distortions

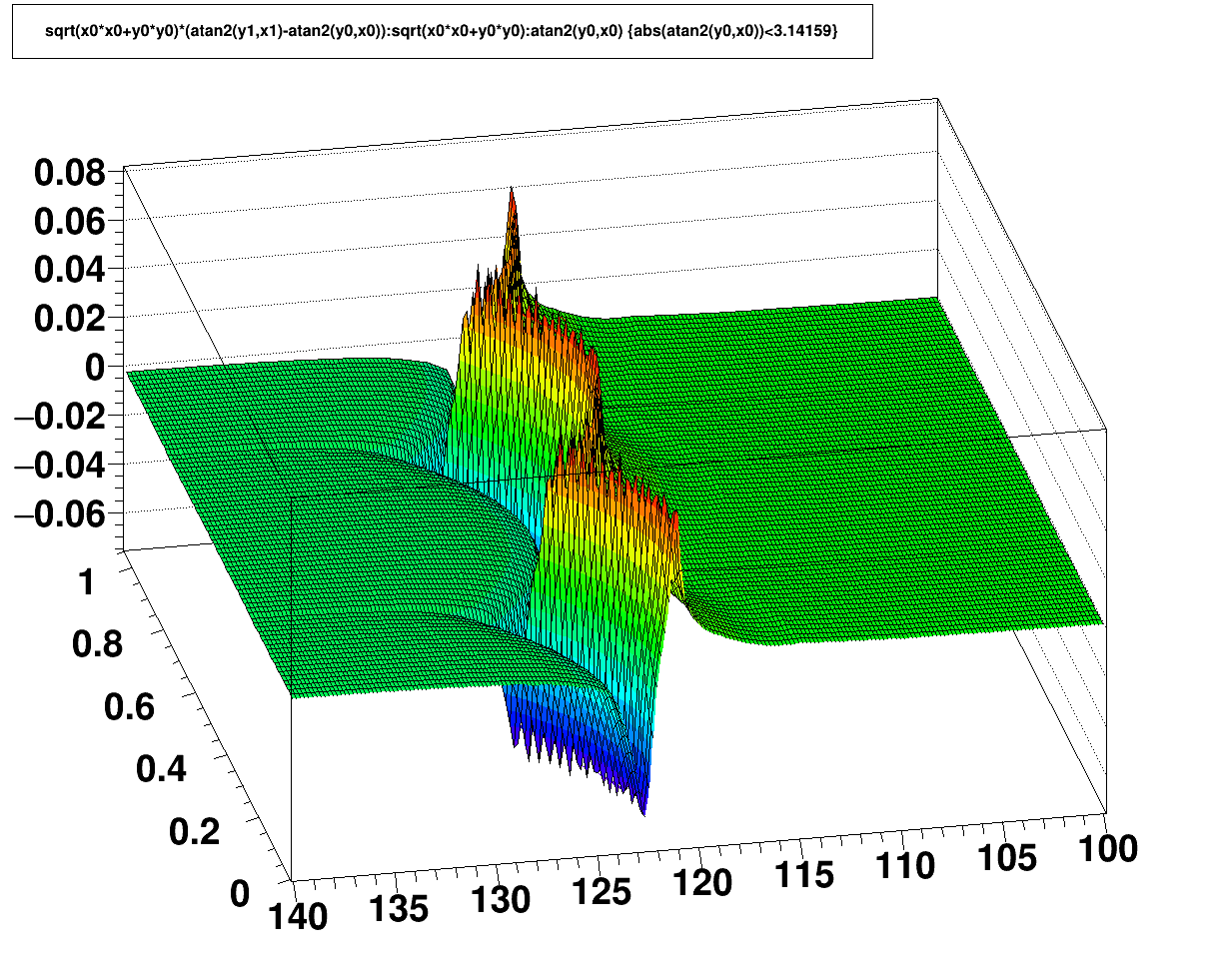

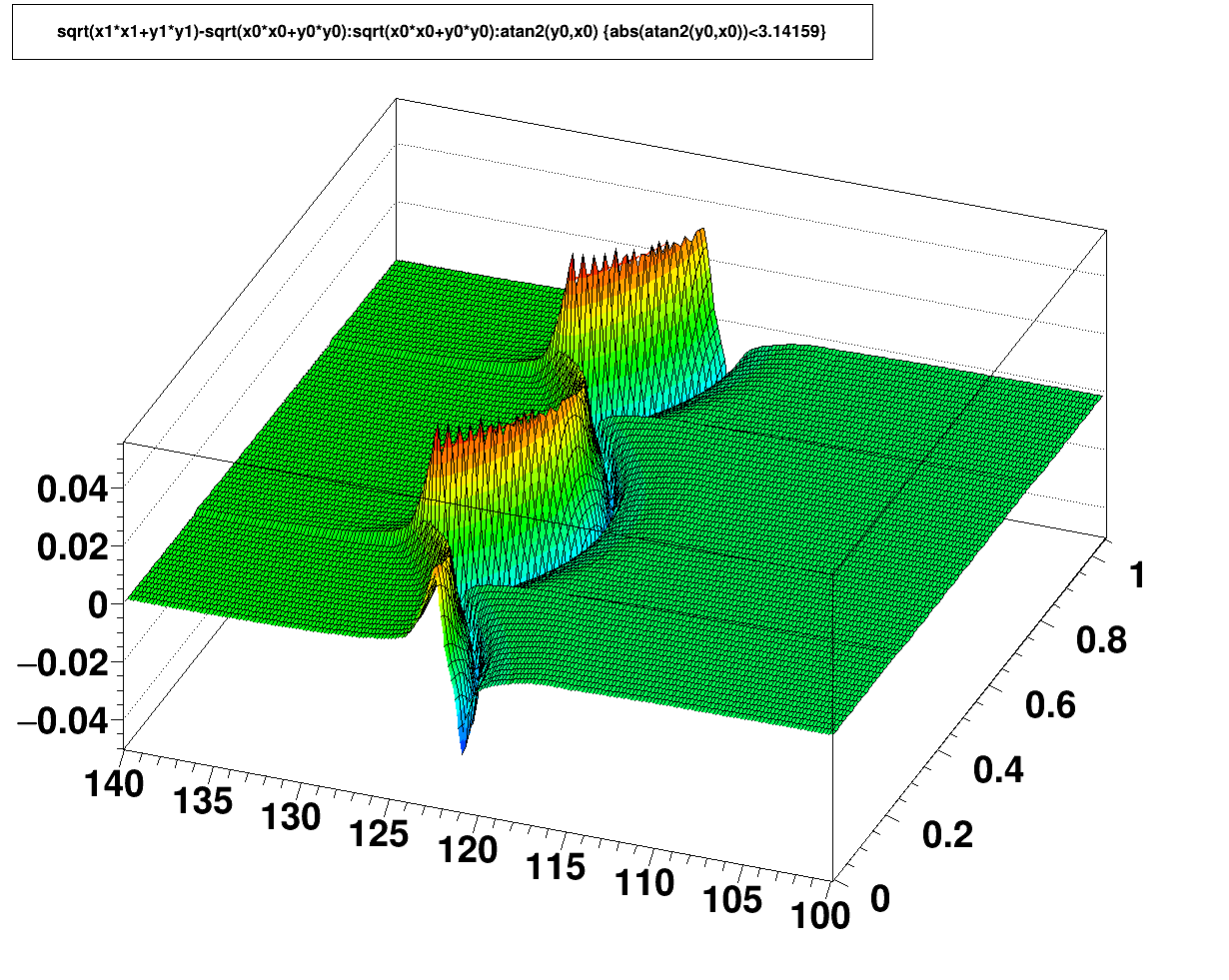

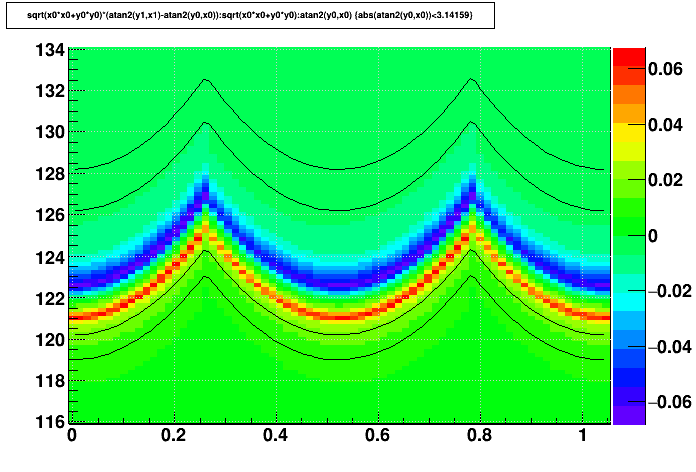

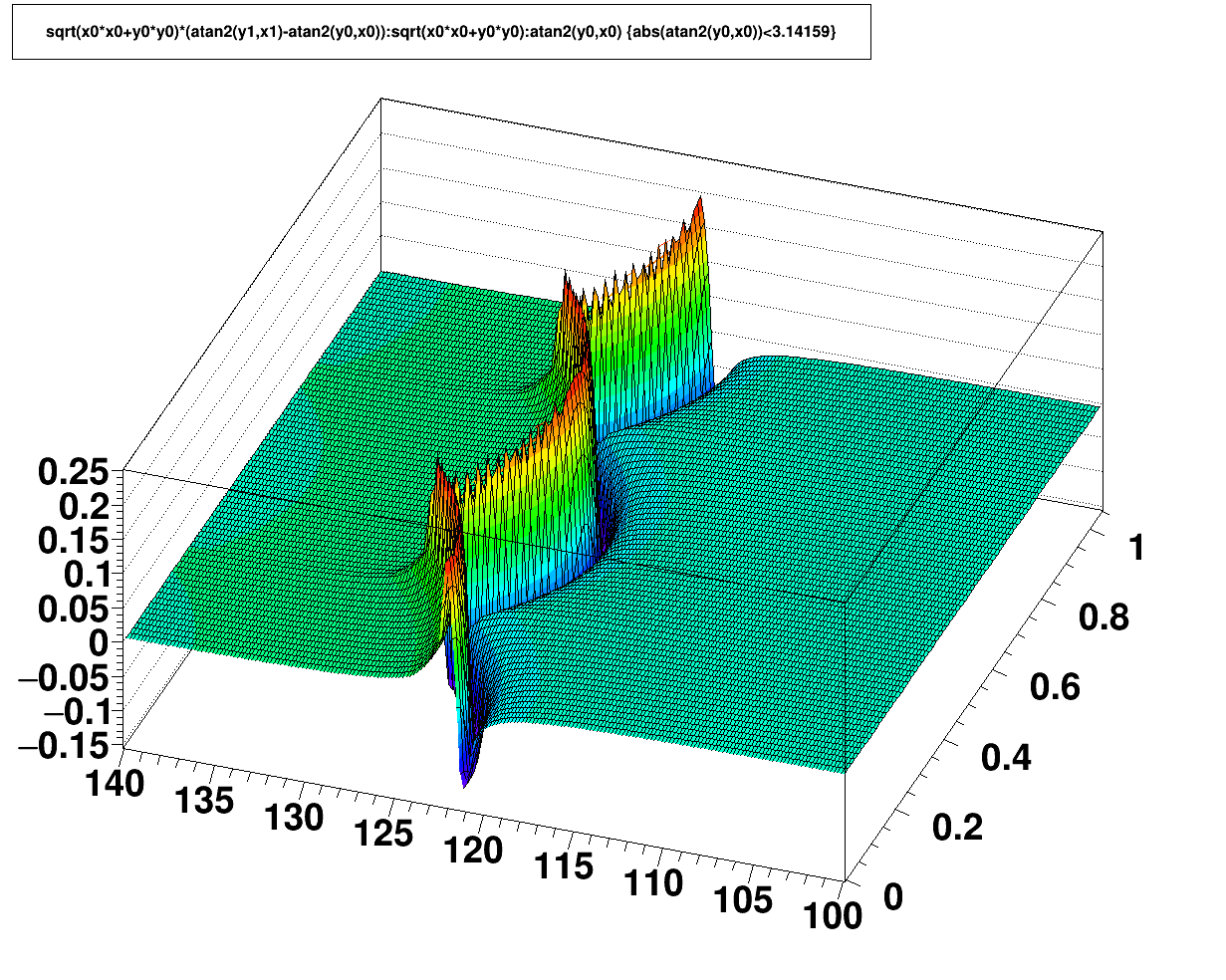

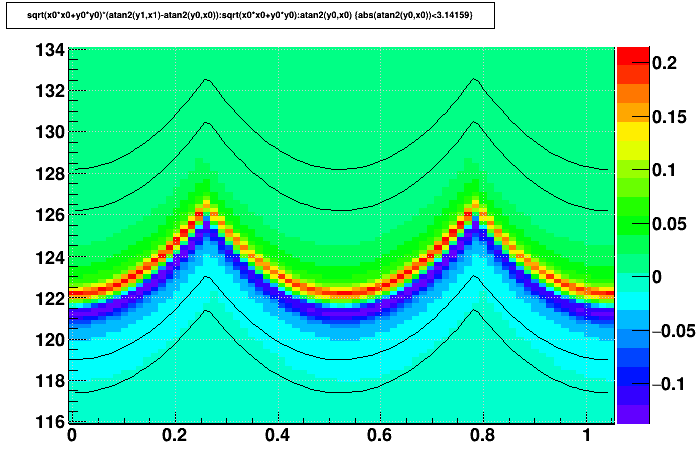

These two distortion corrections focus on static non-uniformities in the electric field in the gating grid region of the TPC, radially between the inner and outer sectors. This region has an absence of gating grid wires where the subsector structures meet, allowing some bending of the equipotential lines, creating radial electric field components. In both cases, the region of distorted fields is quite localized near the endcap and only over a small radial extent, but this then distorts all drifting electrons (essentially from all drift distances equally) in that small radial region, affecting signal only for a few padrows on each side of the inner/outer boundary.

Padrow 13 Distortion

For the original TPC structure, there was simply nothing in the gap between the inner and outer sectors. More about the gap structure can be learned by looking at some of the GridLeak documentation (which is different in that it is dynamic with the ionization in the TPC). This static distortion was observed in the early operation of STAR when luminosities were low, well before the GridLeak distortion was ever observed. It was modeled as an offset potential on a small strip of the TPC drift field boundary, with the offset equal to a scale factor times the gating grid voltage, where the scale factor was calibrated from fits to the data. Below are the related distortion correction maps.

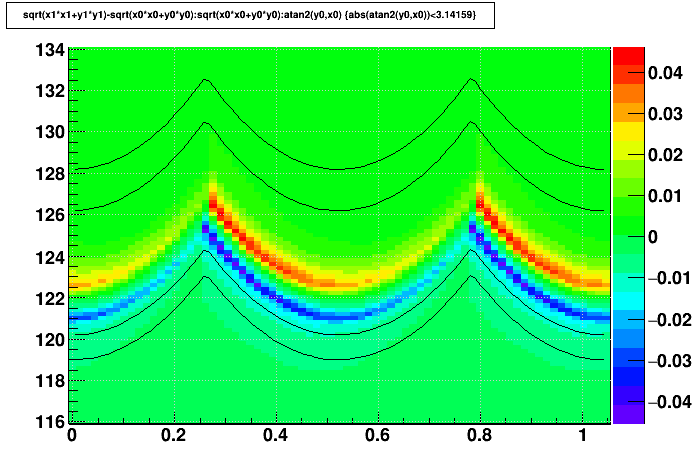

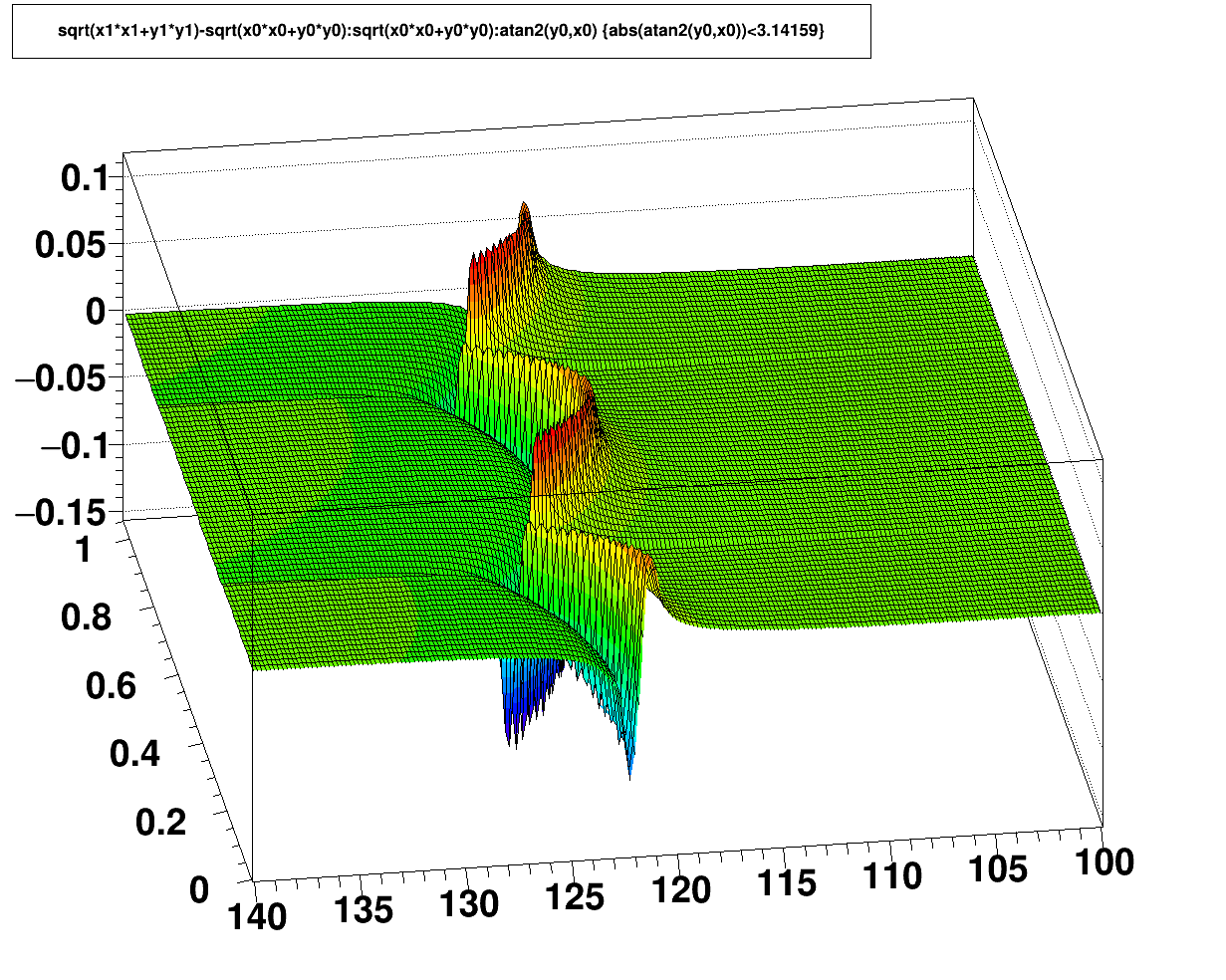

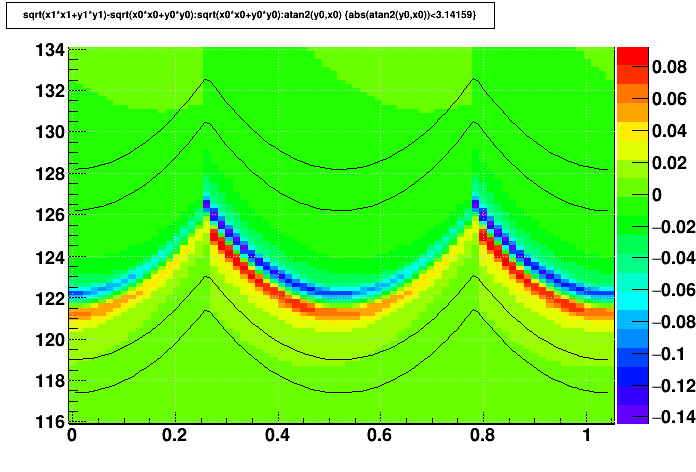

Padrow 40 Distortion

With the iTPC sectors, a wall was placed at the outer edge of the new inner sectors with conductive stripes on it to express potentials primarily to suppress the GridLeak distortion. It was understood that this would modify the static distortion, and in the maps below it is apparent that the static distortions became a few times larger with the wall, but in both cases only at the level of ~100 μm or less at the outermost padrow of the inner sectors. It is also worth noting that the sign of the distortion flipped with respect to Padrow 13.

This distortion correction is determined from the sum of 3 maps that represent the distortion contributions from (1) the wall's grounded structure, (2) the potential at the wall's tip [nominally -115 V], and (3) the potential on the wall's outer side [nominally -450 V]. Plots on this page were made using the nominal potentials.
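The sum of three maps can be pictured with a small sketch. It rests on an assumption not stated in the text: that the tip and outer-side maps are computed at the nominal potentials and scale linearly with the applied voltage (electrostatic potentials are linear in their boundary values), while the grounded-structure map is fixed. The function name `total_distortion` and the toy map values are hypothetical, and this is not the StMagUtilities implementation.

```python
V_TIP_NOMINAL = -115.0    # volts, nominal wall-tip potential quoted in the text
V_OUTER_NOMINAL = -450.0  # volts, nominal wall outer-side potential quoted in the text


def total_distortion(map_ground, map_tip, map_outer,
                     v_tip=V_TIP_NOMINAL, v_outer=V_OUTER_NOMINAL):
    """Sum three distortion maps, scaling two of them by voltage / nominal voltage."""
    scale_tip = v_tip / V_TIP_NOMINAL        # assumed linear scaling
    scale_outer = v_outer / V_OUTER_NOMINAL  # assumed linear scaling
    return [
        [g + scale_tip * t + scale_outer * o
         for g, t, o in zip(row_g, row_t, row_o)]
        for row_g, row_t, row_o in zip(map_ground, map_tip, map_outer)
    ]


# Toy 1x2 maps in micrometers (made-up numbers on an (r, phi) grid).
ground = [[10.0, 20.0]]
tip = [[1.0, 2.0]]
outer = [[4.0, 8.0]]
print(total_distortion(ground, tip, outer))               # nominal potentials
print(total_distortion(ground, tip, outer, v_tip=-57.5))  # half the nominal tip voltage
```

Keeping the three contributions separate is what would allow different wall voltages on different iTPC sectors to be handled without recomputing the maps, if the linear-scaling assumption holds.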

Distortion correction maps:

The maps are essentially uniform in z, so the maps shown below focus only on the radial and azimuthal dependencies. Further, there is no distortion more than a few centimeters away from the inner/outer boundaries, and there is essentially a 12-fold symmetry azimuthally (though this is not strictly true if different wall voltages are used on different iTPC sectors), so the maps zoom in on a limited region of r and φ to offer a better view of the fine details. Also, the inner and outer edges of the nearest padrows are shown as thin black lines on some plots to help understand their proximity to the distortions.

Open each map in a new web browser tab or window to see higher resolution. Units are [cm] and [radian].

| | Δ(r-φ) vs. r and φ | Δ(r) vs. r and φ |
|---|---|---|
| Padrow 13 | | |
| Padrow 13, with lines indicating locations of rows 13 and 14 | | |
| Padrow 40 | | |
| Padrow 40, with lines indicating locations of rows 40 and 41 | | |

-Gene

RunXI dE/dx calibration recipe

This is a recipe of RunXI dEdx calibration by Yi Guo.

TPC Hit Errors

TPC T0s

Global T0:

- Instructions (as of 2012, calibrated along with Twist)

- Run 10 calibration

- Run 12 calibration

Twist (ExB) Distortion

ExB (twist) calibration procedure

In 2012, the procedure documentation was updated, including global T0 calibration:

Below are the older instructions.

________________

The procedure here is basically to calculate two beamlines using only west TPC data and only east TPC data independently, and then adjust the XTWIST and YTWIST parameters so that the east and west beamlines meet at z=0. The calibration needs to be done every run for each B field configuration. The obtained parameters are stored in the tpcGlobalPosition table with four different flavors: FullMagFNegative, FullMagFPositive, HalfMagFPositive and HalfMagFNegative.
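The quantity being driven to zero can be illustrated with a toy calculation. The sketch below assumes one already has lists of reconstructed vertex positions (z, x) from east-only and west-only fits; it fits a straight line to each and compares the intercepts at z=0. The function names and the sample numbers are hypothetical, and this is not the refitter code referred to below.

```python
def fit_line(points):
    """Least-squares fit of x = intercept + slope * z to a list of (z, x) pairs."""
    n = len(points)
    mean_z = sum(z for z, _ in points) / n
    mean_x = sum(x for _, x in points) / n
    szz = sum((z - mean_z) ** 2 for z, _ in points)
    szx = sum((z - mean_z) * (x - mean_x) for z, x in points)
    slope = szx / szz
    return mean_x - slope * mean_z, slope


def beamline_offset_at_z0(east_points, west_points):
    """West-minus-east difference of the fitted beamline positions at z = 0."""
    east_x0, _ = fit_line(east_points)
    west_x0, _ = fit_line(west_points)
    return west_x0 - east_x0


# Made-up vertex positions (z, x) in cm: same slope, shifted intercepts.
zs = [-60.0, -30.0, 0.0, 30.0, 60.0]
east = [(z, 0.10 + 0.001 * z) for z in zs]
west = [(z, 0.14 + 0.001 * z) for z in zs]
offset_x = beamline_offset_at_z0(east, west)
print(f"west - east offset in x at z=0: {offset_x:.4f} cm")
```

The same comparison would be repeated for y; the twist parameters are then adjusted until both offsets are consistent with zero.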

To calculate the beamline intercept the refitting code (originally written by Jamie Dunlop) is used. An older evr-based version used by Javier Castillo for the 2005 heavy ion run can be found at ~startpc/tpcwrkExB_2005, and a version used for the 2006 pp run that uses the minuit vertex finder can be found at ~hjort/tpcwrkExB_2006. Note that for the evr-based version the value of the B field is hard coded at line 578 of pams/global/evr/evr_am.F. All macros referred to below can be found under both of the tpcwrkExB_200X directories referred to above, and some of them under ~hjort have been extensively rewritten.

Step-by-step outline of the procedure:

1. If using evr set the correct B field and compile.

2. Use the "make_runs.pl" script to prepare your dataset. It will create links to fast offline event.root files in your runsXXX subdirectory (create it first, along with outdirXXX). The script will look for files that were previously processed in the outdirXXX directory and skip over them.

3. Use the "submit.pl" script to submit your jobs. It has advanced options but the standard usage is "submit.pl rc runsXXX outdirXXX" where "rc" indicates to use the code for reconstructed real events. The jobs will create .refitter.root files in your outdirXXX subdirectory.

4. Next you create a file that lists all of the .refitter.root files. A command something like this should do it: "ls outdirFF6094 | grep refitter | awk '{print "outdirFF6094/" $1}' > outdirFF6094/root.files"

5. Next you run the make_res.C macro (in StRoot/macros). Note that the input and output files are hard coded in this macro. This will create a histos.root file.

6. Finally you run plot_vtx.C (in StRoot/macros) which will create plots showing your beamline intercepts. Note that under ~hjort/tpcwrkExB_2006 there is also a macro called plot_diff.C which can be used to measure the offset between the east/west beams more directly (useful for pp where data isn't as good).
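The file list from step 4 can also be built without a shell pipeline. The following is a minimal Python sketch, assuming only that the output files of interest contain "refitter" in their names, as the `grep` in step 4 does. The function name `write_root_file_list` is hypothetical and is not part of the STAR macros.

```python
import os


def write_root_file_list(outdir, list_name="root.files"):
    """Write outdir/list_name with one 'outdir/<file>' line per refitter file.

    Mirrors the shell pipeline of step 4:
      ls outdir | grep refitter | awk '{print "outdir/" $1}' > outdir/root.files
    """
    # Keep only directory entries whose name contains "refitter", sorted by name.
    names = sorted(n for n in os.listdir(outdir) if "refitter" in n)
    lines = [f"{outdir}/{n}" for n in names]
    with open(os.path.join(outdir, list_name), "w") as fh:
        for line in lines:
            fh.write(line + "\n")
    return lines
```

Calling `write_root_file_list("outdirFF6094")` would produce the same kind of `outdirFF6094/root.files` list as the command in step 4.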

Once you have made a good measurement of the offsets an iterative procedure is used to find the XTWIST and YTWIST that will make the offset zero:

7. In StRoot/StDbUtilities/StMagUtilities.cxx change the XTWIST and YTWIST parameters to what was used to process the files you analyzed in steps 1-6, and then compile.

8. Run the macro fitDCA2new.C (in StRoot/macros). Jim Thomas produces this macro and you might want to consult with him to see if he has a newer, better version. An up-to-date version as of early 2006 is under ~hjort/tpcwrkExB_2006. When you run this macro it will first ask for a B field and the correction mode, which is 0x20 for this correction. Then it will ask for pt, rapidity, charge and Z0 position. Only Z0 position is really important for our purposes here and typical values to use would be "1.0 0.1 1 0.001". The code will then report the VertexX and VertexY coordinates, which we will call VertexX0 and VertexY0 in the following steps.

9. If we now take VertexX0 and VertexY0 and our measured beamline offsets we can calculate the values for VertexX and VertexY that we want to obtain when we run fitDCA2new.C - call them VertexX_target and VertexY_target:

VertexX_target = (West_interceptX - East_interceptX)/2 + VertexX0

VertexY_target = (West_interceptY - East_interceptY)/2 + VertexY0

The game now is to modify XTWIST and YTWIST in StMagUtilities, recompile, rerun fitDCA2new.C and obtain values for VertexX and VertexY that match VertexX_target and VertexY_target (within 10 microns for heavy ion runs in the past).
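The target calculation of step 9 can be sketched in a few lines of Python. This is an illustration only: it assumes the Y target mirrors the X formula (west minus east intercept), that all coordinates are in cm, and that "within 10 microns" means 0.001 cm per coordinate. The function names and the numbers in the usage note are hypothetical.

```python
def vertex_targets(west, east, vertex0):
    """Return (VertexX_target, VertexY_target).

    west, east: (x, y) beamline intercepts from west-only and east-only TPC data.
    vertex0:    (VertexX0, VertexY0) reported by fitDCA2new.C for the
                XTWIST/YTWIST used to process the analyzed files.
    """
    x_target = (west[0] - east[0]) / 2.0 + vertex0[0]
    y_target = (west[1] - east[1]) / 2.0 + vertex0[1]
    return x_target, y_target


def within_tolerance(vertex, target, tol_cm=0.001):
    """True if both coordinates match the target within tol_cm (assumed 10 microns)."""
    return all(abs(v - t) <= tol_cm for v, t in zip(vertex, target))
```

For example, with made-up intercepts `west=(0.030, -0.010)`, `east=(0.010, 0.020)` and `vertex0=(0.100, 0.200)`, the targets are approximately (0.110, 0.185). After each change of XTWIST and YTWIST, the new fitDCA2new.C output can be checked with `within_tolerance`.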

10. Once you have found XTWIST and YTWIST parameters you are happy with they can be entered into the db table tpcGlobalPosition as PhiXZ and PhiYZ.

However - IMPORTANT NOTE: XTWIST = 1000 * PhiXZ , but YTWIST = -1000 * PhiYZ.

NOTE THE MINUS SIGN!! What is stored in the database is PhiXZ and PhiYZ. But XTWIST and YTWIST are what are printed in the log files.
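Because the sign convention is easy to get wrong, a small conversion helper may be useful. This is a sketch that encodes only the two relations stated above; the function names are hypothetical and not part of StMagUtilities or the database API.

```python
def twist_to_db(xtwist, ytwist):
    """Convert XTWIST/YTWIST (as printed in log files) to PhiXZ/PhiYZ (as stored in the db)."""
    phi_xz = xtwist / 1000.0
    phi_yz = -ytwist / 1000.0  # note the minus sign
    return phi_xz, phi_yz


def db_to_twist(phi_xz, phi_yz):
    """Convert PhiXZ/PhiYZ from tpcGlobalPosition back to XTWIST/YTWIST."""
    return 1000.0 * phi_xz, -1000.0 * phi_yz
```

A round trip through both functions should return the original XTWIST and YTWIST values, which is a quick sanity check before entering numbers into the database.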

Enter the values into the db using AddGlobalPosition.C and a file like tpcGlobalPosition*.C. To check the correction you either need to use files processed in fast offline with your new XTWIST and YTWIST values or request (re)processing of files.

Databases

STAR DATABASE INFORMATION PAGES

Frequently Asked Questions

Q: I am completely new to databases, what should I do first?

A: Please read this FAQ list and the database API documentation:

Database documentation

Then, please read You do not have access to view this node

Don't forget to log in: most of the information is STAR-specific and is protected. If our documentation pages are missing some information (that's possible), please ask questions on the db-devel mailing list.

Q: I think I've encountered a database-related bug. How can I report it?

A: Please report it using the STAR RT system (create a ticket), or send your observations to the db-devel mailing list. Don't hesitate to send ANY db-related questions to the db-devel mailing list!

Q: I am a subsystem manager, and I have questions about a possible database structure for my subsystem. Whom should I talk to about this?

A: Dmitry Arkhipkin is the current STAR database administrator. You can contact him via email or phone, or just stop by his office at BNL:

Phone: (631)-344-4922

Email: arkhipkin@bnl.gov

Office: 1-182

Q: Why do I need the API at all, if I can access the database directly?

A: There are a few points to consider:

a) we need consistent conversion of data sets from the storage format to C++ and Fortran;

b) our data formats change with time: we add new structures and modify old ones;

c) direct queries are less efficient than API calls: no caching, no load balancing;

d) direct queries mean more copy-paste code, which generally means more human errors.

We need the API to enable schema evolution, data conversion, caching, and load balancing.

Q: Why do we need all those databases?

A: STAR has lots of data, and its volume is growing rapidly. To operate efficiently, we must use a proven solution suitable for large data warehousing projects. That is why we have this setup: there is simply no subpart we can safely ignore (without an overall performance penalty).

Q: It is so complex and hard to use, I'd stay with plain text files...

A: We have a clean, well-defined API for both the Offline and FileCatalog databases, so you don't have to worry about internal db activity. Most db usage examples are only a few lines long, so it really is easy to use. The documentation directory (Drupal) is being improved constantly.

Q: I need to insert some data into the database. How can I get write access enabled?

A: Please send an email with your rcas login and desired database domain (e.g. "Calibrations/emc/[tablename]") to arkhipkin@bnl.gov (or the current database administrator). Write access is not for everyone, though: make sure that you are either the subsystem coordinator or have proper permission for such a data upload.

Q: How can I read some data from database? I need simple code example!

A: Please read this page : You do not have access to view this node

Q: How can I write something to database? I need simple code example!

A: Please read this page : You do not have access to view this node

Q: I'm trying to set a '001122' timestamp, but I cannot get records from the db. What's wrong?

A: In C++, integer literals starting with '0' are octal, so 001122 is really interpreted as 594! If you need to use a '001122' timestamp (or any timestamp with leading zeros), write it simply as '1122', omitting all leading zeros.
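The arithmetic behind this answer can be checked with a short Python sketch that emulates the C++ rule (Python itself rejects such literals, so the digits are passed as a string). The function names are hypothetical.

```python
def as_cpp_octal_literal(digits):
    """Value a C++ compiler assigns to an integer literal written with a leading 0.

    Raises ValueError for digits 8 or 9, which C++ would reject at compile time.
    """
    return int(digits, 8)


def as_intended_decimal(digits):
    """Value obtained once all leading zeros are dropped."""
    return int(digits.lstrip("0") or "0")
```

Here `as_cpp_octal_literal("001122")` returns 594 (1·512 + 1·64 + 2·8 + 2), while `as_intended_decimal("001122")` returns 1122.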

Q: What time zone is used for database timestamps? I see EDT and GMT being used in RunLog...

A: All STAR databases are using GMT timestamps, or UNIX time (seconds since epoch, no timezone). If you need to specify a date/time for db request, please use GMT timestamp.
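As an illustration of converting between GMT date/times and UNIX time, here is a minimal Python sketch using only the standard library. The function names are hypothetical and this is not part of the STAR database API.

```python
import calendar
from datetime import datetime, timezone


def gmt_to_unix(year, month, day, hour=0, minute=0, second=0):
    """UNIX time (seconds since epoch) for a date/time given in GMT."""
    return calendar.timegm((year, month, day, hour, minute, second))


def unix_to_gmt_string(unix_time):
    """Format a UNIX time as 'YYYY-MM-DD HH:MM:SS' in GMT."""
    dt = datetime.fromtimestamp(unix_time, tz=timezone.utc)
    return dt.strftime("%Y-%m-%d %H:%M:%S")
```

For example, `gmt_to_unix(2003, 11, 14)` gives 1068768000, the value that appears in the example query in the Time Stamps section of this page. Using local-time conversions instead (e.g. EDT) would shift the result by several hours.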

Q: It is said that we need to document our subsystem's tables. I don't have the privilege to create new pages (or our group has another person responsible for Drupal pages). What should I do?

A: Please create a blog page with the documentation; every STAR user has this ability by default. The blog page can be added to the subsystem documentation pages later (the webmaster can do that).

Q: Which files are used by the Load Balancer to locate databases, and what is the order of precedence for those files (if several are available)?

A: The files searched by the LB are, in order:

1. $DB_SERVER_LOCAL_CONFIG env. var, should point to a new LB version schema xml file (set by default);

2. $DB_SERVER_GLOBAL_CONFIG env. var, should point to a new LB version schema xml file (not set by default);

3. $STAR/StDb/servers/dbLoadBalancerGlobalConfig.xml: fallback for LB, new schema expected;

If no usable LB configuration has been found at this point, the following files are used:

1. $STDB_SERVERS/dbServers.xml - old schema expected;

2. $HOME/dbServers.xml - old schema expected;

3. $STAR/StDb/servers/dbServers.xml - old schema expected;
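The search order above can be sketched as follows. This is an illustration in Python, not the Load Balancer's actual code: the function names are hypothetical, and "usable" is assumed here to mean simply that the file exists, whereas the real LB may apply further checks.

```python
import os


def lb_config_candidates(env):
    """Return (path, schema) pairs in the search order listed above."""
    candidates = []

    def add(path, schema):
        if path:  # skip environment variables that are not set
            candidates.append((path, schema))

    add(env.get("DB_SERVER_LOCAL_CONFIG"), "new")
    add(env.get("DB_SERVER_GLOBAL_CONFIG"), "new")
    if env.get("STAR"):
        add(env["STAR"] + "/StDb/servers/dbLoadBalancerGlobalConfig.xml", "new")
    if env.get("STDB_SERVERS"):
        add(env["STDB_SERVERS"] + "/dbServers.xml", "old")
    if env.get("HOME"):
        add(env["HOME"] + "/dbServers.xml", "old")
    if env.get("STAR"):
        add(env["STAR"] + "/StDb/servers/dbServers.xml", "old")
    return candidates


def first_usable_config(env, is_usable=os.path.isfile):
    """Return the first (path, schema) whose file is usable, or None."""
    for path, schema in lb_config_candidates(env):
        if is_usable(path):
            return path, schema
    return None
```

Calling `first_usable_config(dict(os.environ))` in a STAR environment shows which file would be picked first under these assumptions, which can help when debugging an unexpected database server choice.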

How-To: user section

Database tips and tricks that could be useful for STAR activities are stored in this section.

Time Stamps

There are three timestamps used in STAR databases: beginTime, entryTime, and deactive.

EntryTime and deactive are essential for 'reproducibility' and 'stability' in production. The beginTime is the STAR user timestamp. One manifestation of this is the time recorded by daq at the beginning of a run. It is valid until the beginning of the next run, so the end of validity is the next beginTime. In this example, the time range will contain many event times, which are also defined by the daq system. The beginTime can also be used in calibration/geometry to define a range of valid values.

EXAMPLE (et = entryTime): The beginTime represents a 'running' timeline that marks changes in db records with respect to daq's event timestamp. Say that at some time, et1, I put an initial record into the db with daqtime=bt1. This data will now be used for all daqTimes later than bt1. Now I add a second record at et2 (the time I write to the db) with beginTime=bt2 > bt1. At this point the 1st record is valid from bt1 to bt2 and the second is valid from bt2 to infinity. Now I add a 3rd record at et3 with bt3 < bt1, so that the 3rd record is valid from bt3 to bt1.

Let's say that after we put in the 1st record but before we put in the second one, Lydia runs a tagged production that we'll want to 'use' forever. Later I want to reproduce some of this production (e.g. embedding...) but the database has changed (we've added the 2nd and 3rd entries). I need to view the db as it existed prior to et2. To do this, whenever we run production, we define a productionTimestamp at that production time, pt1 (which in this example is < et2). pt1 is passed to the StDbLib code and the code requests only data that was entered before pt1. This is how production is 'reproducible'.

The mechanism also provides 'stability'. Suppose at time et2 the production was still running. Use of pt1 is a barrier that keeps the production from 'seeing' the later db entries.

Now let's assume that the 1st production is over, we have all 3 entries, and we want to run a new production. However, we decide that the 1st entry is no good and the 3rd entry should be used instead. We could delete the 1st entry so that the 3rd entry is valid from bt3 to bt2, but then we could not reproduce the original production. So what we do is 'deactivate' the 1st entry with a timestamp, d1, and run the new production at pt2 > d1. The SQL is written so that the 1st entry is ignored as long as pt2 > d1. But I can still run a production with pt1 < d1, which means the 1st entry was valid at time pt1, so it IS used. To have an entry deactivated, email your request to the database expert.

In essence, the API requests data as follows: 'entryTime < productionTime < deactive || (entryTime < productionTime && deactive == 0)'. To put this to use with the BFC, a user must use the dbv switch. For example, a chain that includes dbv20020802 will return values from the database as if today were August 2, 2002. In other words, the switch provides a user with a snapshot of the database from the requested time (which of course includes valid values older than that time). This ensures the reproducibility of production.

Below is an example of the actual queries executed by the API:

select unix_timestamp(beginTime) as bTime,eemcDbADCconf.* from eemcDbADCconf Where nodeID=16 AND flavor In('ofl') AND (deactive=0 OR deactive>=1068768000) AND unix_timestamp(entryTime)<=1068768000 AND beginTime<=from_unixtime(1054276488) AND elementID In(1) Order by beginTime desc limit 1
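The selection logic of such a query can be mimicked in a few lines of Python, which may help in reasoning about which record a given production will see. This is a sketch only: the `Record` type, the function name and the numeric times are hypothetical, and the comparisons follow the inequalities of the query above (entryTime <= productionTime, deactive = 0 or deactive >= productionTime, beginTime <= event time, latest beginTime wins).

```python
from collections import namedtuple

# deactive == 0 means the record has never been deactivated.
Record = namedtuple("Record", "name begin_time entry_time deactive")


def select_record(records, event_time, production_time):
    """Return the record a production at production_time uses for event_time."""
    visible = [
        r for r in records
        if r.entry_time <= production_time
        and (r.deactive == 0 or r.deactive >= production_time)
        and r.begin_time <= event_time
    ]
    # "Order by beginTime desc limit 1": the latest beginTime not after the event.
    return max(visible, key=lambda r: r.begin_time) if visible else None


# The three-record example: bt3 < bt1 < bt2, et1 < et2 < et3,
# with the 1st record deactivated at d1 = 4000.
records = [
    Record("first", begin_time=100, entry_time=1000, deactive=4000),
    Record("second", begin_time=200, entry_time=2000, deactive=0),
    Record("third", begin_time=50, entry_time=3000, deactive=0),
]
```

With these values, a production at pt1 = 1500 sees only the first record for an event at time 150, while a production at pt2 = 5000 (after d1) gets the third record for the same event and the second record for an event at time 250.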

For a description of format see ....

Quality Assurance

Welcome to the Quality assurance and quality control pages.

Proposal and statements

Proposal for Run IV

Procedure proposal for production and QA in Year4 run

Jérôme LAURET & Lanny RAY, 2004

Summary: The qualitative increase in data volume for run 4 together with finite cpu capacity at RCF precludes the possibility for multiple reconstruction passes through the full raw data volume next year. This new computing situation together with recent experiences involving production runs which were not pre-certified prior to full scale production motivates a significant change in the data quality assurance (QA) effort in STAR. This note describes the motivation and proposed implementation plan.

Introduction

The projection for the next RHIC run (also called the Year4 run, which will start by the end of 2003) indicates a factor of five increase in the number of collected events compared to preceding runs. This will increase the required data production turn-around time by an order of magnitude, from months to one year per full-scale production run. The qualitative increase in the reconstruction demands, combined with an increasingly aggressive physics analysis program, will strain the available data processing resources and poses a severe challenge to STAR and the RHIC computing community for delivering STAR’s scientific results on a reasonable time scale. This situation will become more and more problematic as our Physics program evolves to include rare probes. This situation is not unexpected and was anticipated since before the inception of RCF. The STAR decadal plan (10 year projection of STAR activities and development) clearly describes the need for several upgrade phases, including a factor of 10 increase in data acquisition rate and analysis throughput by 2007.

Typically, 1.2 represents an ideal, minimal number of passes through the raw data in order to produce calibrated data summary tapes for physics analysis. However, it is noteworthy that in STAR we have typically processed the raw data an average of 3.5 times where, at each step, major improvements in the calibrations were made which enabled more accurate reconstruction, resulting in greater precision in the physics measurements. The Year 4 data sample in STAR will include the new ¾ barrel EMC data which makes it unlikely that sufficiently accurate calibrations and reconstruction can be achieved with only the ideal 1.2 number of passes as we foresee the need for additional calibration passes through the entire data in order to accumulate enough statistics to push the energy calibration to the high Pt limit.

While drastically diverging from the initial computing requirement plans (1), this mode of operation, in conjunction with the expanded production time table, calls for a strengthening of procedures for calibration, production and quality assurance.

The following table summarizes the expectations for ~ 70 Million events with a mix of central and minbias triggers. Numbers of files and data storage requirements are also included for guidance.

| Au+Au 200 (minbias) | 35 M central | 35 M minbias | Total |
|---|---|---|---|
| No DAQ100 (1 pass) | 329 days | 152 days | 481 days |
| No DAQ100 (2 passes) | 658 days | 304 days | 962 days |
| Assuming DAQ100 (1 pass) | 246 days | 115 days | 361 days |
| Assuming DAQ100 (2 passes) | 493 days | 230 days | 723 days |
| Total storage estimated (raw) | x | x | 203 TB |
| Total storage estimated | x | x | 203 TB |

Quality Assurance: Goals and proposed procedure for QA and productions

The goal of the QA activities in STAR is the validation of data and software, up to DST production. While QA testing can never be exhaustive, the intention is that data that pass the QA testing stage should be considered highly reliable for downstream physics analysis. In addition, QA testing should be performed soon after production of the data, so that errors and problems can be caught and fixed in a timely manner.

QA processes are run independently of the data taking and DST production. These processes contain the accumulated knowledge of the collaboration with respect to potential modes of failure of data taking and DST production, along with those physics distributions that are most sensitive to the health of the data and DST production software. The results probe the data in various ways:

- At the most basic level, the questions asked are whether the data can be read and whether all the components expected in a given dataset are present. Failures at this level are often related to problems with computing hardware and software infrastructure.

- At a more sophisticated level, distributions of physics-related quantities are examined, both as histograms and as scalar quantities extracted from the histograms and other distributions. These distributions are compared to those of previous runs that are known to be valid, and the stability of the results is monitored. If changes are observed, these must be understood in terms of changing running conditions or controlled changes in the software, otherwise an error flag should be raised (deviations are not always bad, of course, and can signal new physics: QA must be used with care in areas where there is a danger of biasing the physics results of STAR).

The focus of the QA activities until summer 2000 has been on Offline DST production for the DEV branch of the library. With the inception of data taking, the scope of QA has broadened considerably. There are in fact two different servers running autoQA processes:

- Offline QA. This autoQA-generated web page accesses QA results for all the varieties of Offline DST production:
  - Real data production produced by the Fast Offline framework. This is used to catch gross errors in data taking, online trigger and calibration, allowing for correcting the situation before too much data is accumulated (this framework also provides on the fly calibration as the data is produced).
  - Nightly tests of real and Monte Carlo data (almost always using the DEV and NEW branches of the library). This is used principally for the validation of migration of library versions.
  - Large scale production of real and Monte Carlo data (almost always using the PRO branch of the library). This is used to monitor the stability of DSTs for physics.

- Online QA. This autoQA-generated web page accesses QA results for data in the Online event pool, both raw data and DST production that is run on the Online processors.

The QA dilemma

While a QA shift is usually organized during data taking, the later, official production runs were encouraged (but not mandated) to be regularly QA-ed. Typically, there has not been an organized QA effort for post-experiment DST production runs. The absence of organized quality assurance efforts following the experiment permitted several post-production problems to arise. These were eventually discovered at the (later) physics analysis stage, but the entire production run was wasted. Examples include the following:

- missing physics quantities in the DSTs (e.g. V0, Kinks, etc ...)
- missing detector information or collections of information due to pilot errors or code support
- improperly calibrated and unusable data
- ...

The net effect of such late discoveries is a drastic increase in the production cycle time, where entire production passes have to be repeated, which could have been prevented by a careful QA procedure.

Production cycles and QA procedure

To address this problem we propose the following production and QA procedure for each major production cycle.

- A data sample (e.g. from a selected trigger setup or detector configuration) of not more than 100k events (Au+Au) or 500k events (p+p) will be produced prior to the start of the production of the entire data sample.

- This data sample will remain available on disk for a period of two weeks or until all members of “a” QA team (as defined here) have approved the sample (whichever comes first).

- After the two week review period, the remainder of the sample is produced with no further delays, with or without the explicit approval of everyone in the QA team.

- Production schedules will be vigorously maintained. Missing quantities which are detected after the start of the production run do not necessarily warrant a repetition of the entire run.

- The above policy does not apply to special or unique data samples involving calibration or reconstruction studies nor would it apply to samples having no overlaps with other selections. Such unique data samples include, for example, those containing a special trigger, magnetic field setting, beam-line constraint (fill transition), etc., which no other samples have and which, by their nature, require multiple reconstruction passes and/or special attention.

In order to carry out timely and accurate Quality Assurance evaluations during the proposed two week period, we propose the formation of a permanent QA team consisting of:

- One or two members per Physics Working group. This manpower will be under the responsibility of the PWG conveners. The aim of these individuals will be to rigorously check, via the autoQA system or analysis codes specific to the PWG, for the presence of the required physics quantities of interest to that PWG which are understood to be vital for the PWG’s Physics program and studies.

- One or more detector sub-system experts from each of the major detector sub-systems in STAR. The goal of these individuals will be to ensure the presence and sanity of the data specific to that detector sub-system.

- Within the understanding that the outcome of such procedure and QA team is a direct positive impact on the Physics capabilities of a PWG, we recommend that this QA service work be done without shift signups or shift credit as is presently being done for DAQ100 and ITTF testing.

Summary

Facing important challenges driven by the data volume and Physics needs, we propose an organized procedure for QA and production relying on cohesive feedback from the PWGs and detector sub-system experts within time-constraint guidelines. The intent is clearly to bring data readiness to the shortest possible turn-around time while avoiding the need for later re-production, which wastes CPU cycles and human hours.

Summary list of STAR QA Provisions

Summary of the provisions of Quality Assurance and Quality Control for the STAR Experiment

- Online QA (near real-time data from the event pool)
  - Plots of hardware/electronics performance
  - Histogram generation framework and browsing tools are provided
  - Shift crew assigned to analyze
  - Plots are archived and available via web
  - Data can be re-checked
  - Yearly re-assessment of plot contents during run preparation meetings and via pre-run email request by the QA coordinator
  - Visualization of data
  - Event Display (GUI running at the control room)
  - DB data validity checks
- FastOffline QA (full reconstruction within hours of acquisition)
  - Histogram framework, browsing, reporting, and archiving tools are provided
  - QA shift crew assigned to analyze and report
  - Similar yearly re-assessment of plot contents as Online QA plots
  - Data and histograms on disk for ~2 weeks and then archived to HPSS
  - Available to anyone
  - Variety of macros provided for customized studies (some available from histogram browser, e.g. integrate over runs)
  - Archived reports always available
  - Report browser provided
- Reconstruction Code QA
  - Standardized test suite of numerous reconstruction chains in DEV library performed nightly
  - Analyzed by S&C team
  - Browser provided
  - Results kept at migration to NEW library
  - Standardized histogram suite recorded at library tagging (2008+)
  - Analyzed by S&C team
  - Test suite grows with newly identified problems
  - Discussions of analysis and new issues at S&C meetings
  - Test productions before full productions (overlaps with Production QA below)
  - Provided for calibration and PWG experts to analyze (intended to be a requirement of the PWGs, see Production cycles and QA procedure under Proposal for Run IV)
  - Available to anyone for a scheduled 2 weeks prior to commencing production
  - Discussions of analysis and new issues at S&C meetings
- Production QA
  - All aspects of FastOffline QA also provided for Production QA (same tools)
  - Data and histograms are archived together (i.e. iff data, then histograms)
  - Same yearly re-assessment of plot contents as FastOffline QA plots (same plots)
  - Formerly analyzed during runs by persons on QA shift crew (2000-2005)
  - No current assignment of shift crew to analyze (2006+)
  - Visualization of data
  - Event Display: GUI, CLI, and visualization engine provided
  - See "Test productions before full production" under Reconstruction Code QA above (overlaps with Production QA)
  - Resulting data from productions are on disk and archived
  - Available to anyone (i.e. PWGs should take interest in monitoring the results)
- Embedding QA
  - Standardized test suite of plots of baseline gross features of data
  - Analyzed by Embedding team
  - Provision for PWG specific (custom) QA analysis (2008+)

Offline QA

Offline QA Shift Resources

STAR Offline QA Documentation (start here!)

Quick Links: Shift Requirements, Automated Browser Instructions, You do not have access to view this node, Online RunLog Browser

Automated Offline QA Browser

Quick Links: You do not have access to view this node

QA Shift Report Forms

Quick Links: Issue Browser/Editor, Dashboard, Report Archive

QA Technical, Reference, and Historical Information

Reconstruction Code QA

As a minimal check on effects caused by any changes to reconstruction code, the following code and procedures are to be exercised:

A suite of datasets has been selected which should serve as a reference basis for any changes. These datasets include:

Real data from Run 7 AuAu at 200 GeV

Simulated data using year 2007 geometry with AuAu at 200 GeV

Real data from Run 8 pp at 200 GeV

Simulated data using year 2008 geometry with pp at 200 GeV

These datasets should be processed with BFC as follows to generate histograms in a hist.root file:

root4star -b -q -l 'bfc.C(100,"P2007b,ittf,pmdRaw,OSpaceZ2,OGridLeak3D","/star/rcf/test/daq/2007/113/8113044/st_physics_8113044_raw_1040042.daq")'

root4star -b -q -l 'bfc.C(100, "trs,srs,ssd,fss,y2007,Idst,IAna,l0,tpcI,fcf,ftpc,Tree,logger,ITTF,Sti,SvtIt,SsdIt,genvtx,MakeEvent,IdTruth,geant,tags,bbcSim,tofsim,emcY2,EEfs,evout,GeantOut,big,fzin,MiniMcMk,-dstout,clearmem","/star/rcf/simu/rcf1296_02_100evts.fzd")'

root4star -b -q -l 'bfc.C(1000,"pp2008a,ittf","/star/rcf/test/daq/2008/043/st_physics_9043046_raw_2030002.daq")'

?

The RecoQA.C macro generates CINT files from the hist.root files

root4star -b -q -l 'RecoQA.C("st_physics_8113044_raw_1040042.hist.root")'

root4star -b -q -l 'RecoQA.C("rcf1296_02_100evts.hist.root")'

root4star -b -q -l 'RecoQA.C("st_physics_9043046_raw_2030002.hist.root")'

?

The CINT files are then useful for comparison to the previous reference, or storage as the new reference for a given code library. To view these plots, simply execute the CINT file with root:

root -l st_physics_8113044_raw_1040042.hist_1.CC

root -l st_physics_8113044_raw_1040042.hist_2.CC

root -l rcf1296_02_100evts.hist_1.CC

root -l rcf1296_02_100evts.hist_2.CC

root -l st_physics_9043046_raw_2030002.hist_1.CC

root -l st_physics_9043046_raw_2030002.hist_2.CC

?

One can similarly execute the reference CINT files for visual comparison:

root -l $STAR/StRoot/qainfo/st_physics_8113044_raw_1040042.hist_1.CC

root -l $STAR/StRoot/qainfo/st_physics_8113044_raw_1040042.hist_2.CC

root -l $STAR/StRoot/qainfo/rcf1296_02_100evts.hist_1.CC

root -l $STAR/StRoot/qainfo/rcf1296_02_100evts.hist_2.CC

root -l $STAR/StRoot/qainfo/st_physics_9043046_raw_2030002.hist_1.CC

root -l $STAR/StRoot/qainfo/st_physics_9043046_raw_2030002.hist_2.CC

?
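The file names in the commands above follow a simple pattern derived from the input file name. The following Python sketch generates the RecoQA.C and viewing commands for one input file. It is an illustration only: the function names are hypothetical, and the number of CINT files per dataset (two, `hist_1.CC` and `hist_2.CC`) is an assumption taken from the examples above rather than a documented property of RecoQA.C.

```python
import os


def reco_qa_names(input_path, n_cint_files=2):
    """Return (hist.root name, list of CINT file names) for a BFC input file."""
    stem = os.path.splitext(os.path.basename(input_path))[0]
    hist = stem + ".hist.root"
    cint = [f"{stem}.hist_{i}.CC" for i in range(1, n_cint_files + 1)]
    return hist, cint


def reco_qa_commands(input_path, reference_dir="$STAR/StRoot/qainfo"):
    """Return the command lines used above for one dataset, in order."""
    hist, cint = reco_qa_names(input_path)
    cmds = [f"root4star -b -q -l 'RecoQA.C(\"{hist}\")'"]
    cmds += [f"root -l {name}" for name in cint]                  # new plots
    cmds += [f"root -l {reference_dir}/{name}" for name in cint]  # reference plots
    return cmds
```

For instance, `reco_qa_commands("/star/rcf/simu/rcf1296_02_100evts.fzd")` yields the RecoQA.C command followed by the four `root -l` commands listed above for that dataset.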

Steps 1-3 above should be followed immediately upon establishing a new code library. At that point, the CINT files should be placed in the appropriate CVS directory, checked in, and then checked out (migrated) into the newly established library:

cvs co StRoot/qainfo

mv *.CC StRoot/qainfo

cvs ci -m "Update for library SLXXX" StRoot/qainfo

cvs tag SLXXX StRoot/qainfo/*.CC

cd $STAR

cvs update StRoot/qainfo

Missing information will be filled in soon. We may also consolidate some of these steps into a single script yet to come.

Run QA

Helpful links:

- STAR Operations Page: https://drupal.star.bnl.gov/STAR/public/operations

- Online QA

- known issues: STARONL Jira Board (Summary) [discontinued]

- the plots listed in the previously mentioned attachments are a subset of this and are based on the JEVP plots that are typically monitored by the shift crew and can be found listed for each run in the Run Log

- https://online.star.bnl.gov/RunLog/

- Offline QA

- based on FastOffline production and monitored by QA-shift person

- https://drupal.star.bnl.gov/STAR/comp/qa/offline-qa

- PWG/Run QA

- These pages!

Run 19 (BES II) QA

Run 19 (BES 2) Quality Assurance

Run Periods

- Au+Au at 19.6GeV

- Au+Au at 14.5GeV

- Fixed Target Au+Au (√sNN = 3.9, 4.5, and 7.7GeV)

Detector Resources

| BBC | BTOF | BEMC | EPD |

| eTOF | GMT | iTPC/TPC | HLT |

| MTD | VPD | ZDC |

Other Resources

QA Experts:

- BBC - Akio Ogawa

- BTOF - Zaochen Ye

- BEMC - Raghav Kunnawalkam Elayavalli

- EPD - Rosi Reed

- eTOF - Florian Seck

- GMT - Dick Majka

- iTPC - Irakli Chakaberia

- HLT - Hongwei Ke

- MTD - Rongrong Ma

- VPD - Daniel Brandenburg

- ZDC - Miroslav Simko and Lukas Kramarik

- Offline-QA - Lanny Ray + this week's Offline-QA shift taker

- LFSUPC conveners: David Tlusty, Chi Yang, and Wangmei Zha

- delegate: Ben Kimelman

- BulkCorr conveners: SinIchi Esumi, Jiangyong Jia, and Xiaofeng Luo

- delegate: Takafumi Niida (BulkCorr)

- PWGC - Zhenyu Ye

- TriggerBoard (and BES focus group) - Daniel Cebra

- S&C - Gene van Buren

Meeting Schedule

- Weekly on Thursdays at 2pm EST

- Blue Jeans information:

To join the Meeting: https://bluejeans.com/967856029

To join via Room System: Video Conferencing System: bjn.vc -or- 199.48.152.152, Meeting ID: 967856029

To join via phone:

1) Dial: +1.408.740.7256 (United States), +1.888.240.2560 (US Toll Free), +1.408.317.9253 (Alternate number) (see all numbers - http://bluejeans.com/numbers)

2) Enter Conference ID: 967856029

AuAu 19.6GeV (2019)

Run 19 (BES-2) Au+Au @ √sNN=19.6 GeV

PWG QA resources:

- BulkCorr PWG QA (Takafumi Niida)

- LFSUPC PWG QA (Ben Kimelman)

- presentations: Feb.28, March 7, March 14, March 21

- Run-by-run QA Plots (use the standard STAR login information)

- Combined QA:

- Good/Bad run list, incl. injection runs

- 19.6GeV run history

Direct links to the relevant Run-19 QA meetings:

- You do not have access to view this node

- You do not have access to view this node

- You do not have access to view this node

- You do not have access to view this node

- You do not have access to view this node

- You do not have access to view this node

LFSUPC Run-by-run QA

AuAu 11.5GeV (2020)

Run 20 (BES-2) Au+Au @ √sNN=11.5 GeV

PWG QA resources:

- BulkCorr PWG QA (Takafumi Niida)

- LFSUPC PWG QA (Ben Kimelman)

- presentations: Dec. 12

- Run-by-run QA Plots

Event Level QA

Track QA (no track cuts)

Track QA (with track cuts)

nHits QA (no track cuts)

AuAu Fixed Target (2019)

Run 20 (BES II) QA

Run 20 (BES 2) Quality Assurance

Run Periods

Detector Resources

| BBC | BTOF | BEMC | EPD |

| eTOF | GMT | iTPC/TPC | HLT |

| MTD | VPD | ZDC |

Other Resources

- Run-20 iTPC QA Blog (by Flemming Videbaek)

- Beam Use Request for Runs 19 and 20

- BBC - Akio Ogawa

- BTOF - Zaochen Ye

- BEMC - Raghav Kunnawalkam Elayavalli

- EPD - Rosi Reed

- eTOF - Florian Seck

- GMT - Dick Majka

- TPC - Irakli Chakaberia, Flemming Videbaek

- HLT - Hongwei Ke

- MTD - Rongrong Ma

- VPD - Daniel Brandenburg

- ZDC - Miroslav Simko and Lukas Kramarik

- Offline-QA - Lanny Ray

- TriggerBoard - Daniel Cebra

- S&C - Gene van Buren

- LFSUPC conveners: Wangmei Zha, Daniel Cebra

- delegate: Ben Kimelman

- BulkCorr conveners: ShinIchi Esumi, Jiangyong Jia, and Xiaofeng Luo

- delegate: Takafumi Niida (BulkCorr)

- PWGC - Zhenyu Ye

- PWG Delegates

- LFSUPC: Ben Kimelman, Chenliang Jin

- BulkCorr: Kosuke Okubo, Ashish Pandav

- HeavyFlavor - Kaifeng Shen, Yingjie Zhou

- JetCorr - Tong Liu, Isaac Mooney

- Spin/ColdQCD: Yike Xu

- PWGC - Rongrong Ma

Meeting Schedule

- Weekly on Fridays at noon EST/EDT

- Blue Jeans information:

Meeting URL: https://bluejeans.com/563179247?src=join_info

Meeting ID: 563 179 247

To dial in from a phone, dial one of the following numbers, then enter the meeting ID and passcode followed by #: +1.408.740.7256 (US (San Jose)); +1.888.240.2560 (US Toll Free); +1.408.317.9253 (US (Primary, San Jose)); +41.43.508.6463 (Switzerland (Zurich, German)); +31.20.808.2256 (Netherlands (Amsterdam)); +39.02.8295.0790 (Italy (Italian)); +33.1.8626.0562 (Paris, France); +49.32.221.091256 (Germany (National, German)) (see all numbers - https://www.bluejeans.com/premium-numbers)

To connect from a room system, dial bjn.vc or 199.48.152.152 and enter your meeting ID and passcode

AuAu 11.5GeV (2020)

Run-20 (BES-2) RunQA :: Au+Au at 11.5GeV

- HLT QA:

- Detector QA:

- PWG QA:

- Weekly Meetings

AuAu 9.2GeV (2020)

Run-20 (BES-2) RunQA :: Au+Au at 9.2GeV

- Trigger Board Summary Report

- Combined FastOffline QA reports

- HLT QA:

- Detector QA:

- PWG QA:

- Weekly Meetings

Fixed Target Au+Au (2020)

Run-20 (BES-2) RunQA :: Fixed Target Au+Au

- FXT 5.7GeV - √sNN=3.5GeV

- days 44-45

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT 7.3GeV - √sNN=3.9GeV

- days 35 - 36

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT 9.8GeV - √sNN=4.5GeV

- days 29 - 32

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT 13.5GeV - √sNN=5.2GeV

- days 33 - 34

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT 19.5GeV - √sNN=6.2GeV

- days 32 - 33

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT 26.5 GeV - √sNN=7.2GeV

- between days 55 and 258

- FXT 31.2GeV - √sNN=7.7GeV

- days 28 - 29

- HLT

- PWG::LFSUPC
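The list above pairs each fixed-target (FXT) beam energy with a collision energy √sNN. As a rough cross-check, the relation between the two can be sketched as below. This is only a minimal sketch under stated assumptions: the quoted beam energy is taken to be the total energy per nucleon, the target nucleon is at rest, and the nucleon mass is approximated as 0.9315 GeV/c² (the function name and this mass value are illustrative choices, not taken from this page). With those assumptions, s = 2 m² + 2 E m per nucleon pair.

```python
import math

# Assumed average nucleon mass in GeV/c^2 (illustrative value, not from this page).
NUCLEON_MASS_GEV = 0.9315


def sqrt_snn_fixed_target(beam_energy_gev, nucleon_mass_gev=NUCLEON_MASS_GEV):
    """Return sqrt(s_NN) in GeV for a beam nucleon hitting a nucleon at rest.

    beam_energy_gev is assumed to be the total energy per beam nucleon.
    """
    s = 2.0 * nucleon_mass_gev ** 2 + 2.0 * beam_energy_gev * nucleon_mass_gev
    return math.sqrt(s)


if __name__ == "__main__":
    # Beam energies (GeV) as listed above for the Run-20 FXT periods.
    for beam_energy in (5.7, 7.3, 9.8, 13.5, 19.5, 26.5, 31.2):
        value = sqrt_snn_fixed_target(beam_energy)
        print(f"FXT {beam_energy} GeV -> sqrt(s_NN) ~ {value:.2f} GeV")
```

Under these assumptions the computed values agree with the √sNN labels in the list to within about 0.1 GeV; the exact second decimal depends on the nucleon mass chosen.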

Run 21 (BES II) QA

Run 21 (BES 2) Quality Assurance

Run Period(s)

Detector Resources

| BBC | BTOF | BEMC | EPD |

| eTOF | GMT | iTPC/TPC | HLT |

| MTD | VPD | ZDC |

Other Resources

- Run-21 iTPC QA Blog (by Flemming Videbaek)

- Beam Use Request for Runs 21-25

- BBC - Akio Ogawa

- BTOF - Zaochen Ye

- BEMC - Raghav Kunnawalkam Elayavalli

- EPD - Joey Adams

- eTOF - Philipp Weidenkaff

- GMT -

- TPC - Flemming Videbaek

- HLT - Hongwei Ke

- MTD - Rongrong Ma

- VPD - Daniel Brandenburg

- ZDC - Miroslav Simko and Lukas Kramarik

- Offline-QA - Lanny Ray

- TriggerBoard - Daniel Cebra

- Production & Calibrations - Gene Van Buren

- PWG Delegates

- LFSUPC: Chenliang Jin, Ben Kimelman

- BulkCorr: Kosuke Okubo, Ashish Pandav

- HeavyFlavor - Kaifeng Shen, Yingjie Zhou

- JetCorr - Tong Liu, Isaac Mooney

- Spin/ColdQCD: Yike Xu

- PWGC - Rongrong Ma

Meeting Schedule

- Weekly on Fridays at noon EST/EDT

- Zoom information:

Topic: STAR QA Board

Time: This is a recurring meeting, meet anytime

Join Zoom Meeting: https://riceuniversity.zoom.us/j/95314804042?pwd=ZUtBMzNZM3kwcEU3VDlyRURkN3JxUT09

Meeting ID: 953 1480 4042

Passcode: 2021

One tap mobile: +13462487799,,95314804042# US (Houston); +12532158782,,95314804042# US (Tacoma)

Dial by your location: +1 346 248 7799 US (Houston); +1 253 215 8782 US (Tacoma); +1 669 900 6833 US (San Jose); +1 646 876 9923 US (New York); +1 301 715 8592 US (Washington D.C); +1 312 626 6799 US (Chicago)

Find your local number: https://riceuniversity.zoom.us/u/amvmEfhce

Join by SIP: 95314804042@zoomcrc.com

Join by H.323: 162.255.37.11 (US West); 162.255.36.11 (US East); 115.114.131.7 (India Mumbai); 115.114.115.7 (India Hyderabad); 213.19.144.110 (Amsterdam Netherlands); 213.244.140.110 (Germany); 103.122.166.55 (Australia); 149.137.40.110 (Singapore); 64.211.144.160 (Brazil); 69.174.57.160 (Canada); 207.226.132.110 (Japan)

AuAu 7.7GeV (2021)

Run-21 (BES-2) RunQA :: Au+Au at 7.7GeV

- Trigger Board Summary Report

- Combined FastOffline QA reports

- HLT QA:

- Detector QA:

- PWG QA:

- Weekly Meetings

Fixed Target Au+Au (2021)

Run-21 (BES-2) RunQA :: Fixed Target Au+Au

(((PLACEHOLDERS)))

- FXT - √sNN=3.0GeV

- days:

- HLT

- PWG::LFSUPC

- PWG::BulkCorr

- FXT - √sNN=9.2GeV

- FXT - √sNN=11.5GeV

- FXT - √sNN=13.7GeV

- FXT - √sNN=3.0GeV

- ...

Run 22 QA

Weekly on Fridays at noon EST/EDT

Zoom information:

=========================

Topic: STAR QA board meeting

Mailing List:

https://lists.bnl.gov/mailman/listinfo/STAR-QAboard-l

=========================

BES-II Data QA:

Summary Page by Rongrong:

https://drupal.star.bnl.gov/STAR/pwg/common/bes-ii-run-qa

==================

Run QA: Ashik Ikbal, Li-Ke Liu (Prithwish Tribedy, Yu Hu as code developers)

Centrality: Zach Sweger, Shuai Zhou, Zuowen Liu (pileup rejection), Xin Zhang

- Friday, Oct 15, 2021. 12pm BNL Time

- Run-by-Run QA code introduction (Yu Hu)

- Friday, Oct 22, 2021. 12pm BNL Time

- List of variables from different groups

- Daniel: centrality

- Chenliang: LF

- Ashish: bulk cor

- Ashik: FCV

- Kaifeng: HF

- Tong: jet cor

- Friday, Oct 29, 2021. 12pm BNL Time

- Friday, Nov 5, 2021. 12pm BNL Time

- Friday, Nov 12, 2021. 12pm BNL Time

- Update on 19.6GeV QA after removing injection runs (Ashik)

- Update on 19.6GeV QA for centrality determination (Li-Ke Liu)

- Au+Au 7.7 GeV QA (Vipul Bairathi)

- Maker for Bad Runs (Xiaoyu Liu)

- Progress on BES-II Data QA

- Friday, Nov 19, 2021. 12pm BNL Time

- Progress on BES-II Data QA

- Offline QA from ColdQCD group

- Friday, Dec 3, 2021. 12pm BNL Time

- Progress on BES-II Data QA (Ashik)

- Run22 Offline QA

- Friday, Dec 10, 2021. 12pm BNL Time

- Progress on BES-II Data QA (Ashik)

- Run22 Offline QA (Lanny, Gene, Akio)

- Friday, Dec 17, 2021. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- Progress on BES-II Data QA

- Update on StBadRunChecker (Xiaoyu Liu)

- Run-by-Run QA Status Report (Daniel Cebra)

- Run-by-Run QA for 19.6 GeV (Ashik)

- QA variables for heavy flavor PWG (Kaifeng)

- Friday, Dec 24, 2021. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- Friday, Dec 31, 2021. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- Friday, January 7, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Status of 14.6GeV Run-by-Run QA (Ashik)

- Friday, January 14, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Update on 14.6GeV QA (Li-Ke, Ashik)

- Friday, January 21, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Friday, January 28, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, February 4, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- 14.6 GeV bad runs list assessment (Daniel Cebra)

- Friday, February 11, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, February 18, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- 14.6GeV Run QA Update (Li-Ke Liu)

- Friday, February 25, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, March 4, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Run-by-Run QA 4.59GeV (Eugenia Khyzhniak)

- Friday, March 11, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Friday, March 18, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Friday, March 25, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, April 1, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Friday, April 8, 2022. 12pm BNL Time

- Run22 Offline QA

- Offline QA Report (Lanny Ray)

- QA Report from PWG

- BES-II Data QA

- Friday, April 15, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, April 22, 2022. 12pm BNL Time

- Run22 Offline QA

- BES-II Data QA

- Friday, May 20, 2022. 12pm BNL Time

- ETOF QA Variables Suggestion (Philipp Weidenkaff)

- Friday, July 1, 2022. 12pm BNL Time

- An assessment of the various run-by-run QA for the 3.2 GeV FXT dataset (Daniel Cebra)

- New run-by-run algorithm (Yu Hu)

- Run-by-run algorithm (Tommy)

- Friday, July 15, 2022. 12pm BNL Time

- Friday, July 22, 2022. 12pm BNL Time

- Friday, July 29, 2022. 12pm BNL Time

- FXT Run-by-Run QA (Eugenia Khyzhniak)

- Friday, Aug 12, 2022. 12pm BNL Time

- Run21 7.7GeV brief QA (Chenliang Jin)

- Friday, Aug 19, 2022. 12pm BNL Time

- Run21 7.7GeV data QA (Emmy Duckworth)

- Run21 7.7GeV dEdx QA (Chenliang Jin)

- 7.7GeV 2021 ETOF QA (Matt Harasty)

- Run-by-run QA FXT: Au+Au @5.75 GeV (Sameer Aslam)

- Friday, Aug 26, 2022. 12pm BNL Time

- Run20 FXT Bad Runs Lists (Daniel Cebra)

- Run-by-run QA FXT: Au+Au @5.75 GeV (Sameer Aslam)

- Friday, Sep 2, 2022. 12pm BNL Time

- Run21 7.7GeV run-by-run QA (Chenliang Jin)

- Friday, Sep 9, 2022. 12pm BNL Time

- Run-by-run QA FXT (4.59/7.3/31.2 AGeV run19) (5.75/7.3/9.8/13.5/19.5/31.2 AGeV run20) (Eugenia Khyzhniak)

- Run-By-Run QA for Run18 Au+Au @ 26.5 GeV (Muhammad Ibrahim Abdulhamid)

- Friday, Oct 14, 2022. 12pm BNL Time

- 2021 7.7GeV QA (Emmy Duckworth)

- Friday, Nov 11, 2022. 12pm BNL Time

- QA status for Run19 AuAu200 dataset (Takahito Todoroki)

- Friday, Dec 2, 2022. 12pm BNL Time