2009.03.02 Application of the neural network for the cut optimization (zero try)

Multilayer perceptron (feedforward neural networks)

A multilayer perceptron (MLP) is a feedforward neural network trained with the standard backpropagation algorithm.

MLPs are supervised networks, so they require a desired response for training.

They learn how to transform input data into a desired response, so they are widely used for pattern classification.

With one or two hidden layers, they can approximate virtually any input-output map.

They have been shown to approximate the performance of optimal statistical classifiers in difficult problems.
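As a rough illustration of the forward pass and backpropagation described above, here is a minimal pure-Python sketch of an MLP with a single hidden layer. It is not the ROOT implementation: the sigmoid activation, the squared-error loss, the layer sizes, the input values, and all function names (`init_mlp`, `forward`, `loss`, `backprop`) are assumptions made for this example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_mlp(n_in, n_hidden, seed=0):
    """Random weights; the last entry of every weight list is the bias."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)]
          for _ in range(n_hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    return w1, w2

def forward(w1, w2, x):
    """Return (hidden activations, network output) for one input vector."""
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x)) + row[-1])
              for row in w1]
    out = sigmoid(sum(w * h for w, h in zip(w2, hidden)) + w2[-1])
    return hidden, out

def loss(w1, w2, x, target):
    """Squared error between the network output and the desired response."""
    return 0.5 * (forward(w1, w2, x)[1] - target) ** 2

def backprop(w1, w2, x, target):
    """Gradient of the squared error with respect to all weights."""
    hidden, out = forward(w1, w2, x)
    delta_out = (out - target) * out * (1.0 - out)
    grad_w2 = [delta_out * h for h in hidden] + [delta_out]
    grad_w1 = []
    for j, h in enumerate(hidden):
        # propagate the output error back through hidden node j
        delta_h = delta_out * w2[j] * h * (1.0 - h)
        grad_w1.append([delta_h * v for v in x] + [delta_h])
    return grad_w1, grad_w2

# Hypothetical example: five inputs, four hidden nodes, desired response 1
w1, w2 = init_mlp(n_in=5, n_hidden=4)
x = [0.2, -0.1, 1.0, 0.0, 0.5]  # made-up input values
grad_w1, grad_w2 = backprop(w1, w2, x, target=1.0)
```

The desired response (`target`) is what makes the training supervised: the gradient is driven entirely by the difference between the network output and that value.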

ROOT implementation for Multilayer perceptron

TMultiLayerPerceptron class in ROOT

mlpHiggs.C example

Application for cuts optimization in the gamma-jet analysis

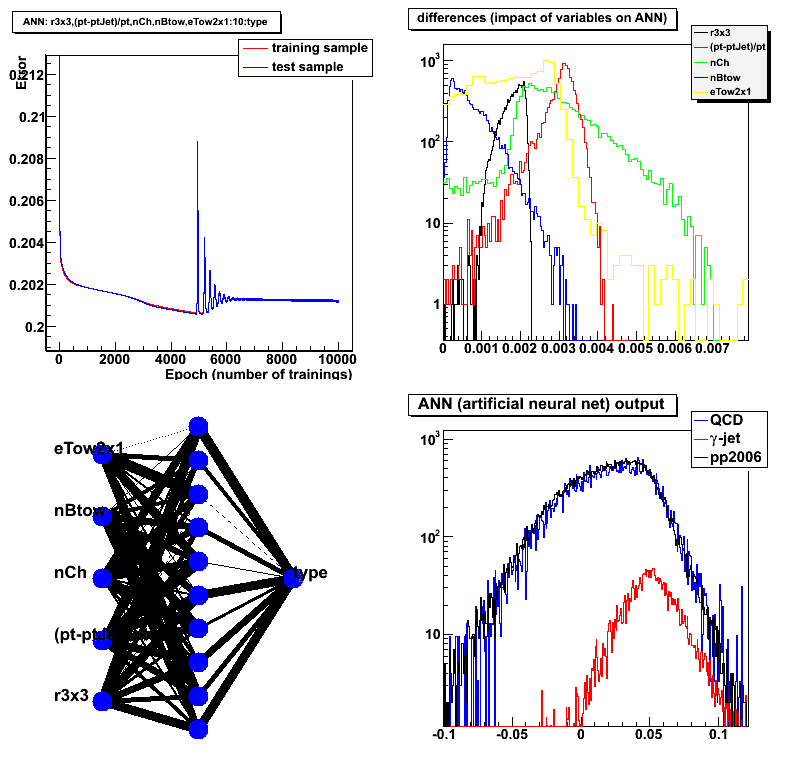

Network structure:

r3x3, (pt_gamma-pt_jet)/pt_gamma, nCharge, bBtow, eTow2x1: 10 hidden layers: one output layer

Figure 1:

- Upper left: Learning curve (error vs. number of training epochs). The learning method is steepest descent with fixed step size (batch learning)

- Upper right: Differences (how important the initial variables are for signal/background separation)

- Lower left: Network structure (line thickness corresponds to relative weight value)

- Lower right: Network output. Red: MC gamma-jets, blue: QCD background, black: pp2006 data
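The learning method named for the upper left panel, steepest descent with fixed step size in batch mode, can be sketched as follows. To keep the example short it trains a single sigmoid neuron rather than a full network; the function name `train_batch`, the step size, the number of epochs, and the toy data are all hypothetical and are not taken from the analysis.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_batch(samples, targets, step=0.5, epochs=200):
    """Batch steepest descent with a fixed step size on one sigmoid neuron.

    Returns the final weights (bias last) and the learning curve,
    i.e. the mean squared error recorded at every epoch.
    """
    n_in = len(samples[0])
    weights = [0.0] * (n_in + 1)
    curve = []
    for _ in range(epochs):
        grad = [0.0] * (n_in + 1)
        error = 0.0
        # accumulate the gradient over the whole training sample
        for x, t in zip(samples, targets):
            out = sigmoid(sum(w * v for w, v in zip(weights, x)) + weights[-1])
            error += 0.5 * (out - t) ** 2
            delta = (out - t) * out * (1.0 - out)
            for i, v in enumerate(x):
                grad[i] += delta * v
            grad[-1] += delta
        curve.append(error / len(samples))
        # one weight update per epoch, always with the same step size
        weights = [w - step * g / len(samples) for w, g in zip(weights, grad)]
    return weights, curve

# Toy one-dimensional sample: target 0 = background-like, 1 = signal-like
samples = [[0.0], [0.2], [0.8], [1.0]]
targets = [0.0, 0.0, 1.0, 1.0]
weights, curve = train_batch(samples, targets)
```

Plotting `curve` against the epoch index gives the kind of learning curve shown in the upper left panel. In batch mode the weights change only once per pass over the sample, in contrast to stochastic updates after every event.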

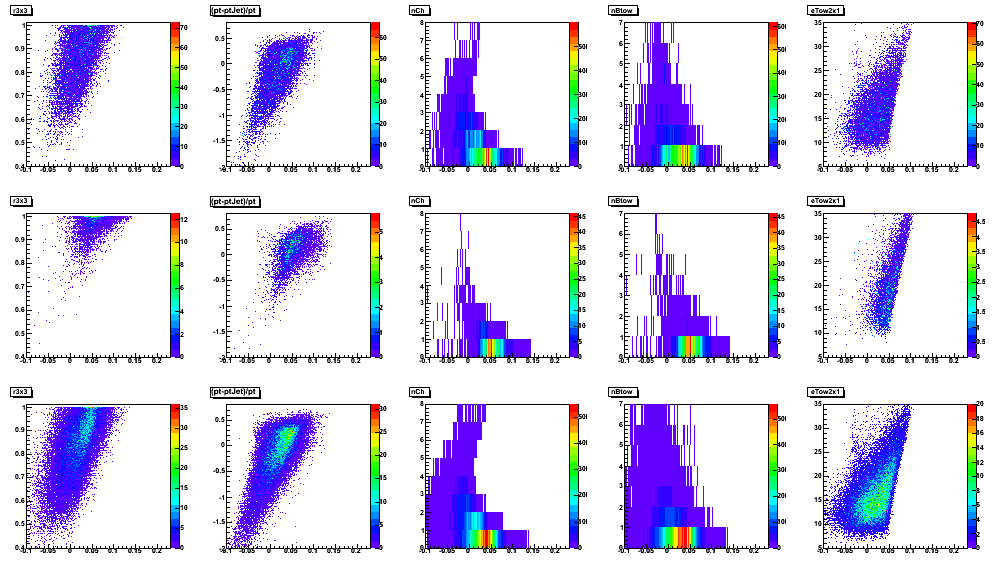

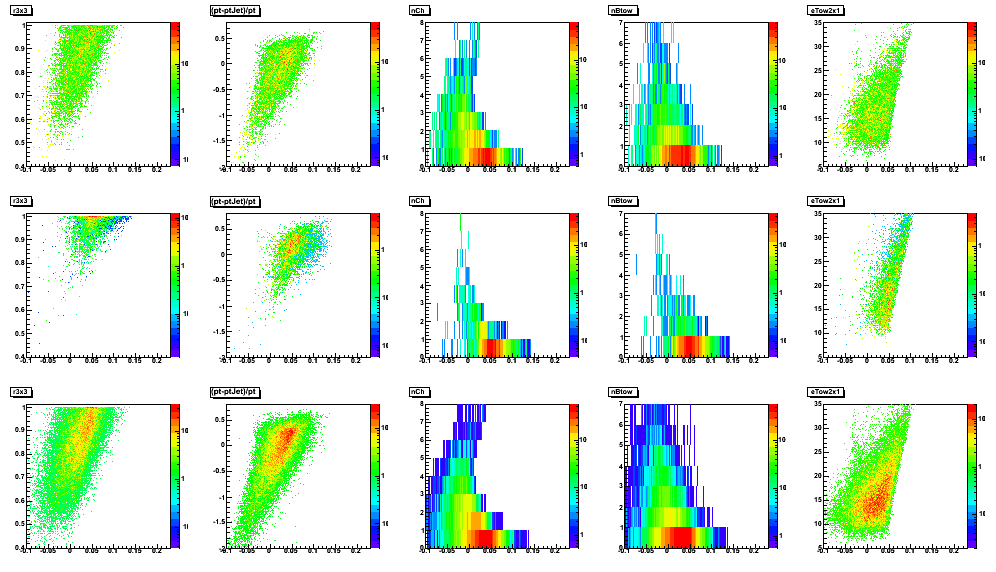

Figure 2: Input parameters vs. network output

Rows: 1: MC QCD, 2: gamma-jet, 3: pp2006 data

Vertical axis: r3x3, (pt_gamma-pt_jet)/pt_gamma, nCharge, bBtow, eTow2x1

Horizontal axis: network output
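Since the goal is cut optimization, one possible way to turn the network output into a cut is to scan a threshold and keep the value that maximizes a figure of merit. The sketch below assumes S/sqrt(S+B) as that figure of merit, which this entry does not specify; the function name `best_cut` and the output values are made up for illustration.

```python
import math

def best_cut(signal_out, background_out, n_steps=100):
    """Scan thresholds in [0, 1] and return (cut, S/sqrt(S+B)) at the maximum.

    S and B are the numbers of signal and background events whose
    network output lies above the threshold.
    """
    best = (0.0, 0.0)
    for k in range(n_steps + 1):
        cut = k / n_steps
        s = sum(1 for v in signal_out if v > cut)
        b = sum(1 for v in background_out if v > cut)
        if s + b == 0:
            continue  # nothing passes the cut
        fom = s / math.sqrt(s + b)
        if fom > best[1]:
            best = (cut, fom)
    return best

# Made-up network outputs for MC signal and MC background events
signal_out = [0.905, 0.805, 0.855, 0.705]
background_out = [0.105, 0.205, 0.305, 0.755]
cut, fom = best_cut(signal_out, background_out)
```

With real samples the event counts would normally be weighted to the expected yields before the figure of merit is computed; that step is omitted here.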
