Detector Sub-systems

Welcome to the STAR sub-system page. The menu displays all major sub-systems in the STAR setup.

Those pages are used for keeping documentation and information about the subsystems as well as some operational procedures, drawing, pictures and for detector sub-systems calibration procedures and result of studies as well.

Also consult the STAR internal reviews for more information.

- 2009/09 - 01 CPSN0510 : STAR FMS Review report, Summer 2009

- 2008/11 - 17 PSN0467 : TOF software review report, Fall 2008

- 2008/11 - 12 PSN0465 : EMC Calibrations Workshop report, fall 2008

- 2008/03 - 14 CPSN0455 : FTPC sub-system review, 2008

- 2007/01 - 11 You do not have access to view this node

- 2006/12 - 07-08 Review of STAR Tracking Upgrades and the You do not have access to view this node

See also Preview of the 2006/12 review findings for a summary of suggested configurations. - 2006/10 - 06-07 STAR TPC Review and the You do not have access to view this node

- 2006/07 - 07-08 STAR Inner Silicon tracking detector review and the You do not have access to view this node

- 2004/07 - 19-20 CPSN0454 : Report of the FTPC Review Committee, 2004

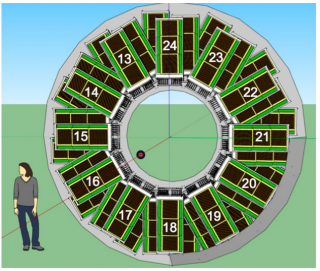

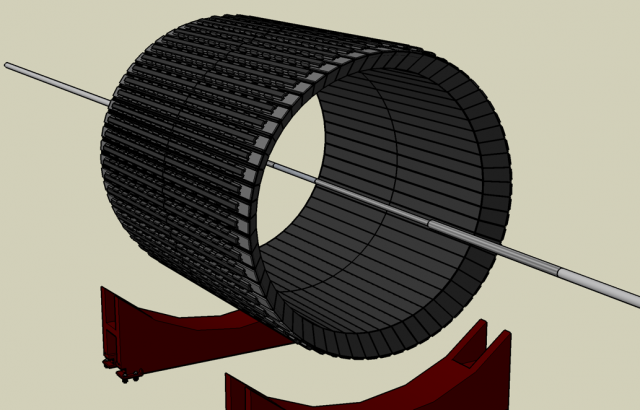

BEMC

The STAR Barrel Electromagnetic Calorimeter

BEMC Detector Operator Manual

Authored by O. Tsai, 04/13/2006 (updated 02/02/2011)

You will be operating three detectors:

| BTOW | Barrel Electromagnetic Calorimeter |

| BSMD | Barrel Shower Maximum Detector |

| BPSD | Barrel Preshower Detector |

All three detectors are delicate instruments and require careful and precise operation.

It is critical to consult and follow the “STAR DETECTOR STATES FOR RUN 11”

and “Detector Readiness Checklist” for instructions.

Rule 1: If you have a concern of what you are going to do with any of these detectors please don’t hesitate to ask people around you in the control room or call experts to get help or explanations.

This manual will tell you:

- how to turn On/Off low and high voltages for all three detectors.

- how to prepare BTOW for “Physics”.

- how to recover from a PMT HV Trip.

- how to deal with common problems.

First, familiarize yourself with the environment of the control room. This is a picture of the four terminal windows which you will be using to operate the BEMC systems. For run 11, Terminal 4 is not in use. Terminal 0 (not shown) is on the left side of terminal 1/

(clicking on a link will take you directly to that section in the manual)

0 - (on beatrice.starp.bnl.gov)

1 - (on emc02.starp.bnl.gov)

BEMC PMT Low Voltage Power Supply Slow Control

2 - (on emcsc.starp.bnl.gov)

BTOW HV Control

3 - (on hoosier.starp.bnl.gov)

BSMD HV Control

0 - (on emc01.starp.bnl.gov)

BPSD HV Control

To login on any of these computers use the emc account with password (check the control room copy of the manual).

Terminal 0 “BEMC Main Control Window”

Usually this terminal is logged on to beatrice.starp.bnl.gov

The list of tasks which you will be doing from this terminal is:

- Prepare BEMC detectors for Physics.

- Turning On/Off low voltages on FEEs.

- Turning On/Off BEMC crates.

- Resetting Radstone Boards (HDLC).

- Explain to experts during phone calls what you see on some of the terminals.

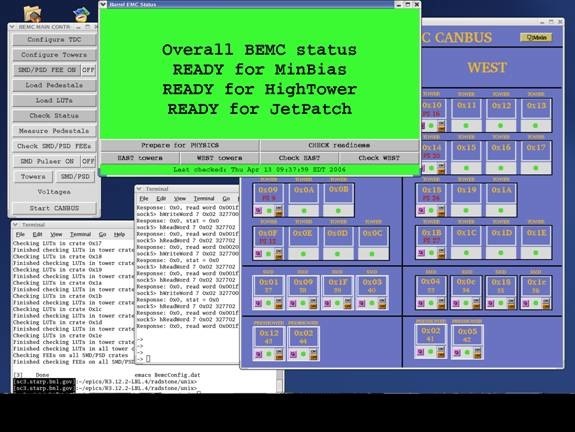

The screenshot above shows how the display on emc02.starp.bnl.gov usually looks during the run. There are five windows open all the time. They are:

- “Barrel EMC Status” - green.

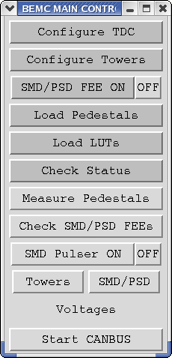

- “BEMC MAIN CONTROL” – gray.

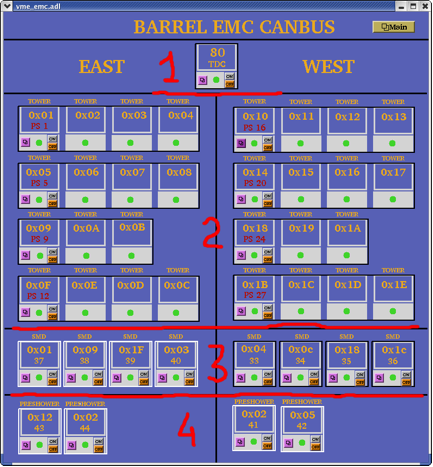

- “BARREL EMC CANBUS” – blue.

- Terminal on sc5.starp.bnl.gov (referred to as the ‘sc5 window’)

- Terminal from telnet scserv 9039 (referred to as ‘HDLC window’)

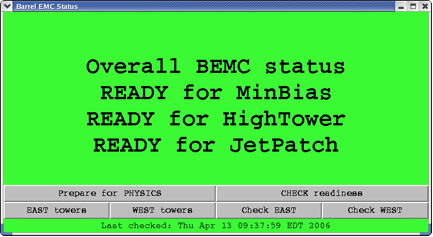

Prepare BEMC detectors for PHYSICS.

In normal operation this is a one click operation.

Click “Prepare for PHYSICS” button and in about 10 minutes “Barrel EMC Status” window will turn green and tell you that you are ready to run. This window may look a little bit different from year to year depending trigger requirements for BTOW.

However if this window turns red, then you will be requested to follow the suggested procedures which will popup on this window: simply click on these procedures to perform them.

During “prepare for physics” you can monitor the messages on the sc5 window. This will tell you what the program is actually doing. For example, when you click “Prepare for Physics” you will start a multi-step process which includes:

- Turning OFF FEEs on all SMD/PSD crates

- Programming TDC (Tower Data Collector, Crate 80)

- Reprogramming FPGAs on all BTOW crates

- Configuring all BTOW crates

- Configuring all SMD/PSD FEEs

- Loading pedestals on all BTOW crates

- Loading LUTs on all BTOW crates (only for pp running)

- Checking BTOW crate configuration

- Checking SMD/PSD configuration

- Checking that pedestals were loaded correctly (optional)

- Checking that LUTs were loaded correctly (only for pp running)

Usually, you will not be asked to use any other buttons shown on this window.

BEMC MAIN CONTROL

You can initiate all steps outlined above manually from the BEMC MAIN CONTROL window shown below, and do much more with the BEMC system. However, during normal operation you will not be asked to do that, except in cases when an expert on the phone might ask you to open some additional window from this panel and read back some parameters to diagnose a problem.

You might be asked to:

- Turn OFF or ON SMD/PSD FEE

- Open SMD/PSD panel and read voltages and currents on different SMD/PSD FEEs

- Open East or West panels to read voltages on some BTOW crates. (Click on Voltages)

That will help experts to diagnose problems you are calling about.

BARREL EMC CANBUS

From this window you can turn Off and On BEMC crates, read parameters of VME crates. This screenshot shows you this window during normal operation with all BEMC crates being ON.

- Crate 80 TDC (Tower Data Collector and “Radstone boards”)

- Thirty crates for BTOW

- Eight crates for BSMD

- Four crates for BPSD

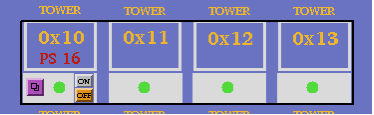

The BTOW crates are powered in groups of three or four from a single power supply (PS) units. The fragment below explains what you see.

Tower crates 0x10, 0x11, 0x12, 0x13 are all powered from a single power supply: PS 16.

Thus, by clicking the On and Off buttons you will be switching On/Off all four crates and the communication with PMT boxes which are associated with them. (see details in Tower HV Control GUI description).

Sc5 and HDLC windows.

Two other terminal windows on “Terminal 0” are the so-called sc5, and HDLC windows.

These need to be open at all times. To open the sc3 terminal you will need to login as sysuser on sc5.starp.bnl.gov with password (check the control room copy of the manual).

From this sc5 terminal you run two programs. The first program is emc.tcl. If you need for some reason to restart “BEMC MAIN CONTROL” or “Barrel EMC Status” GUI you need to start emc.tcl: the alias for this is emc. To kill this program use alias kill_emc.

To open “Barrel EMC Canbus” GUI use alias emc_canbus_noscale.

If you need to reboot canbus then:

- open sc5 window

- telnet scserv 9040

- press “Ctrl” and “x” keys

- wait while canbus reboots (~5 minutes or so)

- press “Ctrl” and “]” keys

- quit telnet session

- close sc5 window

To open an HDLC window, first you need to open an sc3 window and then telnet scserv 9039.

To close telnet session you need to press “Ctrl” and “]”, and then quit from telnet.

You may be asked by experts on the phone to reset the radstone boards. This is why you need this window open. There are two radstone boards and to reset them type:

radstoneReset 0

and

radstoneReset 1

Terminal 1. “BEMC PMT Low Voltage Power Supply Slow Control”

There is a change in operation procedures for Run7 for PMT HV Low Voltage power supplies.

There are two low voltage power supply PL512 units which powers PMT bases.

PL512 with IP address 130.199.60.79 powers West side and PL512 with IP address 130.199.60.81 powers East side of the detector. A single power supply feeds thirty PMT boxes. The GUI for both PL512 should be open all time on one of the workspace on Terminal1. A screenshot below shows GUI at normal conditions. Both PL512 should be ON all the time, except the case when power cycling of the PMT bases is required.

There are two buttons to turn power On and Off, as usual, wait 30 sec. after turning power supply Off before you will turn it On again. To start GUI use aliases bemc_west and bemc_east on sc5.starp.bnl.gov

Terminal 2. “BTOW HV Control”

This is typical screen shot of the BTOW HV GUI during “Physics” running.

What is shown on this screen?

The top portion of the screen shows the status of the sixty BTOW PMT boxes. In this color scheme green means OK, yellow means bad, gray means masked out.

Buttons marked “PS1 ON” etc. allows to ramp slowly HV on the groups of the boxes. (PS1 ON will bring only boxes 32-39)

Buttons “EAST ON” and “WEST ON” allows to ramp up slowly entire east or west sides.

The fragment below explains what the numbers on this screen means.

![]()

Each green dot represent a single PMT box. Label 0x10 tells you that signals from PMTs in boxes 1 and 2 feed to BTOW crate 0x10, boxes 3 and 4 feed crate 0x11 etc. You will need to know this correspondence to quickly recover from HV trips.

Turning ON HV on BTOW from scratch.

1. BEMC PMT LV East and West should be ON.

2. EMC_HighVoltage.vi should be running.

3. Made a slow ramp on the West Side by pressing button “WEST ON”

4. Made a slow ramp on the East Side by pressing button “EAST ON”

Both steps 3 and 4 takes time, there is no audio or visual alarm to tell operators that HV was ramped – operators should observed progress in the window in the “Main Control” subpanel (see below).

Subpanel “Main Control”

The HV on PMTs is usually ON during the Run. The two buttons on top are for turning the HV On and OFF on all PMT boxes. The most frequently used button on this subpanel is “Re-Apply HV all PMT Boxes” which is usually used to recover after HV trip. Sometimes you will need to “Re-Apply HV current PMT box” if the system does not set the HV on all boxes cleanly.

The scale 0-60 shows you a progress report. The small icons below this scale tells you what PMT box and PMT were addressed or readout.

Once you recover from a HV trip please pay attention to the small boxes labeled “Box”, “PMT”, and the speed at which the program reads the voltages on the PMTs. This will tell you which box has “Timeout” problems and which power supply will need to be power cycled.

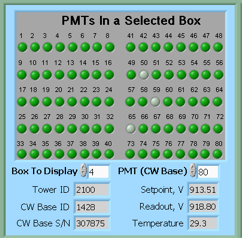

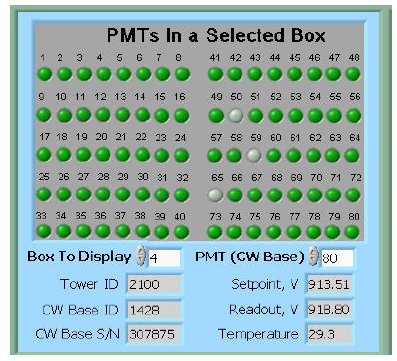

Subpanel “PMTs In a Selected Box”

This subpanel shows you the status of the PMTs in a given PMT box.

If you want to manually bring a single PMT box to the operational state by clicking on “Re-Apply HV current PMT box” on the Main Control subpanel you will need to specify which Box To Display on the panel first.

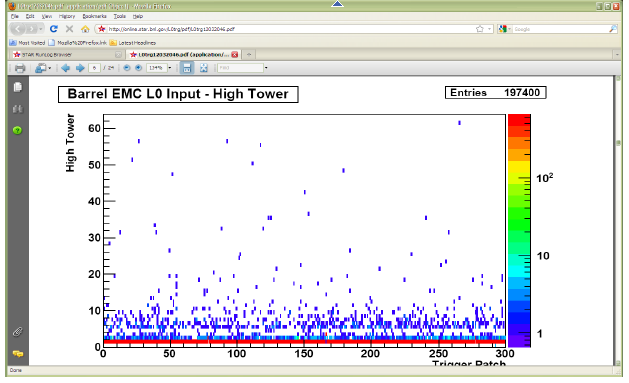

Subpanel “Alarm Control”

On the Alarm Control sub-panel the SOUND should be always ON, except for the case when you are recovering from a HV trip and wish to mute this annoying sound.

Shift personnel are asked to report HV trips in the shift log (type of trip, e.g. single box#, with or without timeout, massive trip, etc…)

Please don’t forget to switch this button back to the ON position after recovering from a trip.

The Main Alarm LED will switch color from green to red in case of an alarm.

HV trip with Timeout problem.

Typical situation – you hear a sound alarm indicating a HV trip. The auto-recovery program did not bring all PMT boxes to the operational state, e.g. some boxes will be shown in yellow. First thing to check for the presence of a “Timeout” problem.

Look at the right upper corner of the GUI. If the field below “Timeouts” is blank then try to recover by re-applying HV to all PMT boxes if the number of bad boxes more then two. If only one or two boxes is yellow then you can try to re-apply HV to the current PMT box.

If only one or two PMT bases timed out and HV tripped try to recover using above procedure. It is possible that one or two PMT bases will timed without causing trips of HV, then just continue running and made a note in the shift log about timed out PMT bases, experts will take care of this problem during the day.

But it is also a chance that a single timed out PMT base will trip lots of other PMT. In this case this bad PMT should be masked out. The procedure to do this is simple and can be found at the end of this manual. However, this is an expert operation and should only be performed after consulting with a BEMC expert.

However, if the field below “Timeouts” is filled with numbers (these are PMTs addresses) then you have a Timeout problem. The procedure to recover is below:

- notify shift leader about this problem and tell him that it will take at least 20 min. to bring back BEMC for Physics running.

- Second, try to identify which PMT box has timeouts (usually it will be first box in yellow counting from 1 to 60). If you are not sure which box has the timeout problem then read all pmt boxes by clicking on corresponding button at the “Main Control” subpanel, and observe which box creates the problem. The box with timeout problem will be responding VERY slowly and will be clearly seen on the “Main Control” subpanel. At the same time, PMTs addresses will be appear in “Timeouts” white space to the right of the green control panel.

- As soon as you find the box with the timeout problem, click “Cancel” on the “Main Control” subpanel and then click “OFF” – you will need to turn HV OFF on all PMTs.

- Wait till HV is shut OFF (all PMT Boxes).

- From Terminal 1, power cycle correspondent PL512 (Off, wait 30 sec., On).

- Now turn ON HV on PMT boxes from the “Main Control” subpanel. It will take about 2 to 3 minutes first to send desired voltages to all PMTs and then read them back – if the HV is set correctly.

It is possible that during step (6) one of the BEMC PMT LV on the East or West side will trip. In this case cancel ramp (press “Cancel” button on the “Main Control” subpanel). Power cycle tripped BEMC PMT LV. Proceed with the slow ramp.

However, if during step (6) you still get a “Timeout” problem then you will need to:

- Call experts

-------------------- Changes for Run10 operation procedures ----------------------------

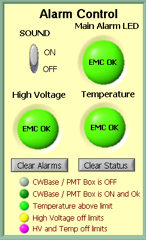

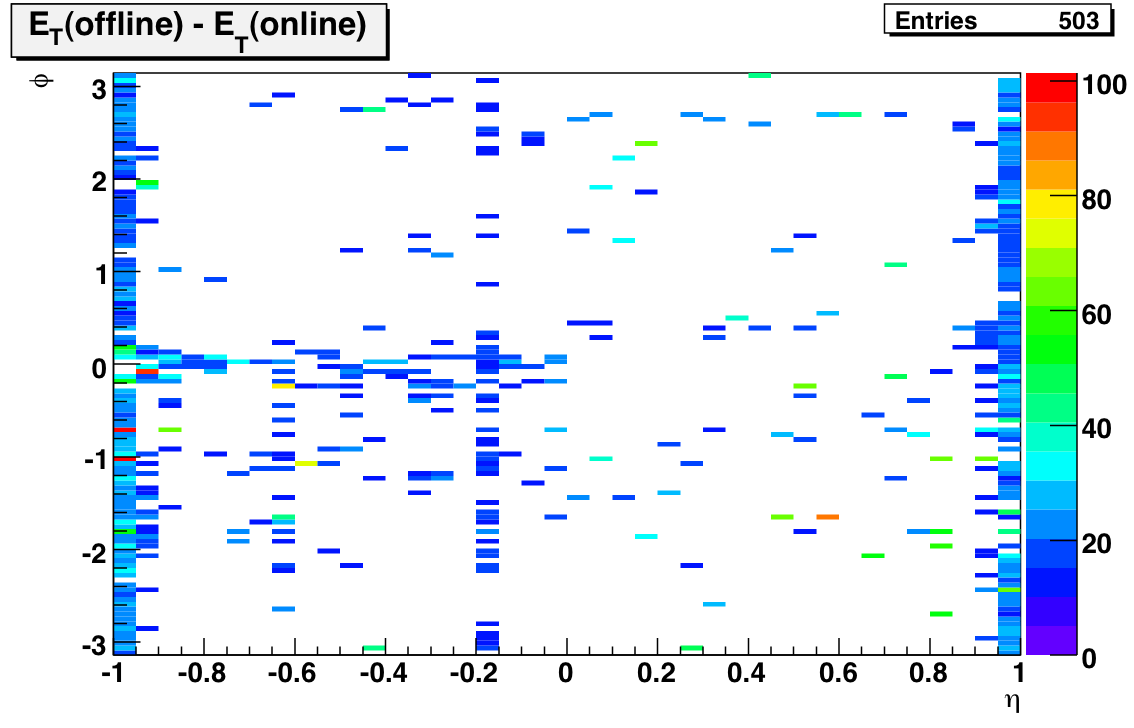

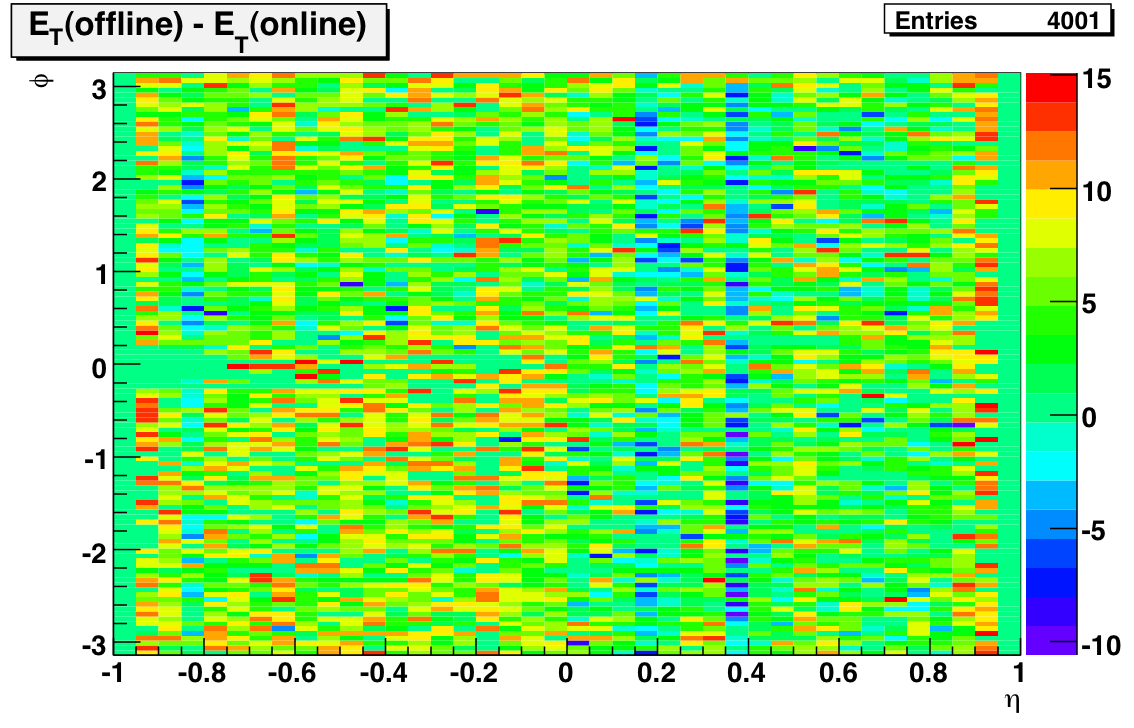

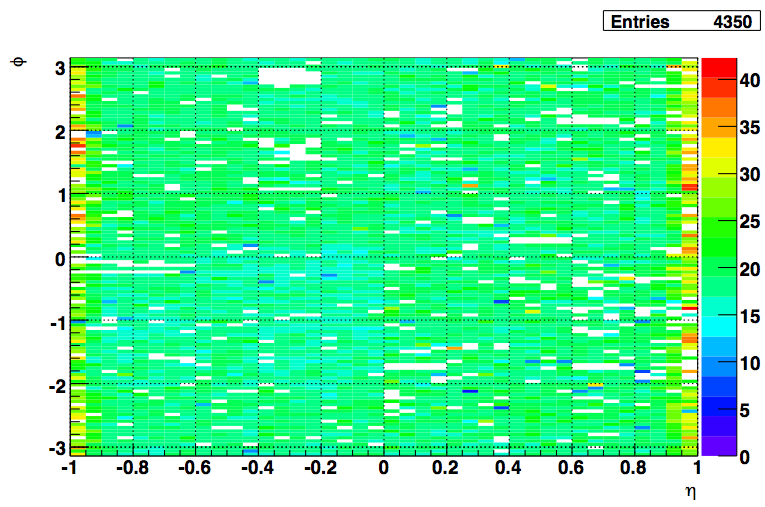

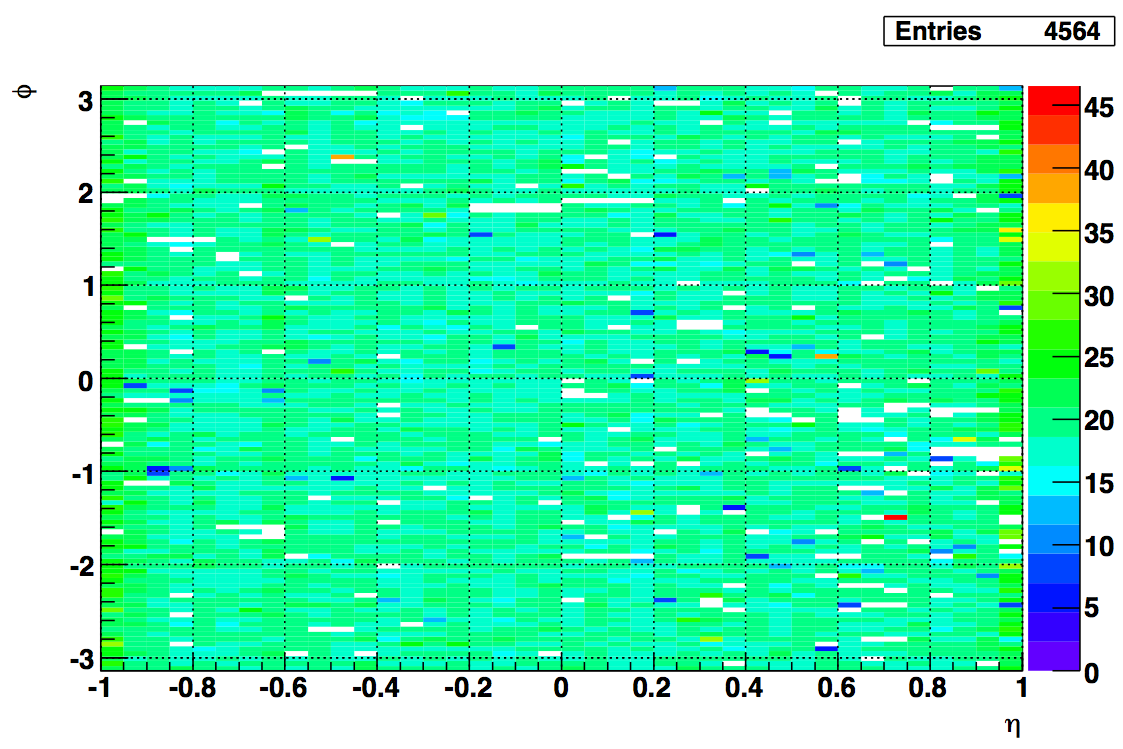

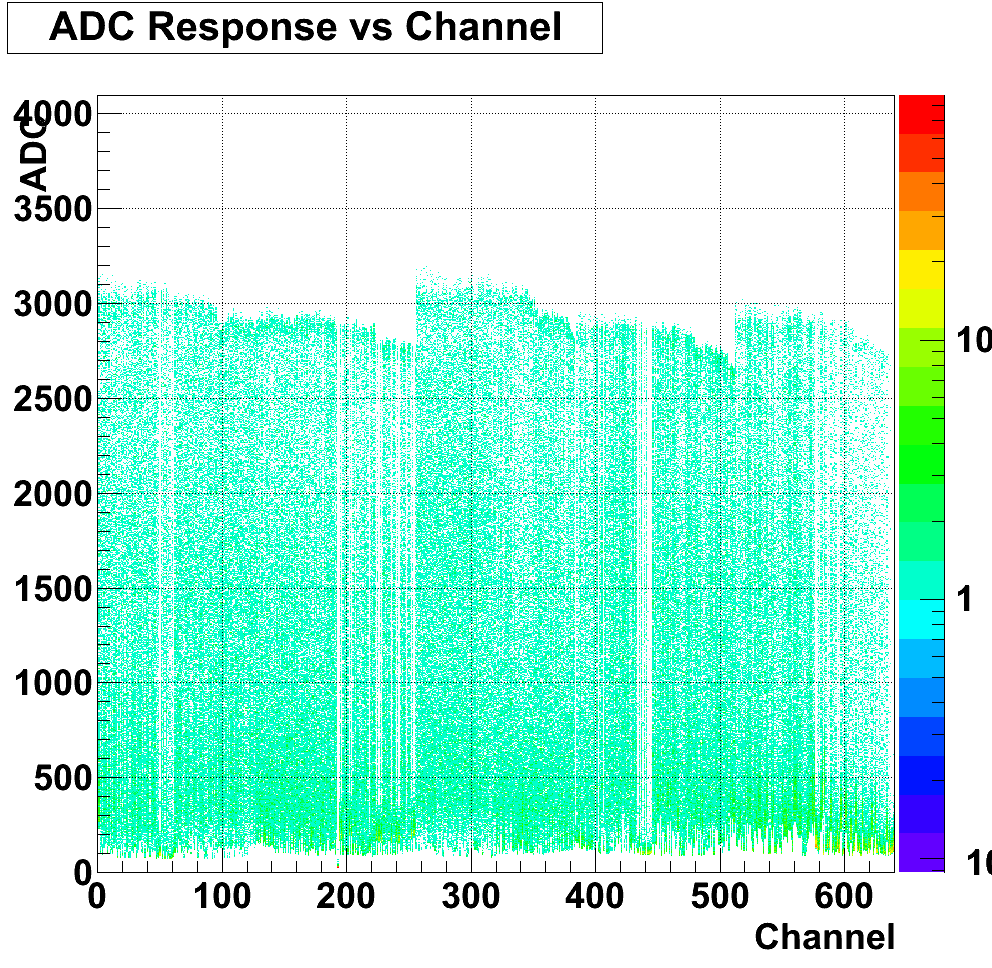

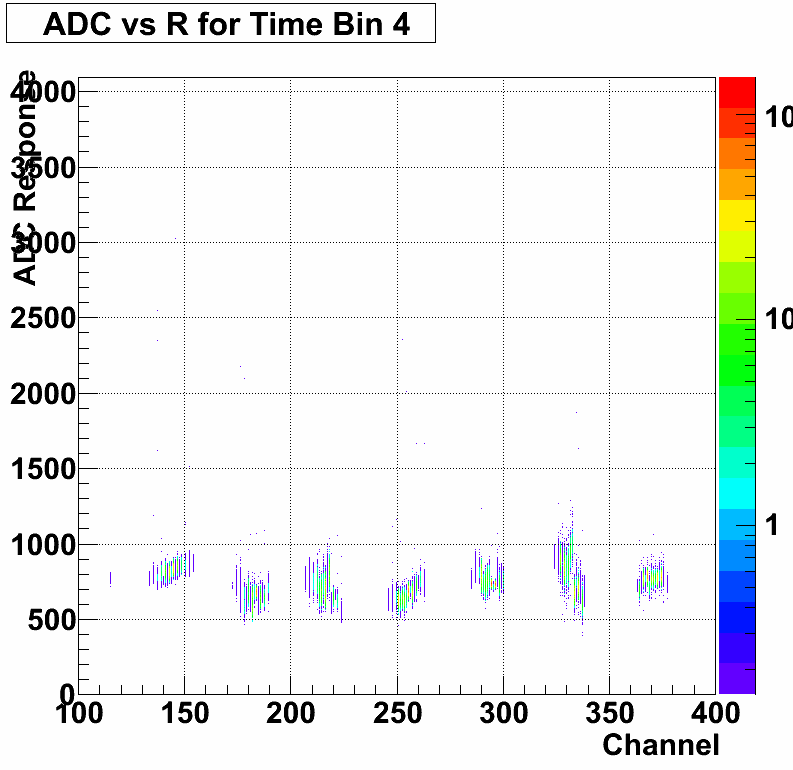

To reduce the number of HV trips and associated efficiency losses during data taking we changed functionality of the PMT HV program. Namely, the HV read back time interval was changed from 15 minutes to 9999 hours, because it was found that most of the HV trips were self induced during HV read back. As a result efficiency of data taking was improved for the price of “conscious operation”. You can‟t relay anymore on absence of the HV Trip alarm as an indicator that HV on all PMTs is at nominal values. Instead shift crew should monitor associated online plots during data taking to be sure that HV was not tripped. In particular, for Run 10, shift crew should watch two plots “ADC Eta Vs Phi” and “Tower ADC” under the “BEMC Shift” tab. Example of missed HV trip on one of the PMT box during recent data taking is shown below.

The white gap in the upper left corner shows absence of hits in BTOW due to HV trip in one of the BTOW PMT Box. It is easy to find out which box tripped by looking at second plot.

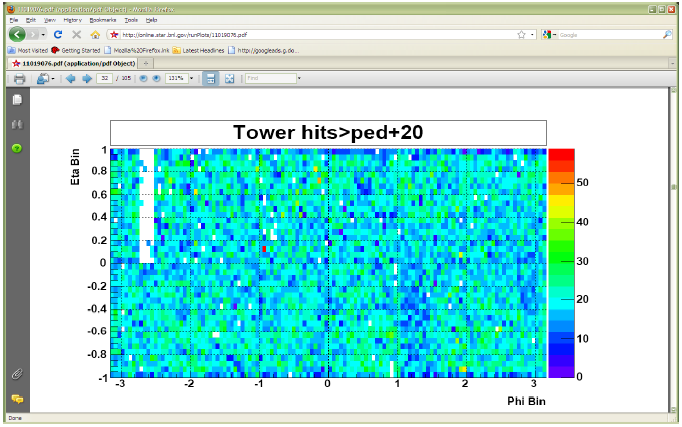

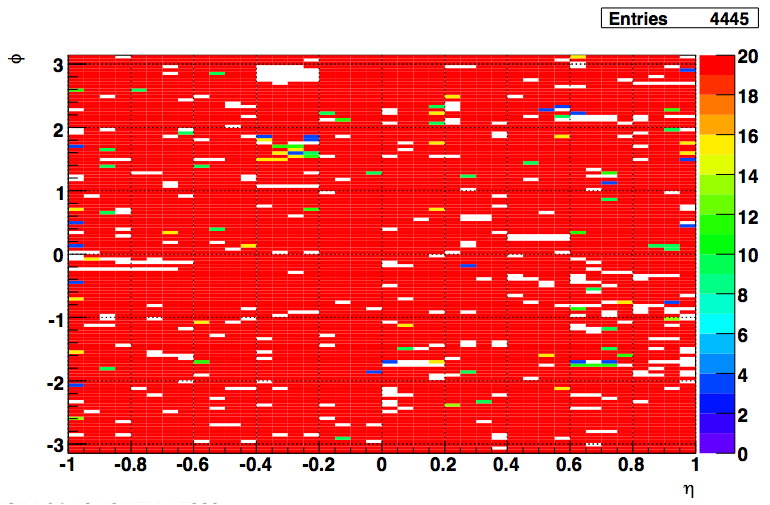

The gap with missing hits on the second subpanel for crate 0x15 will tell you that one of the PMT box 11 or 12 was tripped (correspondence between BTOW crates ID and PMT boxes is shown in the BTOW HV GUI see the picture at the beginning of this section).

What to do if shift crew will notify you of HV trip?

The fastest way to recover is to identify what box tripped and then try to recover only this PMT box. In some case it will be impossible to do this, because you will be needed to powercycle LV power supply for PMT HV system (timeout problems).

This is typical scenario:

1. Identify which PMT box(es) potentially tripped. (In the example above one PMT box 11 or 12 lost HV) to do that:

1.1 From the BTOW HV GUI form subpanel “PMTs In a Selected Box” select needed box in the “Box To Display” window.

1.2 From the BTOW HV GUI form subpanel “Main Control” press “Read HV current PMT Box”. (In the example above, detector operator found that Box 11 was OK after reading HV, but Box 12 had timeout problems).

1.3 Depending what will be result of (1.2) you may need to simply Re-Apply HV to current PMT box (no timeouts during step (1.2), or you will need to resolve timeout problem.

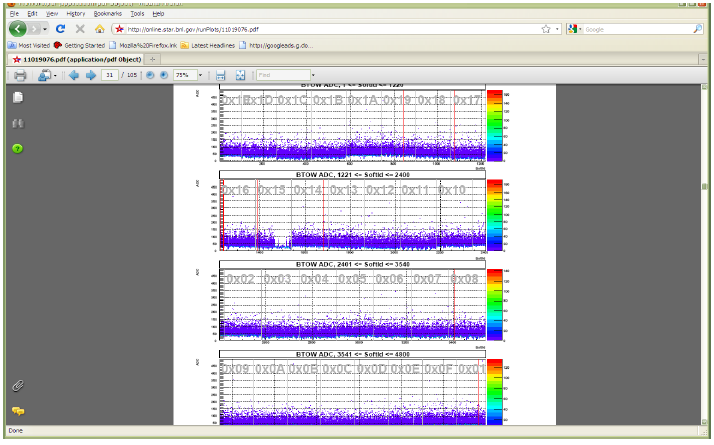

There are additional duties for detector operators when STAR is not taking data for long period of time for any reasons. We need to keep HV On on all PMTs at all time. This will assure stable gains on PMTs. If for some reasons PMTs will be Off for long time (few hours) then it will be difficult to assure that the PMTs gain will not drift once we turn HV On again. Typical situation is APEX days, when STAR is not taking data for 12 hours or so. To check that HV is on shift will be asked to take a short run 1k events using “bemcHTtest” configuration. TRG+DAQ+BTOW only. Once the data will be taken use a link from the run log browser to “LO Trigger”. Check page 6.

All trigger patches should have some hits. In case of the HV trips you will see blank spots.

Procedure to mask out single timeout PMT base.

Information you will need:

1. In which PMT box timeout PMT base is located

2. PMT (CW Base) to masked out.

In the timeout window the displayed number is CW Base ID this number need to be translated to PMT (CW Base).

From this sub panel you can find out which PMT in the affected PMT box need to be masked out. Scroll thru the

PMT (CW Base) top right small window and read out at the same time CW Base ID in the second from the bottom left window. (As shown, PMT (CW Base) 80 correspond to CW Base ID 1428).

Now, click “Configuration (expert only)” button on the Main Control panel.

Another panel EMC_Configuration.vi will open.

From this panel click “CW Bases Configuration” button on the right bottom.

Another panel EMC_CWBasesConf.vi will open.

On this panel specify PMT BOX Number and then click on desired CW Base to be masked out. The color of the dot will change from bright green to pale green. Then click OK button.

Panel will close after that.

Click OK button on EMC_Configuration.vi panel, this panle will close after that.

To check that you masked out right CW Base, Re-Apply HV to current PMT box. Once HV will be re-applied you will see masked CW base will be in the gray color as shown in the picture above (Bases 50, 59, 65 were masked out in the PMT box 4).

Terminal 3. “BSMD HV Control”

Your login name is emc, your password is __________________________

This screenshot shows how the window on the terminal3 will look when the HV is Off on the BSMD modules. There should be two open windows. One is a LabView GUI and another is a telnet session SY1527 (CAEN HV Main Frame).

In normal operation it is a one click procedure to turn the HV On or OFF on the BSMD.

There is complete description of the BSMD HV program in a separate folder in this document.

Although, operation is very simple, attention should be made for audio alarms.

Do not mute the ALARM. Shift personnel are asked to report all BSMD trips in the shift log.

Terminal 0. “BPSD HV Control”

The BPSD (Barrel PreShower Detector) HV supplies are two Lecroy 1440 HV systems located on the second floor platform, racks 2C5. Each 1440 is commanded by a 1445A controller which communicates via the telnet server on the second floor of the platform (SCSERV [130.199.60.167]). The left supply uses port 9041 and the right supply uses port 9043.

The HV for BPRS should be On at all times.

From Run 10 for BPRS control we will be using new GUI. They will be open on one of the desktop on Terminal 0 (Beatrice.starp.bnl.gov). Usually detector operators no need to take any actions regarding BPRS HV unless specifically requested by experts. The screen shots of of the new GUIs shown below.

To start the GUI use type bemc_lecroy and bemc_lecroy2.

A Green LED indicators tells you that HV is On and at desired level.

You can open subpanel for any slot to read actual values of HV. The screen shot is shown below.

Please Ignore empty current charts – it is normal.

Sometime, BEMC PSD GUI can turn white, due to intermittent problems with LeCroy crate controller. Simply make a log in the shift log and continue normal operation. It is likely HV is still On and at desired level. Experts not need to be called right away in this case.

Easy Troubles:

BEMC Main Control seems to be frozen, e.g. program doesn’t respond to operators requests.

Probable reason: RadStone cards in a “funny state” and needs to be reset

From Terminal 1 try to:

- kill_emc

- soft reboot first “Ctrl” + “x” from HDLC terminal window.

- start emc and see if problem solved

If the problem is still there then:

- kill_emc

- Power Cycle Crate 80

- start emc and see if problem solved

If problem is still there call experts

PL512 Information (Run 11 configuration)

There are two PL512 power supplies which provides power to the BEMC PMT boxes. Both are located in the rack 2C2, second floor.

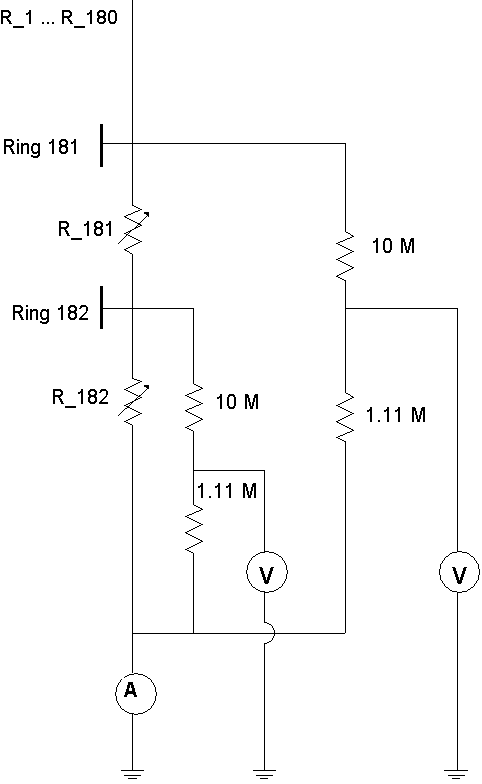

The top unit (IP address 130.199.60.79) serves West side of the detector. The bottom unit (IP address 130.199.60.81) serves East side of the detector. The connection scheme is shown below

PMT Boxes

West Side East Side

| 1-8 | 9-16 | 17-22 | 23-30 | 32-39 | 40-47 | 48-53 | 54-31 | |

| U 0,4,8 | 1,5,9 | 3,7,11 | 2,6,10 | 0,4,8 | 1,5,9 | 3,7,11 | 2,6,10 | |

| U 0,1,2,3 | +5 V | |||||||

| U 4,5,6,7 | -5 V | |||||||

| U 8,9,10,11 | +12 V |

Note , BEMC PMT Low Voltage Power Supply Slow Control channels enumerated from 1 to 12 in the Labview GUI.

Slow control for PL512 runs on EMC02, login as emc, alias PMT_LV.

You will need to specify IP address.

Configuration (Experts Only) password is ____________.

Log files will be created each time you will start PMT_LV in the directory

/home/emc/logs/

for example

/home/emc/logs/0703121259.txt (March 12, 2007, 12:59)

To restart PL512 epics applications.

Login to softioc1.starp.bnl.gov bemc/star_daq_sc

Look at procIDs

->screen –list

->screen –r xxxx.Bemc-west(east) xxxx is procID

->bemclvps2 > (ctrl A) to detach

exit to kill

->BEMC-West or East to restart

Experts control for PL512

If you need to adjust LV on PL512 you can do this using expert_bemc_west or (east).

These GUI has experts panel. Adjusting LV setting DO NOT try to slide the bars.

Instead click on it then on popup window you can simply type desired value.

Make sure you will close expert GUI and return to normal operational GUI once

you will finish adjustments.

A copy of this manual as a PDF document is available for download below.

Expert Contacts

Steve Trentalange (on site all run) e-mail: trent@physics.ucla.eduPhone: x1038 (BNL) or (323) 610-4724 (cell)

Oleg Tsai (on site all run) e-mail: tsai@physics.ucla.edu

Phone: x1038 (BNL)

SMD High Voltage Operation

Version 1.10 -- 04/25/06, O.D.TsaiOverview

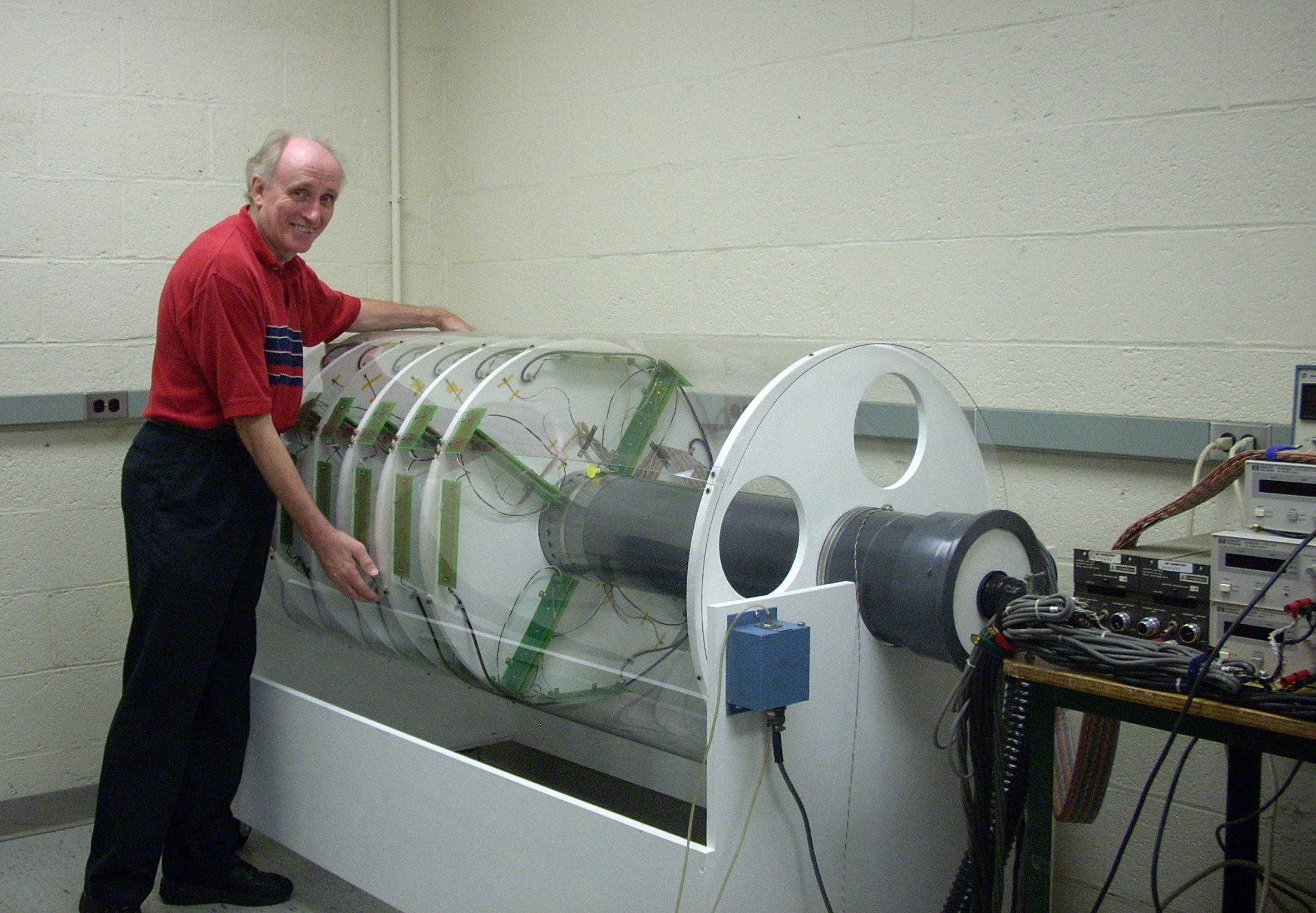

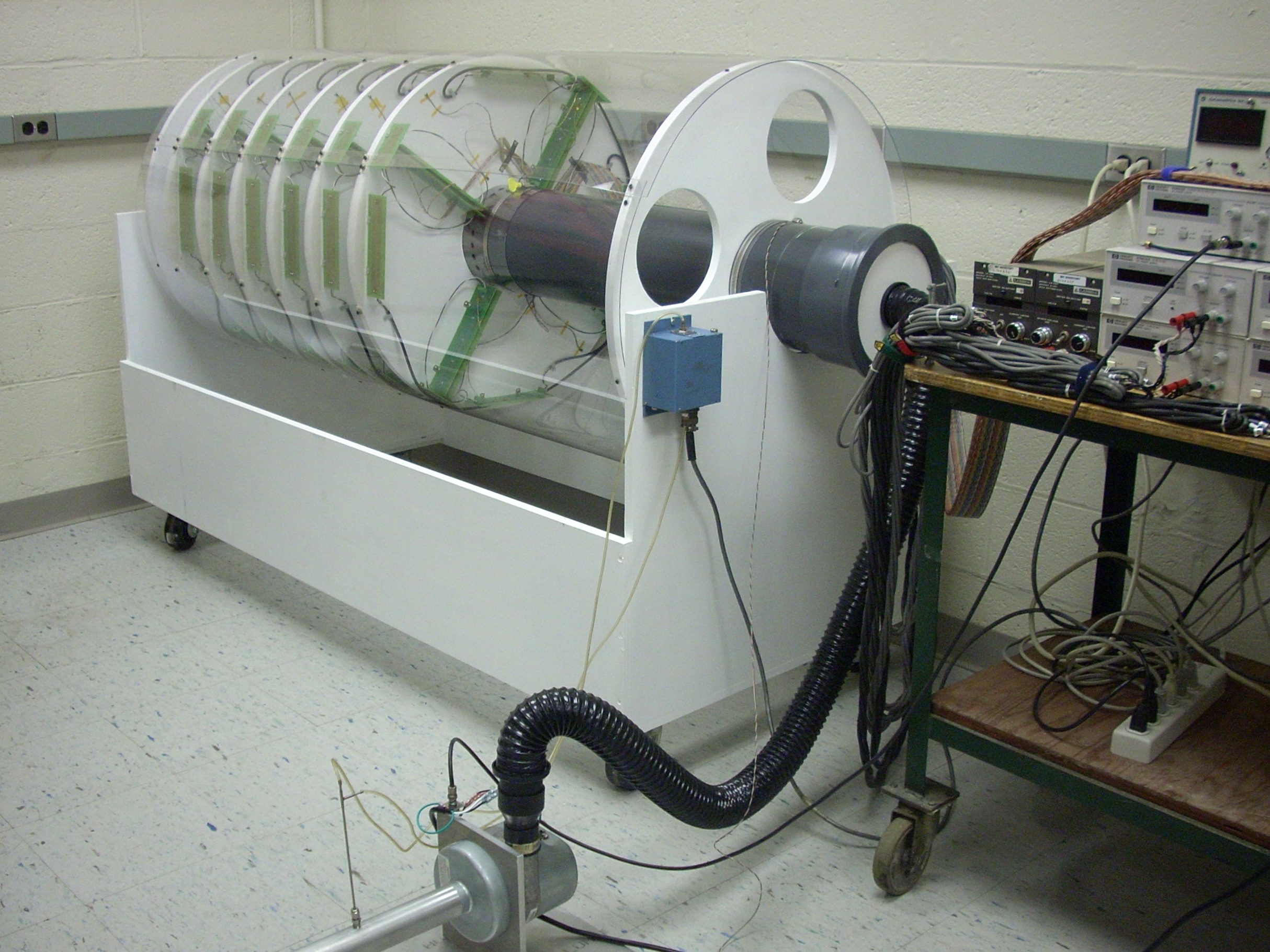

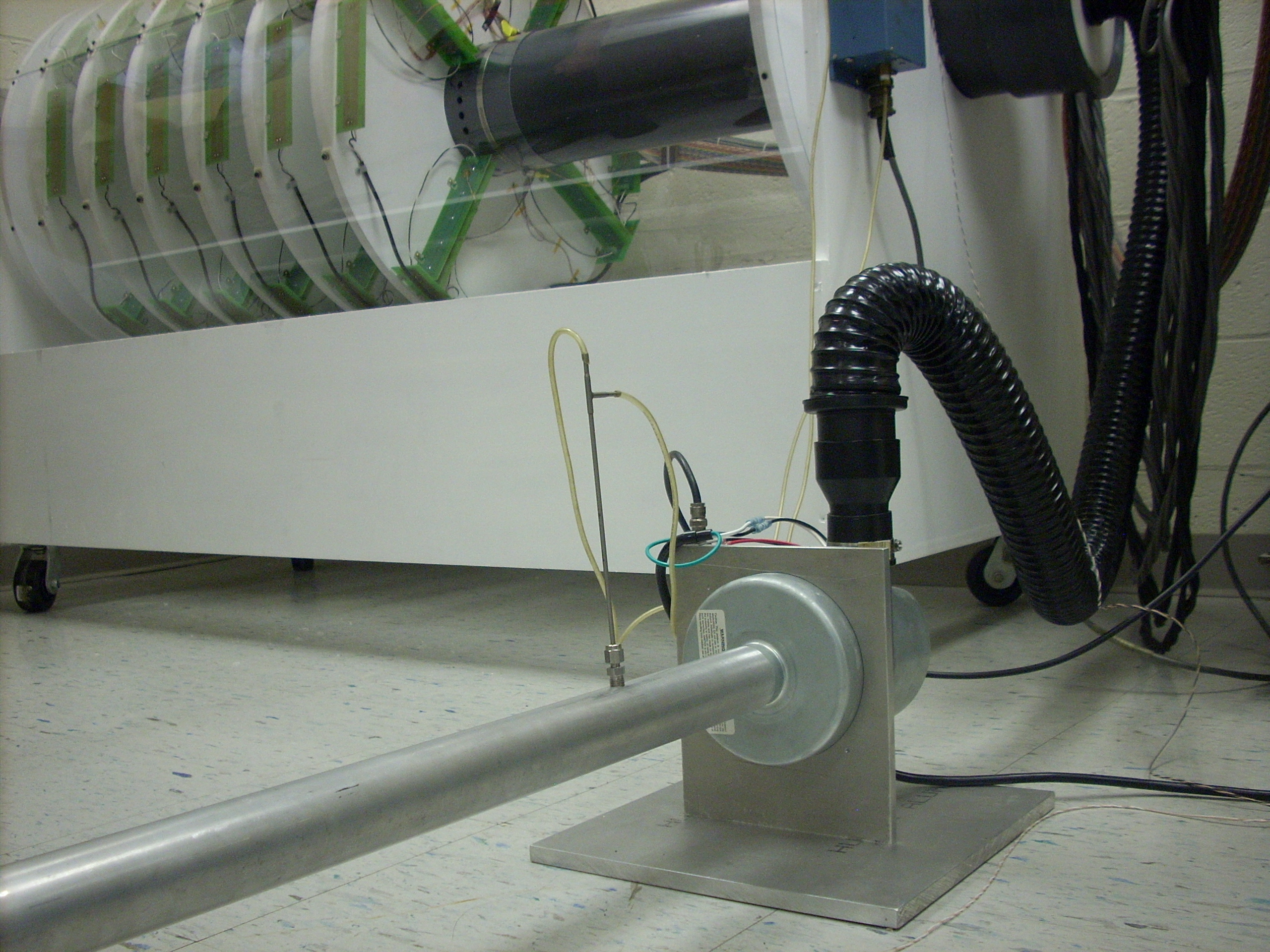

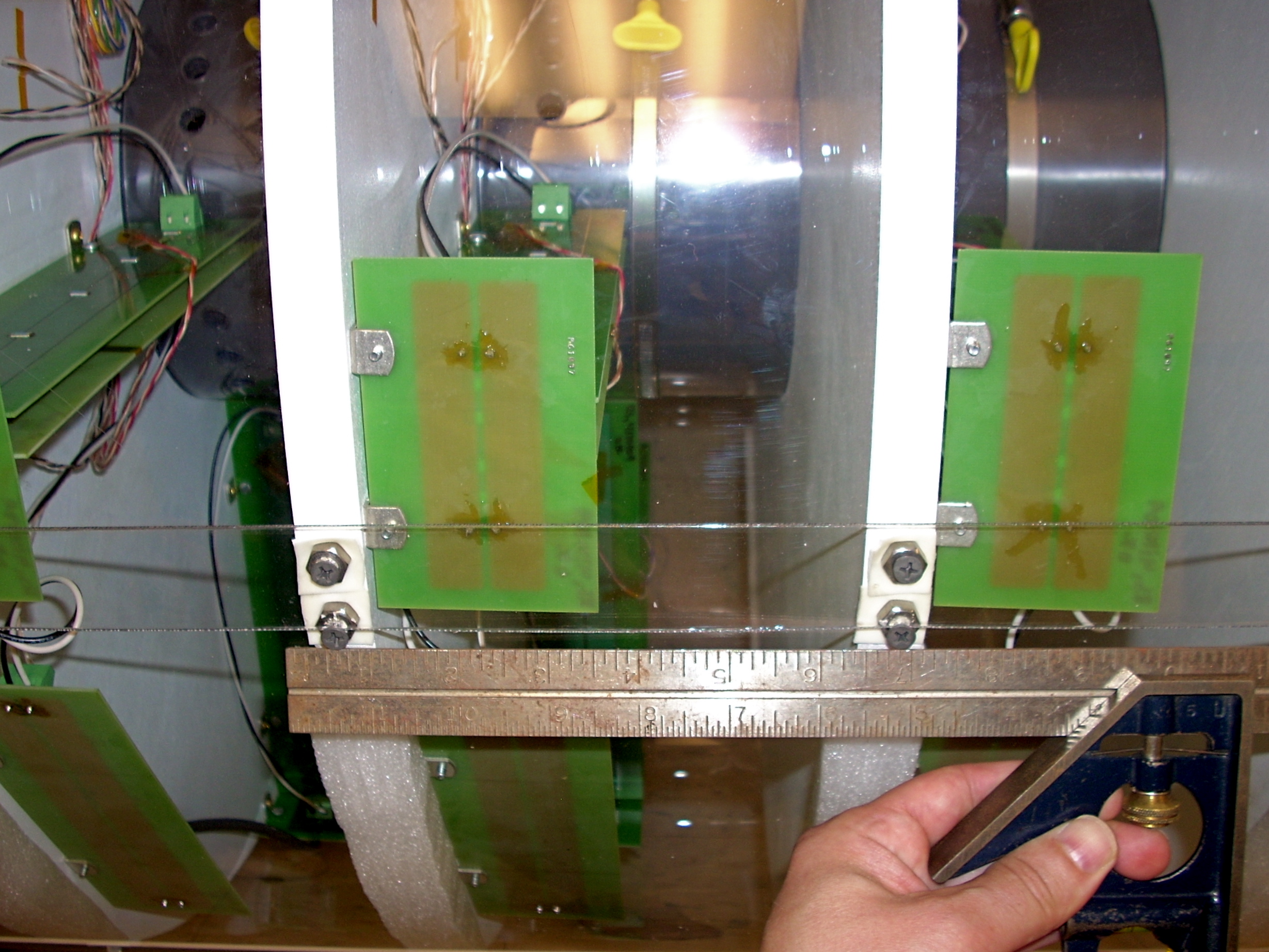

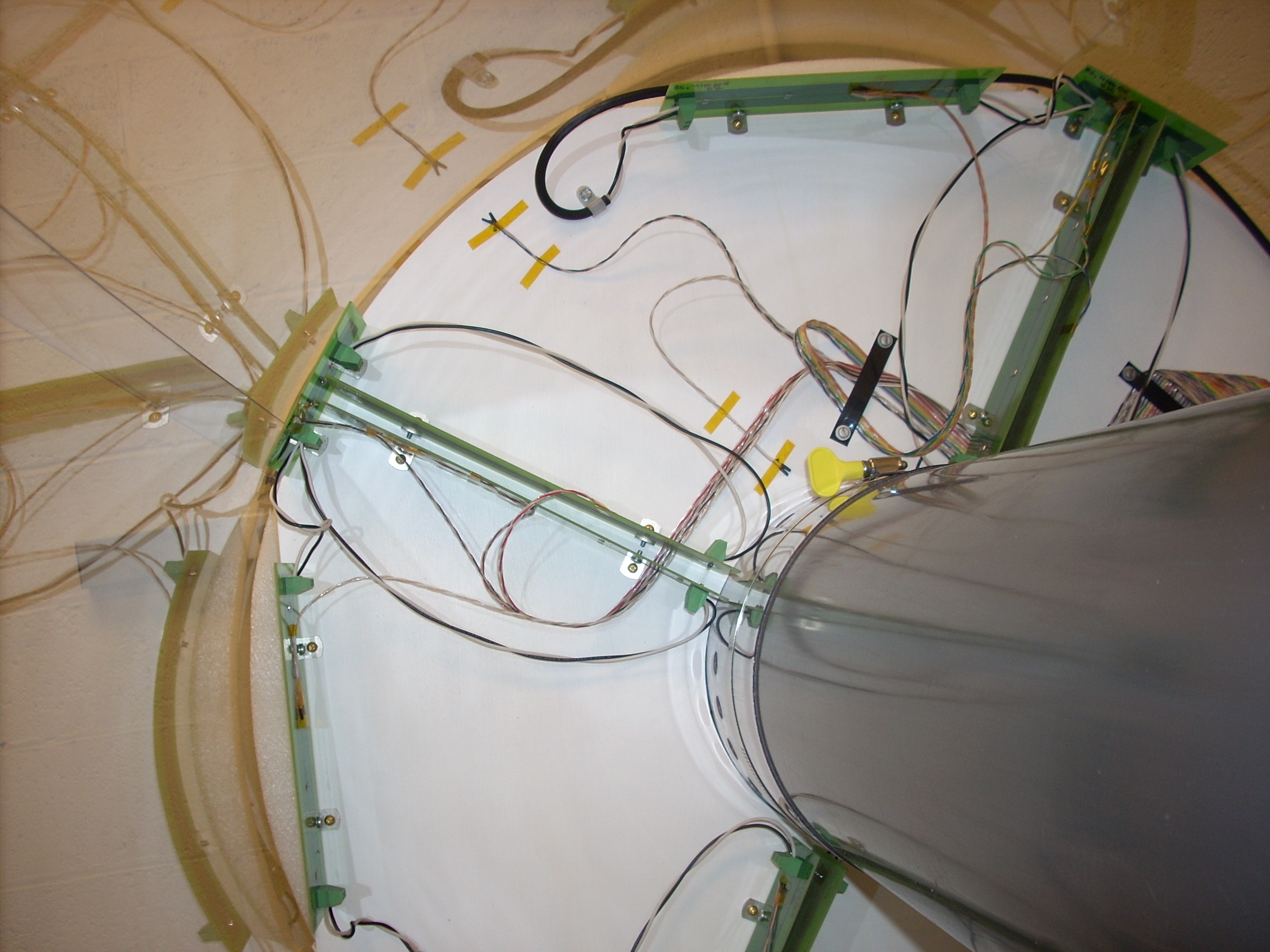

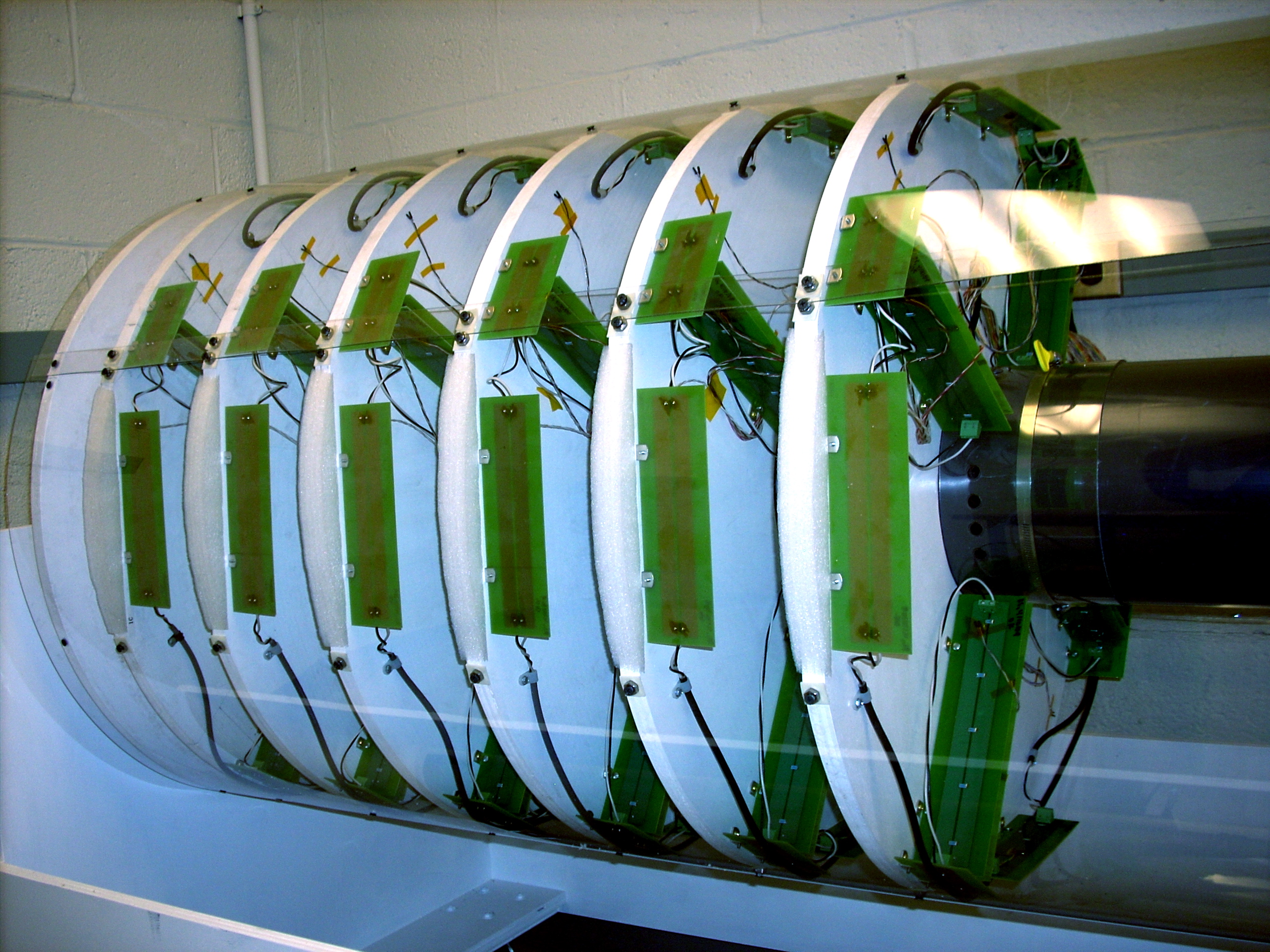

The SMD detectors are a set of 120 proportional wire chambers located inside the EMC modules (one per module). The operating gas is Ar/CO2(90/10). The nominal operating voltage is +1430 V. As for any other gaseous detector, manipulation with high voltage should be performed with great care.A detailed description of the system is given in the Appendix.

SMD HV must be turned OFF before magnet ramp !

Standard Operation includes three steps.

Turn HV ON

Turn HV OFF

Log Defective Modules

To turn SMD HV ON the procedure is:

- On HOOSIER.STARP.BNL.GOV computer double click on the SMDHV icon on the Windows desktop. The 'SMD HIGH VOLTAGE CONTROL' window will open.

- On the SMD HIGH VOLTAGE CONTROL window click 'POWER' button.

- 'POWER' button will turn RED

- In no later than 90 + 150 + 300 sec in window 'Current Mode' you will see the message - "Physics Mode"

- All modules with high voltage on them will be shown in GREEN, LIGHT BROWN or YELLOW.

To turn HV OFF on SMD the procedure is:

- Click on the green 'POWER' button. Result:

- 'POWER' button will turn from green to BROWN

- after a 30 sec. or so small window will pop-up telling you that voltages an all channels reached zero.

- Click 'OK' on that small window to stop the program.

To Log Defective Modules

- Scroll down the window -- you will see three tables

- Log contents of the left table called 'Defect Module List' if any modules are presented here.

- Log contents of the right table called 'Modules tripped during Standby' (for example Run XXX #8 - 3 trips, Run XXX #54 - 1 trip)

- Close the 'SMD HIGH VOLTAGE CONTROL' window.

Detailed description of the SMD HV Program and associated hardware settings can be found in Appendix.

-------------------

Indicators to watch:

1. Interlock went RED

In case STAR global interlock went ON

- Interlock led will turn in RED

- the SMD HV program will turn OFF HV on all SMD channels and program will halt.

- Operator should close SMD HV Voltage control window.

Once STAR global interlock will be cleared follow usual procedure to power up SMD.

2. Server Timeout went RED or SMD HV Control program is frozen.

This is an unusual situation and SMD Expert Contacts should be alerted. The lost communication to SY1527 should not lead to immediate damages to the SMD chambers.

In case communication to the SY1527 is lost for some reason the 'SERVER TIMEOUT' led will turn RED. In case SMD HV Control program is frozen the 'Current Time' will not be updated.

Procedure to resolve problem is:

- Open terminal window on EMC01.STARP.BNL.GOV (monitor is on top of SMD HV Control PC)

- ping 130.199.60.50 -- observe that packets transmitted and received.

- If there are no communication with SY1527 (packets lost) -- Call one of the Expert Contacts!

- If communication is OK, then stop ping and type telnet 130.199.60.50 1527 -- You should see 'login window for CAEN SY1527 system'

- Press any key

- Login as 'admin' with password 'admin' -- you will see Main Menu window for SY1527

- From 'Main' chose 'Channels' by pressing 'Enter' -- you will see Channels menu window

- Verify HV is presented on channels (VMon)

Usually second terminal window is open on HOOSIER.STARP.BNL.GOV to monitor SY1527 HV power supply. If this window is not open use “putty” and open SY1527 session.

Now you can operate HV using this window, but if there is no emergency to turn HV OFF you should first try to restart SMD HV Control program.

Basic operations from that window are:

Turn HV OFF

Turn HV ON

Turn HV OFF

- press Tab key

- scroll to 'Groups' menu

- press 'Enter' to chose "Group Mode" --you will see highlighted column

- scroll to "Pw"

- press space bar -- you will see "Pw" will switched from On to Off and VMon will start to decrease.

- press Tab key

- select 'Group' mode

- scroll to IOSet

- type 5.0 (Current limit 5uA)

- scroll to Trip

- type 0.5 sec

- Verify that V0Set is 1430 V

- Scroll to Pw

- press Space bar -- you will see Pw will switch from OFF to ON and Status goes to Up. VMon will start to increase.

- Press Tab key

- reselect 'Group' mode

- Change I0Set and Trip for tripped channels

- Power them up - scroll to Pw, and press Space bar.

Appendix

Before you start:

"Be afraid, even paranoid, and that gives you a chance to catch bad effects in the early stages when they still do not matter"

--J. Va'vra (Wire Aging Conference)

Detailed information regarding SY1527 mainframe and A1733 HV distribution boards can be found at CAEN web page.

The SMD HV is supplied by the CAEN SY1527 HV system. The mainframe is located in rack 2C5 (Second Floor, Third Row, near the center). The HV cables run from modules to the SMD crates (15 cables per each crate). At each crate HV cables re-grounded on patch panels assuring same ground for HV and signals to be read. From SMD crates, the HV lines then run to the SY1527 system. There are 10 HV cards, 12 HV channels each (model A1733) inside the mainframe to supply high voltages to the SMD chambers. The parameters of the high voltage system controlled via Ethernet. The GUI based on LabView and CAEN OPC server.

Hardware settings are:

HV Hardware limit set to +1500 V on each of A1733 cards.

HV Software limit (SVMax) set to +1450 V for each channel.

Communications settings for SY1527 are:

IP 130.199.60.50

Net Mask 255.255.254.0

Gateway 0.0.0.0

User name Admin

Password Admin(from top to bottom, HV is Off)

LED

Chk Pass On

Toggle Switch 'Loc enable' On

Ch Status 'NIM' On

Interlock 'Open'

Master On

+48 V On

+5 V On

+12 V On

-12 V On

Main On

Each A1733 card should have 50 Ohm Lemo 00 terminator to enable HV.

Description of SMD High Voltage Control program.

All SMD HV software is installed on EMCSC.STARP.BNL.GOV in folder C:/SY1527

The SMD HV Control provides one button operation of the HV system for the SMD. There are three main functions Power On, Physics Mode, Power Off. There are two configuration files Conf.txt and Conf2.txt which defines ramp up speed and trip settings for different mode of operation.

The nominal settings for Power On are (C:/SY1527/Conf.txt):

V0 1430 V

I0Set 5 uA

Trip 0.5 s

Ramp Down 50 V/sec

Ramp Up 20 V/sec

All channels are allowed to be ramp up in three consecutive attempts. If the first attempt (90 sec) for given channel lead to trip then ramp up speed will be set to 10 V/sec and second attempt (150 sec) will be performed. If second attempt will lead to trip then ramp up speed will be set to 7 V/sec and third attempt (300 sec) will be made. If all three attempt led to trip the program disconnect that particular channel from HV (corresponding led on main panel will turn in RED).

In no later then 90 + 150 + 300 seconds program will change parameters of I0Set and Trip form 5 uA and 0.5 sec to 1.6 uA and 0.1 sec and will switch to the Physics Mode.

The nominal settings for Physics Mode are (C:/SY1527/Conf2.txt)

V0 1430 V

I0Set 1.6 uA

Trip 0.1 s

Ramp Down 50 V/sec

Ramp Up 20 V/sec

Channels allowed to trip no more then 6 times during the Physics Mode. If channel will trip then I0Set and Trip for that particular channel will be set to 5 uA and 0.5 sec and program will try to bring that channel up. On front panel Alarm Status will turn in RED, corresponded message will pop up in 'Current Mode' window, and corresponded to that channel LED will turn in YELLOW or RED. Once voltage reach 90% of nominal all indicators will turn to normal status. Usually it takes about 15 seconds to bring channel back to normal. At the bottom of the screen the right table with modules that tripped during the Physics Mode will be updated. If some channel will trip more than 6 times that channel will be HV disabled by the program and corresponded led will turn RED. The left table on the bottom of the screen will be updated. Such cases should be treated by experts only later on.

All SMD channels during the Physics Mode is under the monitoring. Each 3 seconds or so, the values of Voltage, Current and time are logged in files XX_YY_ZZ_P.txt in C:/SY1527/Log, where XX - current month, YY - current day, ZZ - current year, and P is V for Voltages and I for Currents. The current time saved in seconds. The macro C:/SY1527/smdhv.C plots bad channels history and fills histograms for all other channels.

Turn Off is trivial and no need explanations.

The meaning of the LED on main panel are:

Green – channel is at nominal HV, current < 1 uA

Brown – channel is at nominal HV, 1 uA < current < 5 uA

Yellow – the HV is < 50% of nominal

Red - channel was disconnected due trips during ramp up or physics modes.

Black - channel disabled by user (Conf.txt, Conf2.txt)

It is important to monitor channels with high current (“Brown”), as well as channels that shows high number of trips during the operation. Note: some channels (54 for example) probably has a leakage on external HV distribution board and believed to be OK in terms of discharges on anode wires as was verified during summer shutdown. The other might develop sparking during the run or might have intermittent problems (#8 for example). If problems with sparking will be detected at early stages then those channels might be cured by experts during the run, without of loss of entire chamber as it was happened during the first two years of operation.

The C:/SY1527/ReadSingleChannel.vi allows to monitor single channel. You may overwrite nominal parameters of HV for any channel using that program (it is not desired to do that). This program can run in parallel with the Main SMD HV Control program. The operation is trivial, specify 'Channel to monitor' and click on 'Update Channel'. If you wish to overwrite some parameters (see above) then fill properly VOSet etc. icons and click on Update Write.

Important! Known bug. Before you start you better to fill all parameters, if you do not do that and stop the program later on the parameters will be overwritten with whatever it was in those windows, i.e. if V0Set was 0 then you will power Off the channel.

In some cases it is easy to monitor channels by looking at front panel of the SY1527 mainframe. The image of this panel can be

obtained by opening telnet session (telnet 130.199.60.50 1527) on EMC01.STARP.BNL.GOV.

All three programs can run in parallel.

-----Experts to be called day or night no matter what---

1. The operation crew lost communication with SY1527

2. Any accidental cases (large number (>5) of SMD channels

suddenly disconnected from HV during the run)

For experts only!

What to do with bad modules?

1. If anode wire were found broken then disable channel by changing 1430 to 0 in both configuration files. That should help to avoid confusions of detector operators.

2. If anode wires are OK, and module trips frequently during first half an hour after power Up then it is advised to set HV on that particular channel to lower value (-100V, -200V from nominal etc…) and observe the behavior of the chamber (ReadSingleChannel.vi). In some cases after a few hours module can be bring back to normal operation.

3. If step 2 did not help, then wait till scheduled access and try to cure chamber by applying reverse polarity HV. Important, you can do that using good HV power supply (fast trip protection, with current limit 5uA), or by using something like ‘Bertran’ with external microammeter and balance resistor of no less then 10 MOhm, only! In any case you need to observe the current while gradually increase HV. In no case ‘Bertarn’ like power supply might be left unattended during the cure procedure. It is not advised to apply more then -1000 V. In some cases curing procedure might be fast (one hour or so). In others it might take much longer (24 hours and more) to bring module back to operation. In any case I would request to talk with me.

4. The SMDHV is also installed on EMCSC.STARP.BNL.GOV and can be run from there, although that will for sure affect BTOW HV, be advised.

LOG:

Version 1.00 was written 11/12/03

Version 1.10 corrected 04/25/06

A copy of this manual as a Word document is available for download below.

Calibrations

Here you'll find links to calibration studies for the BEMC:

BTOW

2006

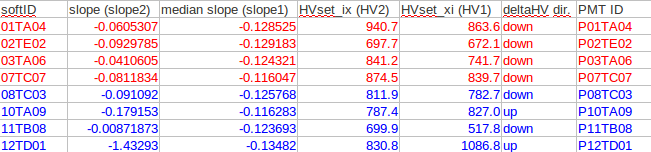

procedure used to set the HV online

MIP study to check HV values -- note: offline calibrations available at Run 6 BTOW Calibration

2005

final offline

2004

final offline

2003

offline slope calibration

MIPs

electrons

BSMD

2004

Dmitry & Julia - SMD correlation with BTOW for 200 GeV AuAu

BPSD

2005

Rory - CuCu PSD calibration studies

2004

Jaro - first look at PSD MIP calibration for AuAu data

BPRS

This task has been picked up by Rory Clarke from TAMU. His page is here:

Run 8 BPRS Calibration

Parent for Run 8 BPRS Calibration done mostly by Jan

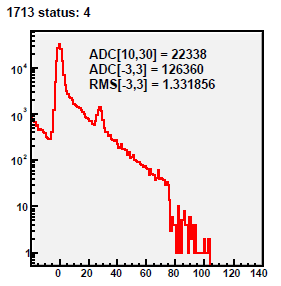

01 DB peds R9069005, 9067013

Pedestal residua for 434 zero-bias events from run 9069005.

The same pedestal for all caps was used - as implemented in the offline DB.

Fig 1.

Fig 2. Run 9067013, excluded caps >120. All 4800 tiles, pedestal residua from 100 st_zeroBias events. Y-axis [-50,+150].

Fig 3. Run 9067013, excluded caps >120. Pedestal corrected spectra for all 4800 tiles, 10K st_physics events. Y-axis [-50,+150].

Dead MAPMT results with 4 patches 4 towers wide.

Fig 4.

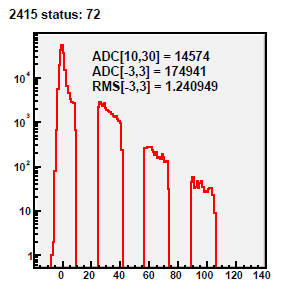

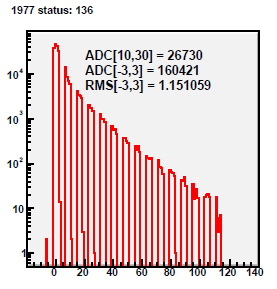

Run 9067013, excluded caps >120.

Zoom-in Pedestal corrected spectra, one ped per channel.

Top 10K st_physics events (barrel often triggered)

Bottom pedestal residua 100 st_zeroBias events

Fig 5.

Run 9067013, input =100 events, accept if capID=124 , raw spectra.

There are 4 BPRS crates, so 1200 channels/crate. In terms of softIds it's

PSD1W: 1-300 + 1501-2400

PSD19W: 301-1500

PSD1E: 2401-2900 + 4101-4800

PSD20E: 2901-4100

Why only 2 channels fired in crate PSD20E ?

02 pedestal(capID)

Run 9067013, 30K st_physics events, spectra accumulated separately for every cap.

Top plot pedestal (channel), bottom plot integral of pedestal peak to total spectrum.

Fig 1. CAP=122

Fig 2. CAP=123

Fig 3. CAP=124

Fig 4. CAP=125

Fig 5. CAP=126

Fig 6. CAP=127

Fig 7. Raw spectra for capID=125. Left: typical good pedestal, middle: very wide pedestal, right: stuck lower bit.

For run 9067013 I found: 7 tiles with ADC=0, ~47 tiles with wide ped, ~80 tiles with stuck lower bit.

Total ~130 bad BPRS tiles based on pedestal shape, TABLE w/ bad BPRS tiles

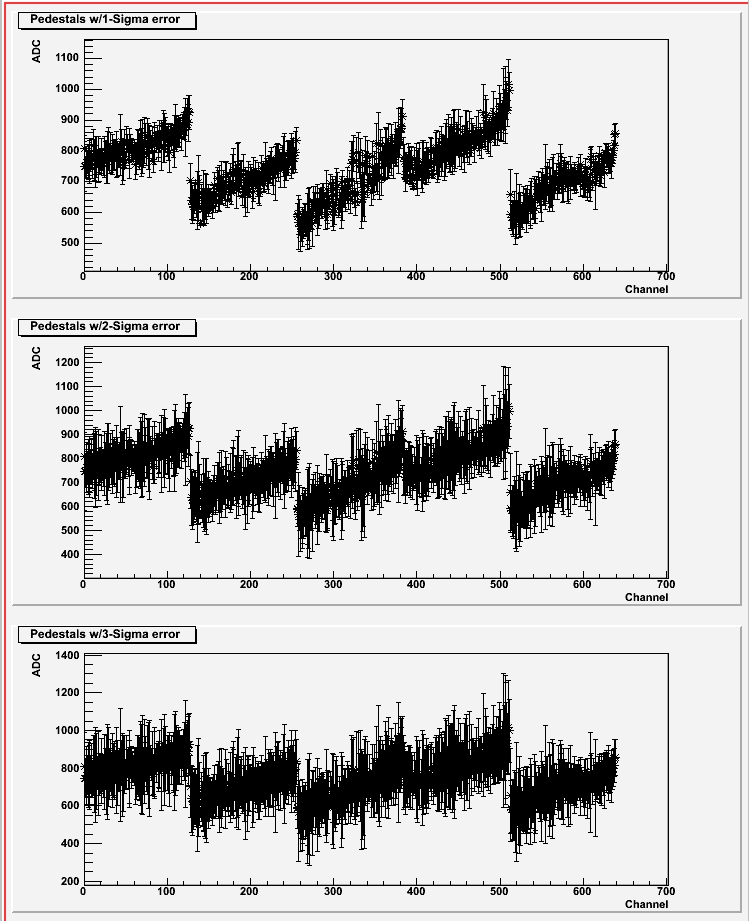

Fig 8. QA of pedestals, R9067013, capID=125. Below is 5 plots A,...,F all have BPRS soft ID on the X-axis.

A: raw spectra (scatter plot) + pedestal from the fit as black cross (with error).

B: my status table based on pedestal spectrum. 0=good, non-zero =sth bad.

C: chi2/DOF from fitting pedestal, values above 10. were flagged as bad

D: sigma of pedestal fit, values aove 2.7 were flagged as bad

E: integral of the found pedestal peak to the total # of entries. On average there was ~230 entries per channel.

Fig 9. BPRS pedestals for 'normal' caps=113,114,115 shown with different colors

Fig 10. BPRS pedestals for caps=100..127 and all softID , white means bad spectrum, typical stats of ~200 events per softID per cap

Fig 11. BPRS:" # of bad caps , only capID=100...127 were examined.

Fig 12. BPRS:" sig(ped)

Fig 13. BPRS:" examples of ped distribution for selected channels. Assuming for sing;e capID sig(ped)=1.5, the degradation of pedestal resolution if capID is not accounted for would be: sqrt(1.5^2 +0.5^2)=1.6 - perhaps it is not worth the effort on average. There still can be outliers.

03 tagging desynchronized capID

BPRS Polygraph detecting corrupted capIDs.

Goal: tag events with desynchronized CAP id, find correct cap ID

Method:

- build ped(capID, softID)

- pick one BPRS crate (19W)

- compute chi2/dof for series capID+/-2

- pick 'best' capID with smallest chi2/dof

- use pedestals for best capID for this crate for this event

- if best capID differs from nominal capID call this event 'desynchronized & fixed'

Input: 23K st_physics events from run 9067013.

For technical reason limited range of nominal capID=[122,126] was used, what reduces data sample to 4% ( 5/128=0.04).

Results:

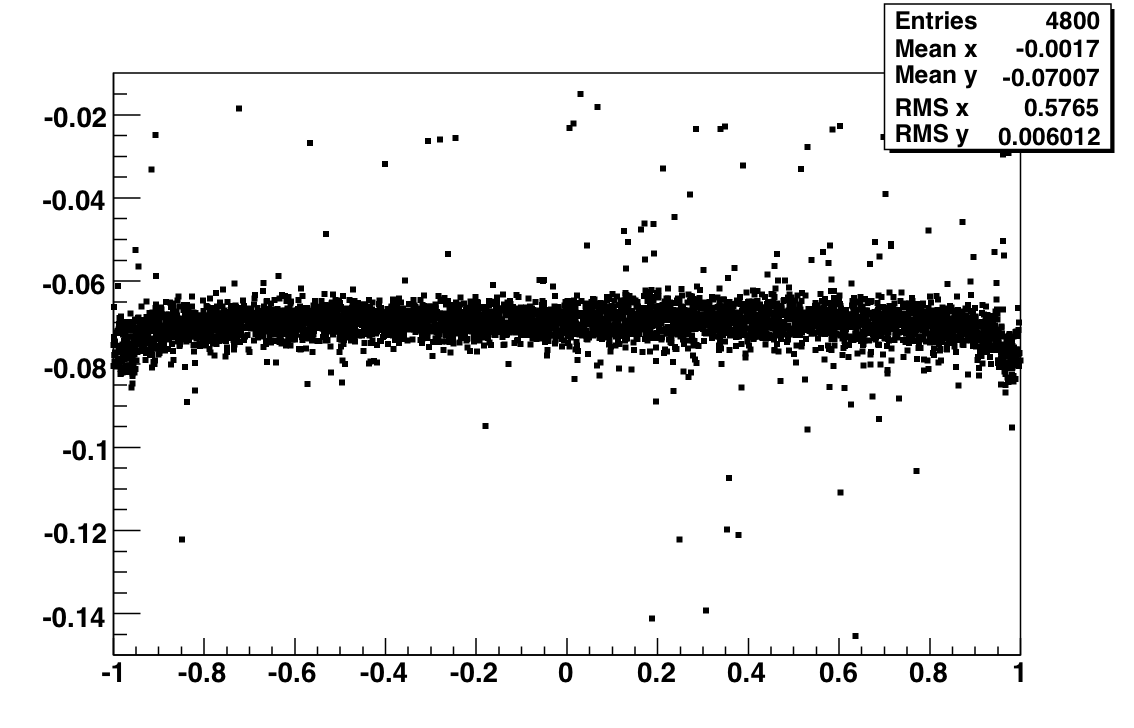

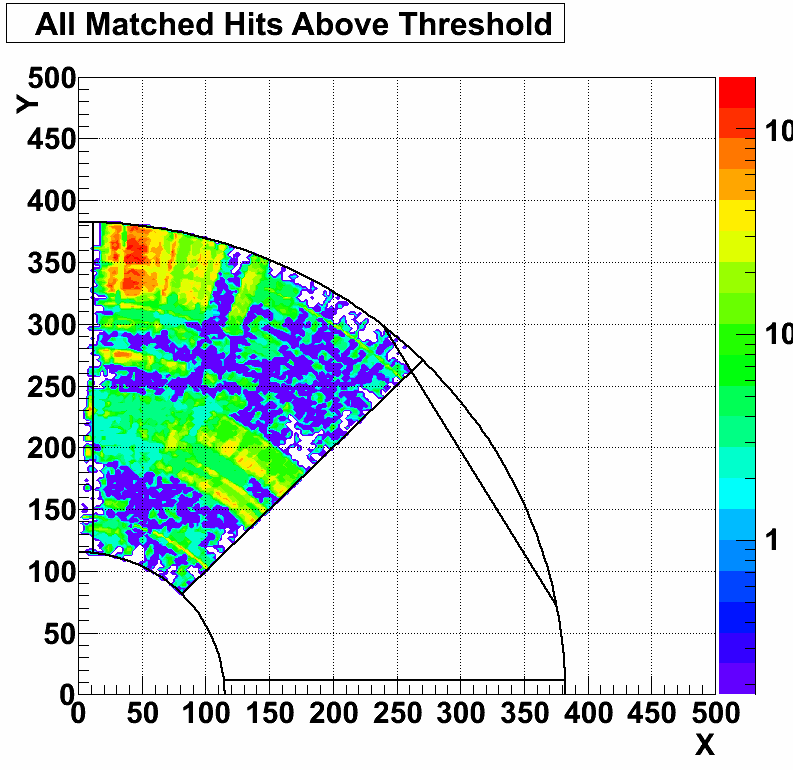

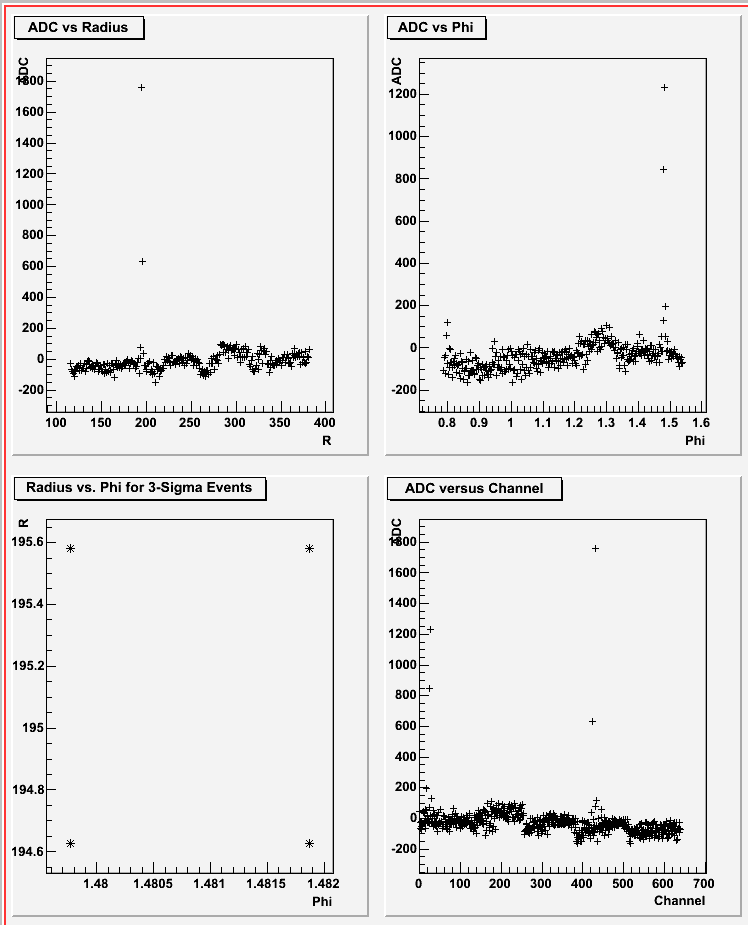

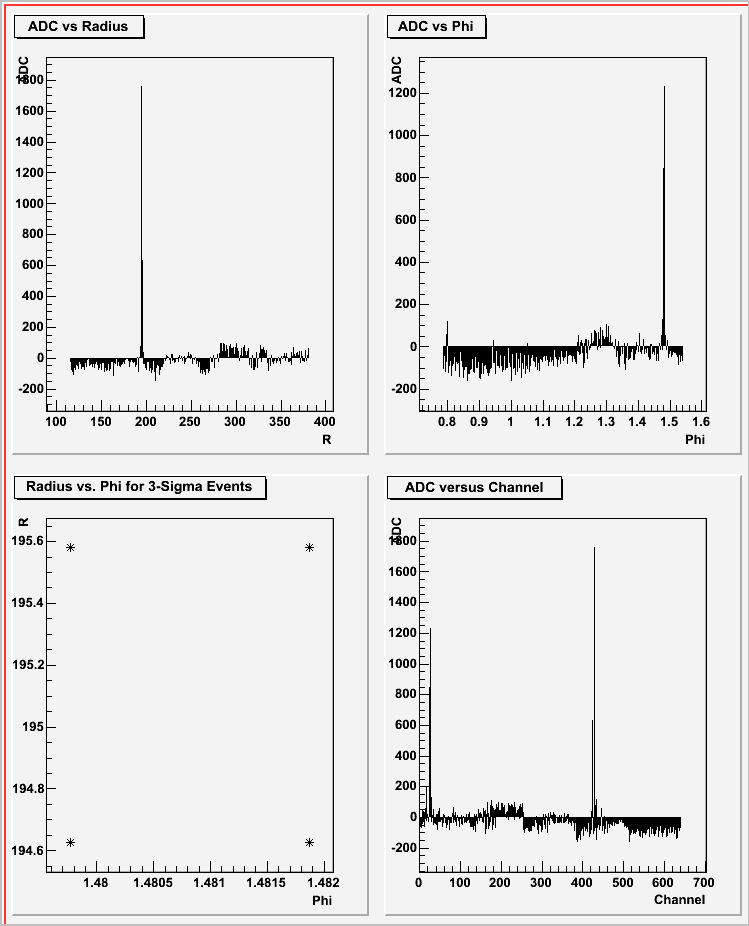

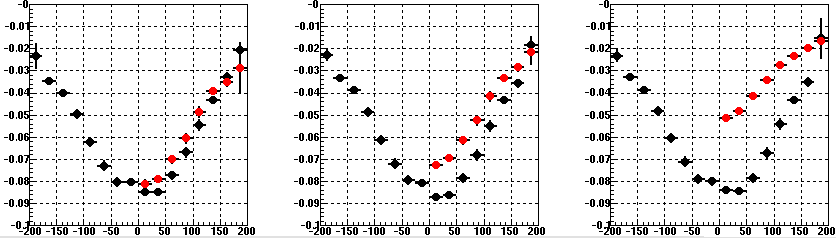

Fig 1. ADC-ped(capID,softID) vs. softID for crate 1 (i.e. PSD19W) as is'. No capID corruption detection.

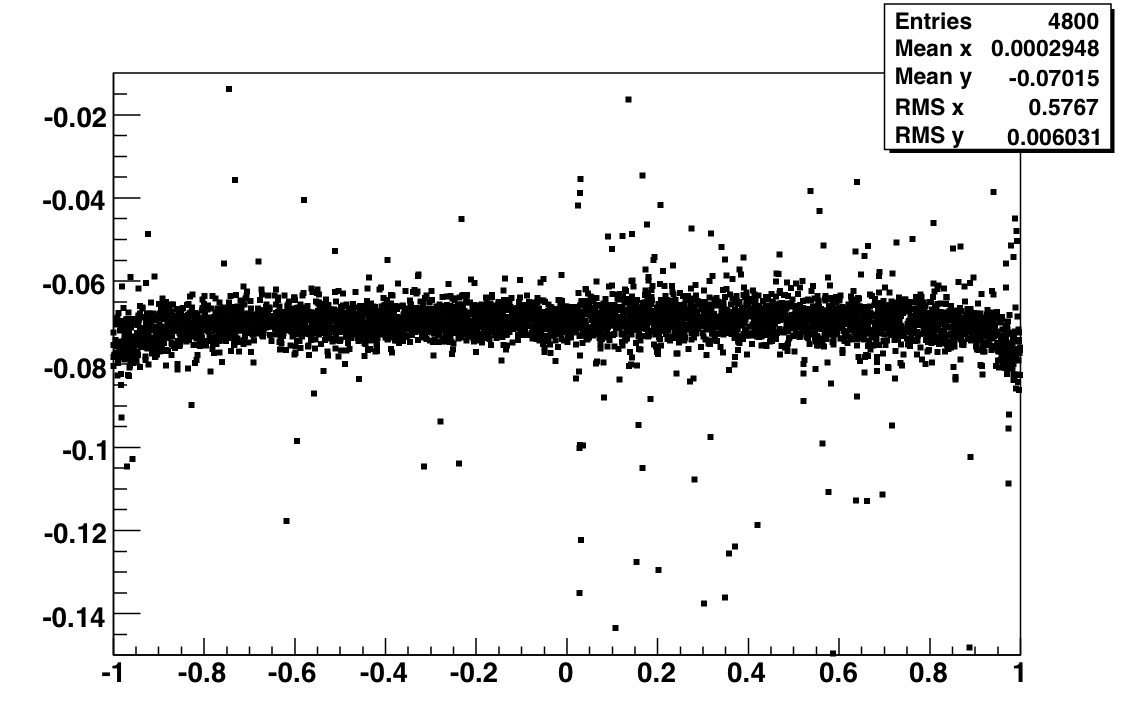

Fig 2. ADC-ped(capID,softID) with capID detection & correction enabled. The same events.

Note, all bands are gone - the capID fix works.

Right: ADC-ped spectra: Black: 594 uncorrupted events, red: 30 corrupted & fixed events.

The integral for ADC[10,70] are 2914 and 141 for good and fixed events, respectively.

141/2914=0.048; 30/594=0.051 - close enough to call it the same (within stats).

Fig 3. Auxiliary plots.

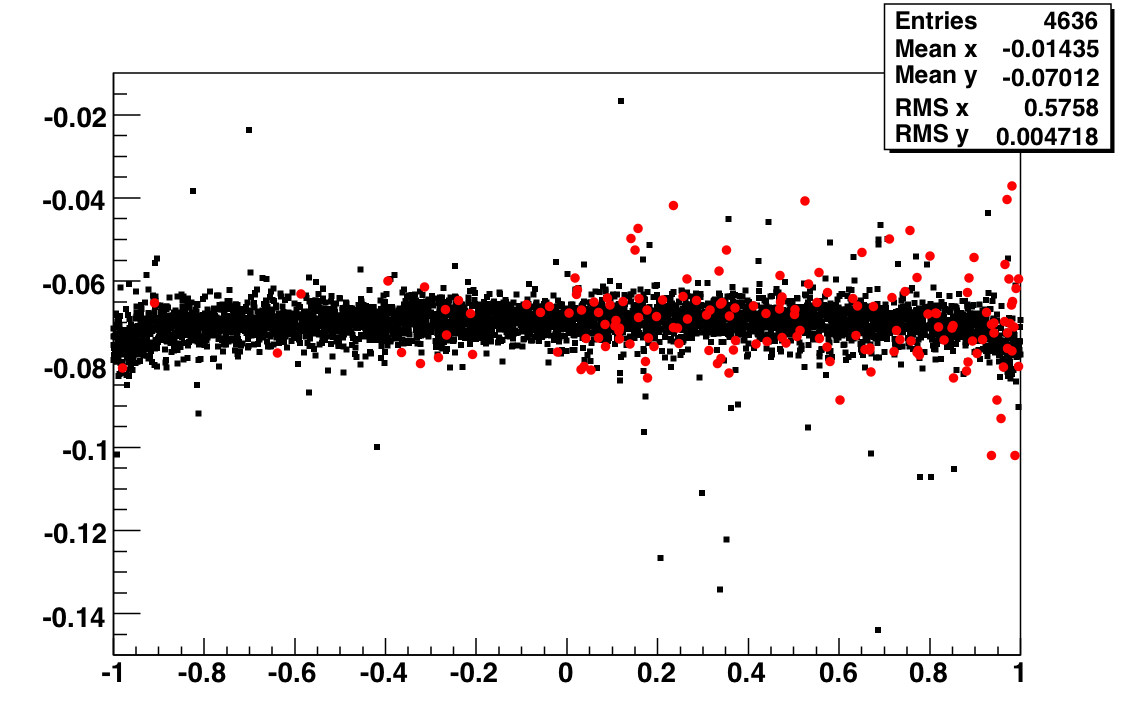

TOP left: chi/dof for all events. About 1100 channels is used out of 1200 in served by crate 1. Rejected are bad & outliers.

TOP right: change of chi2/dof for events with corrupted & fixed capID.

BOTTOM: frequency of capID for good & fixed events, respectively.

Conclusions:

- BPRS-Polygraph algo efficiently identifies and corrects BPRS for corrupted capID, could be adopted to used offline .

- there is no evidence ADC integration widow changes for BPRS data with corrupted capID.

Table 1.

shows capIDs for the 4 BPRS crates for subsequent events. Looks like for the same event cap IDs are strongly correlated, but different.

Conclusion: if we discard say capID=125, we will make hole of 1/4 of barrel , different in every event. This holds for BPRS & BSMD.

capID= 83:89:87:90: eve=134 capID= 1:4:11:3: eve=135 capID= 74:74:81:72: eve=136 capID= 108:110:116:110: eve=137 capID= 68:72:73:75: eve=138 capID= 58:55:65:64: eve=139 capID= 104:110:106:101: eve=140 capID= 9:6:8:15: eve=141 capID= 43:37:47:46: eve=142 capID= 120:126:118:122: eve=143 capID= 34:41:41:40: eve=144 capID= 3:0:126:2: eve=145 capID= 28:33:28:30: eve=146 capID= 72:64:70:62: eve=147 capID= 2:6:7:5: eve=148 capID= 22:32:33:24: eve=149 capID= 8:4:5:124: eve=150 capID= 23:17:17:19: eve=151 capID= 62:57:63:61: eve=152 capID= 54:53:45:47: eve=153 capID= 68:75:70:67: eve=154 capID= 73:79:73:72: eve=155 capID= 104:98:103:103: eve=156 capID= 12:5:13:10: eve=157 capID= 5:10:10:2: eve=158 capID= 32:33:27:22: eve=159 capID= 96:102:106:97: eve=160 capID= 79:77:72:77: eve=161

04 BPRS sees beam background?

The pair of plots below demonstrates BPRS pedestal residua are very clean once peds for 128 caps are used and this 5% capID corruption is detected and fixed event by event.

INPUT: run 9067013, st_phys events, stale BPRS data removed, all 38K events .

Fig 0. capID correction was enabled for the bottom plot. Soft ID is on the X-axis; rawAdc-ped(softID, capID) on the Y-axis.

Now you can believe me the BPRS pedestals are reasonable for this run. Look at the width of pedestal vs. softID, shown in Fig 1 below.

There are 2 regions with wider peds, marked by magenta(?) and red circle.

The individual spectra look fine (fig 2b, 2bb).

But the correlation of pedestal width with softID (fig 1a,1b) and phi-location of respective modules (fig 3a, 3b) suggest it could be due to the beam background at

~7 o'clock on the West and at 6-9 o'clock on the East.

05 ---- peds(softID,capID) & status table, ver=2.2, R9067013

INPUT: st_hysics events from run=9067013

Fig 1. Top: pedestal(softID & capID), middle: sigma of pedestal, bottom: status table, Y-axis counts how many capID had bad spectra.

Based on pedestal spectra there are 134 bad BPRS tiles.

Fig 2. Distribution of pedestals for 4 selected softIDs, one per crate.

Fig 3. Zoom-in of ped(soft,cap) spectrum to see there is more pairs of 2 capID which have high/low pedestal vs. average, similar to the known pair (124/125).

Looks like such piar like to repeat every 21 capIDs - is there a deeper meaning it?

(I mean will the World end in 21*pi days?)

Fig 4. Example of MIP spectra (bottom). MIP peak is very close to pedestal, there are worse cases than the one below.

06 MIP algo ver 1.1

TPC based MIP algo was devised to calibrate BPRS tiles.

Details of the algo are in 1st PDF,

example of MIP spectra for 40 tails with ID [1461,1540] are in subsequent 5 PDF files, sorted by MAPMT

Fig 1 shows collapsed ADC-ped response for all 4800 BPRS tiles. The average MIP response is only 10 ADC counts above ped wich has sigma of 1.5 ADC. The average BPRS gain is very is very low.

07 BPRS peds vs. time

Fig 1. Change of BPRS pedestal over ... within the same fill, see softID~1000

Pedestal residua (Y-axis) vs. softID (X-axis), same reference pedestals used from day 67 (so some peds are wrong for both runs) were used both plots.

Only fmsslow events, no further filtering, capID corruption fixed in fly.

Top run 9066001, start 0:11 am, fill 9989

Bottom run 9066012, start 2:02 am, fill 9989

Fig 2. Run list. system config was changed between those 2 runs marked in blue.

Fig 3. zoom in of run 9066001

Fig 4. another example of BPRS ped jump between runs: 9068022 & 9068032, both in the same fill.

08 BPRS ped calculation using average

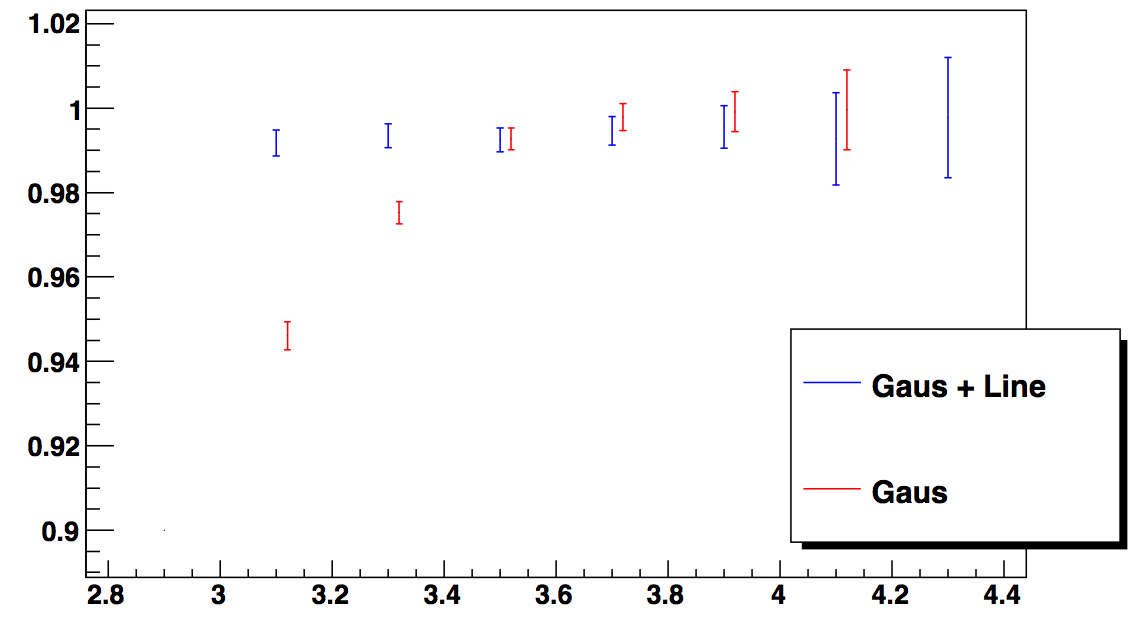

Comparison of accuracy of pedestal calculation using Gauss fit & plain average of all data.

The plain average method is our current scheme for ZS for BPRS & BSMD for 2009 data taking.

Fig 2. TOP: RMS of the plain average, using 13K of fmsslow-triggered events which are reasonable surrogate of minBias data for BPRS.

Middle: sigma of pedestal fit using Gauss shape

Bottom: ratio of pedestals from this 2 method. The typical pedestal value is of 170 ADC. I could not make root to display the difference, sorry.

09 BPRS swaps, IGNORING Rory's finding from 2007, take 1

This page is kept only for the record- information here is obsolete.

This analysis does not accounts for BPRS swaps discovered by Rory's in 2007, default a2e maker did not worked properly

- INPUT: ~4 days of fmsslow-triggered events, days 65-69

- DATA CORRECTIONS:

- private BPRS peds(cap,softID) for every run,

- private status table, the same , based on one run from day 67

- event-by-event capID corruption detection and correction

- use vertex with min{|Z|}, ignore ranking to compensate for PPV problem

- TRACKING:

- select prim tracks with pr>0.4 GeV, dEdX in [1.5,3.7] keV, |eta|<1.2

- require track enters tower 1cm from the edge and exists tower at any distance to the edge

- tower ADC is NOT used (yet)

- 2 histograms of rawADC-ped were accumulated: for all events (top plot) and for BPRS tiles pointed by TPC track (middle plot w/ my mapping & lower plot with default mapping)

There are large section of 'miples' BPRS tiles if default mapping is used: 3x80 tiles + 2x40 tiles=320 tiles, plus there is a lot of small mapping problems. Plots below are divided according to 4 BPRS crates - I assumed the bulk of mapping problems should be contained within single crate.

Fig 1, crate=0, Middle plot is after swap - was cured for soft id [1861-1900]

if(softID>=1861 && softID<=1880) softID+=20;

else if(softID>=1881 && softID<=1900) softID-=20;

Fig2, crate=1, Middle plot is after swap - was cured for soft id [661-740]

if(softID>=661 && softID<=700) softID+=40;

else if(softID>=701 && softID<=740) softID-=40;

Fig3, crate=2, Middle plot is after swap - was cured for soft id [4181-4220].

But swap in [2821-2900] is not that trivial - suggestions are welcome?

if(softID>=4181 && softID<=4220) softID+=40;

else if(softID>=4221 && softID<=4260) softID-=40;

Fig4, crate=3, swap in [3781-3800] is not that trivial - suggestions are welcome?

10 -------- BPRS swaps take2, _AFTER_ applying Rory's swaps

2nd Correction of BPRS mapping (after Rory's corrections are applied).

- INPUT: ~7 days of fmsslow-triggered events, days 64-70, 120 runs

- DATA CORRECTIONS:

- private BPRS peds(cap,softID) for every run,

- private status table, excluded only 7 strips with ADC=0

- event-by-event capID corruption detection and correction

- use vertex with min{|Z|}, ignore PPV ranking, to compensate for PPV problem

- BPRS swaps detected by Rory in 2007 data have been applied

- TRACKING:

- select prim tracks with pr>0.4 GeV, dEdX in [1.5,3.7] keV, |eta|<1.2, zVertex <50 cm

- require track enters tower 1cm from the edge and exist the same tower at any distance to the edge (0 cm)

- tower ADC is NOT used (yet)

- 3 2D histograms of were accumulated:

- rawADC-ped (softID) for all events

- the same but only for BPRS tiles pointed by QAed MIP TPC track

- frequency of correlation BPRS tiles with MIP-like ADC=[7,30] with towers pointed by TPC MIP track

Based on correlation plot (shown as attachment 6) I found ~230 miss-mapped BPRS tiles (after Rory's correction were applied).

Once additional swaps were added ( listed in table 1, and in more logical for in attachment 3) the correlation plot is almost diagonal, shown in attachment 1.

Few examples of discovered swaps are in fig1. The most important are 2 series of 80 strips, each shifted by 1 software ID.

Fig 2 shows MIP signal shows up after shit by 1 softID is implemented.

The ADC spectra for all 4800 strips are shown in attachment 2. Attachment 5 & 6 list basic QA of 4800 BRS tiles for 2 cases: only Rory's swaps and Rory+Jan swaps.

Fig 1. Examples of swaps, dotted line marks the diagonal. Vertical axis shows towers pointed by TPC MIP track. X-axis shows BPRS soft ID if given ADC was in the range [7,30] - the expected MIP response. Every BPRS tile was examined for every track, multiple times per event if more than 1 MIP track was found.

Left: 4 sets of 4 strips needs to be rotated. Right: shift by 1 of 80 strips overlaps with rotation of 6 strips.

Fig 2. Example of recovered 80 tiles for softID~2850-2900. Fix: softID was shifted by 1.

Fig 3. Summary of proposed here corrections to existing BPRS mapping

Table 1. List of all BPRS swaps , ver 1.0, found after Rory's corrections were applied, based on 2008 pp data from days 64-70.

The same list in human readable from is here

Identified BPRS 233 swaps. Convention: old_softID --> new_softID

389 --> 412 , 390 --> 411 , 391 --> 410 , 392 --> 409 , 409 --> 392 ,

410 --> 391 , 411 --> 390 , 412 --> 389 , 681 --> 682 , 682 --> 681 ,

685 --> 686 , 686 --> 685 ,1074 -->1094 ,1094 -->1074 ,1200 -->1240 ,

1220 -->1200 ,1240 -->1260 ,1260 -->1220 ,1301 -->1321 ,1303 -->1323 ,

1313 -->1333 ,1321 -->1301 ,1323 -->1303 ,1333 -->1313 ,1878 -->1879 ,

1879 -->1878 ,1898 -->1899 ,1899 -->1898 ,2199 -->2200 ,2200 -->2199 ,

2308 -->2326 ,2326 -->2308 ,2639 -->2640 ,2640 -->2639 ,2773 -->2793 ,

2793 -->2773 ,2821 -->2900 ,2822 -->2821 ,2823 -->2822 ,2824 -->2823 ,

2825 -->2824 ,2826 -->2825 ,2827 -->2826 ,2828 -->2827 ,2829 -->2828 ,

2830 -->2829 ,2831 -->2830 ,2832 -->2831 ,2833 -->2832 ,2834 -->2833 ,

2835 -->2834 ,2836 -->2835 ,2837 -->2836 ,2838 -->2837 ,2839 -->2838 ,

2840 -->2839 ,2841 -->2840 ,2842 -->2841 ,2843 -->2842 ,2844 -->2843 ,

2845 -->2844 ,2846 -->2845 ,2847 -->2846 ,2848 -->2847 ,2849 -->2848 ,

2850 -->2849 ,2851 -->2850 ,2852 -->2851 ,2853 -->2852 ,2854 -->2853 ,

2855 -->2854 ,2856 -->2855 ,2857 -->2856 ,2858 -->2857 ,2859 -->2858 ,

2860 -->2859 ,2861 -->2860 ,2862 -->2861 ,2863 -->2862 ,2864 -->2863 ,

2865 -->2864 ,2866 -->2865 ,2867 -->2866 ,2868 -->2867 ,2869 -->2868 ,

2870 -->2869 ,2871 -->2870 ,2872 -->2871 ,2873 -->2872 ,2874 -->2873 ,

2875 -->2874 ,2876 -->2875 ,2877 -->2876 ,2878 -->2877 ,2879 -->2878 ,

2880 -->2879 ,2881 -->2880 ,2882 -->2881 ,2883 -->2882 ,2884 -->2883 ,

2885 -->2884 ,2886 -->2885 ,2887 -->2886 ,2888 -->2887 ,2889 -->2888 ,

2890 -->2889 ,2891 -->2890 ,2892 -->2891 ,2893 -->2892 ,2894 -->2893 ,

2895 -->2894 ,2896 -->2895 ,2897 -->2896 ,2898 -->2897 ,2899 -->2898 ,

2900 -->2899 ,3121 -->3141 ,3141 -->3121 ,3309 -->3310 ,3310 -->3309 ,

3717 -->3777 ,3718 -->3778 ,3719 -->3779 ,3720 -->3780 ,3737 -->3757 ,

3738 -->3758 ,3739 -->3759 ,3740 -->3760 ,3757 -->3717 ,3758 -->3718 ,

3759 -->3719 ,3760 -->3720 ,3777 -->3737 ,3778 -->3738 ,3779 -->3739 ,

3780 -->3740 ,3781 -->3861 ,3782 -->3781 ,3783 -->3782 ,3784 -->3783 ,

3785 -->3784 ,3786 -->3785 ,3787 -->3786 ,3788 -->3787 ,3789 -->3788 ,

3790 -->3789 ,3791 -->3790 ,3792 -->3791 ,3793 -->3792 ,3794 -->3793 ,

3795 -->3794 ,3796 -->3835 ,3797 -->3836 ,3798 -->3797 ,3799 -->3798 ,

3800 -->3799 ,3801 -->3840 ,3802 -->3801 ,3803 -->3802 ,3804 -->3803 ,

3805 -->3804 ,3806 -->3805 ,3807 -->3806 ,3808 -->3807 ,3809 -->3808 ,

3810 -->3809 ,3811 -->3810 ,3812 -->3811 ,3813 -->3812 ,3814 -->3813 ,

3815 -->3814 ,3816 -->3855 ,3817 -->3856 ,3818 -->3817 ,3819 -->3818 ,

3820 -->3819 ,3821 -->3860 ,3822 -->3821 ,3823 -->3822 ,3824 -->3823 ,

3825 -->3824 ,3826 -->3825 ,3827 -->3826 ,3828 -->3827 ,3829 -->3828 ,

3830 -->3829 ,3831 -->3830 ,3832 -->3831 ,3833 -->3832 ,3834 -->3833 ,

3835 -->3834 ,3836 -->3795 ,3837 -->3796 ,3838 -->3837 ,3839 -->3838 ,

3840 -->3839 ,3841 -->3800 ,3842 -->3841 ,3843 -->3842 ,3844 -->3843 ,

3845 -->3844 ,3846 -->3845 ,3847 -->3846 ,3848 -->3847 ,3849 -->3848 ,

3850 -->3849 ,3851 -->3850 ,3852 -->3851 ,3853 -->3852 ,3854 -->3853 ,

3855 -->3854 ,3856 -->3815 ,3857 -->3816 ,3858 -->3857 ,3859 -->3858 ,

3860 -->3859 ,3861 -->3820 ,4015 -->4055 ,4016 -->4056 ,4017 -->4057 ,

4018 -->4058 ,4055 -->4015 ,4056 -->4016 ,4057 -->4017 ,4058 -->4018 ,

4545 -->4565 ,4546 -->4566 ,4549 -->4569 ,4550 -->4570 ,4565 -->4545 ,

4566 -->4546 ,4569 -->4549 ,4570 -->4550 ,

For the reference:

- mapping of BPRS softID to MAPMT made by Will J. in 2007 is shown here: http://www.star.bnl.gov/protected/emc/wwjacobs/tmp/bprs_tubehookup_run7.pdf

Identified BPRS hardware problems:

Tiles w/ ADC=0 for all events:

* 3301-4, 3322-4 - all belong to PMB44-pmt1, dead Fee in 2007

belonging to the same pmt:

3321 has pedestal only

3305-8 and 33205-8 have nice MIP peaks, work well

* 2821, 3781 , both at the end of 80-chanel shift in mapping

neighbours of 2821: 2822,... have nice MIP

similar for neighbours of 3781

* 4525, 4526 FOUND! should be readout from cr=2 position 487 & 507, respectively

I suspect all those case we are reading wrong 'positon' from the DAQ file

Pair of consecutive tiles with close 100% cross talk, see Fig 4.

35+6, 555+6, 655+6, 759+60, 1131+2, 1375+6, 1557+8,

2063+4, 2163+4, 2749+50, 3657+8,

3739 & 3740 copy value of 3738 - similar case but with 3 channels.

4174+5

Hot pixels, fire at random

1514, 1533, 1557,

block: 3621-32, 3635,3641-52 have broken fee, possible mapping problem

block: 3941-3976 have broken fee

Almost copy-cat total 21 strips in sections of 12+8+1

3021..32, 3041..48, 3052

All have very bread pedestal. 3052 may show MIP peak if its gain is low.

Fig 4. Example of pairs of correlated channels.

11 BPRS absolute gains from MIP, ver1.0 ( example of towers)

BPRS absolute gains from MIP, ver 1.0

Fig 1 Typical MIP signal seen by BPRS(left) & BTOW (right) for soft ID=??, BPM16.2.x (see attachment 1 for more)

Magenta line is at MIP MPV-1*sigma -> 15% false positives

Fig 2 Typical MIP signal seen by BPRS, pmt=BPM16.2

Average gain of this PMT is on the top left plot, MIP is seen in ADC=4.9, sig=2.6

Fig 3 Most desired MIP signal (ADC=16) seen by BPRS(left) & BTOW (right) for soft ID=1388, BPM12.1.8

Magenta line is at MIP MPV-1*sigma -> 15% false positives, (see attachment 2 for more)

Fig 4 Reasonable BPRS, pmt=BPM11.3, pixel to pixel gain variations is small

Fig 5 High MIP signal (ADC=28) seen by BPRS(left) & BTOW (right) , BPM11.5.14

Magenta line is at MIP MPV-1*sigma -> 15% false positives, (see attachment 3 for more)

Fig 6 High gain BPRS, pmt=BPM11.5

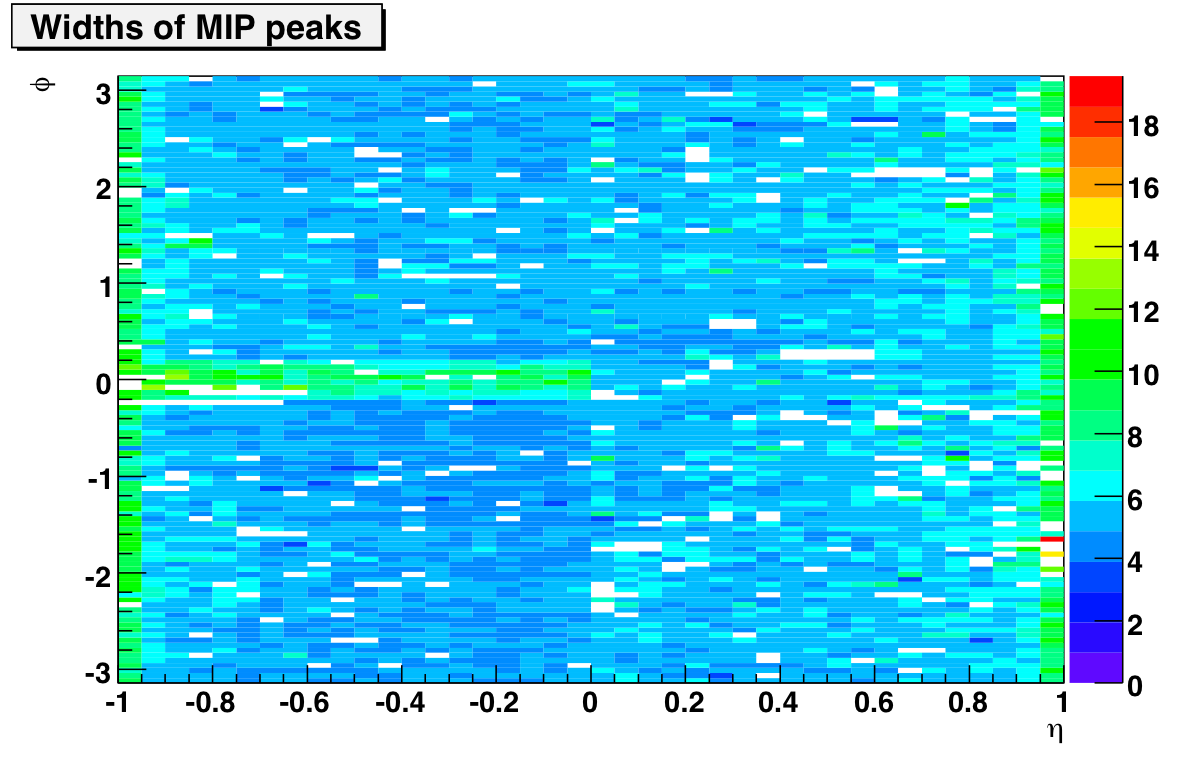

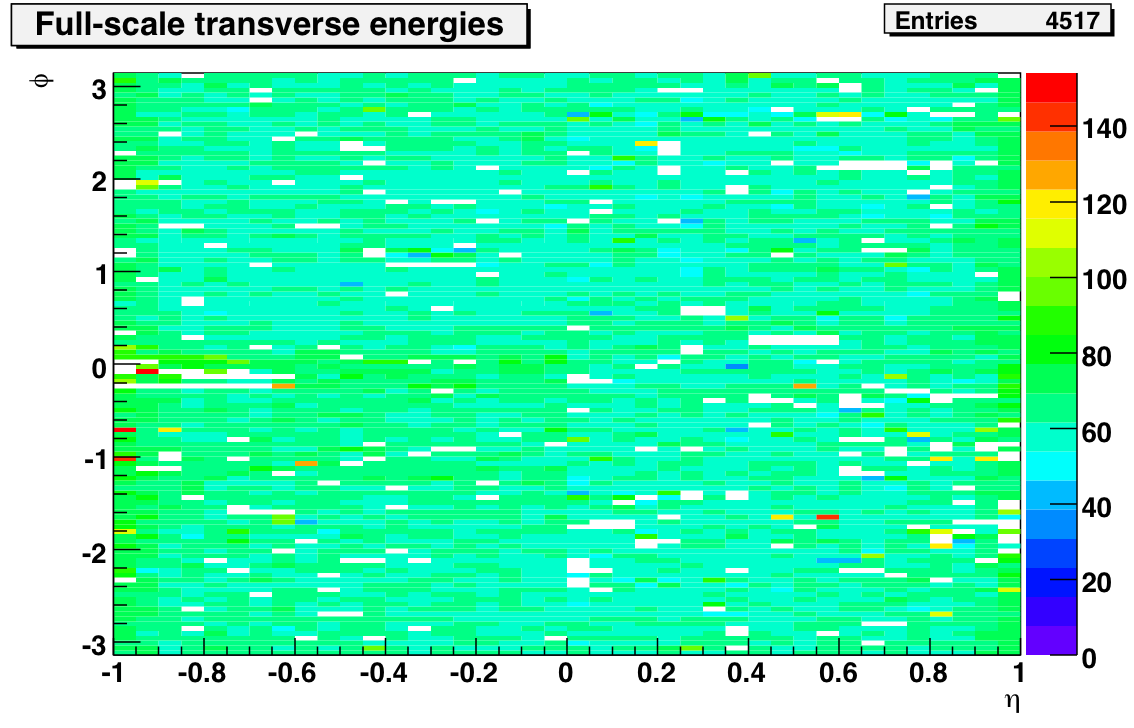

12 MIP gains ver1.0 (all tiles, also BTOW)

This is still work in progress, same algo as on previous post.

Now I run on 12M events (was 6 M) and do rudimentary QA on MIP spectra, which results with ~10% channel loss (the bottom figure). However, the average MIP response per tile is close to the final value.

Conclusion: only green & light yellow tiles have reasonable MIP response of ~15 ADC. For blue we need to rise HV, for red we can lower it (to reduce after pulsing). White area are masked/dead pixels.

Fig 1: BPRS MIP gains= gauss fit to MIP peak.

A) gains vs. eta & phi to see BPM pattern. B) gains with errors vs. softID, C) sigma MIP shape, D) tiles killed in QA

Fig 2: BTOW MIP gains= gauss fit to MIP peak. Content of this plot may change in next iteration.

A) gains vs. eta & phi. "ideal" MIP is at ADC=18, all towers. Yellow& red have significantly to high HV, light blue & blue have too low HV.

B) gains with errors vs. softID, C) sigma MIP shape, D) towers killed in QA

13 MIP algo, ver=1.1 (example of towers)

This is an illustration of improvement of MIP finding efficiency if ADC gates are set on BPRS & BTOW at the places matched to actual gains instead of fixed 'ideal' location.

Fig 1 is from previous iteration (item 11) with fixed location MIP gates. Note low MIP yield in BPRS (red histo) due to mismatched BTOW ADC gate (blue bar below green histo).

Fig 2 New iteration with adjusted MIP gates. (marked by blue dashed lines).

MIP ADC gate is defined (based on iteration 1) by mean value of the gauss fit +/- 1 sigma of the gauss width, but not lower than ADC=3.5 and not higher than 2* mean ADC

Note similar MIP yield in BPRS & BTOW. Also new MIP peak position from Gauss fit did not changed, meaning algo is robust. The 'ideal' MIP ADC range for BTOW is marked by magenta bar (bottom right) - is visibly too low.

Attached PDF shows similar plots for 16 towers. Have a look at page 7 & 14

14 ---- MIP gains ver=1.6 , 90% of 4800 tiles ----

BPRS absolute gains using TPC MIPs & BTOW MIP cut, ver 1.6 , 2008 pp data

- INPUT: fmsslow-triggered events, days 43-70, 525 runs, 16M events (see attachment 1)

- BTOW peds & ped-status from offline DB

- DATA CORRECTIONS (not avaliable using official STAR software)

- discard stale events

- private BPRS peds(cap,softID) for every run,

- private status table

- event-by-event capID corruption detection and correction

- use vertex with min{|Z|}, ignore PPV ranking, to compensate for PPV problem

- BPRS swaps detected by Rory in 2007 data have been applied

- additional ~240 BPRS swaps detected & applied

- BTOW ~50 swaps detected & applied

- BTOW MIP position determine independently (offline DB gains not used)

- TRACKING:

- select prim tracks with pr>0.35 GeV, dEdX in [1.5,3.3] keV, |eta|<1.3, zVertex <60 cm, last point on track Rxy>180cm

- require track enters & exits a tower 1cm from the edge, except for etaBin=20 - only 0.5cm is required (did not helped)

- triple MIP coincidence, requires the following (restrictive) cuts:

- to see BPRS MIP ADC : TPC MIP track and in the same BTOW tower ADC in MIP peak +/- 1 sigma , but above 5 ADC

- to see BTOW MIP ADC : TPC MIP track and in the same BPRS tile ADC in MIP peak +/- 1 sigma , but above 3.5 ADC

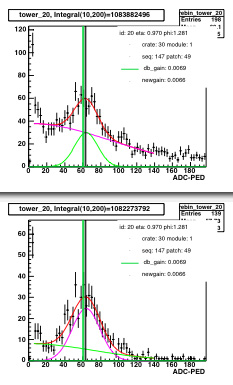

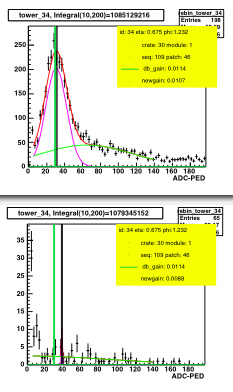

Fig 1. Example of typical BPRS & BTOW MIP peak determine in this analysis.

MIP ADC gate (blue vertical lines) is defined (based on iteration 1.0) by the mean value of the gauss fit +/- 1 sigma of the gauss width, but not lower than ADC=3.5 (BPRS) or 5 (BTOW) and not higher than 2* mean ADC.

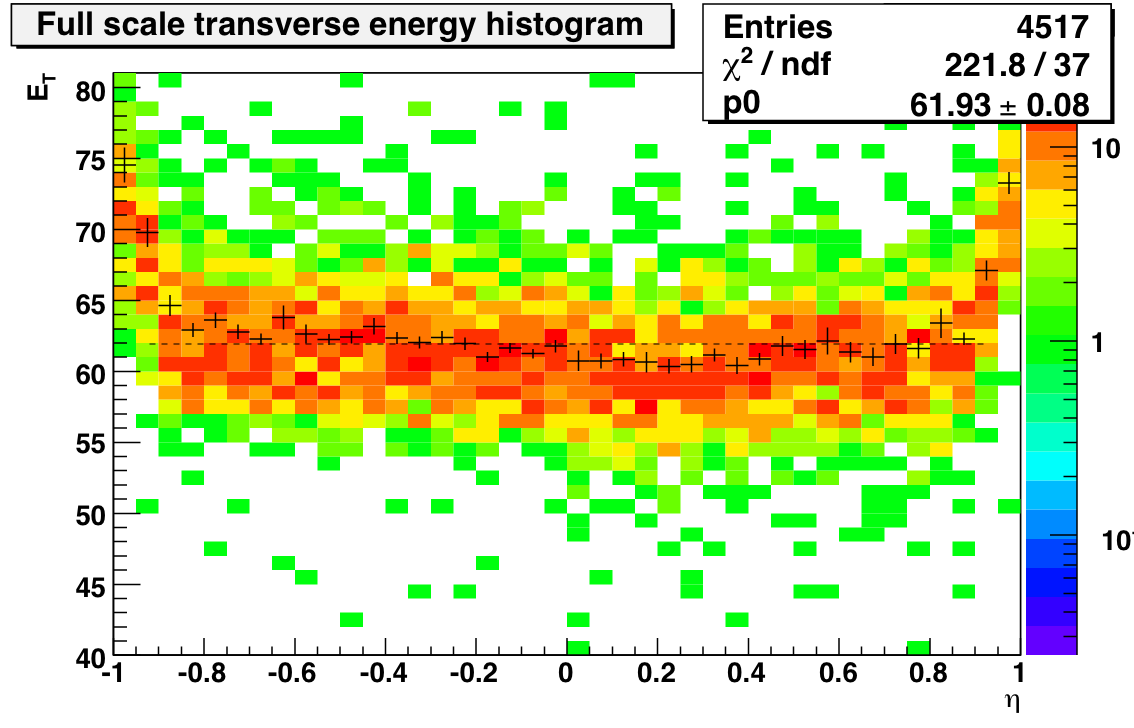

FYI, the nominal MIP ADC range for BTOW (ADC=4096 @ ET=60 GeV/c) is marked by magenta bar (bottom right).

- Attachment 6 contains 4800 plots of BPRS & BTOW like this one below ( large 53MB !!)

- Numerical values of MIP peak position,width, yield for all 4800 BPRS tiles are in attachment 4.

Fig 2. ADC of MIP peak for 4800 BPRS tiles.

Top plot: mean, X-axis follows eta bin , first West then East. Y-axis follows |eta| bin, 20 is at physical eta=+/- 1.0.Large white areas are due to bad BPRS MAPMT (4x4 or 2x8 channels), single white rectangles are due to bad BPRS tile or bad BTOW tower.

Middle plot: mean +/- error of mean, X-axis =soft ID. One would wish mean MIP value is above 15 ADC to place MIP cut comfortable above the pedestal (sig=1.5-1.8 ADC counts).

Bottom plot: width of MIP distribution. Shows the width of MIP shape is comparable to the mean and we want to put MIP cut well below the mean to not loose half of discrimination power.

Note, the large # 452 of not calibrated BPRS tiles does not mean that many are broken. There are 14 known bad PMTs and e 'halves' , total=15*16=240 (see attachment 2). There rest are due broken towers (required by MIP coincidence) and isolated broken fibers, FEE channels.

Fig 3. Example of PMT with fully working 16 channels.

Top left plot shows average MIP ADC from 16 pixels. Top middle: correlation between MIP peak ADC and raw slope - can be used for relative gain change in 2009. Top right shows BTOW average MIP response.

Middle: MIP spectra for 16 pixels.

Bottom: raw spectra fro the same 16 pixels.

300 plots like this is in attachment 3.

Fig 4. Top plot: average over 16 pixels MIP ADC for 286 BPRS pmts. X axis = PMB# [1-30] + pmt #[1-5]/10. Error bars represent RMS of distribution (not error of the mean).

Middle plot : ID of 14 not calibrated PMTs. For detailed location of broken PMTs see attachment 2, the red computer-generated ovals on the top of 2007 Will's scribbles mark broken PMTs (blue ovals are repaired PMTs) found in this 2008 analysis.

Bottom plot shows # of pixels in given PMT with reasonable MIP signal (used in the top figure).

- Numerical values of MIP peak per PMT are in attachment 5.

Fig 5. ADC of MIP peak for 4800 BTOW tower. Top lot: mean, middle plot: mean +/- error of mean, bottom plot: width of MIP

- Numerical values of MIP peak position,width, yield for all 4800 BTOW towers are in attachment 4.

Note, probably 1/2 of not calibrated BTOW towers are broken, the other half is due to bad BPRS tiles, required to work by this particular algo.

Koniec !!!

15 Broken BPRS channels ver=1.6, based on data from March of 2008

The following BPRS pmts/tiles have been found broken or partially not functioning, based on reco MIP response from pp 2008 data.

Based on PMT-sorted spectra available HERE (300 pages , 3.6MB)

Table 1. Simply dead PMT's. Raw spectra contain 16 nice pedestals, no energy above, see Fig 2.

PMB,pmt, PDF page # , 16 mapped softIDs 2,3 8, 2185 2186 2187 2188 2205 2206 2207 2208 2225 2226 2227 2228 2245 2246 2247 2248 2,4, 9, 2189 2190 2191 2192 2209 2210 2211 2212 2229 2230 2231 2232 2249 2250 2251 2252 4,5, 20, 2033 2034 2035 2036 2053 2054 2055 2056 2073 2074 2075 2076 2093 2094 2095 2096 5,1, 21, 1957 1958 1959 1960 1977 1978 1979 1980 1997 1998 1999 2000 2017 2018 2019 2020 12,2, 57, 1421 1422 1423 1424 1425 1426 1427 1428 1441 1442 1443 1444 1445 1446 1447 1448 14,1, 66, 1221 1222 1223 1224 1225 1226 1227 1228 1241 1242 1243 1244 1245 1246 1247 1248 24,4, 119, 433 434 435 436 453 454 455 456 473 474 475 476 493 494 495 496 25,4, 124, 353 354 355 356 373 374 375 376 393 394 395 396 413 414 415 416 26,4, 129, 269 270 271 272 289 290 291 292 309 310 311 312 329 330 331 332 32,3, 158, 2409 2410 2411 2412 4749 4750 4751 4752 4769 4770 4771 4772 4789 4790 4791 4792 44,5, 220, 3317 3318 3319 3320 3337 3338 3339 3340 3357 3358 3359 3360 3377 3378 3379 3380 39,2, 192, 2905 2906 2907 2908 2925 2926 2927 2928 2945 2946 2947 2948 2965 2966 2967 2968

Table 2. FEE is broken (or 8-connector has a black tape), disabling 1/2 of PMT, see Fig 3.

PMB,pmt, nUsedPix, avrMIP (adc), rmsMIP (adc),PDF page # , all mapped softIDs 7,1, 7, 19.14, 4.36, 31, 1797 1798 1799 1800 1817 1818 1819 1820 1837 1838 1839 1840 1857 1858 1859 1860 40,1, 8, 5.16, 0.53, 196, 2981 2982 2983 2984 3001 3002 3003 3004 3021 3022 3023 3024 3041 3042 3043 3044 40,2, 6, 5.44, 0.80, 197, 2985 2986 2987 2988 3005 3006 3007 3008 3025 3026 3027 3028 3045 3046 3047 3048 40,3, 12, 8.50, 1.05, 198, 2989 2990 2991 2992 3009 3010 3011 3012 3029 3030 3031 3032 3049 3050 3051 3052 44,1, 10, 13.72, 8.24, 216, 3301 3302 3303 3304 3305 3306 3307 3308 3321 3322 3323 3324 3325 3326 3327 3328 45,2, 8, 5.04, 1.55, 222, 3421 3422 3423 3424 3425 3426 3427 3428 3441 3442 3443 3444 3445 3446 3447 3448 51,5, 7, 11.37, 2.15, 255, 3877 3878 3879 3880 3897 3898 3899 3900 3917 3918 3919 3920 3937 3938 3939 3940 52,5, 8, 15.84, 4.80, 260, 3957 3958 3959 3960 3977 3978 3979 3980 3997 3998 3999 4000 4017 4018 4019 4020 60,1, 8, 15.12, 3.41, 296, 4581 4582 4583 4584 4601 4602 4603 4604 4621 4622 4623 4624 4641 4642 4643 4644

Table 3. Very low yield of MIPs (1/5 of typical), may de due to badly sitting optical connector, see Fig 4

PMB,pmt, QAflag, nUsedPix, avrMIP (adc), rmsMIP (adc),PDF page # , all mapped softIDs

10,5, 14, 0, 0.00, 0.00, 50, 1553 1554 1555 1556 1573 1574 1575 1576 1593 1594 1595 1596 1613 1614 1615 1616

31,5, 14, 0, 0.00, 0.00, 155, 4677 4678 4679 4680 4697 4698 4699 4700 4717 4718 4719 4720 4737 4738 4739 4740

37,2, 0, 10, 12.90, 7.41, 182, 2745 2746 2747 2748 2765 2766 2767 2768 2785 2786 2787 2788 2805 2806 2807 2808

49,1, 0, 16, 9.36, 3.55, 241, 3701 3702 3703 3704 3705 3706 3707 3708 3721 3722 3723 3724 3725 3726 3727 3728

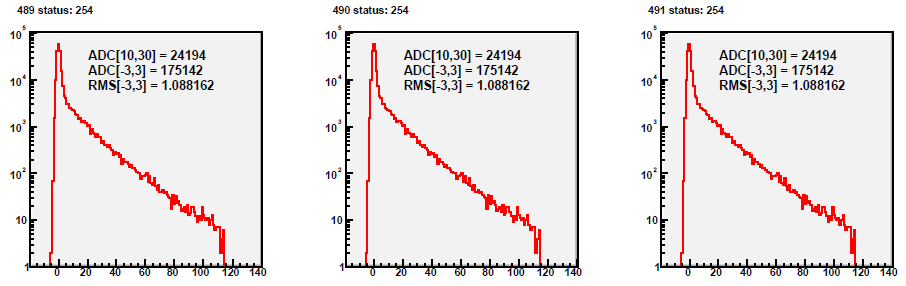

Table 4. Stuck LSB in FEE, we can live with this. (do NOT mask those tiles)

PMB,pmt, QAflag, nUsedPix, avrMIP (adc), rmsMIP (adc),PDF page # , all mapped softIDs 51,1,0, 15, 8.37, 1.30, 251, 3861 3862 3863 3864 3881 3882 3883 3884 3901 3902 3903 3904 3921 3922 3923 3924 51,2,0, 16, 8.66, 1.06, 252, 3865 3866 3867 3868 3885 3886 3887 3888 3905 3906 3907 3908 3925 3926 3927 3928 51,3,0, 16, 11.08, 1.28, 253, 3869 3870 3871 3872 3889 3890 3891 3892 3909 3910 3911 3912 3929 3930 3931 3932 51,4,0, 16, 17.16, 2.70, 254, 3873 3874 3875 3876 3893 3894 3895 3896 3913 3914 3915 3916 3933 3934 3935 3936

Table 5. Other problems:

PMB,pmt, QAflag, nUsedPix, avrMIP (adc), rmsMIP (adc),PDF page # , all mapped softIDs 31,2,0, 16, 12.32, 3.73, 152,4665 4666 4667 4668 4685 4686 4687 4688 4705 4706 4707 4708 4725 4726 4727 4728

Fig 1. Example of fully functioning PMT (BPM=4, pmt=5). 16 softID are listed at the bottom o fthe X-axis.

Top plot: ADC spectra after MIP condition is imposed based on TPC track & BTOW response.

Bottom plot: raw ADC spectra for the same channels.

Fig 2. Example of dead PMT with functioning FEE.

Fig 3. Example of half-dead PMT, comes in pack, most likely broken FEE.

Fig 4. Example of weak raw ADC, perhaps optical connector got loose.

Fig 5. Example of stuck LSB, We can live with this, but gain hardware must be ~10% higher (ADC--> 18)

16 correlation of MIP ADC vs. raw slopes

Scott asked for crate based comparison of MIP peak position vs. raw slopes .

I selected 100 consecutive BPRS tiles, in 2 groups, from each of the 4 crates. The crate with systematic lower gain is the 4th (PSD-20E).

The same spectra from pp 2008 fmsslow events are used as for all items in the Drupal page.

Fig 1. BPRS carte=PSD-1W

Fig 2. BPRS carte=PSD-19W

Fig 3. BPRS carte=PSD-1E

Fig 4. BPRS carte=PSD-20E This one has lower MIP peak

Run 9 BPRS Calibration

Parent for Run 9 BPRS Calibration

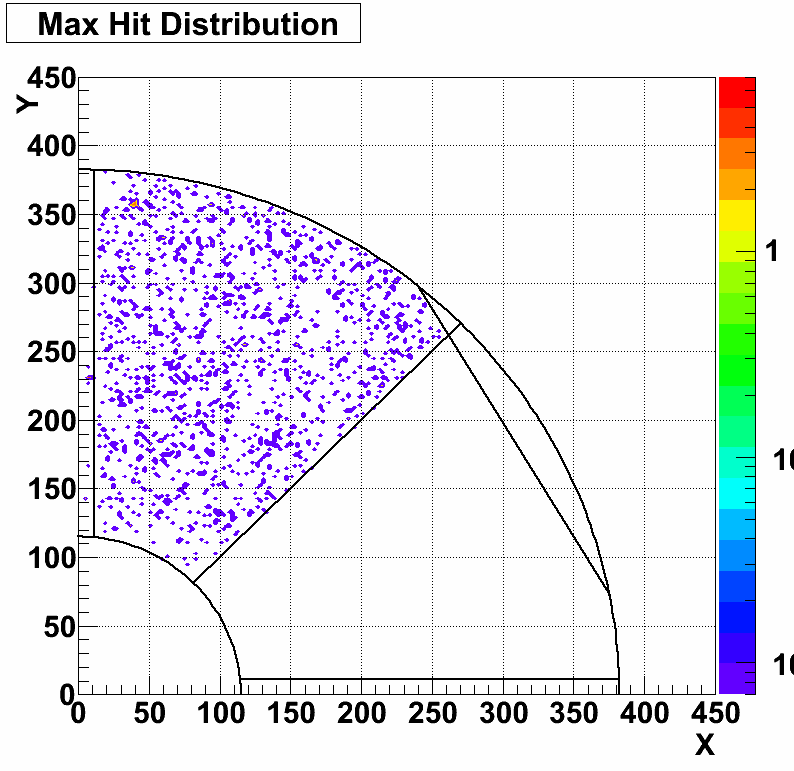

01 BPRS live channels on day 82, pp 500 data

Status of BPRS live channels on March 23, 2009, pp 500 data.

Input: 32K events accepted by L2W algo, from 31 runs taken on day 81 & 82.

Top fig shows high energy region for 4800 BPRS tiles

Middle fig shows pedestal region, note we have ZS & ped subtracted data in daq - plots is consistent. White area are not functioning tiles.

Bottom fig: projection of all tiles. Bad channels included. Peak at ADC~190 is from corrupted channels softID~3720. Peak at the end comes most likely form saturation of BPRS if large energy is deposited. It is OK - BPRS main use is as a MIP counter.

Attached PDF contains more detailed spectra so one can see every tile.

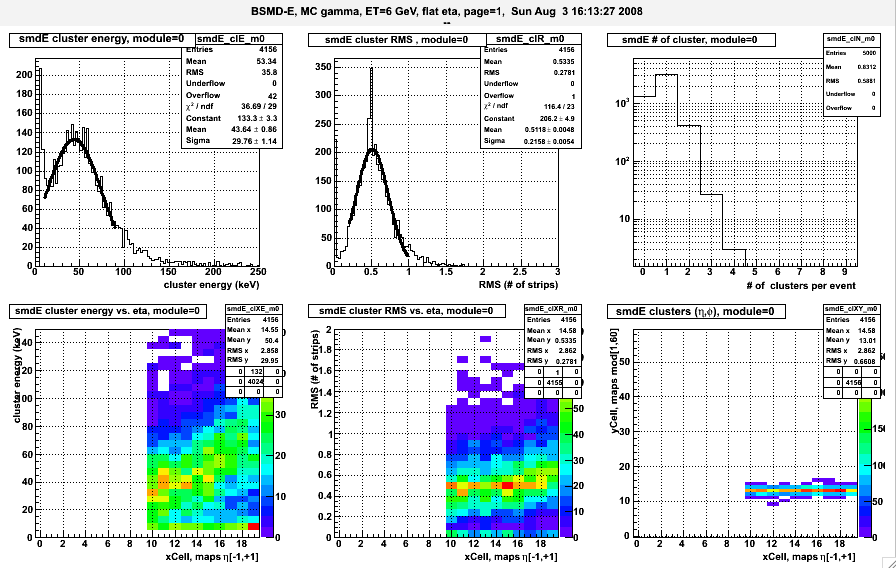

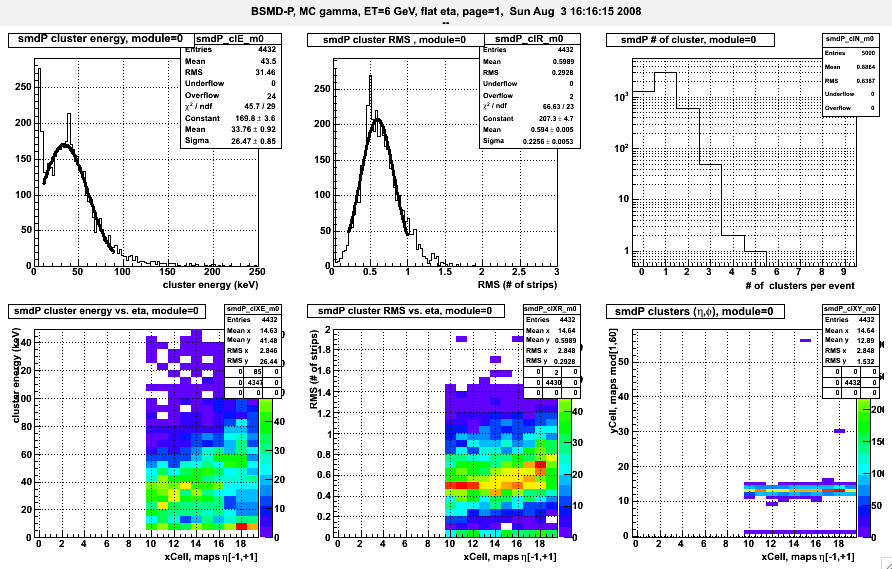

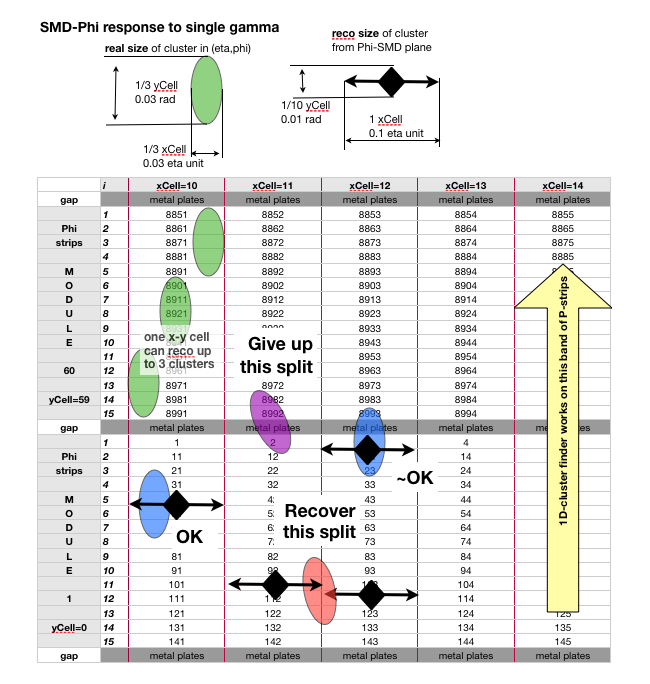

BSMD

Collected here is information about BSMD Calibrations.

There are 36,000 BSMD channels, divided into 18,000 strips in eta and 18,000 strips in phi. They are located 5.6 radiation lengths deep in the Barrel Electromagnetic Calorimeter (BEMC).

1) DATA: 2008 BSMD Calibration

Information about the 2008 BSMD Calibration effort will be posted below as sub-pages.

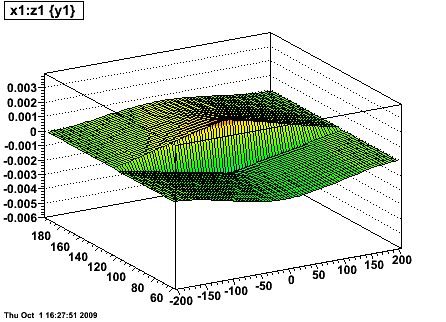

Fig 1. BSMD-E 2D mapping of soft ID. (plot for reverse mapping is attached)

01) raw spectra

Goals:

- verify pedestals loaded to DB are reasonable for 2008 pp data

- estimate stats needed to find slopes of individual strips for minB events

Method:

- look at pedestal residua for individual strips, exclude caps 1 & 2, use only status==1

- fit gauss & compare with histo mean

- find integrals of individual strips, sum over 20 ADC channels starting from ped+5*sig

To obtain muDst w/o zero suppression I run privately the following production chain:

chain="DbV20080703 B2008a ITTF IAna ppOpt VFPPV l3onl emcDY2 fpd ftpc trgd ZDCvtx NosvtIT NossdIT analysis Corr4 OSpaceZ2 OGridLeak3D beamLine BEmcDebug"

Fig 1

Examples of single strip pedestal residua, based on ~80K minB events from days 47-65, 30 runs. (1223 is # of bins, ignore it).

Left is typical good spectrum, see Fig2.3. Middle is also reasonable, but peds is 8 channels wide vs. typical 4 channels.

The strip shown on the right plot is probably broken.

Fig 2

Detailed view on first 500 strips. X=stripID for all plots.

- Y=mean of the gauss fit to pedestal residuum, in ADC channels, error=sigma of the gauss.

- Y=integral of over 20 ADC channels starting from ped+5*sig.

- Raw spectra, Y=ADC-ped, exclude caps 1 & 2, use only status==1

Fig 3

Broader view of ... problems in BSMD-E plane. Note, status flag was taken in to account.

Top plot is sum of 30 runs from days 47-65, 80K events. Bottom plot is just 1 run, 3K events. You can't distinguish individual channels, but scatter plot works like a sum of channels, so it is clear the slopes are there, we need just more data.

Conclusions:

- DB peds for BSMDE look good on average.

- with 1M eve we will start to see gains for individual strip relativewith ~20% error. Production will finish tomorrow.

- there are portions of SMDE masked out (empty area in fig 3.2, id=1000) - do why know what broke? Will it be fixed in 2009 run

- there are portions of SMDE not masked but dead (solid line in fig 3.2, id=1400) - worth to go after those

- there are portions of SMDE not masked with unstable (or wrong) pedestal, (fig 3.1 id=15000)

- for most channels there is one or more caps with different ped not accounted for in DB ( thin line below pedestal in fig 2.3)

- One gets a taste of gain variation from fig 2.2

- Question: what width of pedestal is tolerable. Fig 2.1 shows width as error bars. Should I kill channel ID=152?

02) relative BSMD-E gains from 1M dAu events

Glimpse of relative calibration of BSMDE from 2008 d+Au data

Input: 1M dAu minb events from runs: 8335112+8336019

Method : fit slopes to individual strips, as discussed 01) raw spectra

Fig 1

Examples of raw pedestal corrected spectra for first 9 strips, 1M dAu events

Fig 2

Detailed view on first 500 strips. X=stripID for all plots.

- Y=mean of the gauss fit to pedestal residuum, in ADC channels, error=sigma of the gauss.

- Y=integral of over 20 ADC channels starting from ped+5*sig. Raw spectra,

- Y=gain defined as "-slope" from the exponential fit over ADC range 20-40 channels, errors from expo fit. Blue line is constant fit to gains.

Fig 3

BSMDE strips cover the whole barrel and eta-phi representation is better suited to present 18K strips in one plot.

- Mapping of BSMDE-softID (Z-axis) in to eta-phi space. Eta bin 0 is eta=-1.0, eta bin 299 is eta=+1.0. Phi bin 0 starts at 12:30 and advances as phi angle.

- gains for majority of 18K BSMDE strips. White means no data or discarded by rudimentary QA of peds, yields or slope.

Fig 4

For reference spectra from 1M pp events from ~12 EmcCheck runs from days 47-51. It proves I did it and it was naive on my side to expect 1M pp events is enough.

Fig 5

More pp events spectra - lot of problems with DB content.

03) more details , answering Will

This page provides more details addressing some of Will's questions.

2) fig 2: well, 500 chns is not a very "natural" unit, but I wonder

what corresponds to 50 chns (e.g., the region of fluctuation

250-300) ... I need to check my electronics readout diagrams

again, or maybe folks more expert will comment

Fig 1.

Zoom-in of the god-to-bad region of BSMDE

Fig 2.

'Good' strips belong to barrel module 2, crate 2, sitting at ~1 o'cloCk on the WEST

Fig 3.

'BAD' strips belong also to barrel module 2, crate 2, sitting at ~1 o'cloCk on the WEST

04) bad CAP 123

Study of pedestal correlation for BSMDE

Goal: identify source of the band below main pedestals.

Figs 1,2 show pedestals 'breathe' in correlated way for channels in the same crate, but this mode is decoupled between crates. It may be enough to use individual peds for all CAPS to reduce this correlation.

Fig3 shows CAP=123 has bi-modal pedestals. FYI, CAPS 124,125 were excluded because they also are different.

Based on Fig1 & 3 one could write an algo identifying event by event in which mode CAP=123 settled, but for now I'll discard CAP123 as well.

All plots are made based on 500K d-Au events from the run 8336052.

Fig 0

Example of pedestal residua for BSMDE strips 1-500, after CAPS 124 and 125 were excluded.

Fig 1

Correlation between pedestal residua for neighbor strips. Strip 100 is used on all plots on the X-axis

Fig 2

Correlation between pedestal residua for strips in different crates. Strip 100 is used on all plots on the X-axis

Fig 3

Squared pedestal residua for strips [1,150] were summed for every event and plotted as function of CAP ID (Y-axis).

Those strips belong to the same module #1 . X-axis shoes SQRT(sum) for convenience. CAP=123 has double pedestal.

05) BSMDE saturation, dAu, 500K minB eve

Input: 500K d-Au events from run 8336052,

Method : drop CAPS 123,124,125, subtract single ped for all other CAPS.

Fig 1 full resolution, only 6 modules , every module contains 150 strips.

Fig 2 All 18K strips (120 modules), every module contain only 6 bins, every bin is sum of 25 strips.

h->RebinX(25), h->SetMinimum(2), h->SetMaximum(1e5)

06) QAed relative gains BSMDE, 3M d-Au events , ver1.0

Version 1 of relative gains for BSMDE, d-AU 2008.

INPUT: 3M d-AU events from day ~336 of 2007.

Method: fit slopes to ADC =ped+30,ped+100.

The spectra, fits of pedestal residuum, and slopes were QAed.

Results: slopes were found for 16,577 of 18,000 strips of BSMDE.

Fig1 Good spectrum for strip ID=1. X-axis ADC-ped, CAPs=123,124,124 excluded.

Fig2 TOP: slopes distribution (Y-axis) vs. stripID within given module ( X-axis). Physical eta=0.0 is at X=0, eta=1.0 is at X=150.

BOTTOM: status tables with marked eta-phi location excluded 1423 strips of BSMDE by multi-stage QA of the spectra. Different colors denote various failed tests.

Fig3 Mapping of known BSMDE topology on chosen by us eta-phi 2D localization. Official stripID is shown in color.

07) QA method for SMD-E, slopes , ver1.1

Automatic QA of BSMDE minB spectra.

Content

- example of good spectra (fig 0)

- QA cuts definition (table 1) + spectra (figs 1-7)

- Result:

- # of bad strips per module. BSMDE modules 10,31,68 are damaged above 50%+. (modules 16-30 served by crate 4 were not QAed).

- eta-phi distributions of: slopes, slope error (fig 9) , pedestal, pedestal (fig 10) width _after_ QA

- sample of good and bad plots from every module, including modules 16-30 (PDF at the end)

The automatic procedure doing QA of spectra was set up in order to preserve only good looking spectra as shown in the fig 0 below.

Fig 0 Good spectra for random strips in module=2. X-axis shows pedestal residua. It is shown to set a scale for the bad strips shown below.

INPUT: 3M d-AU events from day ~336 of 2007.

All spectra were pedestals subtracted, using one value per strip, CAPS=123,124,125 were excluded. Below I'll use term 'ped' instead of more accurate pedestal residuum.

Method: fit slopes to ADC =ped+40,ped+90 or 5*sig(ped) if too low.

The spectra, fits of pedestal residuum, and slopes were QAed.

QA method was set up as sequential series of cuts, upon failure later cuts were not checked.

Note, BSMD rate 4 had old resistors in day 366 of 2007 and was excluded from this analysis.

This reduces # of strips from 18,000 to 15,750 .

| cut# | cut code | description | # of discarded strips | figure |

| 1 | 1 | at least 10,000 entries in the MPV bin | 4 | - |

| 2 | 2 | MPV position within +/-5 ADC channels | 57 | Fig 1 |

| 3 | 4 | sig(ped) of gauss fit in [1.6,8] ADC ch | 335 | Fig 2 |

| 4 | 8 | position of mean gauss within +/- 4 ADC | 11 | Fig 3 |

| 5 | 16 | yield from [ped+40,ped+90] out of range | 441 | Fig 4 |

| 6 | 32 | chi2/dof from slop fit in [0.6,2.5] | 62 | Fig 5 |

| 7 | 64 | slopeError/slop >16% | 4 | Fig 6 |

| 8 | 128 | slop within [-0.015, -0.05] | 23 | Fig 7 |

| - | sum | out of processed 15,750 strips discarded | 937 ==> 5.9% |

Fig 1 Example of strips failing QA cut #2, MPV position out of range , random strip selection

Fig 2a Distribution of width of pedestal vs. eta-bin

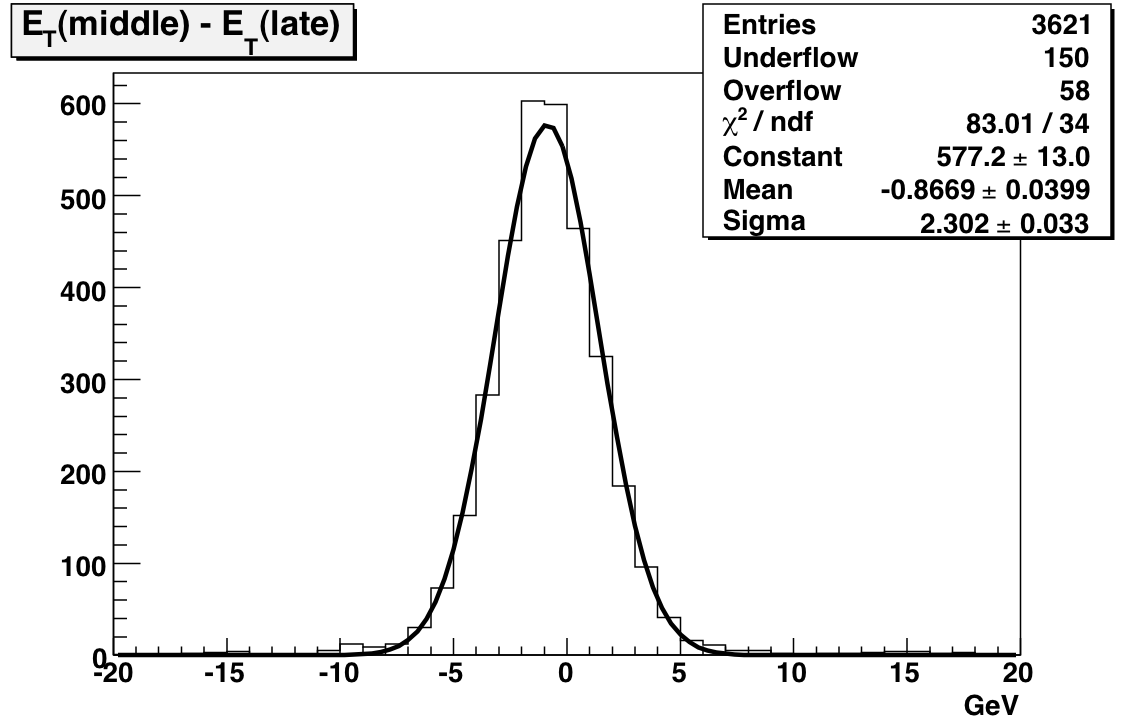

Fig 2b Example of strips failing QA cut #3, width of pedestal out of range , random strip selection